From: Lynn Wheeler <lynn@garlic.com> Subject: 3270 Date: 07 Aug, 2023 Blog: Facebook

When the 3274/3278 (controller & terminal) came out .... it was much worse than the 3272/3277, and a letter was written to the 3278 product admin complaining. He eventually responded that the 3278 wasn't targeted for interactive computing ... but for "data entry" (aka electronic keypunch). This was during the period of studies of interactive computing showing that quarter-second response improved productivity. The 3272/3277 had .086sec hardware response .... which with .164sec system response ... gives quarter second. For the 3278, they moved a lot of electronics back into the 3274 (reducing 3278 manufacturing cost) ... also driving up the coax protocol chatter ... and hardware response was .3-.5sec (depending on data stream and coax chatter) ... precluding quarter-second response. Trivia: MVS/TSO would never notice since it was rare for MVS/TSO to have even one-second system response (my internally distributed systems tended to .11sec system interactive response).
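As a rough check on the response arithmetic above, here is a minimal sketch that treats end-to-end response as simply hardware response plus system response (an assumption; real response also depends on the data stream). It works in integer milliseconds to avoid floating-point rounding, and the function names are illustrative only.

```python
# Quarter-second response budget, using the figures quoted in the post.
# Assumption: end-to-end response = hardware response + system response.
TARGET_MS = 250  # quarter second


def total_response_ms(hardware_ms: int, system_ms: int) -> int:
    """End-to-end response time in milliseconds."""
    return hardware_ms + system_ms


def system_budget_ms(hardware_ms: int) -> int:
    """Milliseconds left for the system before the quarter-second target is missed."""
    return TARGET_MS - hardware_ms


if __name__ == "__main__":
    # 3272/3277: .086sec hardware + .164sec system = .25sec
    print("3277 total:", total_response_ms(86, 164), "ms")
    # 3274/3278: .3-.5sec hardware alone already exceeds the target,
    # so even .11sec system response cannot bring it under 250ms.
    for hw in (300, 500):
        print(f"3278 hw={hw}ms budget={system_budget_ms(hw)}ms "
              f"total with 110ms system={total_response_ms(hw, 110)}ms")
```

A negative budget means no amount of system tuning can reach quarter-second response on that hardware.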

Later, with the IBM/PC, a 3277 emulation card had 3-4 times the upload/download throughput of a 3278 emulation card (because of the significant difference in protocol chatter latency & overhead).

The 3277 also had enough electronics that it was possible to wire a large Tektronix graphics screen into the side of the terminal ("3277GA") ... sort of an inexpensive 2250.

some recent posts mentioning 3272/3277 interactive computing

https://www.garlic.com/~lynn/2023c.html#42 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023b.html#4 IBM 370

https://www.garlic.com/~lynn/2023.html#2 big and little, Can BCD and binary multipliers share circuitry?

https://www.garlic.com/~lynn/2022h.html#96 IBM 3270

https://www.garlic.com/~lynn/2022c.html#68 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022b.html#123 System Response

https://www.garlic.com/~lynn/2022b.html#110 IBM 4341 & 3270

https://www.garlic.com/~lynn/2022b.html#33 IBM 3270 Terminals

https://www.garlic.com/~lynn/2022.html#94 VM/370 Interactive Response

https://www.garlic.com/~lynn/2021j.html#74 IBM 3278

https://www.garlic.com/~lynn/2021i.html#69 IBM MYTE

https://www.garlic.com/~lynn/2021c.html#92 IBM SNA/VTAM (& HSDT)

https://www.garlic.com/~lynn/2021c.html#0 Colours on screen (mainframe history question) [EXTERNAL]

https://www.garlic.com/~lynn/2021.html#84 3272/3277 interactive computing

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: DASD, Channel and I/O long winded trivia Date: 07 Aug, 2023 Blog: Facebook

re:

My wife did a stint in POK responsible for loosely-coupled architecture (she didn't remain long, in part because of repeated battles with the communication group trying to force her into using SNA/VTAM for loosely-coupled operation). She also failed to get some throughput improvements for trouter/3088.

peer-coupled shared data (loosely-coupled) architecture posts

https://www.garlic.com/~lynn/submain.html#shareddata

trivia: SJR had 4341 cluster support (up to eight machines) using (non-SNA) 3088 ... with cluster coordination operations taking sub-second elapsed time. To release it, the communication group said that it had to be done using VTAM/SNA ... that implementation resulted in cluster coordination operations increasing to upwards of 30sec elapsed time.

posts mentioning communication group fiercely fighting off client/server and distributed computing (in part trying to preserve their dumb terminal paradigm)

https://www.garlic.com/~lynn/subnetwork.html#terminal

some past posts mentioning trouter/3088:

https://www.garlic.com/~lynn/2015e.html#47 GRS Control Unit ( Was IBM mainframe operations in the 80s)

https://www.garlic.com/~lynn/2006j.html#31 virtual memory

https://www.garlic.com/~lynn/2000f.html#37 OT?

https://www.garlic.com/~lynn/2000f.html#30 OT?

--

From: Lynn Wheeler <lynn@garlic.com> Subject: DASD, Channel and I/O long winded trivia Date: 08 Aug, 2023 Blog: Facebook

re:

z196 had a "Peak I/O" benchmark getting 2m IOPS using 104 FICON (running over 104 FCS) ... about the same time, FCS was announced for the E5-2600 server blade (commonly used in cloud megadatacenters that have half a million or more E5-2600 blades) claiming over a million IOPS (two such FCS having higher throughput than 104 FICON).
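The per-channel rates implied by those numbers can be worked out directly. This sketch treats "over a million IOPS" as a lower bound of exactly one million (an assumption), so the comparison with two FCS is conservative.

```python
# Per-channel I/O rates implied by the z196 "Peak I/O" figures quoted above.
Z196_PEAK_IOPS = 2_000_000   # 2m IOPS
FICON_CHANNELS = 104         # 104 FICON (over 104 FCS)
FCS_BLADE_IOPS = 1_000_000   # "over a million IOPS", used here as a lower bound

per_ficon_iops = Z196_PEAK_IOPS / FICON_CHANNELS   # about 19,231 IOPS per FICON
ficon_per_fcs = FCS_BLADE_IOPS / per_ficon_iops     # one FCS ~ 52 FICON channels

print(f"IOPS per FICON channel: {per_ficon_iops:,.0f}")
print(f"FICON channels matched by one FCS: {ficon_per_fcs:.0f}")
# Two FCS at (at least) a million IOPS each match or exceed all 104 FICON.
print("two FCS >= 104 FICON:", 2 * FCS_BLADE_IOPS >= Z196_PEAK_IOPS)
```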

At the time, a max configured z196 was $30M and 50BIPS (industry benchmark: number of program iterations compared to a 370/158-3, assumed to be one MIPS) or $600,000/BIPS. By comparison, IBM had a base list price of $1815 for an E5-2600 blade, and the (same) benchmark was 500BIPS (ten times a max configured z196) or $3.63/BIPS.

Note that large cloud megadatacenters have been claiming for a couple decades that they assemble their own blade servers for 1/3rd the cost of brand name servers, or $1.21/BIPS. Then, about the same time as press reports that server chip and component vendors were shipping at least half their product directly to cloud megadatacenters, IBM sells off its "server" business.
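The price/performance figures in the two paragraphs above follow from a simple division; this sketch just reproduces them, using the prices and BIPS ratings as quoted and taking the self-assembled cost as one third of the brand-name blade.

```python
# Dollars per BIPS, using the list prices and benchmark ratings quoted above.
def dollars_per_bips(price_usd: float, bips: float) -> float:
    return price_usd / bips


z196 = dollars_per_bips(30_000_000, 50)   # max configured z196: $30M, 50BIPS
blade = dollars_per_bips(1815, 500)       # E5-2600 blade: $1815, 500BIPS
self_built = blade / 3                    # megadatacenter-assembled: 1/3rd the cost

print(f"z196:       ${z196:,.0f}/BIPS")
print(f"E5-2600:    ${blade:.2f}/BIPS")
print(f"self-built: ${self_built:.2f}/BIPS")
print(f"z196 costs ~{z196 / blade:,.0f}x more per BIPS than a list-price blade")
```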

A large cloud operation will have at least a dozen (or scores of) megadatacenters around the world, each with at least half a million blade servers (millions of processor cores) ... a megadatacenter is something like 250 million BIPS, or the equivalent of five million max configured z196 systems.
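The megadatacenter comparison is the same kind of arithmetic: half a million blades at 500BIPS each, divided by the 50BIPS of a max configured z196. The blade count is the "at least" figure from the post, so the result is a floor rather than an estimate for any particular operator.

```python
# Aggregate capacity of one megadatacenter, in BIPS and in z196 equivalents.
BLADES = 500_000          # "at least half a million blade servers"
BIPS_PER_BLADE = 500      # E5-2600 blade rating quoted above
Z196_MAX_BIPS = 50        # max configured z196

datacenter_bips = BLADES * BIPS_PER_BLADE            # 250,000,000 BIPS
z196_equivalents = datacenter_bips // Z196_MAX_BIPS  # 5,000,000 systems

print(f"{datacenter_bips:,} BIPS ~= {z196_equivalents:,} max configured z196")
```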

FICON

https://en.wikipedia.org/wiki/FICON

Fibre Channel

https://en.wikipedia.org/wiki/Fibre_Channel

other Fibre Channel:

Fibre Channel Protocol

https://en.wikipedia.org/wiki/Fibre_Channel_Protocol

Fibre Channel switch

https://en.wikipedia.org/wiki/Fibre_Channel_switch

Fibre Channel electrical interface

https://en.wikipedia.org/wiki/Fibre_Channel_electrical_interface

Fibre Channel over Ethernet

https://en.wikipedia.org/wiki/Fibre_Channel_over_Ethernet

fcs &/or ficon posts

https://www.garlic.com/~lynn/submisc.html#ficon

megadatacenter posts

https://www.garlic.com/~lynn/submisc.html#megadatacenter

--

From: Lynn Wheeler <lynn@garlic.com> Subject: DASD, Channel and I/O long winded trivia Date: 08 Aug, 2023 Blog: Facebook

re: re:

... repost .... I had also written a test for channel/controller speed. VM370 had a 3330/3350/2305 page format that had a "dummy short block" between 4k page blocks. The standard channel program had a seek followed by search record, read/write 4k ... possibly chained to search, read/write 4k (trying to maximize the number of 4k transfers in a single rotation). For records on the same cylinder but a different track, in the same rotation, a seek to the other track had to be added. The channel and/or controller time to process the embedded seek could exceed the rotation time for the dummy block ... causing an additional revolution. The test would format a cylinder with the maximum possible dummy block size (between page records) and then start reducing it toward the minimum 50-byte size ... checking to see if an additional rotation was required. The 158 (also the 303x channel director and 3081) had the slowest channel program processing. I also got several customers with non-IBM processors, channels, and disk controllers to run the tests ... so we had combinations of IBM and non-IBM 370s with IBM and non-IBM disk controllers.
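The test's logic can be sketched as follows. For each candidate dummy-block size, the time that block takes to pass under the heads is the window the channel and controller have to process the embedded seek; if processing takes longer, the next 4k record is missed and a full extra revolution is lost. The transfer rate is an assumed 3330 figure (about 806KB/sec), inter-record gaps and count fields are ignored unless passed in, and the processing times are hypothetical parameters, not measured values for any real channel.

```python
# Sketch of the "dummy short block" test: shrink the dummy block between
# 4k page records and find the smallest size that still avoids an extra
# revolution. Assumptions: 3330-like transfer rate, simplified gap model.
BYTES_PER_SEC = 806_000   # assumed 3330 data rate
MIN_DUMMY = 50            # minimum dummy block size from the post


def dummy_block_us(size_bytes: int, gap_bytes: int = 0) -> float:
    """Microseconds for the dummy block (plus any gap overhead) to pass the heads."""
    return (size_bytes + gap_bytes) * 1_000_000 / BYTES_PER_SEC


def extra_rotation(size_bytes: int, processing_us: float, gap_bytes: int = 0) -> bool:
    """True if channel/controller processing outlasts the dummy block's window."""
    return processing_us > dummy_block_us(size_bytes, gap_bytes)


def smallest_safe_size(processing_us: float, max_size: int,
                       min_size: int = MIN_DUMMY, gap_bytes: int = 0):
    """Start at max_size and shrink toward min_size, as the test did.

    Returns the smallest size that avoids an extra revolution, or None
    if even max_size is too small for this processing time.
    """
    smallest = None
    for size in range(max_size, min_size - 1, -1):
        if extra_rotation(size, processing_us, gap_bytes):
            break
        smallest = size
    return smallest


if __name__ == "__main__":
    # Hypothetical processing times for a fast, a medium, and a slow channel.
    for proc_us in (50, 100, 300):
        print(proc_us, "us ->", smallest_safe_size(proc_us, max_size=300))
```

Running the same sweep against different processor/channel/controller combinations is what exposes the slow ones: the larger the smallest safe size, the slower the channel program processing.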

posts mentioning getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

some past posts mentioning (disk) "dummy blocks"

https://www.garlic.com/~lynn/2023d.html#19 IBM 3880 Disk Controller

https://www.garlic.com/~lynn/2023.html#38 Disk optimization

https://www.garlic.com/~lynn/2011e.html#75 I'd forgotten what a 2305 looked like

https://www.garlic.com/~lynn/2010m.html#15 History of Hard-coded Offsets

https://www.garlic.com/~lynn/2010.html#49 locate mode, was Happy DEC-10 Day

https://www.garlic.com/~lynn/2006t.html#19 old vm370 mitre benchmark

https://www.garlic.com/~lynn/2006r.html#40 REAL memory column in SDSF

https://www.garlic.com/~lynn/2002b.html#17 index searching

https://www.garlic.com/~lynn/2000d.html#7 4341 was "Is a VAX a mainframe?"

--

From: Lynn Wheeler <lynn@garlic.com> Subject: HASP, JES, MVT, 370 Virtual Memory, VS2 Date: 08 Aug, 2023 Blog: Facebook

... in another life, my wife was in the gburg JES group (reporting to crabby) until she was con'ed into going to POK to be responsible for loosely-coupled architecture (and doing peer-coupled shared data architecture).

I periodically repost that, about a decade ago, I was asked to track down the decision to make all 370s virtual memory .... basically MVT storage management was so bad that regions had to be specified four times larger than used ... as a result a typical 1mbyte 370/165 would only run four regions concurrently ... insufficient to keep the system busy and justified ... mapping MVT to 16mbyte virtual memory (for VS2/SVS) would allow the number of concurrent regions to be increased by a factor of four (with little or no paging) ... quite similar to running MVT in a CP67 16mbyte virtual machine. Pieces of the email exchange (in archived post)

https://www.garlic.com/~lynn/2011d.html#73
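The factor-of-four argument can be made concrete with a toy model. It assumes, for illustration only, that the 1mbyte of real storage is split evenly across regions, ignores the MVT nucleus and other fixed storage, and assumes that under virtual memory only the storage a region actually touches needs real page frames.

```python
# Toy model of why mapping MVT into a 16mbyte virtual address space
# raised concurrent regions by about four times on a 1mbyte 370/165.
# Assumptions: even split of real storage, no nucleus, no paging overhead.
REAL_KB = 1024            # typical 1mbyte 370/165
VIRTUAL_KB = 16 * 1024    # 16mbyte virtual memory (VS2/SVS)
OVERSPEC = 4              # regions specified four times larger than used
REAL_REGIONS = 4          # regions that fit in real storage under MVT

specified_kb = REAL_KB // REAL_REGIONS        # 256KB per region as specified
used_kb = specified_kb // OVERSPEC            # 64KB actually touched
virtual_regions = REAL_KB // used_kb          # regions whose touched pages fit in real
factor = virtual_regions // REAL_REGIONS

# The specified (virtual) region sizes must still fit in the 16mbyte address space.
fits = virtual_regions * specified_kb <= VIRTUAL_KB
print(f"{virtual_regions} regions, {factor}x increase, fits in 16mbyte: {fits}")
```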

... the post also mentions that at the univ, I crafted terminal support and an editor (with CMS edit syntax) into HASP to get a CRJE that I thought was better than IBM CRJE and TSO.

I would drop by Ludlow, who was doing the SVS prototype on a 360/67, offshift. The biggest piece of code was EXCP ... almost exactly the same as CP67 ... in fact Ludlow borrowed CCWTRANS from CP67 and crafted it into EXCP (aka applications called EXCP with channel programs ... which now had virtual addresses ... EXCP had to make a copy of the channel programs, substituting real addresses for virtual).
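The copy-and-translate step can be sketched in a few lines. The CCW record here is a simplified, hypothetical structure (command, address, count, data-chain flag), the page table is a plain dict from virtual to real page number, and 4k pages are assumed. Because contiguous virtual pages need not be contiguous in real storage, this sketch splits a buffer that crosses a page boundary into data-chained pieces; that is one way to handle it, not a description of CCWTRANS internals. A real implementation also has to keep the pages fixed in real storage until the I/O completes, which is omitted here.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed 4k pages


@dataclass(frozen=True)
class CCW:
    """Simplified, hypothetical channel command word."""
    command: int
    addr: int
    count: int
    chain_data: bool = False


def virt_to_real(vaddr: int, page_table: dict) -> int:
    """Translate a virtual address using a {virtual page: real page} table."""
    vpage, offset = divmod(vaddr, PAGE_SIZE)
    if vpage not in page_table:
        raise LookupError(f"virtual page {vpage} not resident")
    return page_table[vpage] * PAGE_SIZE + offset


def translate_channel_program(program: list, page_table: dict) -> list:
    """Return a shadow copy of the channel program with real addresses.

    The caller's (virtual) channel program is left untouched; buffers that
    cross a page boundary are split into data-chained pieces.
    """
    shadow = []
    for ccw in program:
        if ccw.count <= 0:
            raise ValueError("CCW byte count must be positive")
        pieces = []
        addr, remaining = ccw.addr, ccw.count
        while remaining > 0:
            chunk = min(remaining, PAGE_SIZE - addr % PAGE_SIZE)
            pieces.append((virt_to_real(addr, page_table), chunk))
            addr += chunk
            remaining -= chunk
        for i, (real_addr, chunk) in enumerate(pieces):
            last = i == len(pieces) - 1
            # Every piece except the last chains to the next; the last keeps
            # whatever chaining the original CCW had.
            shadow.append(CCW(ccw.command, real_addr, chunk,
                              chain_data=ccw.chain_data or not last))
    return shadow
```

For example, with page table `{0: 7, 1: 3}`, a 200-byte read at virtual address 4000 becomes two CCWs: 96 bytes at real address 32672 (data-chained) and 104 bytes at real address 12288.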

HASP, ASP, JES, NJE/NJI, etc posts

https://www.garlic.com/~lynn/submain.html#hasp

loosely-coupled and peer-coupled shared data architecture

https://www.garlic.com/~lynn/submain.html#shareddata

some recent posts referencing 370 virtual memory

https://www.garlic.com/~lynn/2023d.html#113 VM370

https://www.garlic.com/~lynn/2023d.html#98 IBM DASD, Virtual Memory

https://www.garlic.com/~lynn/2023d.html#90 IBM 3083

https://www.garlic.com/~lynn/2023d.html#71 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#24 VM370, SMP, HONE

https://www.garlic.com/~lynn/2023d.html#20 IBM 360/195

https://www.garlic.com/~lynn/2023d.html#17 IBM MVS RAS

https://www.garlic.com/~lynn/2023d.html#9 IBM MVS RAS

https://www.garlic.com/~lynn/2023d.html#0 Some 3033 (and other) Trivia

https://www.garlic.com/~lynn/2023c.html#79 IBM TLA

https://www.garlic.com/~lynn/2023c.html#25 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#103 2023 IBM Poughkeepsie, NY

https://www.garlic.com/~lynn/2023b.html#44 IBM 370

https://www.garlic.com/~lynn/2023b.html#41 Sunset IBM JES3

https://www.garlic.com/~lynn/2023b.html#24 IBM HASP (& 2780 terminal)

https://www.garlic.com/~lynn/2023b.html#15 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023b.html#6 z/VM 50th - part 7

https://www.garlic.com/~lynn/2023b.html#0 IBM 370

https://www.garlic.com/~lynn/2023.html#76 IBM 4341

https://www.garlic.com/~lynn/2023.html#65 7090/7044 Direct Couple

https://www.garlic.com/~lynn/2023.html#50 370 Virtual Memory Decision

https://www.garlic.com/~lynn/2023.html#34 IBM Punch Cards

https://www.garlic.com/~lynn/2023.html#4 Mainrame Channel Redrive

--

From: Lynn Wheeler <lynn@garlic.com> Subject: Boyd and OODA-loop Date: 09 Aug, 2023 Blog: Linkedin

Boyd and OODA-loop

... in briefings Boyd would talk about constantly observing from every possible facet (as countermeasures to numerous kinds of biases) ... also referring to observation, orientation, decision, and action as constantly occurring asynchronous operations (not strictly serialized operations).

... when I was first introduced to Boyd, I felt a natural affinity from the way I programmed computers. There is an anecdote from after the turn of the century about (Microsoft) Gates complaining to Intel about the transition to multi-core chips (from increasingly faster single core) because it was too hard to write programs where multiple things went on independently in asynchronously operating cores.

Boyd posts and WEB URLs

https://www.garlic.com/~lynn/subboyd.html

SMP, tightly-coupled, shared memory multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

(loosely-coupled/cluster) peer-coupled shared data architecture posts

https://www.garlic.com/~lynn/submain.html#shareddata

(loosely-coupled/cluster-scaleup) HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

posts mentioning the Gates/Intel anecdote

https://www.garlic.com/~lynn/2023d.html#49 Computer Speed Gains Erased By Modern Software

https://www.garlic.com/~lynn/2022h.html#55 More John Boyd and OODA-loop

https://www.garlic.com/~lynn/2022h.html#51 Sun Tzu, Aristotle, and John Boyd

https://www.garlic.com/~lynn/2018d.html#57 tablets and desktops was Has Microsoft

https://www.garlic.com/~lynn/2017e.html#52 [CM] What was your first home computer?

https://www.garlic.com/~lynn/2016c.html#60 Which Books Can You Recommend For Learning Computer Programming?

https://www.garlic.com/~lynn/2016c.html#56 Which Books Can You Recommend For Learning Computer Programming?

https://www.garlic.com/~lynn/2014m.html#118 By the time we get to 'O' in OODA

https://www.garlic.com/~lynn/2014d.html#85 Parallel programming may not be so daunting

https://www.garlic.com/~lynn/2013.html#48 New HD

https://www.garlic.com/~lynn/2012j.html#44 Monopoly/ Cartons of Punch Cards

https://www.garlic.com/~lynn/2012e.html#15 Why do people say "the soda loop is often depicted as a simple loop"?

https://www.garlic.com/~lynn/2008f.html#42 Panic in Multicore Land

https://www.garlic.com/~lynn/2007m.html#2 John W. Backus, 82, Fortran developer, dies

https://www.garlic.com/~lynn/2007i.html#78 John W. Backus, 82, Fortran developer, dies

--

From: Lynn Wheeler <lynn@garlic.com> Subject: HASP, JES, MVT, 370 Virtual Memory, VS2 Date: 09 Aug, 2023 Blog: Facebook

re:

The 23Jun1969 unbundling announcement included starting to charge for (application) software (IBM made the case that kernel software was still free). Then in the early/mid-70s, there was the Future System program, completely different and intended to completely replace 370s (internal politics was killing off 370 efforts, and the lack of new 370 stuff is credited with giving clone 370 makers their market foothold). When FS finally implodes there is a mad rush to get stuff back into the 370 product pipelines, including kicking off quick&dirty 303x&3081 efforts in parallel. Some more FS details

http://www.jfsowa.com/computer/memo125.htm

The rise of clone 370 makers also contributes to changing the decision about not charging for kernel software: first incremental/new features are charged for, transitioning to all kernel software being charged for by the early 80s. Then the OCO-wars (object code only) start.

Note TYMSHARE

https://en.wikipedia.org/wiki/Tymshare

had started offering their CMS-based online computer conferencing free to SHARE

https://en.wikipedia.org/wiki/SHARE_(computing)

in Aug1976, archives here

http://vm.marist.edu/~vmshare

where some of the OCO-war discussions can be found. Lots of this was also discussed at the monthly user group "BAYBUNCH" meetings hosted by (Stanford) SLAC.

unbundling posts

https://www.garlic.com/~lynn/submain.html#unbundle

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

posts mentioning vmshare & OCO-wars

https://www.garlic.com/~lynn/2023c.html#55 IBM VM/370

https://www.garlic.com/~lynn/2023.html#96 Mainframe Assembler

https://www.garlic.com/~lynn/2023.html#68 IBM and OSS

https://www.garlic.com/~lynn/2022e.html#7 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022b.html#118 IBM Disks

https://www.garlic.com/~lynn/2022b.html#30 Online at home

https://www.garlic.com/~lynn/2022.html#36 Error Handling

https://www.garlic.com/~lynn/2021k.html#104 DUMPRX

https://www.garlic.com/~lynn/2021k.html#50 VM/SP crashing all over the place

https://www.garlic.com/~lynn/2021g.html#27 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021c.html#5 Z/VM

https://www.garlic.com/~lynn/2021.html#14 Unbundling and Kernel Software

https://www.garlic.com/~lynn/2018d.html#48 IPCS, DUMPRX, 3092, EREP

https://www.garlic.com/~lynn/2017g.html#23 Eliminating the systems programmer was Re: IBM cuts contractor billing by 15 percent (our else)

https://www.garlic.com/~lynn/2017.html#59 The ICL 2900

https://www.garlic.com/~lynn/2016g.html#68 "I used a real computer at home...and so will you" (Popular Science May 1967)

https://www.garlic.com/~lynn/2015d.html#59 Western Union envisioned internet functionality

https://www.garlic.com/~lynn/2014m.html#35 BBC News - Microsoft fixes '19-year-old' bug with emergency patch

https://www.garlic.com/~lynn/2014.html#19 the suckage of MS-DOS, was Re: 'Free Unix!

https://www.garlic.com/~lynn/2013o.html#45 the nonsuckage of source, was MS-DOS, was Re: 'Free Unix!

https://www.garlic.com/~lynn/2013m.html#55 'Free Unix!': The world-changing proclamation made 30 years ago today

https://www.garlic.com/~lynn/2013l.html#66 model numbers; was re: World's worst programming environment?

https://www.garlic.com/~lynn/2012j.html#31 How smart do you need to be to be really good with Assembler?

https://www.garlic.com/~lynn/2012j.html#30 Can anybody give me a clear idea about Cloud Computing in MAINFRAME ?

https://www.garlic.com/~lynn/2012j.html#20 Operating System, what is it?

https://www.garlic.com/~lynn/2011o.html#33 Data Areas?

https://www.garlic.com/~lynn/2007u.html#8 Open z/Architecture or Not

https://www.garlic.com/~lynn/2007u.html#6 Open z/Architecture or Not

https://www.garlic.com/~lynn/2007k.html#15 Data Areas Manuals to be dropped

https://www.garlic.com/~lynn/2007f.html#67 The Perfect Computer - 36 bits?

--

From: Lynn Wheeler <lynn@garlic.com> Subject: HASP, JES, MVT, 370 Virtual Memory, VS2 Date: 09 Aug, 2023 Blog: Facebook

re:

I took a two credit hr intro to fortran/computers and at the end of the semester, was hired to redo 1401 MPIO for the 360/30. The univ had been sold a 360/67 for tss/360 to replace the 709/1401. Temporarily, pending the 360/67, the 1401 was replaced with a 360/30 ... which had 1401 emulation and could continue to run 1401 MPIO ... I guess I was part of getting 360 experience. The univ. shut down the datacenter on weekends and they let me have the whole place dedicated (48hrs w/o sleep, making monday classes a little hard). They gave me a bunch of hardware and software manuals and I got to design and implement my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc ... and within a few weeks I had a 2000-card assembler program (an assembler option generated either 1) stand alone with BPS loader or 2) under OS/360 with system services macros).

I quickly learned, when I came in sat. morning, to first disassemble and clean the 2540 reader/punch, clean the tape drives, and clean the printer. Sometimes when I came in sat. morning, production had finished early and everything was powered off. Sometimes the 360/30 wouldn't complete power-on and with lots of trial and error ... I learned to put all the control units into CE mode, power on the 360/30, individually power on the control units, then return the control units to normal. Within a year of taking the intro class, the 360/67 had arrived and I was hired fulltime, responsible for os/360 (tss/360 never came to production, so it ran as a 360/65 with os/360).

Student fortran jobs took under a second on the 709 (IBSYS tape->tape); initially on the 360/65, they ran more than a minute. I install HASP and the time is cut in half. I start modifying SYSGEN STAGE2 so I could run it in the production job stream ... then reorder things to place datasets and PDS members to optimize DASD seeks and multi-track searches ... cutting time another 2/3rds to 12.9sec. Sometimes heavy PTF activity would start affecting the careful placement and, as elapsed time crept towards 20secs, I would have to redo SYSGEN (to restore placement). Student jobs never got better than the 709 until I install Univ. of Waterloo WATFOR.

Mid-70s, I would pontificate that CKD was a 60s technology trade-off, offloading functions (like multi-track search) to abundant I/O resources because of scarce memory resources ... but that by the mid-70s that trade-off was starting to flip, and by the early 80s I was also pontificating that between the late 60s and early 80s, relative system DASD throughput had declined by an order of magnitude (i.e. disk got 3-5 times faster while system processing got 40-50 times faster). Some disk (GPD) division executive took exception and assigned the division performance group to refute my claims. However, after a few weeks they came back and said that I had understated the situation. They eventually respun this into a SHARE presentation about optimizing DASD configuration for improving system throughput, 16Aug1984, SHARE 63, B874.
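The order-of-magnitude claim is just the ratio of the two speedups. Taking the quoted ranges at face value, the relative decline falls between the two bounds computed below, which bracket the roughly 10x figure.

```python
# Relative decline in DASD throughput vs processor speed, late 60s to early 80s.
def relative_decline(cpu_speedup: float, disk_speedup: float) -> float:
    """How much slower disk became relative to the processor."""
    return cpu_speedup / disk_speedup


low = relative_decline(40, 5)    # slowest CPU gain, fastest disk gain -> 8x
high = relative_decline(50, 3)   # fastest CPU gain, slowest disk gain -> ~16.7x
print(f"relative DASD throughput declined by {low:.1f}x to {high:.1f}x")
```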

This also showed up in some competition between IMS and System/R (original SQL/relational implementation), when I was working with Jim Gray and Vera Watson. IMS claimed that System/R doubled disk space requirements and significantly increased I/O (for indexes). The counter was that IMS required significant human resources to maintain data structures. However, by the early 80s: 1) disk space price was dropping significantly, 2) real memory had significantly increased, allowing index caching and reducing physical I/O, and 3) IMS required more admin resources, which were getting scarcer and more expensive. trivia: we managed to do tech transfer ("under the radar" while the company was preoccupied with the next new DBMS, "EAGLE") to Endicott for SQL/DS. Then when EAGLE implodes, there was a request for how fast System/R could be migrated to MVS ... which is eventually announced as DB2 (originally for decision support *only*).

system/r posts

https://www.garlic.com/~lynn/submain.html#systemr

A recent observation is that (cache miss) latency to memory, when measured in count of processor cycles, is comparable to 60s latency for disk I/O when measured in count of 60s processor cycles (real memory is the new disk).

trivia: late 70s, I was brought into a national grocery chain datacenter that had a large VS2 loosely-coupled multi-system operation ... that was experiencing severe throughput problems (most of the usual IBM experts had already been brought through). They had a classroom with tables completely covered with stacks of activity monitoring paper (from all the systems).

After about 30 mins, I started to notice that, manually summing the throughput of a specific (shared) DASD across all the systems, activity was peaking at 7/sec. I asked what it was. They said it was the 3330 that had a large PDS library (3cyl PDS directory) of all the store & controller applications. A little manual calculation showed that the avg PDS member lookup was taking two multi-track searches, one for 19/60sec (.317) and one for 9.5/60sec (.158), or .475sec, plus the seek/load of the actual member. Basically, it was limited to an aggregate of slightly less than two application loads/sec for all systems and for all stores in the country.
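The lookup arithmetic above can be reproduced assuming the 3330's 3600 RPM (60 revolutions/sec) and 19 tracks per cylinder, with each track searched costing one full revolution. On average the member is halfway through the 3-cylinder (57-track) directory, which gives the same .475sec as the two searches described. The last line shows, as an illustration, what a 1-cylinder directory would cost under the same assumptions.

```python
# Average PDS directory lookup time on a 3330 using multi-track search.
REVS_PER_SEC = 3600 / 60   # 3600 RPM -> 60 revolutions/sec
TRACKS_PER_CYL = 19        # 3330 tracks per cylinder


def search_seconds(tracks: float) -> float:
    """Multi-track search time: one revolution per track searched."""
    return tracks / REVS_PER_SEC


def avg_lookup_seconds(directory_cyls: float) -> float:
    """Average directory search, assuming the member is halfway through."""
    return search_seconds(directory_cyls * TRACKS_PER_CYL / 2)


if __name__ == "__main__":
    full_cyl = search_seconds(19)    # ~.317sec
    half_cyl = search_seconds(9.5)   # ~.158sec
    avg = avg_lookup_seconds(3)      # .475sec
    print(f"{full_cyl:.3f} + {half_cyl:.3f} = {avg:.3f} sec")
    # Upper bound on lookups/sec for the whole complex (member load not included).
    print(f"max lookups/sec: {1 / avg:.2f}")
    print(f"1-cylinder directory: {avg_lookup_seconds(1):.3f} sec")
```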

Note the multi-track searches not only locked out all other activity to the drive ... but also made the controller busy, locking out activity to all other drives on the same controller (for all systems in the complex). The fix was to split the PDS into smaller pieces across multiple drives and create a duplicated set of non-shared private drives for each system.

DASD, CKD, FBA, multi-track search, etc

https://www.garlic.com/~lynn/submain.html#dasd

some recent posts mentioning 709, 1401 mpio, student fortran 12.9sec, watfor

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#79 IBM System/360 JCL

https://www.garlic.com/~lynn/2023d.html#69 Fortran, IBM 1130

https://www.garlic.com/~lynn/2023d.html#64 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#14 Rent/Leased IBM 360

https://www.garlic.com/~lynn/2023c.html#96 Fortran

https://www.garlic.com/~lynn/2023c.html#82 Dataprocessing Career

https://www.garlic.com/~lynn/2023c.html#73 Dataprocessing 48hr shift

https://www.garlic.com/~lynn/2023b.html#91 360 Announce Stories

https://www.garlic.com/~lynn/2023b.html#75 IBM Mainframe

https://www.garlic.com/~lynn/2023b.html#15 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023.html#65 7090/7044 Direct Couple

https://www.garlic.com/~lynn/2023.html#22 IBM Punch Cards

some recent posts mentioning share 63, b874

https://www.garlic.com/~lynn/2023b.html#26 DISK Performance and Reliability

https://www.garlic.com/~lynn/2023b.html#16 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023.html#33 IBM Punch Cards

https://www.garlic.com/~lynn/2023.html#6 Mainrame Channel Redrive

https://www.garlic.com/~lynn/2022g.html#84 RS/6000 (and some mainframe)

https://www.garlic.com/~lynn/2022f.html#0 Mainframe Channel I/O

https://www.garlic.com/~lynn/2022e.html#49 Channel Program I/O Processing Efficiency

https://www.garlic.com/~lynn/2022d.html#48 360&370 I/O Channels

https://www.garlic.com/~lynn/2022d.html#22 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022b.html#77 Channel I/O

https://www.garlic.com/~lynn/2022.html#92 Processor, DASD, VTAM & TCP/IP performance

https://www.garlic.com/~lynn/2022.html#70 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2021k.html#131 Multitrack Search Performance

https://www.garlic.com/~lynn/2021k.html#108 IBM Disks

https://www.garlic.com/~lynn/2021j.html#105 IBM CKD DASD and multi-track search

https://www.garlic.com/~lynn/2021j.html#78 IBM 370 and Future System

https://www.garlic.com/~lynn/2021g.html#44 iBM System/3 FORTRAN for engineering/science work?

https://www.garlic.com/~lynn/2021f.html#53 3380 disk capacity

https://www.garlic.com/~lynn/2021e.html#33 Univac 90/30 DIAG instruction

https://www.garlic.com/~lynn/2021.html#79 IBM Disk Division

https://www.garlic.com/~lynn/2021.html#59 San Jose bldg 50 and 3380 manufacturing

https://www.garlic.com/~lynn/2021.html#17 Performance History, 5-10Oct1986, SEAS

--

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Storage photo album Date: 10 Aug, 2023 Blog: Facebook

re:

I seem to remember the storage division (GPD/Adstar) web pages had a lot more ... but that was before IBM got rid of it.

Note: Late 80s, a senior disk engineer got a talk scheduled at an annual, internal, world-wide communication group conference, supposedly on 3174 performance ... but opened the talk with the statement that the communication group was going to be responsible for the demise of the disk division. The disk division was seeing a drop in disk sales with data fleeing mainframe datacenters to more distributed-computing-friendly platforms. They had come up with a number of solutions that would address the problem, but they were constantly being vetoed by the communication group. The issue was that the communication group had a stranglehold on mainframes with their corporate strategic ownership of everything that crossed the datacenter walls ... and were fiercely fighting off client/server and distributed computing. One of the Adstar executives had a partial work-around: investing in distributed computing startups that would use IBM disks (he would periodically ask us to drop by his investments to see if we could offer any help).

Just a couple years later, IBM has one of the largest losses in the history of US companies and was being reorg'ed into the 13 "baby blues" in preparation for breaking up the company ... old references:

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

may also work

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

we had already left IBM, but we get a call from the bowels of Armonk (corp hdqtrs) asking if we could help with the breakup of the company. Lots of business units were using supplier contracts in other units via MOUs. After the breakup, many of these contracts would be in different companies ... all of those MOUs would have to be cataloged and turned into their own contracts. Before we get started, a new CEO is brought in and reverses the breakup ... but not long after, the storage division is gone anyway.

getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

communication group fighting to preserve dumb terminal paradigm

https://www.garlic.com/~lynn/subnetwork.html#terminal

gerstner posts

https://www.garlic.com/~lynn/submisc.html#gerstner

ibm downfall, breakup, controlling market posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

2001 A History of IBM "firsts" in storage technology (gone 404, but still lives on at the wayback machine; it doesn't have the images, but the associated text is there)

https://web.archive.org/web/20010809021812/www.storage.ibm.com/hdd/firsts/index.htm

2003 wayback redirected

https://web.archive.org/web/20030404234346/http://www.storage.ibm.com/hddredirect.html?/firsts/index.htm

IBM Storage Technology has merged with Hitachi Storage to become Hitachi Global Storage Technologies. To visit us and find out more http://www.hgst.com.

2004 & then no more

https://web.archive.org/web/20040130073217/http://www.storage.ibm.com:80/hddredirect.html?/firsts/index.htm

--

From: Lynn Wheeler <lynn@garlic.com> Subject: Tymshare Date: 10 Aug, 2023 Blog: Facebook

I would drop by TYMSHARE periodically and/or see them at the monthly BAYBUNCH meetings at SLAC. They had made their CMS-based online computer conferencing available to SHARE

https://en.wikipedia.org/wiki/SHARE_(computing)

for free starting in Aug1976 ... archives here

http://vm.marist.edu/~vmshare

I cut a deal with TYMSHARE to get a monthly tape dump of all VMSHARE files for putting up on the internal network and systems (the biggest problem was IBM lawyers being concerned that internal employees would be contaminated with information about customers).

On one visit they demo'ed the ADVENTURE game. Somebody had found it on the Stanford SAIL PDP10 system and ported it to the VM/CMS system. I got a copy and made it available internally (would send source to anybody that could show they got all the points). Shortly, versions with more points appeared, as well as a port to PLI.

https://en.wikipedia.org/wiki/Colossal_Cave_Adventure

trivia: they told a story about a TYMSHARE executive finding out people were playing games on their systems ... and directing that TYMSHARE was for business use and all games had to be removed. He quickly changed his mind when told that game playing had increased to 30% of TYMSHARE revenue.

Most IBM internal systems had "For Business Purposes Only" on the 3270 VM370 login screen; however, IBM San Jose Research had "For Management Approved Uses Only". It played a role when corporate audit said all games had to be removed and we refused.

website topic drift: (Stanford) SLAC (CERN sister institution) had the first website in the US (on a VM370 system)

https://www.slac.stanford.edu/history/earlyweb/history.shtml

https://www.slac.stanford.edu/history/earlyweb/firstpages.shtml

MD bought Tymshare ... as part of the buy-out, I was brought into Tymshare to evaluate GNOSIS as part of the spin-off to Key Logic ... also Tymshare asked me if I could find anybody in IBM that would make Engelbart an offer.

Ann Hardy at Computer History Museum

https://www.computerhistory.org/collections/catalog/102717167

Ann rose up to become Vice President of the Integrated Systems Division at Tymshare, from 1976 to 1984, which did online airline reservations, home banking, and other applications. When Tymshare was acquired by McDonnell-Douglas in 1984, Ann's position as a female VP became untenable, and was eased out of the company by being encouraged to spin out Gnosis, a secure, capabilities-based operating system developed at Tymshare. Ann founded Key Logic, with funding from Gene Amdahl, which produced KeyKOS, based on Gnosis, for IBM and Amdahl mainframes. After closing Key Logic, Ann became a consultant, leading to her cofounding Agorics with members of Ted Nelson's Xanadu project.

... snip ...

... also If Discrimination, Then Branch: Ann Hardy's Contributions to Computing

https://computerhistory.org/blog/if-discrimination-then-branch-ann-hardy-s-contributions-to-computing/

commercial, virtual machine-based timesharing posts

https://www.garlic.com/~lynn/submain.html#timeshare

some posts mentioning Tymshare, gnosis, & engelbart

https://www.garlic.com/~lynn/2018f.html#77 Douglas Engelbart, the forgotten hero of modern computing

https://www.garlic.com/~lynn/2015g.html#43 [Poll] Computing favorities

https://www.garlic.com/~lynn/2014d.html#44 [CM] Ten recollections about the early WWW and Internet

https://www.garlic.com/~lynn/2013d.html#55 Arthur C. Clarke Predicts the Internet, 1974

https://www.garlic.com/~lynn/2012i.html#40 GNOSIS & KeyKOS

https://www.garlic.com/~lynn/2012i.html#39 Just a quick link to a video by the National Research Council of Canada made in 1971 on computer technology for filmmaking

https://www.garlic.com/~lynn/2011c.html#2 Other early NSFNET backbone

https://www.garlic.com/~lynn/2011b.html#31 Colossal Cave Adventure in PL/I

https://www.garlic.com/~lynn/2010q.html#63 VMSHARE Archives

https://www.garlic.com/~lynn/2010d.html#84 Adventure - Or Colossal Cave Adventure

https://www.garlic.com/~lynn/2008s.html#3 New machine code

https://www.garlic.com/~lynn/2008g.html#23 Doug Engelbart's "Mother of All Demos"

https://www.garlic.com/~lynn/2005s.html#12 Flat Query

https://www.garlic.com/~lynn/2002g.html#4 markup vs wysiwyg (was: Re: learning how to use a computer)

https://www.garlic.com/~lynn/2000g.html#22 No more innovation? Get serious

https://www.garlic.com/~lynn/aadsm17.htm#31 Payment system and security conference

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: DASD, Channel and I/O long winded trivia Date: 13 Aug, 2023 Blog: Linkedin

As undergraduate in the 60s, univ hired me responsible for os/360 (univ. had been sold a 360/67 for tss/360 to replace 709/1401, but tss/360 never came to fruition so it ran as 360/65). Univ. shutdown datacenter on weekends and I had the place dedicated, although 48hrs w/o sleep made monday classes hard. Student fortran jobs ran under sec. on 709, but initially ran over minute on 360. I installed HASP, cutting time in half. I then redid SYSGEN so it could be run in job stream, also reorganizing statements to carefully place datasets and PDS members optimizing arm seek and multi-track search, cutting another 2/3rds to 12.9sec. Never got better than 709 until I installed (univ of waterloo) WATFOR.

Cambridge Science Center came out to install CP67 (3rd after CSC and MIT Lincoln Labs; CP67 was precursor to vm370) ... and I mostly got to play with it on weekends. Over a few months, I rewrote a lot of code ... OS/360 test ran 322secs, but in virtual machine ran 856secs ... CP67 CPU 534secs. I got that down to 113secs (reduction of 435secs). Then I did new page replacement algorithm and dynamic adaptive resource management (scheduling) for CMS interactive, improving throughput and number of users and cutting interactive response. CP67 original I/O was FIFO order and paging was single 4k transfer per I/O. I implemented ordered arm seek (increasing 2314 throughput) and for paging would chain multiple 4k to maximize transfers per revolution (for both 2314 disk and 2301 drum). 2301 drum was approx. 75 4k/sec; changes could get it up to nearly channel speed around 270/sec.
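Ordered arm seek can be illustrated with a minimal sketch, assuming the elevator (SCAN) style of ordering, one common form of ordered seek queuing. This is not the CP67 code; the function names, cylinder numbers and starting arm position are hypothetical. The sketch compares total arm movement when a queue is served in FIFO order with the same requests served in sweep order.

```python
from typing import List


def seek_distance(start: int, order: List[int]) -> int:
    """Total cylinders the arm travels serving requests in the given order."""
    distance = 0
    position = start
    for cylinder in order:
        distance += abs(cylinder - position)
        position = cylinder
    return distance


def elevator_order(start: int, queue: List[int], moving_up: bool = True) -> List[int]:
    """Serve every request ahead of the arm in its current direction, then reverse."""
    if moving_up:
        ahead = sorted(c for c in queue if c >= start)
        behind = sorted((c for c in queue if c < start), reverse=True)
    else:
        ahead = sorted((c for c in queue if c <= start), reverse=True)
        behind = sorted(c for c in queue if c > start)
    return ahead + behind


if __name__ == "__main__":
    queue = [90, 10, 60, 20, 80]  # hypothetical pending requests, in arrival order
    start = 50                    # hypothetical current arm position
    print("FIFO:", seek_distance(start, queue))                            # FIFO: 270
    print("ordered:", seek_distance(start, elevator_order(start, queue)))  # ordered: 120
```

The sweep avoids the long back-and-forth arm movements that FIFO order produces. Per-request seek time depends on distance, so less total movement means more requests per second from the same drive.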

Archived post with part of SHARE presentation on some of the work:

https://www.garlic.com/~lynn/94.html#18

some posts on undergraduate work

https://www.garlic.com/~lynn/2023c.html#68 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023c.html#67 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023c.html#46 IBM DASD

https://www.garlic.com/~lynn/2023c.html#26 Global & Local Page Replacement

https://www.garlic.com/~lynn/2023c.html#25 IBM Downfall

https://www.garlic.com/~lynn/2013g.html#39 Old data storage or data base

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Tymshare Date: 13 Aug, 2023 Blog: Facebook

re:

Other trivia: TYMNET

https://en.wikipedia.org/wiki/Tymnet

MD acquired TYMNET as part of TYMSHARE purchase ... and then fairly early sold it off to BT

https://en.wikipedia.org/wiki/Tymshare#Tymshare_sold_to_McDonnell_Douglas

Then MD "merges" with Boeing (joke: MD bought Boeing with Boeing's own money, turning it into a financial engineering company)

https://mattstoller.substack.com/p/the-coming-boeing-bailout

https://newrepublic.com/article/154944/boeing-737-max-investigation-indonesia-lion-air-ethiopian-airlines-managerial-revolution

The 100yr, 2016 Boeing "century" publication had an article saying that the "merger" with M/D nearly took down Boeing and might yet still.

... older history, as undergraduate in 60s, I was hired fulltime into small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into an independent business unit to better monetize investment, including offering services to non-Boeing entities). I thot Renton datacenter possibly largest in world, couple hundred million in IBM 360s, 360/65s arriving faster than could be installed, boxes constantly staged in hallways around machine room. Disaster plan had Renton being replicated up at new 747 plant in Everett (Mt. Rainier heats up and the resulting mud slide takes out Renton). Lots of politics between Renton director and CFO ... who only had a 360/30 up at Boeing Field for payroll (although they enlarge the machine room to install a 360/67 for me to play with when I'm not doing other stuff).

Posts mentioning working for Boeing CFO and later MD "merges" with Boeing

https://www.garlic.com/~lynn/2022d.html#91 Short-term profits and long-term consequences -- did Jack Welch break capitalism?

https://www.garlic.com/~lynn/2022b.html#117 Downfall: The Case Against Boeing

https://www.garlic.com/~lynn/2022.html#109 Not counting dividends IBM delivered an annualized yearly loss of 2.27%

https://www.garlic.com/~lynn/2021f.html#78 The Long-Forgotten Flight That Sent Boeing Off Course

https://www.garlic.com/~lynn/2021f.html#57 "Hollywood model" for dealing with engineers

https://www.garlic.com/~lynn/2021b.html#40 IBM & Boeing run by Financiers

https://www.garlic.com/~lynn/2020.html#10 "This Plane Was Designed By Clowns, Who Are Supervised By Monkeys"

https://www.garlic.com/~lynn/2019e.html#153 At Boeing, C.E.O.'s Stumbles Deepen a Crisis

https://www.garlic.com/~lynn/2019e.html#151 OT: Boeing to temporarily halt manufacturing of 737 MAX

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Tymshare Date: 13 Aug, 2023 Blog: Facebook

re:

Tymshare Beginnings

https://en.wikipedia.org/wiki/Tymshare#Beginnings

Tymshare initially focussed on the SDS 940 platform, initially running at University of California Berkeley. They received their own leased 940 in mid-1966, running the Berkeley Timesharing System, which had limited time-sharing capability. IBM Stretch programmer Ann Hardy rewrote the time-sharing system to service 32 simultaneous users. By 1969 the company had three locations, 100 staff, and five SDS 940s.[6]

...

It soon became apparent that the SDS 940 could not keep up with the rapid growth of the network. In 1972, Joseph Rinde joined the Tymnet group and began porting the Supervisor code to the 32-bit Interdata 7/32, as the 8/32 was not yet ready. In 1973, the 8/32 became available, but the performance was disappointing, and a crash-effort was made to develop a machine that could run Rinde's Supervisor.

... snip ...

... trivia & topic drift; Univ had 709/1401 and IBM had sold them 360/67 for tss/360 to replace 709/1401. Within year of taking intro to fortran/computers and 360/67 arriving, univ. hires me fulltime responsible for os/360 (tss/360 never came to production and 360/67 was used as 360/65). Shutdown datacenter on weekends and I had it dedicated (but 48hrs w/o sleep could make monday class hard). I did a lot of os/360 optimization. Student fortran had run under second on 709, but was over a minute on 360/65. I installed HASP and cut time in half, then optimized disk layout (for arm seek and multi-track search) cutting another 2/3rds to 12.9sec ... never got better until I install WATFOR.

posts mentioning HASP, ASP, JES, NJE/NJI

https://www.garlic.com/~lynn/submain.html#hasp

Then CP67 was installed at univ (3rd after CSC itself and MIT Lincoln Labs) and I mostly got to play with it in weekend dedicated time. OS/360 benchmark ran 322secs standalone, 856secs in virtual machine. After a few months I cut CP67 overhead CPU from 534secs to 113secs (reduction of 435secs). There was TSS/360 IBMer still around and one weekend he did simulated 4user, interactive, fortran edit, compile, execute ... and I did same with CP67/CMS for 35 users on same hardware ... with better interactive response and throughput. Archived post with part of SHARE presentation on OS/360 and CP67 improvement work

https://www.garlic.com/~lynn/94.html#18

Then I did new page replacement and dynamic adaptive resource management (scheduling). Originally CP67 did FIFO I/O queuing and single 4k page transfer per I/O. I redid it with ordered seek queuing (increasing 2314 disk throughput) and chained multiple page transfers ordered to maximize transfers per revolution (increasing 2314 disk and 2301 drum throughput, 2301 had been about 75/sec, change allowed it to hit nearly channel transfer at 270/sec).
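The gain from chaining page transfers in rotational order can be sketched with a toy model. The model assumes a track (or drum) divided into a fixed number of equal page slots, one slot-time per transfer, and no per-I/O startup overhead (in practice that overhead made single-transfer FIFO I/O even worse). The names and slot numbers are hypothetical, and this is not the CP67 paging code.

```python
from typing import List


def rotation_time(order: List[int], start: int, n_slots: int) -> int:
    """Slot-times to transfer pages in the given order.

    Each transfer waits for its slot to rotate under the head, then takes
    one slot-time; afterward the head sits just past that slot.
    """
    position = start
    total = 0
    for slot in order:
        total += (slot - position) % n_slots + 1
        position = (slot + 1) % n_slots
    return total


def rotational_order(requests: List[int], start: int, n_slots: int) -> List[int]:
    """Order requests by how soon each slot arrives under the head."""
    return sorted(requests, key=lambda slot: (slot - start) % n_slots)


if __name__ == "__main__":
    requests = [5, 1, 6, 2]  # hypothetical slots, in arrival (FIFO) order
    print("FIFO:", rotation_time(requests, 0, 8))                              # FIFO: 19
    print("chained:", rotation_time(rotational_order(requests, 0, 8), 0, 8))   # chained: 7
```

In this toy case the chained order finishes all four pages within a single revolution (7 of 8 slot-times). FIFO order needs more than two revolutions.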

CP67 had arrived with 1052 and 2741 support that did dynamic terminal type identification (capable of switching the port scanner type). Univ had some number of TTY/ascii terminals ... so I added TTY/ascii support integrated with dynamic terminal type identification (adding ascii port scanner support, trivia: the MES to add ascii port scanner arrived in Heathkit box). I then wanted to do a single phone number for all terminal types, but official IBM controller had port line speed hard wired (could change port terminal-type scanner but not the speed) so didn't work.

That starts a univ. project to do clone controller, build a mainframe channel interface for Interdata/3 programmed to simulate IBM controller ... with the addition it could do dynamic line speed. This is then upgraded to Interdata/4 for the channel interface and a cluster of Interdata/3s for port scanners. Interdata (and then Perkin-Elmer) use it selling clone controller to IBM mainframe customers (along the way, upgrading to faster Interdatas) ... four of us get written up for (some part of) IBM mainframe clone controller business.

360 plug compatible controller posts

https://www.garlic.com/~lynn/submain.html#360pcm

I was then hired into Boeing CFO office (mentioned previously). When I graduate, I join IBM Cambridge Science Center. Note in the 60s two commercial spinoffs of CSC were IDC and NCSS (mentioned in the Tymshare wiki page). CSC had same size 360/67 (768kbyte memory) as univ. and I migrate all my CP67 enhancements to CSC CP67 (including global LRU replacement). The IBM Grenoble Science Center had a 1mbyte 360/67 and modified CP67 to implement working set dispatcher (found in academic literature of the period & "local LRU" replacement). Both CSC and Grenoble had similar workloads, but my CP67 with 80users (and 104 available 4kpages) had better response and throughput than the Grenoble CP67 with 35 users (and 155 available 4kpages). When virtual memory support was added to all IBM 370s, CP67 morphed into VM370 ... and TYMSHARE starts offering VM370 online services.
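Global LRU replacement is commonly approximated with a "clock" sweep over all real page frames, regardless of which virtual machine owns them. A local policy would instead pick victims only from the faulting user's own pages or working set. Below is a minimal sketch of the global variant, assuming one reference bit per frame that hardware sets and software can clear. The class and field names are hypothetical, and this is not the CP67 implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    owner: str               # virtual machine whose page occupies this real frame
    page: int                # that virtual machine's page number
    referenced: bool = False  # reference bit, normally set by hardware on access


class GlobalClock:
    """One clock hand sweeping every frame in real storage (global policy)."""

    def __init__(self, frames: List[Frame]):
        self.frames = frames
        self.hand = 0

    def touch(self, idx: int) -> None:
        # Stand-in for the hardware setting the reference bit on an access.
        self.frames[idx].referenced = True

    def select_victim(self) -> int:
        # Terminates: after at most one full sweep every bit has been cleared.
        while True:
            idx = self.hand
            frame = self.frames[idx]
            self.hand = (idx + 1) % len(self.frames)
            if frame.referenced:
                frame.referenced = False  # recently used: clear bit, second chance
            else:
                return idx  # not referenced since the hand last passed: replace it


if __name__ == "__main__":
    frames = [Frame("vm1", 0, True), Frame("vm2", 0, False),
              Frame("vm1", 1, True), Frame("vm3", 0, True)]
    clock = GlobalClock(frames)
    victim = clock.select_victim()
    print(victim, frames[victim].owner)  # 1 vm2
```

The victim comes from whichever user's page has gone unreferenced longest in sweep terms. Under a local policy, a fault by vm1 could only take one of vm1's own frames.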

After transferring to SJR, I did some work with Jim Gray and Vera Watson on the original SQL/relational RDBMS. Jim then left IBM for Tandem. Later Jim contacts me and asks if I can help a Tandem co-worker get his Stanford PhD ... involving global LRU page replacement and the "local LRU" forces from the 60s are lobbying Stanford to not grant a PhD involving global LRU (Jim knew I had detailed Cambridge/Grenoble local/global comparison for CP67).

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

commercial (virtual machine) online services

https://www.garlic.com/~lynn/submain.html#online

system/r posts

https://www.garlic.com/~lynn/submain.html#systemr

page replacement and working set posts

https://www.garlic.com/~lynn/subtopic.html#wsclock

dynamic adaptive resource management posts

https://www.garlic.com/~lynn/subtopic.html#fairshare

some posts mentioning CP67 work for ordered seek queuing and chaining multiple 4k page requests in single I/O

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2022f.html#44 z/VM 50th

https://www.garlic.com/~lynn/2022d.html#30 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022d.html#21 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2021e.html#37 Drums

https://www.garlic.com/~lynn/2020.html#42 If Memory Had Been Cheaper

https://www.garlic.com/~lynn/2017j.html#71 A Computer That Never Was: the IBM 7095

https://www.garlic.com/~lynn/2017e.html#4 TSS/8, was A Whirlwind History of the Computer

https://www.garlic.com/~lynn/2017d.html#65 Paging subsystems in the era of bigass memory

https://www.garlic.com/~lynn/2016g.html#29 Computer hard drives have shrunk like crazy over the last 60 years -- here's a look back

https://www.garlic.com/~lynn/2013g.html#39 Old data storage or data base

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Maneuver Warfare as a Tradition. A Blast from the Past Date: 13 Aug, 2023 Blog: Facebook

Maneuver Warfare as a Tradition. A Blast from the Past

In briefings, Boyd would also mention Guderian instructed Verbal Orders Only for the blitzkrieg ... stressing officers on the spot were encouraged to make decisions without having to worry about the Monday morning quarterbacks questioning what should have been done.

one of my old posts here

https://slightlyeastofnew.com/2019/11/18/creating-agile-leaders/

Impact Of Technology On Military Manpower Requirements (Dec1980)

https://books.google.com/books?id=wKY3AAAAIAAJ&printsec=frontcover&source=gbs_ge_summary_r&cad=0#v

top of pg. 104:

# Verbal orders only, convey only general intentions, delegate authority to lowest possible level and give subordinates broad latitude to devise their own means to achieve commander's intent. Subordinates restrict communications to upper echelons to general difficulties and progress, Result: clear, high speed, low volume communications,

... snip ...

post also at Linkedin

https://www.linkedin.com/posts/lynnwheeler_maneuver-warfare-as-a-tradition-activity-7096921373977022464-XLku/

Boyd posts & URLs

https://www.garlic.com/~lynn/subboyd.html

past posts mentioning Verbal Orders Only

https://www.garlic.com/~lynn/2022h.html#19 Sun Tzu, Aristotle, and John Boyd

https://www.garlic.com/~lynn/2020.html#12 Boyd: The Fighter Pilot Who Loathed Lean?

https://www.garlic.com/~lynn/2017i.html#5 Mission Command: The Who, What, Where, When and Why An Anthology

https://www.garlic.com/~lynn/2017i.html#2 Mission Command: The Who, What, Where, When and Why An Anthology

https://www.garlic.com/~lynn/2017f.html#14 Fast OODA-Loops increase Maneuverability

https://www.garlic.com/~lynn/2016g.html#13 Rogue sysadmins the target of Microsoft's new 'Shielded VM' security

https://www.garlic.com/~lynn/2016d.html#28 Manazir: Networked Systems Are The Future Of 5th-Generation Warfare, Training

https://www.garlic.com/~lynn/2016d.html#18 What Would Be Your Ultimate Computer?

https://www.garlic.com/~lynn/2015d.html#19 Where to Flatten the Officer Corps

https://www.garlic.com/~lynn/2015.html#80 Here's how a retired submarine captain would save IBM

https://www.garlic.com/~lynn/2014f.html#46 The Pentagon Wars

https://www.garlic.com/~lynn/2014.html#16 Command Culture

https://www.garlic.com/~lynn/2013k.html#48 John Boyd's Art of War

https://www.garlic.com/~lynn/2013e.html#81 How Criticizing in Private Undermines Your Team - Harvard Business Review

https://www.garlic.com/~lynn/2013e.html#10 The Knowledge Economy Two Classes of Workers

https://www.garlic.com/~lynn/2012k.html#7 Is there a connection between your strategic and tactical assertions?

https://www.garlic.com/~lynn/2012i.html#50 Is there a connection between your strategic and tactical assertions?

https://www.garlic.com/~lynn/2012h.html#63 Is this Boyd's fundamental postulate, 'to improve our capacity for independent action'?

https://www.garlic.com/~lynn/2012g.html#84 Monopoly/ Cartons of Punch Cards

https://www.garlic.com/~lynn/2012f.html#2 Did they apply Boyd's concepts?

https://www.garlic.com/~lynn/2012c.html#51 How would you succinctly desribe maneuver warfare?

https://www.garlic.com/~lynn/2012b.html#26 Strategy subsumes culture

https://www.garlic.com/~lynn/2012.html#45 You may ask yourself, well, how did I get here?

https://www.garlic.com/~lynn/2011l.html#52 An elusive command philosophy and a different command culture

https://www.garlic.com/~lynn/2011k.html#3 Preparing for Boyd II

https://www.garlic.com/~lynn/2011j.html#7 Innovation and iconoclasm

https://www.garlic.com/~lynn/2010i.html#68 Favourite computer history books?

https://www.garlic.com/~lynn/2010e.html#43 Boyd's Briefings

https://www.garlic.com/~lynn/2009j.html#34 Mission Control & Air Cooperation

https://www.garlic.com/~lynn/2009e.html#73 Most 'leaders' do not 'lead' and the majority of 'managers' do not 'manage'. Why is this?

https://www.garlic.com/~lynn/2008o.html#69 Blinkenlights

https://www.garlic.com/~lynn/2008h.html#63 how can a hierarchical mindset really ficilitate inclusive and empowered organization

https://www.garlic.com/~lynn/2008h.html#61 Up, Up, ... and Gone?

https://www.garlic.com/~lynn/2008h.html#8a Using Military Philosophy to Drive High Value Sales

https://www.garlic.com/~lynn/2007c.html#25 Special characters in passwords was Re: RACF - Password rules

https://www.garlic.com/~lynn/2007b.html#37 Special characters in passwords was Re: RACF - Password rules

https://www.garlic.com/~lynn/2006q.html#41 was change headers: The Fate of VM - was: Re: Baby MVS???

https://www.garlic.com/~lynn/2006g.html#9 The Pankian Metaphor

https://www.garlic.com/~lynn/2006f.html#14 The Pankian Metaphor

https://www.garlic.com/~lynn/2004q.html#86 Organizations with two or more Managers

https://www.garlic.com/~lynn/2004k.html#24 Timeless Classics of Software Engineering

https://www.garlic.com/~lynn/2003p.html#27 The BASIC Variations

https://www.garlic.com/~lynn/2003h.html#51 employee motivation & executive compensation

https://www.garlic.com/~lynn/2002q.html#33 Star Trek: TNG reference

https://www.garlic.com/~lynn/2002d.html#38 Mainframers: Take back the light (spotlight, that is)

https://www.garlic.com/~lynn/2002d.html#36 Mainframers: Take back the light (spotlight, that is)

https://www.garlic.com/~lynn/2001.html#29 Review of Steve McConnell's AFTER THE GOLD RUSH

https://www.garlic.com/~lynn/99.html#120 atomic History

https://www.garlic.com/~lynn/aadsm28.htm#10 Why Security Modelling doesn't work -- the OODA-loop of today's battle

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Copyright Software Date: 14 Aug, 2023 Blog: Facebook

... result from legal action, IBM 23Jun1969 "unbundling" announcement ... starting to charge for software .... and adding "copyright" banner as comments in headers of source files. They were able to make the case that kernel software could still be free. First half of 70s, IBM had the "future system" project ... new computer generation that was completely different and was going to completely replace 360/370.

During FS, internal politics was killing off 370 efforts ... the lack of new 370 during FS is credited with giving 370 clone makers (Amdahl, etc) their market foothold. When FS implodes, there is mad rush to get stuff back into the 370 product pipelines, including kicking off the quick&dirty 303x&3081. Also with the rise of 370 clone makers, the decision was made to start charging for kernel software (existing kernel software was still free, but new incremental kernel add-ons would be charged for, eventually, after a few years, transitioning to all kernel software being charged).

unbundling posts

https://www.garlic.com/~lynn/submain.html#unbundle

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

Note: after joining IBM, I was allowed to wander around IBM and customer datacenters. Director of one of the largest customer financial datacenters liked me to stop by and talk technology. At some point the local IBM branch manager horribly offended the customer and in retaliation, the customer ordered an Amdahl system (lone Amdahl in vast football field of IBM systems). Up until then Amdahl had been primarily selling into the technical/scientific/university market ... and this would be the first clone 370 in true blue commercial account. Then I was asked to go live on site for 6-12 months (apparently to obfuscate why the customer was ordering an Amdahl machine). I talked it over with the customer and decided to decline the offer. I was then told that the branch manager was a good sailing buddy of the IBM CEO, and if I didn't, I could forget having a career, promotions, raises.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

Posts mentioning commercial customer ordering Amdahl

https://www.garlic.com/~lynn/2023c.html#56 IBM Empty Suits

https://www.garlic.com/~lynn/2023b.html#84 Clone/OEM IBM systems

https://www.garlic.com/~lynn/2023.html#51 IBM Bureaucrats, Careerists, MBAs (and Empty Suits)

https://www.garlic.com/~lynn/2023.html#45 IBM 3081 TCM

https://www.garlic.com/~lynn/2022g.html#66 IBM Dress Code

https://www.garlic.com/~lynn/2022g.html#59 Stanford SLAC (and BAYBUNCH)

https://www.garlic.com/~lynn/2022e.html#103 John Boyd and IBM Wild Ducks

https://www.garlic.com/~lynn/2022e.html#82 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022e.html#60 IBM CEO: Only 60% of office workers will ever return full-time

https://www.garlic.com/~lynn/2022e.html#14 IBM "Fast-Track" Bureaucrats

https://www.garlic.com/~lynn/2022d.html#35 IBM Business Conduct Guidelines

https://www.garlic.com/~lynn/2022d.html#21 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022b.html#95 IBM Salary

https://www.garlic.com/~lynn/2022b.html#88 Computer BUNCH

https://www.garlic.com/~lynn/2022b.html#27 Dataprocessing Career

https://www.garlic.com/~lynn/2022.html#74 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#47 IBM Conduct

https://www.garlic.com/~lynn/2022.html#15 Mainframe I/O

https://www.garlic.com/~lynn/2021k.html#119 70s & 80s mainframes

https://www.garlic.com/~lynn/2021k.html#105 IBM Future System

https://www.garlic.com/~lynn/2021j.html#93 IBM 3278

https://www.garlic.com/~lynn/2021j.html#4 IBM Lost Opportunities

https://www.garlic.com/~lynn/2021i.html#81 IBM Downturn

https://www.garlic.com/~lynn/2021h.html#61 IBM Starting Salary

https://www.garlic.com/~lynn/2021e.html#66 Amdahl

https://www.garlic.com/~lynn/2021e.html#15 IBM Internal Network

https://www.garlic.com/~lynn/2021d.html#85 Bizarre Career Events

https://www.garlic.com/~lynn/2021d.html#66 IBM CEO Story

https://www.garlic.com/~lynn/2021c.html#37 Some CP67, Future System and other history

https://www.garlic.com/~lynn/2021.html#82 Kinder/Gentler IBM

https://www.garlic.com/~lynn/2021.html#52 Amdahl Computers

https://www.garlic.com/~lynn/2021.html#39 IBM Tech

https://www.garlic.com/~lynn/2021.html#8 IBM CEOs

https://www.garlic.com/~lynn/2019e.html#138 Half an operating system: The triumph and tragedy of OS/2

https://www.garlic.com/~lynn/2019e.html#29 IBM History

https://www.garlic.com/~lynn/2019b.html#80 TCM

https://www.garlic.com/~lynn/2018f.html#68 IBM Suits

https://www.garlic.com/~lynn/2018e.html#27 Wearing a tie cuts circulation to your brain

https://www.garlic.com/~lynn/2018d.html#6 Workplace Advice I Wish I Had Known

https://www.garlic.com/~lynn/2018c.html#27 Software Delivery on Tape to be Discontinued

https://www.garlic.com/~lynn/2018.html#55 Now Hear This--Prepare For The "To Be Or To Do" Moment

https://www.garlic.com/~lynn/2017d.html#49 IBM Career

https://www.garlic.com/~lynn/2017c.html#92 An OODA-loop is a far-from-equilibrium, non-linear system with feedback

https://www.garlic.com/~lynn/2016h.html#86 Computer/IBM Career

https://www.garlic.com/~lynn/2016e.html#95 IBM History

https://www.garlic.com/~lynn/2016.html#41 1976 vs. 2016?

https://www.garlic.com/~lynn/2014i.html#54 IBM Programmer Aptitude Test

https://www.garlic.com/~lynn/2014i.html#52 IBM Programmer Aptitude Test

https://www.garlic.com/~lynn/2013l.html#22 Teletypewriter Model 33

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Copyright Software Date: 14 Aug, 2023 Blog: Facebook

re:

trivia/topic drift: a decade ago I was asked to track down decision to make all 370s virtual memory (found staff member for executive that made decision). Basically OS/MVT storage management was so bad that region sizes had to be specified four times larger than used ... so a typical 1mbyte 370/165 could only run four regions concurrently, insufficient to keep system busy and justified. Going to 16mbyte virtual memory could increase the number of concurrently running regions by factor of four with little or no paging. Initially OS/VS2 SVS was little different than running MVT in a 16mbyte CP67 virtual machine.

However, 165 people were then complaining that if they had to implement the full 370 virtual memory architecture ... the announce would slip six months. Eventually it was stripped back to subset maintaining the schedule ... and all the other models (and software) that already implemented/used the full architecture had to retrench to the 165 subset.

trivia: I would drop by to see Ludlow who was doing the SVS prototype offshift on 360/67. The biggest piece of code was handling EXCP channel programs; aka application libraries built the I/O channel programs and passed address to SVC0/EXCP. Channel programs had to have real addresses ... which meant that a copy of the passed channel programs was made substituting real addresses for virtual. This was same problem that CP67 had (running virtual machines) ... and Ludlow borrowed the CP67 CCWTRANS for crafting into EXCP.
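The channel-program copy described above can be sketched as follows. The sketch assumes a simplified CCW holding only a command code, data address, byte count and flags, plus 4K pages and a page table mapping virtual to real page numbers. It is a hypothetical illustration, not CCWTRANS. It does not fix (pin) pages for the duration of the I/O and does not relocate TIC (branch) CCWs to point at the copy. It also does not split buffers that cross a page boundary: adjacent virtual pages need not be in contiguous real frames, so such buffers are simply rejected.

```python
from dataclasses import dataclass, replace
from typing import Dict, List

PAGE_SIZE = 4096  # assumption: 4K pages


@dataclass(frozen=True)
class CCW:
    command: int     # channel command code (e.g. 0x02 read, 0x01 write)
    address: int     # data address: virtual in the caller's copy, real in the shadow
    count: int       # byte count (assumed >= 1)
    flags: int = 0   # chaining flags, copied unchanged in this sketch


def translate_channel_program(program: List[CCW], page_table: Dict[int, int]) -> List[CCW]:
    """Build a shadow copy of the channel program with real data addresses."""
    shadow = []
    for ccw in program:
        vpage, offset = divmod(ccw.address, PAGE_SIZE)
        last_page = (ccw.address + ccw.count - 1) // PAGE_SIZE
        if last_page != vpage:
            raise ValueError("buffer crosses a page boundary; not handled in this sketch")
        if vpage not in page_table:
            raise KeyError(f"virtual page {vpage} is not resident")
        real_address = page_table[vpage] * PAGE_SIZE + offset
        shadow.append(replace(ccw, address=real_address))  # caller's CCWs untouched
    return shadow


if __name__ == "__main__":
    page_table = {2: 7, 3: 1}  # hypothetical virtual page -> real frame mapping
    program = [CCW(0x02, 2 * PAGE_SIZE + 100, 80), CCW(0x01, 3 * PAGE_SIZE, 4096)]
    print([ccw.address for ccw in translate_channel_program(program, page_table)])
    # [28772, 4096]
```

The original channel program is left untouched, and only the translated copy would be handed to the channel. This mirrors the copy-and-substitute step the post describes.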

archived post with pieces of email exchange

https://www.garlic.com/~lynn/2011d.html#73

recent posts mentioning Ludlow & SVS prototype

https://www.garlic.com/~lynn/2023e.html#4 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023b.html#103 2023 IBM Poughkeepsie, NY

https://www.garlic.com/~lynn/2022h.html#93 IBM 360

https://www.garlic.com/~lynn/2022h.html#22 370 virtual memory

https://www.garlic.com/~lynn/2022f.html#41 MVS

https://www.garlic.com/~lynn/2022f.html#7 Vintage Computing

https://www.garlic.com/~lynn/2022e.html#91 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022d.html#55 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022.html#70 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#58 Computer Security

https://www.garlic.com/~lynn/2022.html#10 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2021h.html#48 Dynamic Adaptive Resource Management

https://www.garlic.com/~lynn/2021g.html#6 IBM 370

https://www.garlic.com/~lynn/2021b.html#63 Early Computer Use

https://www.garlic.com/~lynn/2021b.html#59 370 Virtual Memory

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Copyright Software Date: 14 Aug, 2023 Blog: Facebook

re:

more FS background

http://www.jfsowa.com/computer/memo125.htm

one of my hobbies after joining IBM was enhanced production (360&370) operating systems for internal datacenters (circa 1975, somehow leaked to AT&T longlines, but that's another story) and the FS people periodically drop by to talk about my work ... I would ridicule what they were doing (which wasn't exactly career enhancing). One of the final nails in the FS coffin was study by Houston Science Center which showed if 370/195 software was redone for FS, running on machine made out of the fastest available hardware technology, it would have throughput of 370/145 (about 30times slowdown).

ref to ACS/360 shutdown ... terminated because executives were afraid it would advance state-of-the-art too fast and they would lose control of the market. Amdahl leaves shortly after acs/360 shutdown (lists some features that show up more than 20yrs later in the 90s with ES/9000).

https://people.cs.clemson.edu/~mark/acs_end.html

Amdahl had talk at large MIT auditorium shortly after starting his company and some of us from cambridge science center attended. Somebody asked him about business case he used with investors. He said that there was so much customer developed 360 software, that even if IBM were to totally walk away from 360(/370), it would keep him in business until the end of century. This sort of sounded like he was aware of FS ... but he has repeatedly claimed he had no knowledge of FS.

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

recent posts mentioning Sowa's FS memo and end of ACS/360

https://www.garlic.com/~lynn/2023d.html#94 The IBM mainframe: How it runs and why it survives

https://www.garlic.com/~lynn/2023d.html#87 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#63 CICS Product 54yrs old today

https://www.garlic.com/~lynn/2023b.html#84 Clone/OEM IBM systems

https://www.garlic.com/~lynn/2023b.html#20 IBM Technology

https://www.garlic.com/~lynn/2023b.html#6 z/VM 50th - part 7

https://www.garlic.com/~lynn/2023b.html#0 IBM 370

https://www.garlic.com/~lynn/2023.html#74 IBM 4341

https://www.garlic.com/~lynn/2023.html#73 IBM 4341

https://www.garlic.com/~lynn/2023.html#72 IBM 4341

https://www.garlic.com/~lynn/2023.html#41 IBM 3081 TCM

https://www.garlic.com/~lynn/2023.html#36 IBM changes between 1968 and 1989

https://www.garlic.com/~lynn/2022h.html#120 IBM Controlling the Market

https://www.garlic.com/~lynn/2022h.html#117 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022h.html#114 TOPS-20 Boot Camp for VMS Users 05-Mar-2022

https://www.garlic.com/~lynn/2022h.html#93 IBM 360

https://www.garlic.com/~lynn/2022h.html#48 smaller faster cheaper, computer history thru the lens of esthetics versus economics

https://www.garlic.com/~lynn/2022h.html#33 computer history thru the lens of esthetics versus economics

https://www.garlic.com/~lynn/2022h.html#17 Arguments for a Sane Instruction Set Architecture--5 years later

https://www.garlic.com/~lynn/2022h.html#2 360/91

https://www.garlic.com/~lynn/2022g.html#59 Stanford SLAC (and BAYBUNCH)

https://www.garlic.com/~lynn/2022g.html#22 3081 TCMs

https://www.garlic.com/~lynn/2022f.html#109 IBM Downfall

https://www.garlic.com/~lynn/2022e.html#61 Channel Program I/O Processing Efficiency

https://www.garlic.com/~lynn/2022b.html#99 CDC6000

https://www.garlic.com/~lynn/2022b.html#88 Computer BUNCH

https://www.garlic.com/~lynn/2022b.html#51 IBM History

https://www.garlic.com/~lynn/2022.html#77 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#74 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#31 370/195

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Maneuver Warfare as a Tradition. A Blast from the Past Date: 14 Aug, 2023 Blog: Facebook

re:

well ... long-winded "internet" followup ... would require spreading across multiple comments ... so instead redid as one large article

https://www.linkedin.com/pulse/maneuver-warfare-tradition-blast-from-past-lynn-wheeler/

Maneuver Warfare as a Tradition. A Blast from the Past

https://tacticalnotebook.substack.com/p/maneuver-warfare-as-a-tradition

In briefings, Boyd would also mention that Guderian instructed "Verbal Orders Only" for the blitzkrieg ... stressing that officers on the spot were encouraged to make decisions without having to worry about Monday morning quarterbacks questioning what should have been done.

one of my old posts here

https://slightlyeastofnew.com/2019/11/18/creating-agile-leaders/

Impact Of Technology On Military Manpower Requirements (Dec1980)

https://books.google.com/books?id=wKY3AAAAIAAJ&printsec=frontcover&source=gbs_ge_summary_r&cad=0#v

top of pg. 104:

Verbal orders only, convey only general intentions, delegate authority to lowest possible level and give subordinates broad latitude to devise their own means to achieve commander's intent. Subordinates restrict communications to upper echelons to general difficulties and progress. Result: clear, high speed, low volume communications.

... snip ...

post redone as article with long-winded internet followup and topic drift (to some comments)

https://www.linkedin.com/posts/lynnwheeler_maneuver-warfare-as-a-tradition-activity-7096921373977022464-XLku/

coworker at cambridge science center and san jose research

https://en.wikipedia.org/wiki/Edson_Hendricks

In June 1975, MIT Professor Jerry Saltzer accompanied Hendricks to DARPA, where Hendricks described his innovations to the principal scientist, Dr. Vinton Cerf. Later that year in September 15-19 of 75, Cerf and Hendricks were the only two delegates from the United States, to attend a workshop on Data Communications at the International Institute for Applied Systems Analysis, 2361 Laxenburg Austria where again, Hendricks spoke publicly about his innovative design which paved the way to the Internet as we know it today.

... snip ...

and "It's Cool to Be Clever: The Story of Edson C. Hendricks, the Genius Who Invented the Design for the Internet"

https://www.amazon.com/Its-Cool-Be-Clever-Hendricks/dp/1897435630/

Ed tried to get IBM to support the internet and failed; SJMN article (behind paywall but mostly free at the Wayback Machine)

https://web.archive.org/web/20000124004147/http://www1.sjmercury.com/svtech/columns/gillmor/docs/dg092499.htm

additional correspondence with IBM executives (Ed passed away Aug2020; his website is at the Wayback Machine)

https://web.archive.org/web/20000115185349/http://www.edh.net/bungle.htm

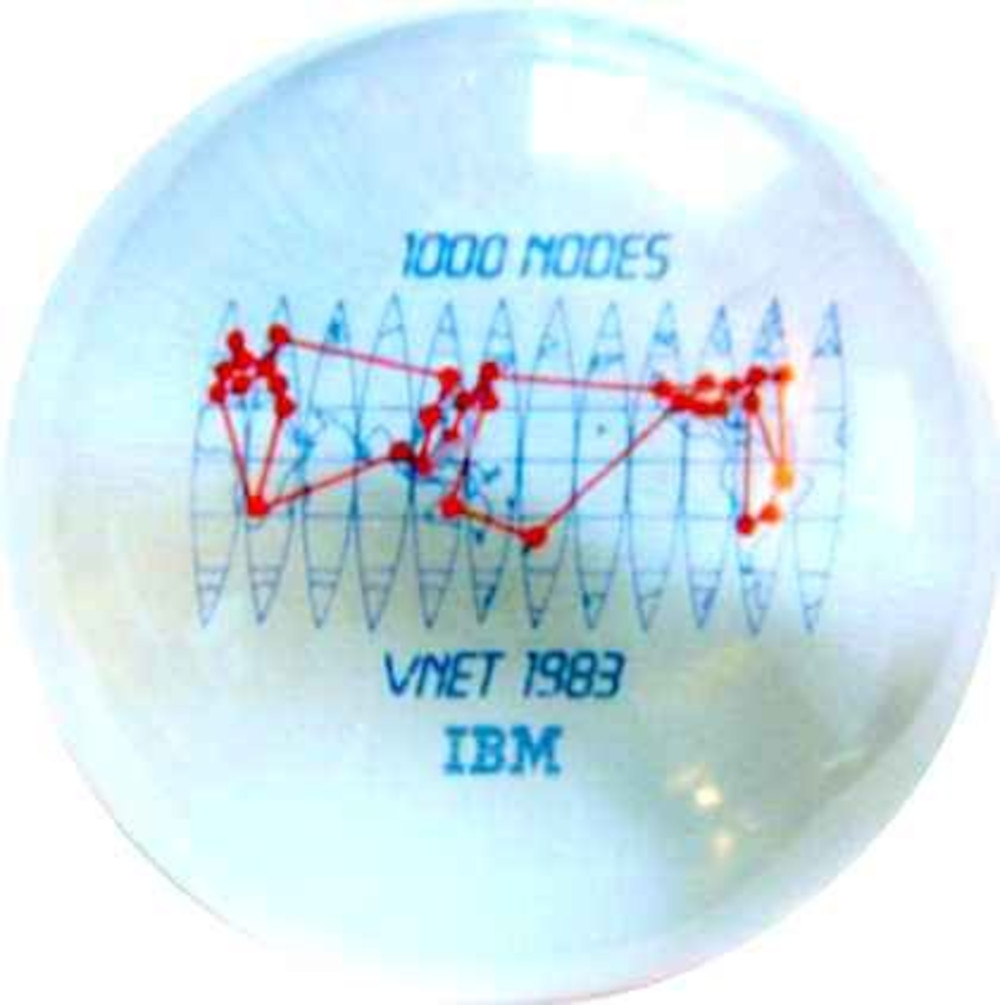

Ed was also responsible for the world-wide internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s). At the time of the ARPANET transition from IMPs/HOSTs to TCP/IP, it had approx. 255 hosts while the internal network was closing in on 1000 hosts. One of our difficulties was with governments over the corporate requirement that all links be encrypted ... especially government problems when links crossed national boundaries. The technology was also used for the corporate-sponsored university "BITNET" (including EARN in Europe).

https://en.wikipedia.org/wiki/BITNET

Ed left IBM about the same time I was introduced to John Boyd. I also had the HSDT project (and hired Ed to help), T1 and faster computer links, and was supposed to get $20M from the director of NSF to interconnect the NSF Supercomputer centers. Then congress cuts the budget, some other things happen, and finally an RFP is released (in part based on what we already had running) ... Preliminary announcement (28Mar1986):

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers Program to provide Supercomputer cycles; the New Technologies Program to foster new supercomputer software and hardware developments; and the Networking Program to build a National Supercomputer Access Network - NSFnet.

... snip ...

IBM internal politics did not allow us to bid (being blamed for online computer conferencing (precursor to social media) inside IBM likely contributed; folklore is that 5 of 6 members of the corporate executive committee wanted to fire me). The NSF director tried to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies ... but that just made the internal politics worse (as did claims that what we already had operational was at least 5yrs ahead of the winning bid; RFP awarded 24Nov87). As regional networks connect in, it becomes the NSFNET backbone, precursor to the modern internet

https://www.technologyreview.com/s/401444/grid-computing/

The last product we worked on at IBM was HA/CMP. It originally started out as HA/6000 for the NYTimes to migrate their newspaper system (ATEX) from (DEC) VAXCluster to RS/6000. I rename it HA/CMP when doing technical/scientific cluster scale-up with national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Informix, Ingres, Sybase). In early Jan1992, we have a meeting with Oracle CEO Ellison on cluster scale-up, planning 16-way by mid92 and 128-way by ye92. By end of Jan1992, cluster scale-up was transferred for announce as IBM Supercomputer and we were told we couldn't work on anything with more than four processors (we leave IBM a few months later).

Some time later I'm brought in as consultant to a small client/server startup. Two of the former Oracle people (in the Ellison meeting) that we were working with on commercial HA/CMP are there, responsible for something called "commerce server", and want to do payment transactions on the server. The startup had also invented this technology they called "SSL" that they want to use for payment transactions, sometimes now called "electronic commerce". I had responsibility for everything between webservers and the financial network. I would claim that it took me 3-10 times the original effort to turn a well designed & developed application into a service. Postel (IETF/Internet Editor) would sponsor my talk on "Why Internet Isn't Business Critical Dataprocessing" based on the work (software, procedures, documents) I had to do for "electronic commerce".

https://en.wikipedia.org/wiki/Jon_Postel

post from last year, intertwining Boyd and IBM

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

BITNET (& EARN) posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

internet payment gateway posts

https://www.garlic.com/~lynn/subnetwork.html#gateway

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

Boyd posts and web URLs

https://www.garlic.com/~lynn/subboyd.html

some posts mentioning "Why Internet Isn't Business Critical Dataprocessing"

https://www.garlic.com/~lynn/2023d.html#81 Taligent and Pink

https://www.garlic.com/~lynn/2023.html#31 IBM Change

https://www.garlic.com/~lynn/2022g.html#90 IBM Cambridge Science Center Performance Technology

https://www.garlic.com/~lynn/2022f.html#33 IBM "nine-net"

https://www.garlic.com/~lynn/2022e.html#28 IBM "nine-net"

https://www.garlic.com/~lynn/2022c.html#14 IBM z16: Built to Build the Future of Your Business

https://www.garlic.com/~lynn/2022b.html#108 Attackers exploit fundamental flaw in the web's security to steal $2 million in cryptocurrency

https://www.garlic.com/~lynn/2022b.html#68 ARPANET pioneer Jack Haverty says the internet was never finished

https://www.garlic.com/~lynn/2022b.html#38 Security

https://www.garlic.com/~lynn/2021k.html#128 The Network Nation

https://www.garlic.com/~lynn/2021k.html#87 IBM and Internet Old Farts

https://www.garlic.com/~lynn/2021k.html#57 System Availability

https://www.garlic.com/~lynn/2021j.html#55 ESnet

https://www.garlic.com/~lynn/2021j.html#42 IBM Business School Cases

https://www.garlic.com/~lynn/2021e.html#74 WEB Security

https://www.garlic.com/~lynn/2021d.html#16 The Rise of the Internet

https://www.garlic.com/~lynn/2021c.html#68 Online History

https://www.garlic.com/~lynn/2019d.html#113 Internet and Business Critical Dataprocessing

https://www.garlic.com/~lynn/2019.html#25 Are we all now dinosaurs, out of place and out of time?

https://www.garlic.com/~lynn/2018f.html#60 1970s school compsci curriculum--what would you do?

https://www.garlic.com/~lynn/2017i.html#18 progress in e-mail, such as AOL

https://www.garlic.com/~lynn/2017g.html#14 Mainframe Networking problems

https://www.garlic.com/~lynn/2017f.html#100 Jean Sammet, Co-Designer of a Pioneering Computer Language, Dies at 89

https://www.garlic.com/~lynn/2017e.html#75 11May1992 (25 years ago) press on cluster scale-up

https://www.garlic.com/~lynn/2017e.html#70 Domain Name System

https://www.garlic.com/~lynn/2017e.html#14 The Geniuses that Anticipated the Idea of the Internet

https://www.garlic.com/~lynn/2017e.html#11 The Geniuses that Anticipated the Idea of the Internet

https://www.garlic.com/~lynn/2015e.html#10 The real story of how the Internet became so vulnerable


From: Lynn Wheeler <lynn@garlic.com> Subject: A U.N. Plan to Stop Corporate Tax Abuse Date: 15 Aug, 2023 Blog: Facebook

A U.N. Plan to Stop Corporate Tax Abuse. Big business wants you to think that reforming corporate taxes is a boring and complicated subject, but it's actually simple and exciting.

... note the 2010 CBO report that for 2003-2009, tax collections were cut $6T and spending increased $6T, for a $12T gap compared to a fiscal responsibility budget (spending could not exceed tax revenue, which had been on its way to eliminating all federal debt) ... the first time taxes were cut instead of raised to pay for two wars (also sort of a confluence: the Federal Reserve and Too Big To Fail needed huge federal debt, special interests wanted a huge tax cut, and the Military-Industrial Complex wanted huge spending increases and perpetual wars)

Tax havens could cost countries $4.7 trillion over the next decade, advocacy group warns. The U.K. continues to lead the so-called "axis of tax avoidance," which drains an estimated $151 billion from global coffers through corporate profit-shifting, a new report found.

https://www.icij.org/investigations/paradise-papers/tax-havens-could-cost-countries-4-7-trillion-over-the-next-decade-advocacy-group-warns/

OECD 'disappointed' over 'surprising' UN global tax report. The UN chief pushed for a bigger say in the international tax agenda and said the group of wealthy countries had ignored the needs of developing nations.

https://www.icij.org/investigations/paradise-papers/oecd-surprised-and-disappointed-over-un-global-tax-plan/

tax fraud, tax evasion, tax loopholes, tax abuse, tax avoidance, tax haven posts