From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Book on computer architecture for beginners Newsgroups: comp.arch,alt.folklore.computers Date: 22 Jun 2005 11:45:27 -0600

keith writes:

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 22 Jun 2005 14:09:00 -0600

"Anders Rundgren" writes:

https://www.garlic.com/~lynn/aadsm5.htm#asrn2

https://www.garlic.com/~lynn/aadsm5.htm#asrn3

in the year we worked with them ... they moved and changed their name. trivia question ... who had owned the rights to their new name?

as part of the effort on doing the thing called a payment gateway and allowing servers to do payment transactions ... we also had to perform business due diligence on most of the operations that produced these things called SSL domain name certificates. At the time we coined the term certificate manufacturing ... to differentiate what most of them were doing from this thing called PKI (aka they didn't actually have any operational business process for doing much more than pushing the certificates out the door).

It was also when we coined the term merchant comfort certificates (since it made the relying parties ... aka the consumers ... feel better).

we also originated the comparison between PKI CRLs and the paper booklet invalidation model used by the payment card industry in the 60s. when some number of people would comment that it was time to move payment card transactions into the modern world using digital certificates ... we would point out to them ... rather than modernizing the activity ... it was regressing the operations by 20-30 years.

Another analogy for certificates is the offline payment card world of the 50s & 60s ... which had to mail out invalid account booklets on a monthly basis ... and then as the number of merchants, card holders and risks increased ... they started going to humongous weekly mailings. At least they had a record of all the relying parties (aka merchants) ... whereas a typical PKI operation has no idea whatsoever who the relying parties are.
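The booklet model can be sketched in a few lines. This is a hypothetical illustration (the `Issuer` and `Merchant` classes are invented for the example, not any card network's system): the issuer must know every relying party in order to push the list, and anything invalidated after the last mailing is still accepted until the next booklet arrives.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Merchant:
    """Offline relying party: knows only the last invalidation booklet it was mailed."""
    booklet: set[str] = field(default_factory=set)

    def receive_booklet(self, invalid_accounts: set[str]) -> None:
        # each mailing replaces the merchant's local copy wholesale
        self.booklet = set(invalid_accounts)

    def accept(self, account: str) -> bool:
        # offline check: anything not in the (possibly stale) booklet is accepted
        return account not in self.booklet


@dataclass
class Issuer:
    invalid: set[str] = field(default_factory=set)
    merchants: list[Merchant] = field(default_factory=list)  # must know every relying party

    def invalidate(self, account: str) -> None:
        self.invalid.add(account)

    def mail_booklets(self) -> None:
        # the monthly (later weekly) push to every known relying party
        for merchant in self.merchants:
            merchant.receive_booklet(self.invalid)


issuer = Issuer()
shop = Merchant()
issuer.merchants.append(shop)
issuer.invalidate("acct-1")
issuer.mail_booklets()
issuer.invalidate("acct-2")    # invalidated after this period's mailing
print(shop.accept("acct-1"))   # False: listed in the booklet
print(shop.accept("acct-2"))   # True: the booklet is stale
```

Shortening the mailing interval narrows the stale window but multiplies the mailing volume, which is the scaling problem described above.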

It was sometime after we started pointing out that PKIs really had a business model oriented at offline business environment ... which would result in regressing many business operations decades if it was force fit on them ... that you saw OCSP come on the scene.

OCSP doesn't actually validate any information ... it is just there to validate whether any information that might be in a certificate is actually still valid. The CRL model is known to not scale ... as found out in the payment card industry going into the 70s.

PKIs imply the administration and management of the trust information. In the offline certificate model ... either they have to have a list of all possible relying parties and regularly push invalidation lists out to them ... or they provide an online service which allows relying parties to check whether a certificate is still valid. However, as you point out, PKI administration and management of any kind doesn't really scale ... which resulted in actual deployments being simple certificate manufacturing (instead of real PKI).

The payment card industry also demonstrated the lack of scaling of the certificate-like offline model in the 70s when they converted to an online model for the actual information.

Part of the problem with the online OCSP model is that it has all the overhead of an online model with all the downside of the offline implementation, aka not providing the relying party access to real online, timely, actual information ... things like timely aggregated information (current account balance) or timely sequences of events (say for fraud detection).

part of the viability for the no/low-value market segment is to stick with simple certificate manufacturing and not actually try to manage and administer the associated trust information.

random past certificate manufacturing posts

https://www.garlic.com/~lynn/aepay2.htm#fed Federal CP model and financial transactions

https://www.garlic.com/~lynn/aepay2.htm#cadis disaster recovery cross-posting

https://www.garlic.com/~lynn/aepay3.htm#votec (my) long winded observations regarding X9.59 & XML, encryption and certificates

https://www.garlic.com/~lynn/aadsm2.htm#scale Scale (and the SRV record)

https://www.garlic.com/~lynn/aadsm2.htm#inetpki A PKI for the Internet (was RE: Scale (and the SRV

https://www.garlic.com/~lynn/aadsm3.htm#kiss7 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm5.htm#pkimort2 problem with the death of X.509 PKI

https://www.garlic.com/~lynn/aadsm5.htm#faith faith-based security and kinds of trust

https://www.garlic.com/~lynn/aadsm8.htm#softpki6 Software for PKI

https://www.garlic.com/~lynn/aadsm8.htm#softpki10 Software for PKI

https://www.garlic.com/~lynn/aadsm8.htm#softpki14 DNSSEC (RE: Software for PKI)

https://www.garlic.com/~lynn/aadsm8.htm#softpki20 DNSSEC (RE: Software for PKI)

https://www.garlic.com/~lynn/aadsm9.htm#cfppki5 CFP: PKI research workshop

https://www.garlic.com/~lynn/aadsmore.htm#client4 Client-side revocation checking capability

https://www.garlic.com/~lynn/aepay10.htm#81 SSL certs & baby steps

https://www.garlic.com/~lynn/aepay10.htm#82 SSL certs & baby steps (addenda)

https://www.garlic.com/~lynn/aadsm11.htm#34 ALARMED ... Only Mostly Dead ... RIP PKI

https://www.garlic.com/~lynn/aadsm11.htm#39 ALARMED ... Only Mostly Dead ... RIP PKI .. addenda

https://www.garlic.com/~lynn/aadsm13.htm#35 How effective is open source crypto? (bad form)

https://www.garlic.com/~lynn/aadsm13.htm#37 How effective is open source crypto?

https://www.garlic.com/~lynn/aadsm14.htm#19 Payments as an answer to spam (addenda)

https://www.garlic.com/~lynn/aadsm14.htm#37 Keyservers and Spam

https://www.garlic.com/~lynn/aadsm15.htm#0 invoicing with PKI

https://www.garlic.com/~lynn/aadsm19.htm#13 What happened with the session fixation bug?

https://www.garlic.com/~lynn/98.html#0 Account Authority Digital Signature model

https://www.garlic.com/~lynn/2000.html#40 "Trusted" CA - Oxymoron?

https://www.garlic.com/~lynn/2001d.html#7 Invalid certificate on 'security' site.

https://www.garlic.com/~lynn/2001d.html#16 Verisign and Microsoft - oops

https://www.garlic.com/~lynn/2001d.html#20 What is PKI?

https://www.garlic.com/~lynn/2001g.html#2 Root certificates

https://www.garlic.com/~lynn/2001g.html#68 PKI/Digital signature doesn't work

https://www.garlic.com/~lynn/2001h.html#0 PKI/Digital signature doesn't work

https://www.garlic.com/~lynn/2001j.html#8 PKI (Public Key Infrastructure)

https://www.garlic.com/~lynn/2003.html#41 InfiniBand Group Sharply, Evenly Divided

https://www.garlic.com/~lynn/2003l.html#36 Proposal for a new PKI model (At least I hope it's new)

https://www.garlic.com/~lynn/2003l.html#45 Proposal for a new PKI model (At least I hope it's new)

https://www.garlic.com/~lynn/2003l.html#46 Proposal for a new PKI model (At least I hope it's new)

https://www.garlic.com/~lynn/2004m.html#12 How can I act as a Certificate Authority (CA) with openssl ??

random past comfort certificate postings:

https://www.garlic.com/~lynn/aadsm2.htm#mcomfort Human Nature

https://www.garlic.com/~lynn/aadsm2.htm#mcomf3 Human Nature

https://www.garlic.com/~lynn/aadsm2.htm#useire2 U.S. & Ireland use digital signature

https://www.garlic.com/~lynn/aadsm3.htm#kiss5 Common misconceptions, was Re: KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss7 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsmail.htm#comfort AADS & X9.59 performance and algorithm key sizes

https://www.garlic.com/~lynn/aepay4.htm#comcert Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert2 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert3 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert4 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert5 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert6 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert7 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert8 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert9 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert10 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert11 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert12 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert13 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert14 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert15 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert16 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay4.htm#comcert17 Merchant Comfort Certificates

https://www.garlic.com/~lynn/aepay6.htm#dspki use of digital signatures and PKI

https://www.garlic.com/~lynn/aepay10.htm#80 Invisible Ink, E-signatures slow to broadly catch on (addenda)

https://www.garlic.com/~lynn/2000c.html#32 Request for review of "secure" storage scheme

https://www.garlic.com/~lynn/2001c.html#62 SSL weaknesses

https://www.garlic.com/~lynn/2003l.html#43 Proposal for a new PKI model (At least I hope it's new)

https://www.garlic.com/~lynn/2004b.html#39 SSL certificates

https://www.garlic.com/~lynn/2004c.html#43 why and how VeriSign, thawte became a trusted CA?

https://www.garlic.com/~lynn/2004i.html#4 New Method for Authenticated Public Key Exchange without Digital Certificates

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 22 Jun 2005 14:57:15 -0600

Anne & Lynn Wheeler writes:

misc. refs:

https://www.garlic.com/~lynn/2005i.html#42 Development as Configuration

https://www.garlic.com/~lynn/2005i.html#43 Development as Configuration

https://www.garlic.com/~lynn/2005i.html#44 SqlServerCE and SOA - an architecture question

https://www.garlic.com/~lynn/2005i.html#48 defeating firewalls made easy

https://www.garlic.com/~lynn/2005k.html#2 Ancient history

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 22 Jun 2005 15:13:41 -0600

"Anders Rundgren" writes:

the problem with OCSP services is that they supposedly just say yes/no as to whether the stale, static certificate information is still applicable or not.

as mentioned ... this has all the overhead of having an online service w/o any of the benefits.

the payment infrastructure moved out of this offline (certificate-like), archaic design in the 70s with online authentication and authorization with timely online access to the actual, real information ... like aggregated information of sequences of operations. This resulted in things like support for fraud detection patterns and current account balance. the current account balance represents the starting value (which you might or might not consider including in a stale, static, redundant and superfluous certificate?), in addition to the aggregation of all the ongoing operations updating the current account balance with subtractions and additions (say issue a brand new stale, static, redundant and superfluous certificate every time there is an account balance update, and then spray it all over the world to every possible and/or potential relying party).
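A hypothetical sketch of the contrast (all class and method names are invented for illustration; the "fraud pattern" is a toy velocity rule, not any real network's logic): an OCSP-style status lookup can only say whether a certificate is still "good", while an online authorization consults the aggregated, current balance and the recent sequence of operations.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class StaticCertificate:
    serial: int
    account: str
    balance_at_issue: int  # snapshot frozen when the certificate was manufactured


@dataclass
class Issuer:
    balances: dict[str, int] = field(default_factory=dict)
    history: dict[str, list[int]] = field(default_factory=dict)
    revoked: set[int] = field(default_factory=set)

    def post(self, account: str, amount: int) -> None:
        # aggregate every credit (+) and debit (-) into the current balance
        self.balances[account] = self.balances.get(account, 0) + amount
        self.history.setdefault(account, []).append(amount)

    def ocsp_status(self, serial: int) -> str:
        # OCSP-style answer: is the stale certificate still "good"? nothing about funds
        return "revoked" if serial in self.revoked else "good"

    def authorize(self, account: str, amount: int, max_recent_debits: int = 3) -> bool:
        # online authorization against real, aggregated information
        recent_debits = [a for a in self.history.get(account, [])[-10:] if a < 0]
        if len(recent_debits) >= max_recent_debits:  # toy fraud/velocity pattern
            return False
        if self.balances.get(account, 0) < amount:   # current account balance
            return False
        self.post(account, -amount)
        return True


issuer = Issuer()
issuer.post("acct-9", 100)
cert = StaticCertificate(serial=1, account="acct-9", balance_at_issue=100)
issuer.post("acct-9", -90)              # spending after the certificate was issued
print(issuer.ocsp_status(cert.serial))  # good
print(issuer.authorize("acct-9", 50))   # False: only 10 left
```

Both calls are a real-time round trip to the issuer; only the second one answers the question the relying party actually cares about.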

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: SHARE 50 years Newsgroups: alt.folklore.computers Date: 23 Jun 2005 06:06:01 -0600

Fifty years of sharing open source

http://business.newsforge.com/business/05/06/15/166253.shtml?tid=35&tid=18

and some comments

http://business.newsforge.com/comments.pl?sid=47512&mode=flat&commentsort=0&op=Change

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Book on computer architecture for beginners Newsgroups: comp.arch,alt.folklore.computers Date: 23 Jun 2005 06:15:02 -0600

Alex McDonald writes:

found this listing for nylon ribbons for 1403

http://www.cleansweepsupply.com/pages/item-lex0457937.html

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: SHARE 50 years? Newsgroups: bit.listserv.ibm-main Date: 23 Jun 2005 07:21:19 -0600

Fifty years of sharing open source

http://business.newsforge.com/business/05/06/15/166253.shtml?tid=35&tid=18

and some comments

http://business.newsforge.com/comments.pl?sid=47512&mode=flat&commentsort=0&op=Change

ref to presentation made at fall 68 share meeting

https://www.garlic.com/~lynn/94.html#18

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: lynn@garlic.com Newsgroups: microsoft.public.dotnet.framework.aspnet.security Subject: Re: Signing and bundling data using certificates Date: Thu, 23 Jun 2005 10:24:14 -0700

Alan Fisher wrote:

there is a business process called public/private key ... where one key is made public (public key) and the other key is kept confidentential and is never divulged (private key).

there is an additional business process called digital signature authentication ... where a hash of some data is made and then encoded with the private key. the corresponding public key can be used to decode the digital signature ... and then compare the decoded digital signature with a recomputed hash of the message. If the recomputed hash and the decoded digital signature are the same, then the recipient knows that 1) the message hasn't been modified and 2) the message originated from the holder of the private key (authenticating the originator).
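A minimal "textbook RSA" sketch of that hash/encode/decode/compare sequence, assuming RSA as the public key algorithm. The key is built from two well-known Mersenne primes purely so the example is deterministic; it is not secure, and real systems use large random primes, a padding scheme (such as PKCS#1 or PSS) and a vetted library.

```python
import hashlib

# Toy key pair built from two known Mersenne primes (2**127 - 1 and 2**89 - 1).
# Illustration only: real keys use large random primes and a padding scheme.
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537                           # public exponent; (E, N) is the public key
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (needs Python 3.8+)


def message_hash(message: bytes, n: int) -> int:
    # hash the data and reduce it into the modulus range
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(message: bytes, d: int = D, n: int = N) -> int:
    # "encode" the hash with the private key
    return pow(message_hash(message, n), d, n)


def verify(message: bytes, signature: int, e: int = E, n: int = N) -> bool:
    # "decode" with the public key and compare to a freshly recomputed hash
    return pow(signature, e, n) == message_hash(message, n)


sig = sign(b"transfer 10 to bob")
print(verify(b"transfer 10 to bob", sig))   # True
print(verify(b"transfer 99 to bob", sig))   # False (message was modified)
```

Only `verify` needs the public key `(E, N)`; producing a signature that passes requires `D`.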

in standard business practice ... somebody registers their public key with destinations and/or relying parties ... in much the same way they might register a pin, password, SSN#, mother's maiden name, birth date, and/or any other authentication information. The advantage of registering a public key over some sort of static, shared-secret ... is that a public key can only be used to authenticate digital signatures .... it can't be used for impersonation (as part of generating a digital signature).

https://www.garlic.com/~lynn/subpubkey.html#certless

On-file, static, shared-secret authentication information can be used not only for authentication ... but also for impersonation.

https://www.garlic.com/~lynn/subintegrity.html#secrets
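A hypothetical sketch of the two kinds of on-file records at a relying party (the registries, account names and toy RSA key are invented for illustration). Anyone who copies the password record can replay it; copying the public key record only lets you verify. With these toy Mersenne primes the private exponent is trivially recoverable, so the argument assumes real key sizes, where recovering the private key from the public key is infeasible.

```python
import hashlib
import hmac

# Toy RSA key for one account holder (known Mersenne primes: illustration only).
P, Q, E = 2**127 - 1, 2**89 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # stays with the key holder, never registered


def message_hash(message: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


# Hypothetical on-file records at the relying party.
public_key_registry = {"acct-42": (E, N)}            # can verify, cannot sign
password_registry = {"acct-43": b"correct horse"}    # shared secret: can be replayed


def check_signature(account: str, message: bytes, signature: int) -> bool:
    e, n = public_key_registry[account]
    return pow(signature, e, n) == message_hash(message, n)


def check_password(account: str, password: bytes) -> bool:
    return hmac.compare_digest(password_registry[account], password)


# The account holder signs with the private exponent D.
sig = pow(message_hash(b"pay 10", N), D, N)
print(check_signature("acct-42", b"pay 10", sig))  # True

# Anyone who copies the password file can impersonate the account holder.
stolen = password_registry["acct-43"]
print(check_password("acct-43", stolen))           # True
```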

Digital certificates are a business process that addresses an offline email scenario from the early 80s ... where the recipient dials up their local (electronic) post office, exchanges email, hangs up ... and then is possibly faced with authenticating some first-time communication from a total stranger (with no recourse to either local or online information for obtaining what is needed). It is somewhat analogous to the "letters of credit" used in the sailing ship days.

A trusted party "binds" the public key with some other information into a "digital certificate" and then digitally signs the package called a digital certificate. The definition of a "trusted party" is that recipients have the public key of the "trusted party" in some local trusted public key repository (for instance, browsers are shipped with a list of trusted party public keys in an internal trusted public key repository).

The originator creates a message or document of some sort, digitally signs the information and then packages up the 1) document, 2) digital signature, and 3) digital certificate (containing some binding of their public key to other information) and transmits it.

The recipient/relying-party eventually gets the package composed of the three pieces. The recipient looks up the trusted party's public key in their trusted public key repository, and validates the digital signature on the enclosed digital certificate. If the digital certificate is valid, they then check the "bound" information in the digital certificate to see if it relates to anything at all they are interested in. If so, then they can take the sender's public key (included in the digital certificate) and validate the digital signature on the message. If that all seems to be valid ... they then make certain assumptions about the content of the actual message.
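The recipient's steps can be sketched end to end under stated assumptions: toy textbook-RSA keys from known Mersenne primes (insecure, for illustration only), a made-up JSON certificate layout, and hypothetical names such as "Example CA" and `alice@example.com`. Real certificates are X.509/ASN.1 structures checked by a vetted library.

```python
import hashlib
import json


def make_key(p: int, q: int, e: int = 65537):
    """Toy RSA key from two known primes (illustration only; never use in practice)."""
    n = p * q
    d = pow(e, -1, (p - 1) * (q - 1))
    return (e, n), d


def h(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def sign(data: bytes, d: int, n: int) -> int:
    return pow(h(data, n), d, n)


def verify(data: bytes, sig: int, pub) -> bool:
    e, n = pub
    return pow(sig, e, n) == h(data, n)


def canonical(body: dict) -> bytes:
    # stable byte encoding so the CA and the recipient hash exactly the same bytes
    return json.dumps(body, sort_keys=True).encode()


ca_pub, ca_priv = make_key(2**127 - 1, 2**107 - 1)    # trusted party (CA)
snd_pub, snd_priv = make_key(2**89 - 1, 2**61 - 1)    # originator

# Recipient's local trusted public key repository (e.g. shipped with a browser).
trusted_repository = {"Example CA": ca_pub}

# The CA binds the originator's public key to other information and signs the package.
cert_body = {"issuer": "Example CA", "subject": "alice@example.com",
             "e": snd_pub[0], "n": snd_pub[1]}
certificate = {"body": cert_body, "sig": sign(canonical(cert_body), ca_priv, ca_pub[1])}

# The originator signs the document and sends (document, signature, certificate).
document = b"first contact from a total stranger"
package = (document, sign(document, snd_priv, snd_pub[1]), certificate)


def recipient_accepts(package, interested_in: str) -> bool:
    doc, doc_sig, cert = package
    ca_key = trusted_repository.get(cert["body"]["issuer"])
    if ca_key is None or not verify(canonical(cert["body"]), cert["sig"], ca_key):
        return False                          # not signed by a trusted party
    if cert["body"]["subject"] != interested_in:
        return False                          # bound information is of no interest
    sender_key = (cert["body"]["e"], cert["body"]["n"])
    return verify(doc, doc_sig, sender_key)   # finally check the message signature


print(recipient_accepts(package, "alice@example.com"))  # True
```

With a prior relationship, the recipient would simply keep `snd_pub` in its own repository and skip the certificate steps entirely.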

In normal business operations ... where there is prior relationship between the sender and the receiver ... the receiver will tend to already have authentication information about the sender in a local trusted (public key) repository (and not have to resort to trust redirection thru the use of trusted party public keys and digital certificates).

Another scenario is that in the early 90s, there were x.509 identity digital certificates where the trusted parties (or certification authorities ... i.e. CAs) were looking at grossly overloading the "bound" information in the digital certificates with enormous amounts of personal information. This was in part because the CAs didn't have a good idea what future relying parties might need in the way of information about individuals that they were communicating with.

You started to see some retrenchment of this in the mid-90s ... where institutions were starting to realize that x.509 identity digital certificates grossly overloaded with personal information represented significant privacy and liability issues. Somewhat as a result there was some retrenchment to relying-party-only certificates

https://www.garlic.com/~lynn/subpubkey.html#rpo

which contained little more information than the individual's public key and some sort of account number or other database index. The actual database contained the real information. However, it is trivial to show that such relying-party-only certificates not only violate the original purpose of digital certificates, but are also redundant and superfluous ... aka the relying party registers the individual's public key in their trusted repository along with all of the individual's other information. Since all of the individual's information (including their public key) is already in a trusted repository at the relying party, having an individual repeatedly transmit a digital certificate containing a small, stale, static subset of the same information is redundant and superfluous.

In some of the scenarios involving relying-party-only certificates from the mid-90s it was even worse than redundant and superfluous. One of the scenarios involved a specification for digitally signed payment transactions with an appended relying-party-only digital certificate. Typical payment transactions are on the order of 60-80 bytes. The typical relying-party-only digital certificates involved 4k-12k bytes. Not only were the stale, static relying-party-only digital certificates redundant and superfluous, they also would represent a factor of one hundred times payload bloat for the payment transaction network (increasing the size of payment transactions by one hundred times for redundant and superfluous stale, static information).
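The "one hundred times" figure can be checked from the sizes quoted above. A small worked calculation, assuming "k" means 1024 bytes and measuring bloat as the size of the transaction with the certificate appended relative to the bare transaction:

```python
# Sizes from the text: payment transaction 60-80 bytes, RPO certificate 4k-12k bytes.
TXN_BYTES = (60, 80)
CERT_BYTES = (4 * 1024, 12 * 1024)  # assuming "k" means 1024 bytes


def bloat(txn: int, cert: int) -> float:
    # transaction + appended certificate, relative to the bare transaction
    return (txn + cert) / txn


low = bloat(max(TXN_BYTES), min(CERT_BYTES))   # largest transaction, smallest certificate
high = bloat(min(TXN_BYTES), max(CERT_BYTES))  # smallest transaction, largest certificate
print(f"{low:.0f}x to {high:.0f}x")            # 52x to 206x
```

The range (roughly 50x to 200x) brackets the "on the order of one hundred times" estimate.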

From: Anne & Lynn Wheeler <lynn@garlic.com> Newsgroups: sci.crypt.research Subject: Re: derive key from password Date: 24 Jun 2005 10:07:32 -0600

machiel@braindamage.nl (machiel) writes:

there is also some work on longer term derived key material ... where rather than doing a unique derived key per transaction ... there are long term derived keys. in the DUKPT case, clear-text information from the transaction is part of the process deriving the key. The longer term derived keys tend to use some sort of account number. You might find such implementations in transit systems. There is a master key for the whole infrastructure ... and each transit token then has a unique account number with an associated derived key. The transit system may store data in each token using the token-specific derived key. brute force on a token specific key ... doesn't put the whole infrastructure at risk.
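A minimal sketch of long-term per-token derived keys, assuming HMAC-SHA256 as the derivation function (a stand-in chosen for the example; DUKPT and real transit schemes specify their own derivations). `MASTER_KEY`, the account numbers and the use of a MAC to protect token data are all hypothetical. The back end never stores per-token keys; it re-derives them from the account number, and recovering one token's key by brute force reveals neither the master key nor any other token's key.

```python
import hashlib
import hmac
import os

# Hypothetical master key held only by the transit operator's back end.
MASTER_KEY = os.urandom(32)


def derive_token_key(master_key: bytes, account_number: str) -> bytes:
    # one long-term key per token, derived from the master key and the account number
    # (HMAC-SHA256 is a stand-in; real schemes define their own derivation)
    return hmac.new(master_key, account_number.encode(), hashlib.sha256).digest()


def tag_token_data(token_key: bytes, data: bytes) -> bytes:
    # protect data stored on the token with that token's own key (here: a MAC)
    return hmac.new(token_key, data, hashlib.sha256).digest()


k1 = derive_token_key(MASTER_KEY, "0000-0001")
k2 = derive_token_key(MASTER_KEY, "0000-0002")
stored = b"fare-balance=20"
tag = tag_token_data(k1, stored)

# Later, a reader re-derives the key from the account number and checks the tag.
ok = hmac.compare_digest(tag, tag_token_data(derive_token_key(MASTER_KEY, "0000-0001"), stored))
print(ok)  # True
```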

some discussion of attacks against the RFC 2289 one-time password system (uses iterative hashes of a passphrase)

https://www.garlic.com/~lynn/2003n.html#1 public key vs passwd authentication?

https://www.garlic.com/~lynn/2003n.html#2 public key vs passwd authentication?

https://www.garlic.com/~lynn/2003n.html#3 public key vs passwd authentication?

https://www.garlic.com/~lynn/2005i.html#50 XOR passphrase with a constant
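The iterative-hash idea can be sketched as follows. This is a simplified illustration, not RFC 2289 itself: it uses full SHA-256 output, whereas RFC 2289 folds its hash output (e.g. MD5) down to 64 bits and adds a word encoding; the seed and passphrase are made-up values. The server stores only the last accepted value and expects the preimage next time.

```python
import hashlib


def h(x: bytes) -> bytes:
    # simplified: full SHA-256 output (RFC 2289 folds its hash output to 64 bits)
    return hashlib.sha256(x).digest()


def otp(seed: bytes, passphrase: bytes, count: int) -> bytes:
    # apply the hash `count` times to seed || passphrase
    x = seed + passphrase
    for _ in range(count):
        x = h(x)
    return x


class Server:
    """Stores only the last accepted value in the chain, never the passphrase."""

    def __init__(self, last: bytes, count: int) -> None:
        self.last = last
        self.count = count

    def verify(self, candidate: bytes) -> bool:
        if h(candidate) != self.last:
            return False
        # accept, and require the next preimage (count - 1) at the next login
        self.last, self.count = candidate, self.count - 1
        return True


seed, secret, n = b"ke1234", b"this is a test", 100
server = Server(otp(seed, secret, n), n)         # set up once with the n-th hash
print(server.verify(otp(seed, secret, n - 1)))   # True
print(server.verify(otp(seed, secret, n - 1)))   # False: replayed value
print(server.verify(otp(seed, secret, n - 2)))   # True
```

Note that an eavesdropper who sees the seed, the count and one OTP can test guessed passphrases offline, so a weak passphrase is the main exposure in a scheme like this.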

from my rfc index

https://www.garlic.com/~lynn/rfcietff.htm

https://www.garlic.com/~lynn/rfcidx5.htm#1760

1760 I

The S/KEY One-Time Password System, Haller N., 1995/02/15 (12pp) (.txt=31124) (Refs 1320, 1704) (Ref'ed By 1938, 2222, 2229, 2289, 2945, 4082)

in the RFC summary, clicking on the ".txt=nnnn" field retrieves the actual RFC.

https://www.garlic.com/~lynn/rfcidx6.htm#1938

1938 -

A One-Time Password System, Haller N., Metz C., 1996/05/14 (18pp) (.txt=44844) (Obsoleted by 2289) (Refs 1320, 1321, 1704, 1760) (Ref'ed By 2243, 2284, 2828)

https://www.garlic.com/~lynn/rfcidx7.htm#2289

2289 S

A One-Time Password System, Haller N., Metz C., Nesser P., Straw M., 1998/02/26 (25pp) (.txt=56495) (STD-61) (Obsoletes 1938) (Refs 1320, 1321, 1704, 1760, 1825, 1826, 1827) (Ref'ed By 2444, 2808, 3552, 3631, 3748, 3888) (ONE-PASS)

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Jack Kilby dead Newsgroups: alt.folklore.computers Date: 24 Jun 2005 12:28:18 -0600

hancock4 writes:

i've also seen what i consider more science, technology and engineering go into the manufacturing of a product ... than might go into the product itself. literature tends to have lots of stuff about various product technologies ... but not necessarily a whole lot about product manufacturing technology.

i once worked on a product in the mid-70s that was canceled (before announce) because it only showed $9B revenue over five years and was below the minimum threshold requirement of $10B over five years.

...

or have multi-megapixel CCDs. in the mid-80s, I got asked to spend time on what was then called Berkeley 10m (now called Keck 10m .. and they have built a second one). at the time, as part of the effort, they were testing 200x200 (40k pixels) ccd array at lick observatory ... and some talk about maybe being able to get a 400x400 (160k pixels) for testing. there was an industry rumor that possibly spielberg might have a 2kx2k ccd that he was testing.

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: who invented CONFIG/SYS? Newsgroups: comp.sys.tandy,alt.folklore.computers Date: 24 Jun 2005 14:26:49 -0600

noone writes:

for some tandy topic drift, the original document formatter/processor done on cms at the science center

https://www.garlic.com/~lynn/subtopic.html#545tech

was called script and used runoff-like "dot" commands. in '69, "G", "M", and "L" invented GML at the science center

https://www.garlic.com/~lynn/submain.html#sgml

(and of course they had to come up with a name that matched their initials). it was later standardized in iso as SGML and later begat HTML, XML, FSML, SAML, etc.

univ. of waterloo did a cms script clone that was in use on cms at cern ... and is the evolutionary path to html .. recent reference in afc to the UofW and cern connection:

https://www.garlic.com/~lynn/2005k.html#58 Book on computer architecture for beginners

an IBM SE (system engineer) out of the LA branch office, in the late 70s, did a cms script clone and sold it on tandy machines (some of the references seem to indicate it is still available):

https://www.garlic.com/~lynn/2000e.html#0 What good and old text formatter are there ?

https://www.garlic.com/~lynn/2000e.html#20 Is Al Gore The Father of the Internet?^

https://www.garlic.com/~lynn/2002b.html#46 ... the need for a Museum of Computer Software

https://www.garlic.com/~lynn/2002h.html#73 Where did text file line ending characters begin?

https://www.garlic.com/~lynn/2002p.html#54 Newbie: Two quesions about mainframes

https://www.garlic.com/~lynn/2003.html#40 InfiniBand Group Sharply, Evenly Divided

https://www.garlic.com/~lynn/2004o.html#5 Integer types for 128-bit addressing

https://www.garlic.com/~lynn/2005.html#46 8086 memory space

for total topic drift ... current w3c offices are only a couple blocks from the old science center location at 545 tech. sq.

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 07:48:28 -0600

"Anders Rundgren" writes:

1) stale, static, year old information (say about whether a financial account may or may not have existed)

and

2) real-time response based on real-time and aggregated information whether they were being paid.

... would relying parties prefer to have stale, static year old information ... or would they prefer to have a real time answer whether or not they were being paid.

The issue is that OCSP goes to all the trouble of having a real-time service responding yes/no to whether the stale, static information is still current ... but doesn't provide a yes/no response to whether the relying party is actually being paid.

The contention is that going to all the trouble of having a real-time operation ... the yes/no response to being paid or not ... is of significantly more value to a relying party than whether or not some stale, static information was still valid.

the analogy is that you have a strip mall ... that has a bunch of retail stores. there is an appliance operation, a dry goods operation and an identification operation. you go into the appliance operation, buy an appliance and present a card ... that card initiates an online transaction, which checks your financial worth and recent transactions and returns to the merchant (the relying party) a guarantee that they will be (and possibly already have been) paid.

you then go into the identity operation and present a card ... the digital certificate is retrieved by the operation ... it does an OCSP check to see if the certificate is still valid and then verifies a digital signature. then you walk out of the store (random acts of gratuitous identification).

the issue, of course, is that very few verification or identification things are done just for the sake of doing them .. they are almost always done within the context of performing some other operation. the assertion has always been that the verification of stale, static information is only useful to the relying party when they have no recourse to more valuable, real-time information (and/or, more recently, the stale, static paradigm has tried to move into the no-value market niche, where the no-value operation can't justify the cost of a real-time operation)

you very seldom have acts of gratuitous identification occurring ... they are occurring within some context. furthermore there are a huge number of operations where identification is superfluous to the objective of the operation ... which may be primarily the exchange of value (as the object of the operation), and identification is truly redundant and superfluous (as can be demonstrated when anonymous cash can be used in lieu of financial-institution-based exchange of value).

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 08:43:24 -0600

"Anders Rundgren" writes:

It would appear to be a descaling of PKI ... since there is only "one cert per bank".

It also has some number of operations that could be considered antithetical to the PKI design point. The consumer bank and the consumer have a predefined relationship. It is possible for the consumer bank to ship their public key for direct installation in the consumer's trusted public key repository.

The PKI design point has trusted third party CAs ... installing their public key in the consumer's trusted public key repository ... the original model from the original electronic commerce

https://www.garlic.com/~lynn/aadsm5.htm#asrn2

https://www.garlic.com/~lynn/aadsm5.htm#asrn3

with this thing called SSL.

The CAs then digitally signed digital certificates for operations that the consumer had no prior relationship with. The consumer could validate the digital certificates with the "CA" public keys on-file in their local trusted public key repository (possibly manufactured and integrated into their application, like a browser).

For predefined relationship between a consumer and their financial institution ... they can exchange public keys and store them in their respective trusted public key repositories (a financial institution can provide the consumer some automation that assists in such an operation).

PKI would appear to actually make the existing infrastructure less secure ... rather than the consumer directly trusting their financial institution ... the consumer would rely on a TTP CA to provide all their trust about their own consumer financial institution.

In the mid-90s there was work done on PKI for payment transactions.

One of the things learned from the early 90s x.509 identity certificates ... was that they appeared to represent significant privacy and liability issues. As a result many institutions retrenched to relying-party-only digital certificates ...

https://www.garlic.com/~lynn/subpubkey.html#rpo

that contained little more than some sort of database lookup value (like an account number) and a public key. however, it was trivial to demonstrate such certificates were redundant and superfluous. One possible reason for the ease in demonstrating that such stale, static certificates were redundant and superfluous was that they appeared to totally violate the basic PKI design point, aka requiring an independent, trusted third party to establish trust between two entities that never previously had any interaction.

For two parties that have a pre-existing relationship, it is possible for them to directly exchange public keys and store them in their respective trusted public key repositories .... and not have to rely on a trusted third party to tell them whether they should trust each other. In the case where a consumer's financial institution is the only entity with a public/private key pair ... it is possible for the consumer to obtain the public key of their trusted financial institution ... without needing to rely on some independent third party to provide them with trust.

The other issue from the mid-90s in the PKI-oriented payment transaction specification ... was that besides using redundant and superfluous stale, static certificates ... they also represented enormous payload bloat for the financial infrastructure. The typical iso 8583 payment transaction is on the order of 60-80 bytes. The RPO-certificate overhead for the operation was on the order of 4k to 12k bytes. The stale, static, redundant and superfluous digital certificate overhead represented an enormous, one hundred times increase in payload bloat.

Another question ... are you saying that the complete transaction goes via this new path ... or does the existing real-time iso 8583 transaction have to be performed in addition to this new real-time function (also being performed, at least doubling the number and overhead for real-time operations).

The existing iso 8583 operations go as straight-through processing in a single real-time round trip. Does the introduction of these new operations improve on the efficiency of that existing single round-trip, straight-through processing?

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 09:11:27 -0600

with respect to iso 8583 payment network trust and single round-trip, straight thru processing.

part of the issue is that a PKI is redundant and superfluous since the parties don't need to rely on a trusted third party to provide trust between anonymous strangers that have never before met. in some sense, the pre-existing relationship and pre-existing trust allow for more efficient, single round-trip, straight through processing ... w/o having to go through a trust discovery process for every transaction (authentication should be sufficient).

in the normal operation, a merchant financial institution has a contractual relationship with merchants ... for which the merchant financial institution also takes some amount of financial liability. one of the well-used examples is the airline industry, both loved and somewhat feared by merchant financial institutions. There are a lot of high value transactions ... but there is also the prospect of the airline going bankrupt ... in the past this has represented something like $20m (or more) in outstanding airline tickets that the merchant financial institution had to make good on.

In a manner similar to the merchant financial institution and the merchant, there is also a pre-existing contractual relationship between a consumer and the consumer's financial institution (with the consumer's financial institution also accepting liability for their consumers). Again, no trusted third party PKI is required to establish trust on every operation that goes on between the consumer and the consumer's financial institution.

Over both the merchant financial institutions and the consumer financial institutions are the associations ... where there are pre-existing contractual relationships between the associations and the financial institutions. Again, there is no requirement for a trusted third party PKI to provide for a trust relationship on every transaction between the financial institutions and the associations.

A trusted third party PKI has no role in such an environment because there are pre-existing contractual, trust relationships already in place ... making a trusted third party PKI redundant and superfluous.

So not only is there an end-to-end contractual trust chain that follows from the merchant, to the merchant financial institution, to the associations, to the consumer financial institution, to the consumer ... this pre-existing end-to-end contractual trust chain can be relied upon to improve efficiency so that the whole trust establishment process doesn't have to be re-executed on every transaction ... allowing for single round-trip straight through processing.

The existing issue ... doesn't have so much to do with establishing trust relationships (the objective of TTP CAs & PKIs); it is the simpler problem of improving the authentication technology (once trust has already been established ... operations can rely on simpler authentication events ... rather than having to repeatedly re-establish the basis for identification and trust) ... it has to do with the vulnerabilities and exploits associated with the existing authentication technology in use.

It is possible to simply improve on the integrity of the authentication technology .... w/o having to introduce the complexity and expense of repeatedly having to re-establish trust for every operation.

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 09:34:21 -0600

Anne & Lynn Wheeler writes:

one of the reasons that it is unlikely that the real transaction will go via the new path directly from the merchant to the consumer's financial institution (except in the "on-us" scenario where the same financial institution represents both the consumer and the merchant) is that the merchant financial institution has an interest in real-time tracking of the merchant activities (aka the merchant financial institution is liable for what the merchant does, in much the same way that the consumer financial institution is liable for consumer transactions).

having the merchant substitute a direct transaction with the consumer financial institution would cut the merchant financial institution out of the single round-trip, straight-through process path. this would only be likely if the consumer financial institution were to assume liability not only for the consumer but also for the merchant (as in the "on-us" transaction scenario, aka "on-us" is defined as the situation where the same financial institution represents both the merchant and the consumer in the transaction).
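A toy Python sketch of the routing point above. The party names and the `route` and `tracks_merchant` helpers are hypothetical, and real authorization networks involve more participants (processors, switches); the sketch only shows who sits in the single round-trip path, and why a direct merchant-to-consumer-institution hop would drop the merchant's institution unless the transaction is "on-us".

```python
from typing import List


def route(merchant_fi: str, consumer_fi: str) -> List[str]:
    """Parties a single round-trip authorization passes through (toy model)."""
    if merchant_fi == consumer_fi:
        # "on-us": one institution represents both merchant and consumer and
        # carries the liability for both, so it can authorize internally.
        return ["merchant", merchant_fi]
    # Normal case: the merchant's institution stays in the path because it is
    # liable for the merchant; the association connects the two institutions.
    return ["merchant", merchant_fi, "association", consumer_fi]


def tracks_merchant(path: List[str], merchant_fi: str) -> bool:
    """True if the merchant's institution sees the transaction in real time."""
    return merchant_fi in path


if __name__ == "__main__":
    normal = route("bank-a", "bank-b")
    on_us = route("bank-a", "bank-a")
    direct = ["merchant", "bank-b"]  # hypothetical direct hop to the consumer's bank
    print(normal, on_us)
    print(tracks_merchant(normal, "bank-a"), tracks_merchant(direct, "bank-a"))
```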

ongoing posts in this thread:

https://www.garlic.com/~lynn/2005i.html#12 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#13 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#14 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#17 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#21 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#23 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#24 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005i.html#26 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005k.html#60 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005l.html#1 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005l.html#2 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005l.html#3 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005l.html#11 The Worth of Verisign's Brand

https://www.garlic.com/~lynn/2005l.html#12 The Worth of Verisign's Brand

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 09:51:51 -0600

oh, and just for the fun of it ... past threads where we've exchanged posts regarding the nature of

1) pre-existing, established contractual trust relationships

vis-a-vis

2) dynamic establishment of trust on every transaction with the aid of a trusted third party PKI certification authority

and, in the case of pre-existing, contractual trust relationships, whether or not PKI certification authorities were redundant and superfluous for also establishing the trust relationship

https://www.garlic.com/~lynn/aepay11.htm#70 Confusing Authentication and Identiification? (addenda)

https://www.garlic.com/~lynn/aepay12.htm#1 Confusing business process, payment, authentication and identification

https://www.garlic.com/~lynn/aadsm12.htm#22 draft-ietf-pkix-warranty-ext-01

https://www.garlic.com/~lynn/aadsm12.htm#41 I-D ACTION:draft-ietf-pkix-sim-00.txt

https://www.garlic.com/~lynn/aadsm12.htm#45 draft-ietf-pkix-warranty-extn-01.txt

https://www.garlic.com/~lynn/aadsm12.htm#48 draft-ietf-pkix-warranty-extn-01.txt

https://www.garlic.com/~lynn/aadsm12.htm#54 TTPs & AADS Was: First Data Unit Says It's Untangling Authentication

https://www.garlic.com/~lynn/aadsm17.htm#9 Setting X.509 Policy Data in IE, IIS, Outlook

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Newsgroups (Was Another OS/390 to z/OS 1.4 migration Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: 25 Jun 2005 13:12:34 -0600

Eric-PHMining@ibm-main.lst (Eric Bielefeld) writes:

the internal network was larger than the arpanet/internet from just about the start up until around summer of '85.

https://www.garlic.com/~lynn/subnetwork.html#internalnet

at the great switch over from host/imp arpanet to internetworking protocol on 1/1/83 ... the arpanet was around 250 nodes. by comparison, not too long afterwards, the internal network passed 1000 nodes:

https://www.garlic.com/~lynn/internet.htm#22

i've claimed that one of the possible reasons was that the major internal networking nodes had a form of gateway functionality built into every node ... which the arpanet/internet didn't get until the 1/1/83 cut-over to internetworking protocol.

during this period in the early 80s ... there was some growing internal anxiety about this emerging internal networking prevalence ... that had largely grown up from the grassroots.

there were all kinds of efforts formed to try and study and understand what was happening. one such effort even brought in hiltz and turoff (the network nation) to help study what was going on.

also, there was a researcher assigned to sit in the back of my office. they took notes on how i communicated face-to-face (also going to meetings with me) and on the phone ... and they also had access to the contents of all my incoming and outgoing email as well as logs of all my instant messages. this went on for 9 months ... the report also turned into a stanford phd thesis (joint with language and computer AI) ... as well as material for subsequent papers and books. some references are included in a collection of postings on computer mediated communication

https://www.garlic.com/~lynn/subnetwork.html#cmc

one of the stats was that supposedly for the 9 month period, that I exchanged email with an avg. of 275-some people per week (well before the days of spam).

later in the 80s ... there was the nsf network backbone RFP. we weren't allowed to bid ... but we got an nsf study that reported that the backbone that we were operating was at least five years ahead of all bid submissions to build the nsfnet backbone.

https://www.garlic.com/~lynn/internet.htm#0

https://www.garlic.com/~lynn/subnetwork.html#hsdt

the nsf network backbone could be considered the progenitor of the modern internet .... actually deploying a backbone for supporting network of networks (aka an operational characteristic that goes along with the internetworking protocol technology).

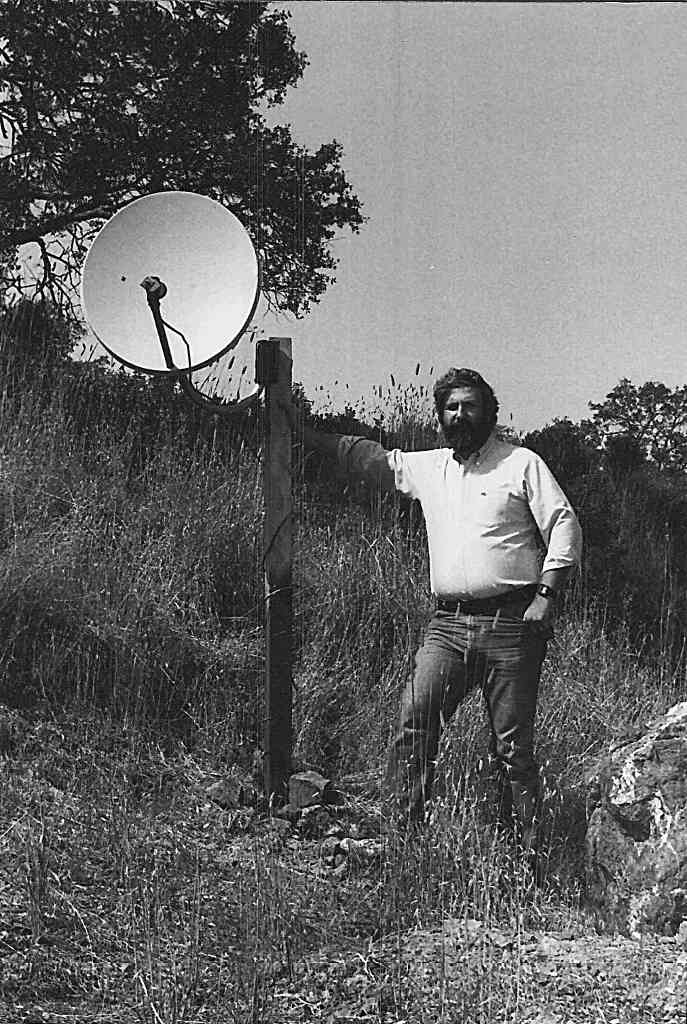

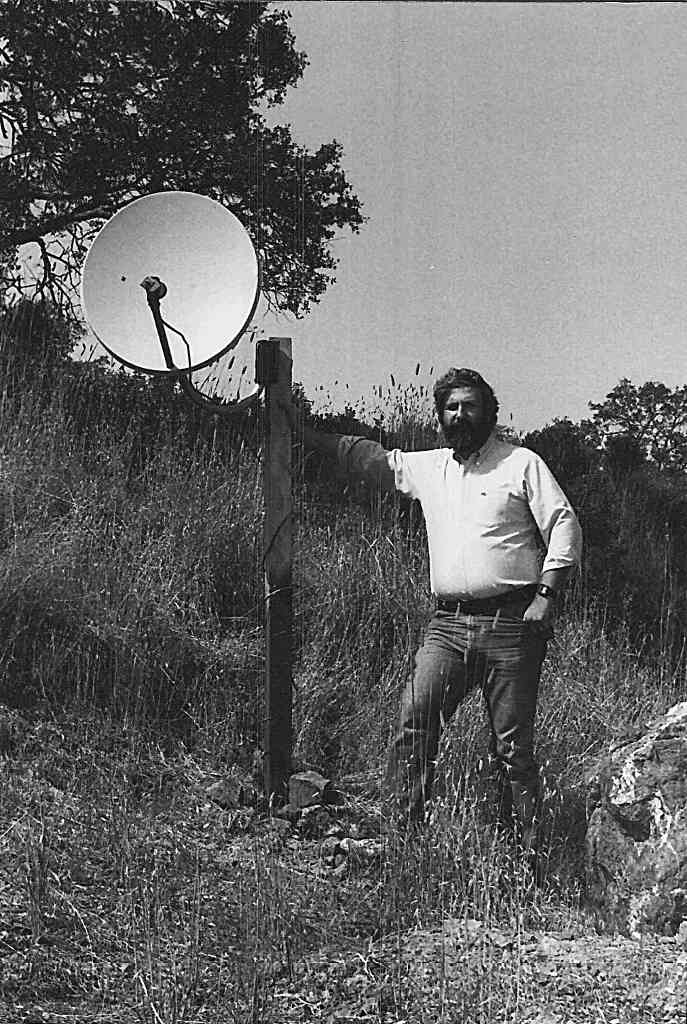

note that into the early and mid-90s ... many of the newsgroups were still riding the usenet/uucp rails (not yet having moved to the internet in any significant way) ... with people having either a direct usenet feed or access via some BBS that had a usenet feed. Circa 93, I co-authored an article for boardwatch (bbs industry mag) about drivers I had done for a full usenet satellite broadcast feed.

the "ISPs" of this era were typically offering shell accounts and/or UUCP accounts (predating PPP and tcp/ip connectivity).

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 13:35:26 -0600

"Anders Rundgren" writes:

as mentioned ... it is unlikely that 3D (going directly from the merchant to the consumer financial institution) is actually replacing the existing payment message transport ... unless it is actually suggesting that the merchant financial institution is no longer involved representing the merchant ... and that the consumer financial institutions will be assuming all liability responsibility for the merchant.

furthermore, if you study the existing infrastructure ... not only does the federation of payments already exist ... but there are long-term contractual trust vehicles in place that support that federation of payments (between merchant, merchant financial institution, association, consumer financial institution, and consumer).

if it isn't replacing the existing real-time, online, single round-trip, straight-through processing ... that directly involves all the financially responsible parties ... then presumably it is just adding a second, online, real-time transaction to an existing online, real-time transaction? (doubling the transaction and processing overhead).

one of the things that kindergarten security 101 usually teaches is that if you bifurcate a transaction operation in such a way ... you may be opening up unnecessary security and fraud exposures ... in addition to possibly doubling the transaction and processing overhead.

now, the design point for the stale, static, PKI model was for establishing trust for a relying party that had no other recourse about first time communication with a party where no previous relationship existed. Supposedly 3d (assuming that it is just adding a second realtime, online transaction to an already existing, realtime online transaction) is doubling the number and overhead of online, realtime transactions .... in addition to managing to craft in some stale, static PKI processing.

the AADS model doesn't do anything about federation or non-federation of payments. AADS simply provides improved authentication technology integrated with standard business operations:

https://www.garlic.com/~lynn/x959.html#aads

There have been some significant protocols defined over the past several years ... where authentication was done as an independent operation ... totally separate from doing authentication on the transaction itself. In all such cases that I know of, it has been possible to demonstrate man-in-the-middle (MITM) attacks

https://www.garlic.com/~lynn/subintegrity.html#mitm

where authentication is done separately from the actual transaction.
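A minimal Python sketch of the difference between authenticating a session and authenticating the transaction itself. HMAC-SHA256 is swapped in for the digital signature that x9.59 actually calls for, purely so the sketch runs on the standard library; the key, account value and helper names are hypothetical. In (a) only the login is authenticated, so a man-in-the-middle can rewrite the transaction undetected; in (b) the authentication covers the transaction, so any change fails verification.

```python
import hashlib
import hmac
import json

# Hypothetical key standing in for the consumer's signing key. x9.59 uses a
# digital signature; HMAC is used here only so the sketch needs no extra libraries.
KEY = b"hypothetical-consumer-key"


def authenticate(data: bytes) -> str:
    return hmac.new(KEY, data, hashlib.sha256).hexdigest()


def verify(data: bytes, tag: str) -> bool:
    return hmac.compare_digest(authenticate(data), tag)


def encode(txn: dict) -> bytes:
    # Canonical encoding so the same transaction always yields the same bytes.
    return json.dumps(txn, sort_keys=True).encode()


txn = {"account": "hypothetical-123", "amount": 100}
tampered = dict(txn, amount=10_000)  # a MITM rewrites the amount in transit

# (a) Authentication as a separate step: the login checks out, but nothing
#     ties the (now tampered) transaction back to the consumer.
session_tag = authenticate(b"login")
session_ok = verify(b"login", session_tag)  # True, tampering goes unnoticed

# (b) Authentication bound to the transaction itself.
txn_tag = authenticate(encode(txn))
original_ok = verify(encode(txn), txn_tag)       # True
tampered_ok = verify(encode(tampered), txn_tag)  # False, alteration detected

if __name__ == "__main__":
    print(session_ok, original_ok, tampered_ok)  # prints: True True False
```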

in the mid-90s the x9a10 financial standards working group was tasked with preserving the integrity of the financial infrastructure for all retail payments ... and came up with x9.59

https://www.garlic.com/~lynn/x959.html#x959

https://www.garlic.com/~lynn/subpubkey.html#privacy

which simply states that the transaction is directly authenticated. some recent posts (in a totally different thread) going into some number of infrastructure vulnerabilities and the x9.59 financial standard countermeasures:

https://www.garlic.com/~lynn/aadsm19.htm#17 What happened with the session fixation bug?

https://www.garlic.com/~lynn/aadsm19.htm#32 Using Corporate Logos to Beat ID Theft

https://www.garlic.com/~lynn/aadsm19.htm#38 massive data theft at MasterCard processor

https://www.garlic.com/~lynn/aadsm19.htm#39 massive data theft at MasterCard processor

https://www.garlic.com/~lynn/aadsm19.htm#40 massive data theft at MasterCard processor

https://www.garlic.com/~lynn/aadsm19.htm#44 massive data theft at MasterCard processor

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 25 Jun 2005 14:23:43 -0600

"Anders Rundgren" writes:

there have been some threads about having defense-in-depth.

the counter argument to defense-in-depth ... is that a lot of the defense-in-depth strategies drastically increase the complexity of the infrastructure ... and frequently, it is complexity itself that opens up vulnerabilities and exploits.

the countermeasure to complexity vulnerabilities and exploits frequently is KISS ... where simpler actually wins out over defense-in-depth and more complex. In part, defense-in-depth, while possibly creating overlapping layers ... frequently also creates cracks between such layers that allow the crooks to slip through.

a couple of past threads mentioning defense-in-depth

https://www.garlic.com/~lynn/aepay11.htm#0 identity, fingerprint, from comp.risks

https://www.garlic.com/~lynn/2002j.html#40 Beginner question on Security

https://www.garlic.com/~lynn/aadsm19.htm#27 Citibank discloses private information to improve security

https://www.garlic.com/~lynn/2005b.html#45 [Lit.] Buffer overruns

numerous past posts mentioning KISS:

https://www.garlic.com/~lynn/aadsm2.htm#mcomfort Human Nature

https://www.garlic.com/~lynn/aadsm3.htm#kiss1 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss2 Common misconceptions, was Re: KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp-00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss3 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss4 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss5 Common misconceptions, was Re: KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss6 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss7 KISS for PKIX. (Was: RE: ASN.1 vs XML (used to be RE: I-D ACTION :draft-ietf-pkix-scvp- 00.txt))

https://www.garlic.com/~lynn/aadsm3.htm#kiss8 KISS for PKIX

https://www.garlic.com/~lynn/aadsm3.htm#kiss9 KISS for PKIX .... password/digital signature

https://www.garlic.com/~lynn/aadsm3.htm#kiss10 KISS for PKIX. (authentication/authorization seperation)

https://www.garlic.com/~lynn/aadsm5.htm#liex509 Lie in X.BlaBla...

https://www.garlic.com/~lynn/aadsm7.htm#3dsecure 3D Secure Vulnerabilities?

https://www.garlic.com/~lynn/aadsm8.htm#softpki10 Software for PKI

https://www.garlic.com/~lynn/aepay3.htm#gaping gaping holes in security

https://www.garlic.com/~lynn/aepay7.htm#nonrep3 non-repudiation, was Re: crypto flaw in secure mail standards

https://www.garlic.com/~lynn/aepay7.htm#3dsecure4 3D Secure Vulnerabilities? Photo ID's and Payment Infrastructure

https://www.garlic.com/~lynn/aadsm10.htm#boyd AN AGILITY-BASED OODA MODEL FOR THE e-COMMERCE/e-BUSINESS ENTERPRISE

https://www.garlic.com/~lynn/aadsm11.htm#10 Federated Identity Management: Sorting out the possibilities

https://www.garlic.com/~lynn/aadsm11.htm#30 Proposal: A replacement for 3D Secure

https://www.garlic.com/~lynn/aadsm12.htm#19 TCPA not virtualizable during ownership change (Re: Overcoming the potential downside of TCPA)

https://www.garlic.com/~lynn/aadsm12.htm#54 TTPs & AADS Was: First Data Unit Says It's Untangling Authentication

https://www.garlic.com/~lynn/aadsm13.htm#16 A challenge

https://www.garlic.com/~lynn/aadsm13.htm#20 surrogate/agent addenda (long)

https://www.garlic.com/~lynn/aadsm15.htm#19 Simple SSL/TLS - Some Questions

https://www.garlic.com/~lynn/aadsm15.htm#20 Simple SSL/TLS - Some Questions

https://www.garlic.com/~lynn/aadsm15.htm#21 Simple SSL/TLS - Some Questions

https://www.garlic.com/~lynn/aadsm15.htm#39 FAQ: e-Signatures and Payments

https://www.garlic.com/~lynn/aadsm15.htm#40 FAQ: e-Signatures and Payments

https://www.garlic.com/~lynn/aadsm16.htm#1 FAQ: e-Signatures and Payments

https://www.garlic.com/~lynn/aadsm16.htm#10 Difference between TCPA-Hardware and a smart card (was: example:secure computing kernel needed)

https://www.garlic.com/~lynn/aadsm16.htm#12 Difference between TCPA-Hardware and a smart card (was: example: secure computing kernel needed)

https://www.garlic.com/~lynn/aadsm17.htm#0 Difference between TCPA-Hardware and a smart card (was: example: secure computing kernel needed)

https://www.garlic.com/~lynn/aadsm17.htm#41 Yahoo releases internet standard draft for using DNS as public key server

https://www.garlic.com/~lynn/aadsm17.htm#60 Using crypto against Phishing, Spoofing and Spamming

https://www.garlic.com/~lynn/aadsmail.htm#comfort AADS & X9.59 performance and algorithm key sizes

https://www.garlic.com/~lynn/aepay10.htm#76 Invisible Ink, E-signatures slow to broadly catch on (addenda)

https://www.garlic.com/~lynn/aepay10.htm#77 Invisible Ink, E-signatures slow to broadly catch on (addenda)

https://www.garlic.com/~lynn/aepay11.htm#73 Account Numbers. Was: Confusing Authentication and Identiification? (addenda)

https://www.garlic.com/~lynn/99.html#228 Attacks on a PKI

https://www.garlic.com/~lynn/2001.html#18 Disk caching and file systems. Disk history...people forget

https://www.garlic.com/~lynn/2001l.html#1 Why is UNIX semi-immune to viral infection?

https://www.garlic.com/~lynn/2001l.html#3 SUNW at $8 good buy?

https://www.garlic.com/~lynn/2002b.html#22 Infiniband's impact was Re: Intel's 64-bit strategy

https://www.garlic.com/~lynn/2002b.html#44 PDP-10 Archive migration plan

https://www.garlic.com/~lynn/2002b.html#59 Computer Naming Conventions

https://www.garlic.com/~lynn/2002c.html#15 Opinion on smartcard security requested

https://www.garlic.com/~lynn/2002d.html#0 VAX, M68K complex instructions (was Re: Did Intel Bite Off MoreThan It Can Chew?)

https://www.garlic.com/~lynn/2002d.html#1 OS Workloads : Interactive etc

https://www.garlic.com/~lynn/2002e.html#26 Crazy idea: has it been done?

https://www.garlic.com/~lynn/2002e.html#29 Crazy idea: has it been done?

https://www.garlic.com/~lynn/2002i.html#62 subjective Q. - what's the most secure OS?

https://www.garlic.com/~lynn/2002k.html#11 Serious vulnerablity in several common SSL implementations?

https://www.garlic.com/~lynn/2002k.html#43 how to build tamper-proof unix server?

https://www.garlic.com/~lynn/2002k.html#44 how to build tamper-proof unix server?

https://www.garlic.com/~lynn/2002m.html#20 A new e-commerce security proposal

https://www.garlic.com/~lynn/2002m.html#27 Root certificate definition

https://www.garlic.com/~lynn/2002p.html#23 Cost of computing in 1958?

https://www.garlic.com/~lynn/2003b.html#45 hyperblock drift, was filesystem structure (long warning)

https://www.garlic.com/~lynn/2003b.html#46 internal network drift (was filesystem structure)

https://www.garlic.com/~lynn/2003c.html#66 FBA suggestion was Re: "average" DASD Blocksize

https://www.garlic.com/~lynn/2003d.html#14 OT: Attaining Perfection

https://www.garlic.com/~lynn/2003h.html#42 IBM says AMD dead in 5yrs ... -- Microsoft Monopoly vs

https://www.garlic.com/~lynn/2003.html#60 MIDAS

https://www.garlic.com/~lynn/2003m.html#33 MAD Programming Language

https://www.garlic.com/~lynn/2003n.html#37 Cray to commercialize Red Storm

https://www.garlic.com/~lynn/2004c.html#26 Moribund TSO/E

https://www.garlic.com/~lynn/2004e.html#26 The attack of the killer mainframes

https://www.garlic.com/~lynn/2004e.html#30 The attack of the killer mainframes

https://www.garlic.com/~lynn/2004f.html#58 Infiniband - practicalities for small clusters

https://www.garlic.com/~lynn/2004f.html#60 Infiniband - practicalities for small clusters

https://www.garlic.com/~lynn/2004g.html#24 |d|i|g|i|t|a|l| questions

https://www.garlic.com/~lynn/2004h.html#51 New Method for Authenticated Public Key Exchange without Digital Certificates

https://www.garlic.com/~lynn/2004q.html#50 [Lit.] Buffer overruns

https://www.garlic.com/~lynn/2005.html#10 The Soul of Barb's New Machine

https://www.garlic.com/~lynn/2005.html#12 The Soul of Barb's New Machine

https://www.garlic.com/~lynn/2005c.html#22 [Lit.] Buffer overruns

https://www.garlic.com/~lynn/aadsm19.htm#27 Citibank discloses private information to improve security

https://www.garlic.com/~lynn/2005i.html#19 Improving Authentication on the Internet

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Bank of America - On Line Banking *NOT* Secure? Newsgroups: sci.crypt Date: 25 Jun 2005 15:24:21 -0600

"John E. Hadstate" writes:

1) spoofed website and/or MITM attack

2) eavesdropping

there have been mechanisms that allow key exchange that don't require certificates & CAs ... that then would allow encrypted sessions as a countermeasure for eavesdropping.

the SSL domain name certificates were supposed to ensure that the domain name that you typed in for the URL ... matched the domain name provided in an SSL domain name certificate (from the server)

https://www.garlic.com/~lynn/subpubkey.html#sslcert

and that was subsequently leveraged to provide key exchange with the valid end-point and end-to-end encryption.

the problem was that a lot of merchants ... considering the original SSL target for e-commerce

https://www.garlic.com/~lynn/aadsm5.htm#asrn2

https://www.garlic.com/~lynn/aadsm5.htm#asrn3

... found that they got something like five times the thruput using non-SSL. The result is that the merchants avoided using SSL & https where eavesdropping wasn't a concern ... reserving it solely for eavesdropping-sensitive operations. in the e-commerce scenario, that typically meant the user would eventually click on a "check-out" or "pay" button, which, in turn, invoked SSL for the payment phase.

the problem was that the URL the user provided was never checked against the certificate of the site the user was visiting. So if the user happened to be dealing with a spoofed site ... when they finally got to the "pay" button ... the "pay" button generated a URL (on the user's behalf), and the URL that the spoofed site provided as part of the "pay" button was highly likely to match whatever was in the SSL domain name certificate from the server that the user was then directed to.
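A simplified Python sketch of that gap. It treats the SSL check as an exact, case-insensitive host-name comparison (real TLS host-name verification also handles wildcards and subjectAltName lists), and all URLs and domains are hypothetical. The check passes because the certificate is compared against the URL the spoofed page handed over, not the one the user typed.

```python
from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Lowercased host name of a URL ("" if there is none)."""
    return (urlsplit(url).hostname or "").lower()


def cert_matches(url: str, cert_domain: str) -> bool:
    """Simplified SSL check: exact host match, no wildcard handling."""
    return host_of(url) == cert_domain.lower()


typed_url = "http://shop.example.com/"            # what the user typed (hypothetical)
pay_url = "https://pay.spoofed.example/checkout"  # supplied by the spoofed page's "pay" button
cert_domain = "pay.spoofed.example"               # certificate the crook validly obtained

# What effectively gets checked: the page-supplied URL against the certificate.
checked = cert_matches(pay_url, cert_domain)     # True, the SSL check passes
# What would expose the spoof: the URL the user originally entered.
intended = cert_matches(typed_url, cert_domain)  # False, mismatch

if __name__ == "__main__":
    print(checked, intended)  # prints: True False
```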

the issue is that if there really is a spoofed site vulnerability and the user might happen to be visiting a spoofed site (which is in large part the justification for SSL, ssl domain name certificates, certification authorities, etc) ... then nothing such a suspect site does or provides should be trusted ... including any javascript or other html related stuff that invokes ssl (as an eavesdropping countermeasure) ... since it may also point to another fraudulent site (where the crooks are keeping other crooks from eavesdropping on their spoofed communication).

spoofed site technology can either be a straight spoofed site ... its own site with all its own files providing the look & feel of the real site. A spoofed site might also be done as a man-in-the-middle attack ... where the spoofed site is actually acting as a middle man between the end-user and the real site ... although possibly subtly modifying communication passing thru:

https://www.garlic.com/~lynn/subintegrity.html#mitm

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: Newsgroups (Was Another OS/390 to z/OS 1.4 migration Newsgroups: bit.listserv.ibm-main,alt.folklore.computers Date: 25 Jun 2005 21:30:29 -0600

David Scheidt writes:

re:

https://www.garlic.com/~lynn/2005l.html#16 Newsgroups (Was Another OS/390 to z/OS 1.4 migration

old usenet newsgroup posting from the feed:

Path: wheeler!pagesat!olivea!hal.com!darkstar.UCSC.EDU!osr

From: vern@daffy.ee.lbl.gov (Vern Paxson)

Newsgroups: comp.os.research

Subject: Paper on wide-area TCP growth trends available for ftp

Date: 13 May 1993 17:52:04 GMT

Lines: 34

Approved: comp-os-research@ftp.cse.ucsc.edu

Message-ID: <1su1s4INNsdj@darkstar.UCSC.EDU>

NNTP-Posting-Host: ftp.cse.ucsc.edu

Originator: osr@ftp

*** EOOH ***

From: vern@daffy.ee.lbl.gov (Vern Paxson)

Newsgroups: comp.os.research

Subject: Paper on wide-area TCP growth trends available for ftp

Date: 13 May 1993 17:52:04 GMT

Originator: osr@ftp

The following paper is now available via anonymous ftp to ftp.ee.lbl.gov.

Retrieve WAN-TCP-growth-trends.ps.Z (about 100KB):

Growth Trends in Wide-Area TCP Connections

Vern Paxson

Lawrence Berkeley Laboratory and

EECS Division, University of California, Berkeley

vern@ee.lbl.gov

We analyze the growth of a medium-sized research laboratory's wide-area TCP connections over a period of more than two years. Our data consisted of six month-long traces of all TCP connections made between the site and the rest of the world. We find that {\em smtp\/}, {\em ftp\/}, and {\em X11} traffic all exhibited exponential growth in the number of connections and bytes transferred, at rates significantly greater than that at which the site's overall computing resources grew; that individual users increasingly affected the site's traffic profile by making wide-area connections from background scripts; that the proportion of local computers participating in wide-area traffic outpaces the site's overall growth; that use of the network by individual computers appears to be constant for some protocols ({\em telnet}) and growing exponentially for others ({\em ftp\/}, {\em smtp\/}); and that wide-area traffic geography is diverse and dynamic.

If you have trouble printing it let me know and I'll mail you hardcopy.

Vern

Vern Paxson vern@ee.lbl.gov

Systems Engineering ucbvax!ee.lbl.gov!vern

Lawrence Berkeley Laboratory (510) 486-7504

backyard full usenet feed

--

Anne & Lynn Wheeler | https://www.garlic.com/~lynn/

From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 26 Jun 2005 08:30:30 -0600

"Anders Rundgren" writes:

for their financial institution in their trusted public key store.

this also would eliminate many of the bank site spoofing vulnerabilities ... recent discussion

https://www.garlic.com/~lynn/2005l.html#19

in the above ... it discusses various kinds of spoofing and MITM-attacks ... where the end user is provided with a URL ... rather than entering it themselves. Then you have an exploit of SSL ... which only verifies the domain name in the entered URL against the domain name in the supplied certificate. If you aren't entering the URL ... but it is being provided by an attacker ... then they are likely to provide a URL that corresponds to a certificate that they have valid rights for. This has been a long-recognized characteristic.

https://www.garlic.com/~lynn/subpubkey.html#sslcert

A consumer, having vetted a bank's public key for storing in their own trusted public key repository ... then can use that vetted public key for future communication with their financial institution ... and not be subject to vulnerabilities and exploits of an externally provided (certificate-based) public key that has had no vetting ... other than it is a valid public key and belongs to somebody.
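A minimal Python sketch of such a consumer-side trusted public key repository, assuming the bank's key is vetted out of band and stored as a SHA-256 fingerprint. The names (`trusted_keys`, `enroll`, `is_trusted`) and the key bytes are hypothetical placeholders, and a real client would also verify signatures made with the stored key. The point is that a key presented later is accepted only if it is the vetted one, whether or not it arrives with an otherwise valid certificate.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical trusted public key repository:
# relationship name -> SHA-256 fingerprint of the key vetted out of band.
trusted_keys: Dict[str, str] = {}


def fingerprint(public_key_bytes: bytes) -> str:
    return hashlib.sha256(public_key_bytes).hexdigest()


def enroll(name: str, public_key_bytes: bytes) -> None:
    """Record a key the consumer has vetted directly with the institution."""
    trusted_keys[name] = fingerprint(public_key_bytes)


def is_trusted(name: str, presented_key_bytes: bytes) -> bool:
    """Accept only the previously vetted key; any certificate that arrives
    with the presented key plays no part in the decision."""
    expected = trusted_keys.get(name)
    return expected is not None and hmac.compare_digest(
        expected, fingerprint(presented_key_bytes)
    )


if __name__ == "__main__":
    bank_key = b"hypothetical bank public key bytes"
    enroll("my-bank", bank_key)
    print(is_trusted("my-bank", bank_key))                      # True
    print(is_trusted("my-bank", b"attacker key, valid cert"))   # False
```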

The purpose for PKI has been to allow relying parties to establish some level of trust when dealing with first-time encounters with entities that are otherwise complete strangers ... and the relying party has no other recourse for accessing information to establish trust. The design point dates somewhat from the early 80s, when there was a much lower level of online connectivity and relying parties frequently operated in an offline environment.

With the ubiquitous proliferation of the internet, those offline pockets are being drastically reduced. Somewhat as a result, some PKIs have attempted to move into the no-value market segment ... where a relying party is online ... but the value of the operation doesn't justify performing an online transaction. The issue is that as the internet becomes much more pervasive ... the cost of online internet operations is radically dropping ... which in turn is drastically reducing the no-value situations that can't justify an online operation.

Presumably in the 3d secure PKI scenario, it has a financial institution's CC-specific certificate that is targeted specifically at relying parties that have had no prior dealings with that financial institution(*?*).

Presumably this implies the merchant as a relying party in dealing with the consumer's financial institution (the other alternative is possibly the consumer as a relying party in dealing with the merchant's financial institution ... but I have seen nothing that seems to support that scenario). Now, going back to well before the rise of PKI to address the offline trust scenario ... the payment card industry had online transactions that went from the merchant through a federated infrastructure all the way to the consumer's financial institution and back as straight through processing. This included contractual trust establishment with various kinds of obligations and liabilities ... that included the consumer's financial institution assuming certain liabilities on behalf of the consumer and the merchant's financial institution assuming certain liabilities on behalf of the merchant. Possibly because of these obligations ... both financial institutions have an interest in the transaction passing through them.

As mentioned before ... it appears that 3d secure doesn't eliminate the existing online real-time transaction that conforms to some significant contractual and liability obligations. 3d secure appears to add an additional, 2nd online transaction ... allowing the merchant to communicate directly with the consumer's financial institution (bypassing the established contractual and liability obligations involving the merchant's financial institution). Furthermore, 3d secure appears to include a PKI certificate ... targeted at establishing trust where the relying party has no other recourse for trust establishment. However, the merchant is already covered under the contractual trust operations that have been standard business practice for decades.

So what possible motivation is there for a merchant to add additional overhead and processing?


From: Anne & Lynn Wheeler <lynn@garlic.com> Subject: Re: The Worth of Verisign's Brand Newsgroups: netscape.public.mozilla.crypto Date: 26 Jun 2005 09:36:46 -0600

"Anders Rundgren" writes:

the issue in the x9.59 standards work

https://www.garlic.com/~lynn/x959.html#x959

https://www.garlic.com/~lynn/subpubkey.html#privacy

was the threats and vulnerabilities in the authentication technology, and that its integrity level has possibly eroded over the past 30 years or so (in the face of technology advances).

the primary issue was the authentication of the consumer for the transaction. this cropped up in two different aspects

1) is the consumer originating the transaction really the entity that is authorized to perform transactions against the specific account?

2) somewhat because of authentication integrity issues, starting at least in the 90s, there was an increase in skimming and harvesting ... either direct skimming of the magnetic stripe information or harvesting of account transaction databases ... both supporting later counterfeiting activities enabling generation of fraudulent transactions.

https://www.garlic.com/~lynn/subintegrity.html#harvest

the countermeasure cornerstones of x9.59 then became:

1) use technology for drastically increasing the authentication strength directly associated with transactions ... as a countermeasure to not being sure that the entity originating the transaction is really the entity authorized to perform transactions for that account.

2) a business rule that PANs (account numbers) used in strongly authenticated transactions aren't allowed to be used in poorly authenticated or unauthenticated transactions (i.e. don't authorize a poorly authenticated transaction having a PAN that is identified for use only in strongly authenticated transactions). this is a countermeasure to the skimming/harvesting vulnerabilities and exploits.
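The second cornerstone is a business rule rather than cryptography. A minimal sketch of how an authorizer might enforce it, assuming a hypothetical set of PANs flagged as strong-authentication-only and a flag saying whether the incoming transaction carried strong (e.g. digital signature) authentication. `STRONG_AUTH_ONLY_PANS`, `Transaction` and `authorize` are illustrative names, not taken from the x9.59 text.

```python
from dataclasses import dataclass

# Hypothetical issuer-side list of PANs that may only be used in
# strongly authenticated transactions (illustrative value only).
STRONG_AUTH_ONLY_PANS = {"4000123412341234"}


@dataclass
class Transaction:
    pan: str
    amount_cents: int
    strongly_authenticated: bool  # e.g. carried a verified digital signature


def authorize(txn: Transaction) -> bool:
    """Decline any poorly authenticated transaction that uses a PAN
    flagged for strongly authenticated use only."""
    if txn.pan in STRONG_AUTH_ONLY_PANS and not txn.strongly_authenticated:
        # A skimmed or harvested PAN, replayed without the strong
        # authentication, is worthless to the crook.
        return False
    # Normal authorization checks (limits, account status, ...) would follow.
    return True
```

With the rule in place, a PAN harvested from a transaction database no longer supports counterfeit transactions on its own, regardless of how well or badly that database was protected.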

there was a joke with regard to the second countermeasure cornerstone: you could blanket the world in miles-deep cryptography and still not contain the skimming/harvesting activities. the second cornerstone simply removes any practical benefit that skimming/harvesting provides to crooks in support of fraudulent transactions. slightly related post on security proportional to risk:

https://www.garlic.com/~lynn/2001h.html#61

recent similar posting in another thread:

https://www.garlic.com/~lynn/2005k.html#23

https://www.garlic.com/~lynn/aadsm16.htm#20

having helped with the deployment of the e-commerce ssl based infrastructure

https://www.garlic.com/~lynn/aadsm5.htm#asrn2

https://www.garlic.com/~lynn/aadsm5.htm#asrn3

we recognized a large number of situations where PKIs that had originally been designed to address trust issues between relying parties and other entities that had no previous contact ... were being applied to environments that had long-term and well-established trust and relationship management infrastructures (aka if one has a relationship management infrastructure that provides long-term and detailed trust history about a specific relationship ... then a PKI becomes redundant and superfluous as a trust establishment mechanism).

In the AADS model

https://www.garlic.com/~lynn/x959.html#aads

involving certificate-less public key operation

https://www.garlic.com/~lynn/subpubkey.html#certless

we attempted to map publickey-based authentication technology into existing and long-term business processes and relationship management infrastructures.

the existing authentication landscape is largely shared-secret based

https://www.garlic.com/~lynn/subintegrity.html#secret

where the same information that is used for originating a transaction is also used for verifying a transaction. this opens up harvesting vulnerabilities and threats against the verification repositories.
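To make the shared-secret weakness concrete, a minimal sketch assuming a hypothetical verification repository that holds the raw static secret (say a PIN) for each account. Whoever harvests the repository holds exactly the value needed to originate a transaction that verifies. `verification_db` and `verify_shared_secret` are illustrative names, not any real system's API.

```python
import hmac

# Hypothetical verification repository: the issuer stores the very value
# (a static PIN here) that the customer presents when originating.
verification_db = {"acct-42": "1234"}


def verify_shared_secret(account: str, presented: str) -> bool:
    stored = verification_db.get(account)
    # compare_digest avoids timing leaks, but cannot fix the design flaw:
    # the verifier's data is the originator's credential.
    return stored is not None and hmac.compare_digest(stored, presented)


# An attacker harvests the repository (or skims the PIN in flight) ...
harvested = dict(verification_db)
# ... and can now originate a transaction that passes verification.
forged_ok = verify_shared_secret("acct-42", harvested["acct-42"])
```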

basically asymmetric cryptography is a technology involving pairs of keys; data encoded by one key is decoded by the other key.

a business process has been defined for asymmetric cryptography where one key of the pair is designated "public" and can be widely distributed. The other key of the pair is designated "private" and is kept confidential and never divulged.

a further business process has been defined called "digital signatures", where a hash of some message or document is encoded with a private key. later a relying party can recalculate the hash of the same message or document, decode the digital signature with the corresponding public key and compare the two hashes. if the two hashes are the same ... then the relying party can assume:

1) the message/document hasn't been modified since being digitally signed

2) something you have authentication, aka the originating entity has access to and use of the corresponding private key.
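The hash/encode/decode/compare steps above can be sketched with the Python standard library alone. This is textbook ("raw") RSA with no padding, using a key built from two publicly known Mersenne primes so that it runs without third-party packages; it is a toy that shows the data flow only, not a secure implementation (real systems use padded schemes such as RSA-PSS, or ECDSA, from a vetted library). The function names are illustrative.

```python
import hashlib

# Toy key pair from two well-known Mersenne primes. Anyone can factor n,
# so this illustrates the mechanics only and offers no security.
P = 2**521 - 1
Q = 2**607 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (Python 3.8+)

PUBLIC_KEY = (N, E)   # designated "public": may be widely distributed
PRIVATE_KEY = (N, D)  # designated "private": never divulged


def digest(message: bytes) -> int:
    """Hash the message/document and treat the hash as an integer."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def sign(message: bytes, private_key) -> int:
    """Encode the hash with the private key."""
    n, d = private_key
    return pow(digest(message), d, n)


def verify(message: bytes, signature: int, public_key) -> bool:
    """Decode the signature with the public key and compare to a fresh hash."""
    n, e = public_key
    return pow(signature, e, n) == digest(message)
```

Changing any byte of the message changes its SHA-256 hash, so the comparison fails (item 1); producing a signature that verifies requires the private exponent, which is the "something you have" of item 2.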

an additional business process was created called PKIs and certification authorities that was targeted at the environment where a relying party is dealing with first time communication with a stranger and has no other recourse for trust establishment about the total stranger. note however, that PKIs and certification authorities can be shown to be redundant and superfluous in environments where the relying party has long established business processes and trust/relationship management infrastructures for dealing with pre-existing relationships.

However, just because PKIs and certification authority business processes can be shown to be redundant and superfluous in most existing modern day business operations ... that doesn't preclude digital signature technology being used (in a certificate-less environment) as a stronger form of authentication (relying on existing and long-established relationship management processes for registering a public key in lieu of shared-secret based authentication material).

leveraging long-established relationship management infrastructures for registering public key authentication material in lieu of shared-secret authentication material (and use of public key oriented authentication) is a countermeasure to many kinds of harvesting and skimming vulnerabilities and threats. Many of the identity theft reports result from harvesting/skimming of common, static, shared-secret authentication material for later use in fraudulent transactions. The advantage of public key based authentication material is that while it can be used for authentication purposes, it doesn't have the shortcoming of also being usable for originating fraudulent transactions and/or impersonation.
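A minimal sketch of the certificate-less approach, assuming a hypothetical account record that simply stores the consumer's public key where a shared secret would otherwise be kept. It uses the same kind of toy textbook-RSA key (public Mersenne primes, no padding), so it illustrates only the data flow: the relying party finds the key through its existing relationship record, and no certificate is attached to or checked for the transaction. `accounts`, `sign` and `authenticate` are illustrative names.

```python
import hashlib

# Toy RSA key pair (public Mersenne primes, no padding): illustration only.
P, Q, E = 2**521 - 1, 2**607 - 1, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (Python 3.8+)


def _hash(msg: bytes) -> int:
    return int.from_bytes(hashlib.sha256(msg).digest(), "big")


def sign(msg: bytes) -> int:
    # Performed on the consumer side, e.g. in a token holding D.
    return pow(_hash(msg), D, N)


# Existing relationship management: the account record registers the
# public key in lieu of a PIN/password. No certificate is involved.
accounts = {"acct-42": {"public_key": (N, E)}}


def authenticate(account: str, msg: bytes, sig: int) -> bool:
    record = accounts.get(account)
    if record is None:
        return False
    n, e = record["public_key"]
    return pow(sig, e, n) == _hash(msg)

# With a real key pair (where n cannot be factored), harvesting this
# record yields only (n, e): enough to verify, not enough to sign a new
# transaction. In this toy, n is trivially factorable.
```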


From: lynn@garlic.com Newsgroups: netscape.public.mozilla.crypto Subject: Re: The Worth of Verisign's Brand Date: Mon, 27 Jun 2005 08:45:04 -0700

Anders Rundgren wrote:

in the mid-90s, one of the pki oriented payment structures had the financial institutions registering public keys and issuing relying-party-only certificates.

the issue wasn't with the registering of the public keys ... since the financial institutions have well-established relationship management infrastructures.

the problem was trying to mandate that a simple improvement in authentication technology be shackled to an extremely cumbersome, expensive, redundant and superfluous PKI infrastructure.

the other issue ... was that the horribly complex, heavyweight and expensive PKI infrastructure had limited their solution to only addressing eavesdropping of transactions in-flight ... which was already adequately addressed by the existing e-commerce SSL solution

https://www.garlic.com/~lynn/aadsm5.htm#asrn2

https://www.garlic.com/~lynn/aadsm5.htm#asrn3

https://www.garlic.com/~lynn/subpubkey.html#sslcert

and was providing no additional improvement in the integrity landscape.

so you have a simple and straightforward mechanism for a minor technology improvement in authentication ... shackled to a horribly complex, expensive, redundant and superfluous PKI operation which was providing no countermeasures to the major e-commerce threats and vulnerabilities beyond those of the existing deployed SSL solution.

Now if you were a business person and were given a choice between two solutions that both effectively addressed the same subset of e-commerce vulnerabilities and threats ... one, the relatively straightforward and simple SSL operation, and the other, a horribly complex, expensive, redundant and superfluous PKI operation .... which would you choose?

An additional issue with the horribly complex, expensive, redundant and superfluous PKI based solutions was the horrible payload bloat represented by the relying-party-only certificates

https://www.garlic.com/~lynn/subpubkey.html#rpo

the typical payment message payload size is on the order of 60-80 bytes ... the attached redundant and superfluous relying-party-only digital certificates represented a payload size on the order of 4k-12k bytes .... or a horrible payload bloat increase by a factor of one hundred times.
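The "factor of one hundred" can be checked against the quoted ranges. A quick sketch, assuming 1k = 1024 bytes (the text does not say which):

```python
# Figures quoted above: a 60-80 byte payment message versus 4k-12k bytes
# of relying-party-only certificate. Assumption: 1k = 1024 bytes.
MESSAGE_BYTES = (60, 80)
CERT_BYTES = (4 * 1024, 12 * 1024)

low_factor = CERT_BYTES[0] / MESSAGE_BYTES[1]   # smallest cert, largest message
high_factor = CERT_BYTES[1] / MESSAGE_BYTES[0]  # largest cert, smallest message
print(f"certificate bloat: {low_factor:.0f}x to {high_factor:.0f}x")
```

This prints `certificate bloat: 51x to 205x`, a range that brackets one hundred, so the figure works as an order-of-magnitude summary.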

As mentioned in the previous posting,

https://www.garlic.com/~lynn/2005l.html#22 The Worth of Verisign's Brand

the x9a10 financial standard working group, which was tasked with preserving the integrity of the financial infrastructure for all retail payments, actually attempted to address major additional threats and vulnerabilities with x9.59

https://www.garlic.com/~lynn/x959.html#x959

and there was actually a pilot project that was deployed for iso 8583 nacha trials ... see references at

https://www.garlic.com/~lynn/x959.html#aads

part of the market acceptance issue is that the marketplace has been so saturated with PKI oriented literature .... that if somebody mentions digital signature ... it appears to automatically bring forth images of horribly expensive, complex, redundant and superfluous PKI implementations.

From: lynn@garlic.com Newsgroups: netscape.public.mozilla.crypto Subject: Re: The Worth of Verisign's Brand Date: Mon, 27 Jun 2005 09:18:39 -0700

Anders Rundgren wrote:

is that there are absolutely no changes to existing infrastructures, business processes and/or message flows ... they all stay the same ... there is just a straightforward upgrade of the authentication technology (while not modifying existing infrastructures, business processes and/or message flows).

aggressive cost optimization for a digital-signature-only hardware token would result in a negligible difference between the fully-loaded roll-out costs for the current contactless RFID program and the fully-loaded costs for a nearly identical contactless digital signature program.

the advantage over some of the earlier pki-oriented payment rollouts

https://www.garlic.com/~lynn/2005l.html#23

is that in addition to addressing the eavesdropping vulnerability for data-in-flight (already addressed by the simpler SSL-based solution) ... it also provides countermeasures for impersonation vulnerabilities as well as numerous kinds of data breach and identity theft vulnerabilities.

https://www.garlic.com/~lynn/2005l.html#22