From: Lynn Wheeler <lynn@garlic.com> Subject: Dataprocessing Career Date: 09 Feb 2022 Blog: Facebook

re:

After transferring to San Jose Research, I got to wander around machine rooms/datacenters in silicon valley (both IBM and non-IBM), including disk engineering (bldg14) and product test (bldg15). They were running pre-scheduled, stand-alone, around-the-clock, 7x24 mainframe testing. They said that they had recently tried MVS, but it had a 15min mean-time-between-failure in that environment (requiring manual re-ipl). I offered to rewrite the I/O supervisor, making it bullet-proof and never fail, allowing any amount of on-demand, concurrent testing and greatly improving productivity. I then wrote an (internal IBM) research report about all the work and happened to mention the MVS 15min MTBF, which brought down the wrath of the MVS organization on my head (informally I was told that they tried to have me separated from the IBM company; when that didn't work, they tried to make my career as unpleasant as possible ... the joke was on them, they had to get in a long line of other people, and I was periodically being told that I had no career, promotions, and/or raises).

In the late 70s and early 80s, I was blamed for online computer conferencing (precursor to social media) on the internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s). It really took off in spring of 1981 when I distributed a trip report of a visit to Jim Gray at Tandem; only about 300 actually participated, but claims were that up to 25,000 were reading. We then printed six copies of 300 pages, with executive summary and summary of summary, packaged in Tandem 3-ring binders and sent them to the corporate executive committee (folklore is 5of6 wanted to fire me).

I was introduced to John Boyd about the same time and would sponsor his briefings at IBM (finding something of a kindred spirit) ... in the 50s as instructor at the USAF weapons school, he was referred to as "40sec Boyd" (challenge to all comers that he could beat them within 40sec), considered possibly the best fighter pilot in the world. He then invented E/M theory and used it to redo the original F15 design (cutting weight nearly in half), and used it for the YF16 & YF17 (which became the F16 & F18). By the time he passed in 1997, the USAF had pretty much disowned him and it was the Marines at Arlington. One of his quotes:

There are two career paths in front of you, and you have to choose which path you will follow. One path leads to promotions, titles, and positions of distinction.... The other path leads to doing things that are truly significant for the Air Force, but the rewards will quite often be a kick in the stomach because you may have to cross swords with the party line on occasion. You can't go down both paths, you have to choose. Do you want to be a man of distinction or do you want to do things that really influence the shape of the Air Force? To Be or To Do, that is the question.

... snip ...

getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

online computer conferencing

https://www.garlic.com/~lynn/subnetwork.html#cmc

internal network

https://www.garlic.com/~lynn/subnetwork.html#internalnet

Boyd posts & URLs

https://www.garlic.com/~lynn/subboyd.html

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: On why it's CR+LF and not LF+CR [ASR33] Newsgroups: alt.folklore.computers Date: Wed, 09 Feb 2022 10:48:05 -1000

David Lesher <wb8foz@panix.com> writes:

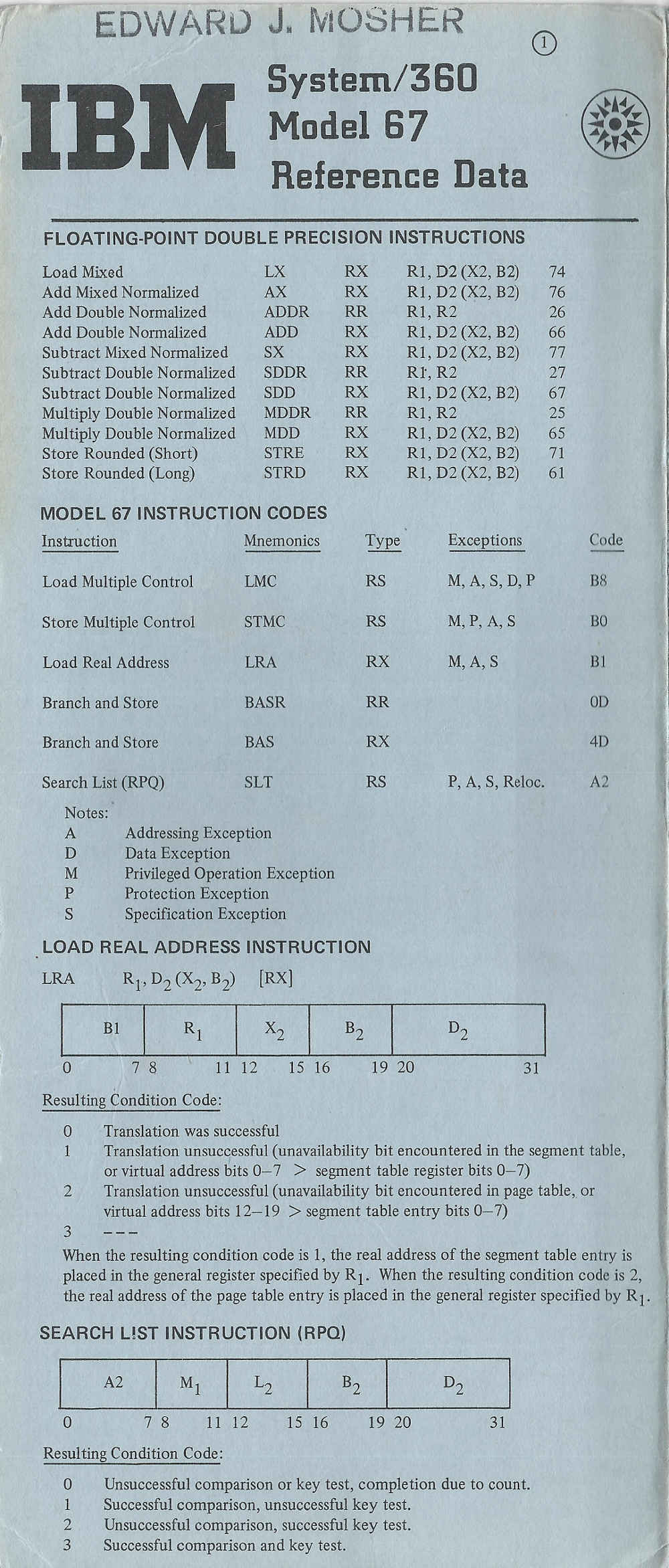

I mentioned recently that the univ. had been sold a 360/67 to replace its 709/1401, supposedly for tss/360, but TSS never came to production fruition, so it ran as a 360/65 with os/360. The IBM TSS/360 SE would do some testing on weekends (I sometimes had to share my 48hr weekend time).

Shortly after CP67 was delivered to the univ, I got to play with it on weekends (in addition to os/360 support). Very early on (before I started rewriting lots of CP67 code), the IBM SE and I put together a fortran edit/compile/execute benchmark with simulated users, his for TSS/360 and mine for CP67/CMS. His TSS benchmark with four users had worse interactive response and throughput than my CP67/CMS benchmark with 35 users.

Later at IBM, I did a paged-mapped filesystem for CP67/CMS and would explain that I learned what not to do from TSS/360. The (failed) Future System somewhat adopted its "single-level-store" from TSS/360 ... some FS details

http://www.jfsowa.com/computer/memo125.htm

... and I would periodically ridicule FS (in part for how they were doing "single-level-store") ... which wasn't exactly a career enhancing activity. Old quote from Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books, 1993

http://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

.... reference to the "Future System" project 1st half of the 70s:

and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrongheadedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive.

... snip ...

one of the final nails in the FS coffin was analysis by the IBM Houston Science Center that if 370/195 software was redone for an FS machine made out of the fastest available technology, it would have the throughput of a 370/145 (about a 30 times slowdown).

The death of FS also gave virtual memory filesystems a really bad reputation inside IBM ... regardless of how they were implemented.

trivia: AT&T had a contract with IBM for a stripped-down TSS/360 kernel referred to as SSUP ... for UNIX to be layered on top. Part of the issue is that mainframe hardware support required production/type-1 RAS&EREP for maint. It turns out that adding that level of support to UNIX was many times larger than doing a straight UNIX port to 370 (and layering UNIX on top of SSUP was significantly simpler).

This also came up for both Amdahl (gold/uts) and IBM (UCLA Locus for AIX/370) ... both running them under VM370 (providing the necessary type-1 RAS&EREP).

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

some more recent posts mentioning SSUP:

https://www.garlic.com/~lynn/2021k.html#64 1973 Holmdel IBM 370's

https://www.garlic.com/~lynn/2021k.html#63 1973 Holmdel IBM 370's

https://www.garlic.com/~lynn/2021e.html#83 Amdahl

https://www.garlic.com/~lynn/2020.html#33 IBM TSS

https://www.garlic.com/~lynn/2019d.html#121 IBM Acronyms

https://www.garlic.com/~lynn/2018d.html#93 tablets and desktops was Has Microsoft

https://www.garlic.com/~lynn/2017j.html#66 A Computer That Never Was: the IBM 7095

https://www.garlic.com/~lynn/2017g.html#102 SEX

https://www.garlic.com/~lynn/2017d.html#82 Mainframe operating systems?

https://www.garlic.com/~lynn/2017d.html#80 Mainframe operating systems?

https://www.garlic.com/~lynn/2017d.html#76 Mainframe operating systems?

https://www.garlic.com/~lynn/2017.html#20 {wtf} Tymshare SuperBasic Source Code

https://www.garlic.com/~lynn/2014j.html#17 The SDS 92, its place in history?

https://www.garlic.com/~lynn/2014f.html#74 Is end of mainframe near ?

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Dataprocessing Career Date: 09 Feb 2022 Blog: Facebook

re:

passthru drift ... well before IBM/PC & HLLAPI, there was PARASITE/STORY (done by the VMSG author; a very early prototype of VMSG was used by the PROFS group for their email client; PARASITE/STORY was a coding marvel) ... using simulated 3270s on the same mainframe or, via PVM, creating simulated 3270s elsewhere on the internal network. Description of parasite/story

https://www.garlic.com/~lynn/2001k.html#35

STORY example to log onto RETAIN and automagically retrieve PUT Bucket (using the YKT PVM/CCDN gateway)

https://www.garlic.com/~lynn/2001k.html#36

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Final Rules of Thumb on How Computing Affects Organizations and People Date: 09 Feb 2022 Blog: Facebook

Final Rules of Thumb on How Computing Affects Organizations and People

I would say "e-commerce" prevailed because it used the bank "card-not-present" model and processing (after leaving IBM, we were brought in as consultants to a small client/server startup that wanted to do payment transactions on their servers; the startup had also invented this technology they called "SSL" that they wanted to use ... we had responsibility for everything between their servers and the financial payment networks, and did a gateway that simulated one of the existing vendor protocols that was in common use by Las Vegas hotels and casinos).

e-commerce gateway

https://www.garlic.com/~lynn/subnetwork.html#gateway

Counter ... in the mid-90s, billions were being spent on redoing (mostly mainframe) financial software to implement straight through processing; some of it dated back to the 60s, and real-time had been added over the years ... but "settlement" was still being done in the overnight batch window. The problem in the 90s was that the overnight batch window was being shortened because of globalization ... which was also contributing to other workload increases, (both) resulting in the overnight batch window being exceeded. The straight through processing implementations were planning on using large numbers of killer micros with industry standard parallelization libraries. Warnings by me and others that the industry parallelization libraries had a hundred times the overhead of batch cobol were ignored ... until large pilots were going down in flames (increased overhead totally swamping the anticipated throughput increase from large numbers of killer micros).
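To make the "hundred times the overhead" warning concrete, here is a back-of-the-envelope Python sketch. Every number in it is an assumption for illustration, not a measurement from the pilots. Work is assumed to split perfectly across micros with no contention. Each micro's speed relative to the mainframe batch cobol path is a parameter (`micro_speed`). The parallelization library is assumed to multiply per-transaction cost by a fixed `overhead_factor`. Both helper functions are hypothetical.

```python
# Hypothetical model, not measured data: perfect scaling across micros,
# no contention, and a library that multiplies per-transaction cost by
# `overhead_factor` relative to the batch cobol baseline.

def relative_throughput(num_micros, overhead_factor, micro_speed=1.0):
    """Throughput relative to the batch cobol baseline (1.0)."""
    if num_micros <= 0 or overhead_factor <= 0 or micro_speed <= 0:
        raise ValueError("all parameters must be positive")
    # Each micro delivers micro_speed / overhead_factor of the baseline.
    return num_micros * micro_speed / overhead_factor


def micros_needed_to_break_even(overhead_factor, micro_speed=1.0):
    """Smallest number of micros whose combined throughput reaches the baseline."""
    n = 1
    while relative_throughput(n, overhead_factor, micro_speed) < 1.0:
        n += 1
    return n


if __name__ == "__main__":
    for n in (10, 50, 100, 200):
        print(f"{n:4d} micros -> {relative_throughput(n, 100):.2f}x baseline")
    print("break-even:", micros_needed_to_break_even(100), "micros")
```

Even under these generous assumptions, a 100x overhead means the first hundred equal-speed micros only buy back what the library costs. Any real contention or coordination cost pushes break-even further out.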

After the turn of the century, I was involved with somebody doing demos of something similar, but relying on a financial transaction language that generated fine-grain SQL statements that were easily parallelized by RDBMS clusters. Its throughput handled many times the transaction rates of the largest production operations and was dependent on 1) increases in micro performance and associated disks and 2) significant cluster RDBMS throughput optimization done by IBM and other RDBMS vendors. It was demo'ed to various industry financial bodies with high acceptance ... and then hit a brick wall. We were finally told that there were too many executives that still bore the scars of the failed 90s efforts.

had worked on RDBMS cluster-scale-up at IBM for HA/CMP Product

https://www.garlic.com/~lynn/subtopic.html#hacmp

some recent e-commerce posts

https://www.garlic.com/~lynn/2022.html#3 GML/SGML/HTML/Mosaic

https://www.garlic.com/~lynn/2019d.html#84 Steve King Devised an Insane Formula to Claim Undocumented Immigrants Are Taking Over America

https://www.garlic.com/~lynn/2019d.html#74 Employers escape sanctions, while the undocumented risk lives and prosecution

https://www.garlic.com/~lynn/2018f.html#119 What Minimum-Wage Foes Got Wrong About Seattle

https://www.garlic.com/~lynn/2018f.html#35 OT: Postal Service seeks record price hikes to bolster falling revenues

https://www.garlic.com/~lynn/2018d.html#58 We must stop bad bosses using migrant labour to drive down wages

https://www.garlic.com/~lynn/2018b.html#72 Doubts about the HR departments that require knowledge of technology that does not exist

https://www.garlic.com/~lynn/2018b.html#45 More Guns Do Not Stop More Crimes, Evidence Shows

https://www.garlic.com/~lynn/2018.html#106 Predicting the future in five years as seen from 1983

overnight batch window posts

https://www.garlic.com/~lynn/2022.html#23 Target Marketing

https://www.garlic.com/~lynn/2021k.html#123 Mainframe "Peak I/O" benchmark

https://www.garlic.com/~lynn/2021k.html#120 Computer Performance

https://www.garlic.com/~lynn/2021k.html#58 Card Associations

https://www.garlic.com/~lynn/2021j.html#30 VM370, 3081, and AT&T Long Lines

https://www.garlic.com/~lynn/2021i.html#87 UPS & PDUs

https://www.garlic.com/~lynn/2021i.html#10 A brief overview of IBM's new 7 nm Telum mainframe CPU

https://www.garlic.com/~lynn/2021g.html#18 IBM email migration disaster

https://www.garlic.com/~lynn/2021e.html#61 Performance Monitoring, Analysis, Simulation, etc

https://www.garlic.com/~lynn/2021c.html#61 MAINFRAME (4341) History

https://www.garlic.com/~lynn/2021b.html#4 Killer Micros

https://www.garlic.com/~lynn/2019e.html#155 Book on monopoly (IBM)

https://www.garlic.com/~lynn/2019c.html#80 IBM: Buying While Apathetaic

https://www.garlic.com/~lynn/2019c.html#11 mainframe hacking "success stories"?

https://www.garlic.com/~lynn/2019b.html#62 Cobol

https://www.garlic.com/~lynn/2018f.html#85 Douglas Engelbart, the forgotten hero of modern computing

https://www.garlic.com/~lynn/2018d.html#43 How IBM Was Left Behind

https://www.garlic.com/~lynn/2018d.html#2 Has Microsoft commuted suicide

https://www.garlic.com/~lynn/2018c.html#33 The Pentagon still uses computer software from 1958 to manage its contracts

https://www.garlic.com/~lynn/2018c.html#30 Bottlenecks and Capacity planning

--


From: Lynn Wheeler <lynn@garlic.com> Subject: SEC Set to Lower Massive Boom on Private Equity Industry Date: 10 Feb 2022 Blog: Facebook

SEC Set to Lower Massive Boom on Private Equity Industry

private equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: On why it's CR+LF and not LF+CR [ASR33] Newsgroups: alt.folklore.computers Date: Thu, 10 Feb 2022 08:07:27 -1000

Lynn Wheeler <lynn@garlic.com> writes:

... other RAS&EREP trivia/drift: when I transferred to san jose research in the late 70s, I got to wander around a lot of datacenters in silicon valley (both IBM&non-IBM), including disk engineering (bldg14) and disk product test (bldg15) across the street. They were running pre-scheduled, stand-alone, 7x24 mainframes for engineering testing. They mentioned that they had recently tried MVS, but it had a 15min mean-time-between-failure in that environment, requiring manual re-ipl (boot). I offered to rewrite the input/output supervisor, making it bullet proof and never fail, allowing any amount of on-demand, concurrent testing, greatly improving productivity. I then wrote an (internal) research report about the activity and happened to mention the MVS 15min MTBF ... bringing down the wrath of the MVS organization on my head.

getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Re: On why it's CR+LF and not LF+CR [ASR33] Newsgroups: alt.folklore.computers Date: Thu, 10 Feb 2022 11:47:34 -1000

Peter Flass <peter_flass@yahoo.com> writes:

... real topic drift ... In the late70s & early80s, I was blamed for online computer conferencing on the internal network ... it really took off in spring 1981 after I distributed a trip report of a visit to Jim Gray at Tandem. Only around 300 participated, but the claim was that upwards of 25,000 were reading. Six copies of about 300 pages were printed with executive summary and summary of summary, packaged in Tandem 3-ring binders and sent to the corporate executive committee (folklore is that 5of6 wanted to fire me) ... some of summary of summary:

• The perception of many technical people in IBM is that the company is rapidly heading for disaster. Furthermore, people fear that this movement will not be appreciated until it begins more directly to affect revenue, at which point recovery may be impossible

• Many technical people are extremely frustrated with their management and with the way things are going in IBM. To an increasing extent, people are reacting to this by leaving IBM. Most of the contributors to the present discussion would prefer to stay with IBM and see the problems rectified. However, there is increasing skepticism that correction is possible or likely, given the apparent lack of commitment by management to take action

• There is a widespread perception that IBM management has failed to understand how to manage technical people and high-technology development in an extremely competitive environment.

... it took another decade (1981-1992) ... IBM had gone into the red and was being reorganized into the 13 "baby blues" in preparation for breaking up the company .... the reference has gone behind a paywall, but mostly lives free at the wayback machine

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

may also work

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

we had already left IBM, but we get a call from the bowels of Armonk asking if we could help with the breakup of the company. Lots of business units were using supplier contracts with other units via MOUs. After the breakup, all of these contracts would be in different companies ... all of those MOUs would have to be cataloged and turned into their own contracts (however, before we get started, the board brings in a new CEO and reverses the breakup).

... previously, in my executive exit interview, was told they could have forgiven me for being wrong, but they never were going to forgive me for being right.

... the joke was somewhat on the MVS group ... they had to get in a long line of people that wished I was fired.

online computer conferencing posts

https://www.garlic.com/~lynn/subnetwork.html#cmc

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

IBM downfall/downturn posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

--


From: Lynn Wheeler <lynn@garlic.com> Subject: USENET still around Date: 11 Feb 2022 Blog: Facebook

usenet still around ... as well as gatewayed to google groups ... alt.folklore.computers

re:

https://www.garlic.com/~lynn/2022.html#127 On why it's CR+LF and not LF+CR [ASR33]

https://www.garlic.com/~lynn/2022b.html#1 On why it's CR+LF and not LF+CR [ASR33]

https://www.garlic.com/~lynn/2022b.html#5 On why it's CR+LF and not LF+CR [ASR33]

https://www.garlic.com/~lynn/2022b.html#6 On why it's CR+LF and not LF+CR [ASR33]

Other usenet trivia:

Leaving IBM, I had to turn in everything IBM, including an RS6000/320. I've mentioned before that the GPD/Adstar VP would periodically ask for help with some of his investments in distributed computing startups that would use IBM disks (a partial work-around to the communication group blocking all IBM mainframe distributed computing efforts). In any case, he has my (former) RS6000/320 given to me and delivered to my house.

Also, SGI (graphical workstations)

https://en.wikipedia.org/wiki/Silicon_Graphics

has bought MIPS (risc) Computers

https://en.wikipedia.org/wiki/MIPS_Technologies

and has gotten a new president. The new MIPS president asks me to 1) take home his executive SGI Indy (to configure) and then 2) keep it for him (when he leaves MIPS, I have to return it).

So now in my office at home I have an RS/6000 320, an SGI Indy and a couple of 486 PCs. Pagesat runs a pager service ... but is also offering a full satellite usenet feed

http://www.art.net/lile/pagesat/netnews.html

I get offered a deal ... if I do unix and dos drivers for the usenet satellite modem, and write an article about it for Boardwatch magazine

https://en.wikipedia.org/wiki/Boardwatch

I get a free installation at home with a full usenet feed. I also put up a "waffle" usenet bbs on one of the 486 PCs.

https://en.wikipedia.org/wiki/Waffle_(BBS_software)

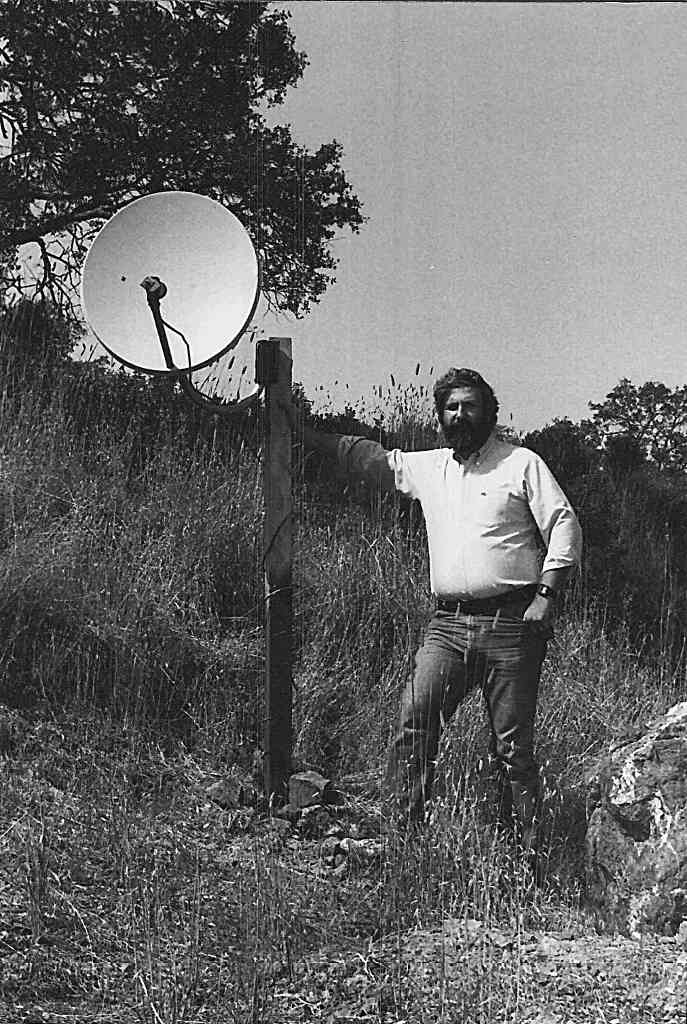

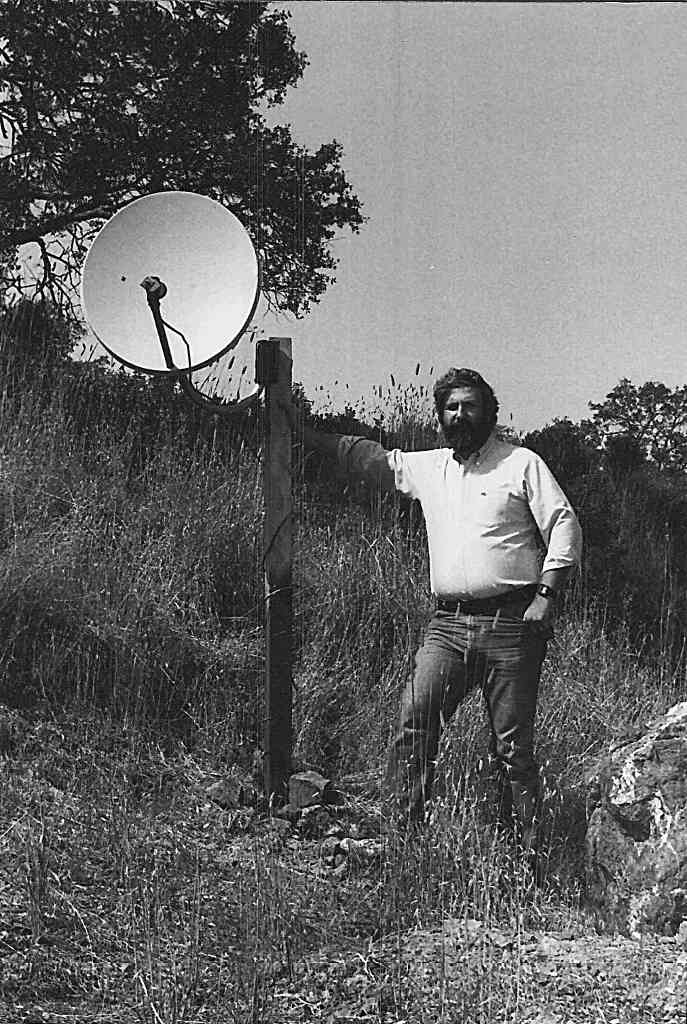

picture of me on the hill behind the house installing pagesat dish

https://www.garlic.com/~lynn/pagesat.jpg

periodically reposted: a senior disk engineer got a talk scheduled at the internal, world-wide, annual communication group conference in the late 80s, supposedly on 3174 performance, but opened the talk with the statement that the communication group was going to be responsible for the demise of the disk division. The issue was that the communication group had a stranglehold on datacenters with its corporate strategic ownership of everything that crossed the datacenter wall, and was fiercely fighting off client/server and distributed computing, trying to preserve their dumb terminal paradigm. The disk division was seeing a drop in disk sales with customers moving to more distributed-computing-friendly platforms. The disk division had come up with a number of solutions, but the communication group (with their corporate strategic datacenter stranglehold) would veto them.

posts about FS

https://www.garlic.com/~lynn/submain.html#futuresys

posts about playing disk engineer

https://www.garlic.com/~lynn/subtopic.html#disk

posts about online computer conferencing

https://www.garlic.com/~lynn/subnetwork.html#cmc

posts about communication group

https://www.garlic.com/~lynn/subnetwork.html#terminal

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Porting APL to CP67/CMS Date: 11 Feb 2022 Blog: Facebook

CSC also ported apl\360 to cp67/cms for cms\apl ... also did some of the early stuff for what shipped later to customers as VS/Repack ... it would trace instruction and storage use and do semi-automagic module reorg to improve performance in demand-paged virtual memory (also used by several internal IBM orgs as part of moving OS/360 software to VS1 and VS2).
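The post does not describe the VS/Repack algorithm itself. The following Python sketch only illustrates the general idea: trace which modules are referenced, then lay them out so that modules used together share pages.

Everything specific here is an assumption, including the greedy "most-affine next module" heuristic, the 4096-byte page size, and every function name. Each module is mapped to the page holding its first byte, so modules that straddle a page boundary are ignored.

```python
from collections import Counter

PAGE_SIZE = 4096  # assumed page size in bytes


def affinity(trace):
    """Count how often each unordered pair of modules is referenced back to back."""
    pairs = Counter()
    for a, b in zip(trace, trace[1:]):
        if a != b:
            pairs[frozenset((a, b))] += 1
    return pairs


def reorder(trace, sizes):
    """Hypothetical greedy layout: start with the most-referenced module, then
    repeatedly place the unplaced module with the highest affinity to the last
    one placed (ties broken by reference count, then name, for determinism)."""
    pairs = affinity(trace)
    counts = Counter(trace)
    unplaced = set(sizes)
    order = [max(unplaced, key=lambda m: (counts[m], m))]
    unplaced.remove(order[0])
    while unplaced:
        last = order[-1]
        nxt = max(unplaced, key=lambda m: (pairs[frozenset((last, m))], counts[m], m))
        order.append(nxt)
        unplaced.remove(nxt)
    return order


def page_map(order, sizes, page_size=PAGE_SIZE):
    """Lay modules out contiguously in `order`; map each to the page of its first byte."""
    pages, offset = {}, 0
    for m in order:
        pages[m] = offset // page_size
        offset += sizes[m]
    return pages


def page_switches(trace, pages):
    """Number of consecutive references that land on different pages."""
    return sum(pages[a] != pages[b] for a, b in zip(trace, trace[1:]))


if __name__ == "__main__":
    sizes = {"A": 2048, "B": 2048, "C": 2048, "D": 2048}
    trace = ["A", "C", "A", "C", "B", "D", "B", "D"]
    before = page_switches(trace, page_map(["A", "B", "C", "D"], sizes))
    layout = reorder(trace, sizes)
    after = page_switches(trace, page_map(layout, sizes))
    print(layout, before, after)  # ['D', 'B', 'C', 'A'] 7 1
```

In this toy trace, A and C are used together, as are B and D. The naive A,B,C,D layout crosses a page on almost every reference, while the affinity-based layout puts each pair on one page.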

We had 1403 printout of cms\apl storage use taped floor to ceiling down the halls ... time along the hallway (horizontal), storage address vertical ... it looked like an extreme saw tooth. APL\360 had 16kbyte workspaces that were swapped; storage management allocated a new storage location for every assignment and then, when it ran out of storage, garbage collected and compressed to low addresses. That didn't make any difference in a small swapped workspace ... but in a large demand-paged virtual memory it would quickly touch every virtual page before garbage collection ... and was guaranteed to cause page thrashing.
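A small Python simulation can show why allocating fresh storage on every assignment was harmless in a 16kbyte swapped workspace but thrashed in a large demand-paged one. This is a sketch under simplifying assumptions, not the APL\360 or CMS\APL code:

- pages are 4096 bytes,
- a fixed amount of live data sits compacted at the bottom of the workspace,
- every assignment produces a value of the same size,
- for the reworked case, reassignment reuses the variable's existing slot at address 0.

All function names are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def pages_of(start, length):
    """Page numbers covered by the byte range [start, start + length)."""
    return set(range(start // PAGE_SIZE, (start + length - 1) // PAGE_SIZE + 1))


def pages_touched_allocate_fresh(workspace_bytes, live_bytes, value_bytes, assignments):
    """Every assignment gets a new copy at the next free address; when the
    workspace is exhausted, garbage collection compacts the live data to low
    addresses and allocation restarts just above it (the "saw tooth")."""
    touched = pages_of(0, live_bytes)
    top = live_bytes
    for _ in range(assignments):
        if top + value_bytes > workspace_bytes:
            touched |= pages_of(0, live_bytes)  # compaction rewrites the live pages
            top = live_bytes
        touched |= pages_of(top, value_bytes)
        top += value_bytes
    return touched


def pages_touched_in_place(live_bytes, value_bytes, assignments):
    """Reworked scheme: a same-size reassignment overwrites the old storage,
    assumed here to start at address 0 inside the live data."""
    touched = pages_of(0, live_bytes)
    for _ in range(assignments):
        touched |= pages_of(0, value_bytes)
    return touched


if __name__ == "__main__":
    small = pages_touched_allocate_fresh(16 * 1024, 4096, 1024, 100)
    large = pages_touched_allocate_fresh(1024 * 1024, 4096, 1024, 10_000)
    reused = pages_touched_in_place(4096, 1024, 10_000)
    print(len(small), len(large), len(reused))  # 4 256 1
```

With these assumptions, the 16kbyte workspace touches all 4 of its pages. That does not matter, since the whole workspace is swapped anyway. In a 1mbyte demand-paged workspace, fresh allocation touches all 256 pages before the first garbage collection, while in-place reuse keeps touching the single live page.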

That was completely reworked to eliminate the severe page thrashing. We also added an API for system services (for things like file i/o; the combination of large workspaces and the system services API allowed real world applications). APL purists criticized the API implementation ... it was eventually replaced with "shared variables".

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

CMS\APL was also used for deploying the APL-based sales&marketing support apps on (CP67) HONE systems.

The Palo Alto Science Center ... then did the migration from CP67/CMS to VM370/CMS for APL\CMS. PASC also did the apl microcode assist for the 370/145 (ran lots of APL on a 145 at the speed of a 370/168) and APL for the IBM 5100.


When HONE consolidated the US HONE systems in Palo Alto, their datacenter was across the back parking lot from PASC (by this time, HONE had migrated from CP67/CMS to VM370/CMS). An issue for HONE was that it also needed the large storage and I/O of 168s ... so it was unable to take advantage of the 370/145 apl microcode assist. HONE had maxed out the number of 168s in a single-system-image, loosely-coupled (sharing all disks) configuration with load-balancing and fall-over. The VM370 development group had simplified and dropped a lot of stuff in the CP67->VM370 morph (including shared-memory, tightly-coupled multiprocessor support). After migrating lots of stuff from CP67 into VM370 release 2 ... I got SMP multiprocessor support back into a VM370 release 3 version ... initially specifically for HONE so they could add a 2nd processor to every 168 system.

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

SMP posts

https://www.garlic.com/~lynn/subtopic.html#smp

trivia: about HONE loosely-coupled ... they used a "compare-and-swap" channel program mechanism (analogous to the 370 compare-and-swap instruction) ... read the record, update it, then write it back using the compare-and-swap (search equal, then only update/write the record if it hadn't been changed).
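The read / update / conditional-write cycle is the same optimistic pattern as the 370 compare-and-swap instruction. Here is a minimal Python sketch built on a hypothetical `SharedDisk` class. Its lock stands in for the controller executing one channel program at a time. The lock is held only for a single read or a single compare-and-write, never across the whole read-modify-write the way reserve/release holds the device. The class, the method names, and the thread-per-system setup are illustrative assumptions, not HONE's code.

```python
import threading


class SharedDisk:
    """Hypothetical shared-DASD stand-in.  The lock models the controller
    running one channel program at a time; it is held only for a single
    read or a single compare-and-write, never across a whole update."""

    def __init__(self, records):
        self.records = dict(records)
        self._channel = threading.Lock()

    def read(self, key):
        with self._channel:
            return self.records[key]

    def compare_and_write(self, key, expected, new):
        """Like 'search equal' then write: store `new` only if the record still
        holds the `expected` image that was read earlier."""
        with self._channel:
            if self.records[key] != expected:
                return False
            self.records[key] = new
            return True


def update(disk, key, fn):
    """Optimistic read-modify-write: on a failed compare, re-read and retry."""
    while True:
        old = disk.read(key)
        if disk.compare_and_write(key, old, fn(old)):
            return


def system(disk, increments):
    """One loosely-coupled system bumping a shared counter record."""
    for _ in range(increments):
        update(disk, "counter", lambda v: v + 1)


if __name__ == "__main__":
    disk = SharedDisk({"counter": 0})
    systems = [threading.Thread(target=system, args=(disk, 1000)) for _ in range(8)]
    for t in systems:
        t.start()
    for t in systems:
        t.join()
    print(disk.read("counter"))  # 8000: no lost updates across eight systems
```

A failed conditional write just means another system got there first. The loser re-reads and recomputes instead of waiting on a device reserve. Because this sketch compares record contents, a record that was changed and then changed back would still pass the check.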

This had significantly lower overhead than reserve/release. It also had an advantage over the ACP/TPF loosely-coupled locking RPQ for the 3830 controller. The 3830 lock RPQ only worked for disks connected to a single 3830 controller ... limiting ACP/TPF configurations to four systems (because of the 3830 four channel interface). The HONE approach worked with string-switch ... where a string of 3330 drives was connected to two different 3830 controllers (each with four channel switch) ... allowing eight systems operating in loosely-coupled mode (and then adding a 2nd cpu to each system).

recent ACP/TPF post

https://www.garlic.com/~lynn/2021i.html#77

referencing old post

https://www.garlic.com/~lynn/2008i.html#39 American Airlines

with old ACP/TPF lock rpq email

https://www.garlic.com/~lynn/2008i.html#email800325

specific posts mentioning HONE single system image

https://www.garlic.com/~lynn/2019d.html#106 IBM HONE

https://www.garlic.com/~lynn/2018d.html#83 CMS\APL

https://www.garlic.com/~lynn/2018c.html#77 z/VM Live Guest Relocation

https://www.garlic.com/~lynn/2017d.html#42 What are mainframes

https://www.garlic.com/~lynn/2016.html#63 Lineage of TPF

https://www.garlic.com/~lynn/2015.html#87 a bit of hope? What was old is new again

https://www.garlic.com/~lynn/2014g.html#103 Fifty Years of nitpicking definitions, was BASIC,theProgrammingLanguageT

https://www.garlic.com/~lynn/2014c.html#88 Optimization, CPU time, and related issues

https://www.garlic.com/~lynn/2012.html#10 Can any one tell about what is APL language

https://www.garlic.com/~lynn/2011n.html#35 Last Word on Dennis Ritchie

https://www.garlic.com/~lynn/2011e.html#63 Collection of APL documents

https://www.garlic.com/~lynn/2011e.html#58 Collection of APL documents

https://www.garlic.com/~lynn/2010l.html#20 Old EMAIL Index

https://www.garlic.com/~lynn/2008j.html#50 Another difference between platforms

https://www.garlic.com/~lynn/2003b.html#26 360/370 disk drives

... and some old posts mentioning VS/Repack

https://www.garlic.com/~lynn/2022.html#129 Dataprocessing Career

https://www.garlic.com/~lynn/2021e.html#61 Performance Monitoring, Analysis, Simulation, etc

https://www.garlic.com/~lynn/2021c.html#37 Some CP67, Future System and other history

https://www.garlic.com/~lynn/2019c.html#25 virtual memory

https://www.garlic.com/~lynn/2017j.html#86 VS/Repack

https://www.garlic.com/~lynn/2017j.html#84 VS/Repack

https://www.garlic.com/~lynn/2016h.html#111 Definition of "dense code"

https://www.garlic.com/~lynn/2016f.html#92 ABO Automatic Binary Optimizer

https://www.garlic.com/~lynn/2015f.html#79 Limit number of frames of real storage per job

https://www.garlic.com/~lynn/2015c.html#69 A New Performance Model ?

https://www.garlic.com/~lynn/2015c.html#66 Messing Up the System/360

https://www.garlic.com/~lynn/2014c.html#71 assembler

https://www.garlic.com/~lynn/2014b.html#81 CPU time

https://www.garlic.com/~lynn/2013k.html#62 Suggestions Appreciated for a Program Counter History Log

https://www.garlic.com/~lynn/2012o.html#20 Assembler vs. COBOL--processing time, space needed

https://www.garlic.com/~lynn/2012o.html#19 Assembler vs. COBOL--processing time, space needed

https://www.garlic.com/~lynn/2012j.html#82 printer history Languages influenced by PL/1

https://www.garlic.com/~lynn/2012j.html#20 Operating System, what is it?

https://www.garlic.com/~lynn/2012d.html#73 Execution Velocity

https://www.garlic.com/~lynn/2011e.html#8 Multiple Virtual Memory

https://www.garlic.com/~lynn/2010m.html#5 Memory v. Storage: What's in a Name?

https://www.garlic.com/~lynn/2010k.html#9 Idiotic programming style edicts

https://www.garlic.com/~lynn/2010k.html#8 Idiotic programming style edicts

https://www.garlic.com/~lynn/2010j.html#81 Percentage of code executed that is user written was Re: Delete all members of a PDS that is allocated

https://www.garlic.com/~lynn/2010j.html#48 Knuth Got It Wrong

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Porting APL to CP67/CMS Date: 11 Feb 2022 Blog: Facebook

re:

Some of the early remote (dialup) CMS\APL users were Armonk business planners. They sent Cambridge data with the most valuable IBM business information (detailed customer profiles, purchases, kind of work, etc) and implemented APL business modeling using the data. In Cambridge, we had to demonstrate extremely strong security ... in part because various professors, staff, and students from Boston/Cambridge area universities were also using the CSC CP67/CMS system.

csc posts

https://www.garlic.com/~lynn/subtopic.html#545tech

--


From: Lynn Wheeler <lynn@garlic.com> Subject: Seattle Dataprocessing Date: 11 Feb 2022 Blog: Facebook

Spent a lot of time in the Seattle area, but not for IBM. In grade school (up over the hill from Boeing field), the Boeing group that sponsored our cub scout pack gave us an evening plane ride: took off from Boeing field, flew around the skies of Seattle and then back to Boeing field. While an undergraduate, I'm hired into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidating all dataprocessing in an independent business unit to better monetize the investment, including offering services to non-Boeing entities).

The Renton center has a couple hundred million in 360s, with 360/65s arriving faster than they could be installed (boxes constantly staged in hallways around the machine room), and 747#3 is flying the skies of Seattle getting FAA flt certification. Lots of politics between the CFO and the Renton datacenter director. The CFO only had a small machine room at Boeing field with a 360/30 for payroll, although they enlarge it and install a 360/67 for me to play with when I'm not doing other stuff. When I graduate, I join the IBM science center on the opposite coast and then transfer to san jose research.

Much later, after cluster scale-up for our HA/CMP product is transferred and announced as an IBM supercomputer, and we are told we can't work on anything with more than four processors, we leave IBM.

Later, we are brought into a small client/server startup as consultants; two former Oracle people that we had been working with on RDBMS cluster scale-up are there, responsible for something called "commerce server", and want to do payment transactions on the server. The startup had also invented this technology they called "SSL"; the result is now frequently called "electronic commerce".

In 1999, a financial company asks us to spend a year in Seattle working on electronic commerce projects with a few companies in the area, including a large PC company in Redmond and a Kerberos/security company out in Issaquah (it had a contract with the Redmond PC company to port Kerberos, which becomes active directory). We have regular meetings with the CEO of the Kerberos company; previously he had been head of IBM POK, then IBM BOCA, and also at Perot Systems

https://www.nytimes.com/1997/07/26/business/executive-who-oversaw-big-growth-at-perot-systems-quits.html

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

other recent posts mentioning boeing computer services

https://www.garlic.com/~lynn/2022.html#48 Mainframe Career

https://www.garlic.com/~lynn/2022.html#22 IBM IBU (Independent Business Unit)

https://www.garlic.com/~lynn/2022.html#12 Programming Skills

https://www.garlic.com/~lynn/2021k.html#55 System Availability

https://www.garlic.com/~lynn/2021j.html#63 IBM 360s

https://www.garlic.com/~lynn/2021i.html#89 IBM Downturn

https://www.garlic.com/~lynn/2021i.html#6 The Kill Chain: Defending America in the Future of High-Tech Warfare

https://www.garlic.com/~lynn/2021h.html#64 WWII Pilot Barrel Rolls Boeing 707

https://www.garlic.com/~lynn/2021h.html#46 Dynamic Adaptive Resource Management

https://www.garlic.com/~lynn/2021g.html#39 iBM System/3 FORTRAN for engineering/science work?

https://www.garlic.com/~lynn/2021g.html#6 IBM 370

https://www.garlic.com/~lynn/2021f.html#78 The Long-Forgotten Flight That Sent Boeing Off Course

https://www.garlic.com/~lynn/2021f.html#57 "Hollywood model" for dealing with engineers

https://www.garlic.com/~lynn/2021e.html#80 Amdahl

https://www.garlic.com/~lynn/2021e.html#54 Learning PDP-11 in 2021

https://www.garlic.com/~lynn/2021b.html#62 Early Computer Use

https://www.garlic.com/~lynn/2021b.html#5 Availability

https://www.garlic.com/~lynn/2021.html#78 Interactive Computing

https://www.garlic.com/~lynn/2021.html#41 CADAM & Catia

https://www.garlic.com/~lynn/2020.html#32 IBM TSS

https://www.garlic.com/~lynn/2020.html#29 Online Computer Conferencing

https://www.garlic.com/~lynn/2020.html#10 "This Plane Was Designed By Clowns, Who Are Supervised By Monkeys"

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Seattle Dataprocessing Date: 11 Feb 2022 Blog: Facebook

re:

... oops, didn't realize that nytimes article might be behind a paywall for some ... similar, but different, article, PEROT'S PARTNERS

https://www.dmagazine.com/publications/d-magazine/1997/january/perots-partners/

My wife had run into him in a prior life when she was in the GBURG JES group (and one of the catchers for JES3). He cons her into going to POK to be in charge of loosely-coupled architecture, where she does Peer-Coupled Shared Data architecture. She doesn't remain long because of 1) constant battles with the communication group trying to force her into using VTAM for loosely-coupled operation, and 2) little uptake (until much later for sysplex and parallel sysplex) except for IMS hot-standby (she has a story about asking Vern Watts who he will ask for permission to do hot-standby; he tells her nobody, he will just do it and tell IBM about it when it's all done).

Peer-Coupled Shared Data posts

https://www.garlic.com/~lynn/submain.html#shareddata

trivia: Dec 1999, we have a booth at the World Wide Retail Banking Show in Miami with some of the companies we were working with (not just in the Seattle area).

Old 1Dec1999 post

https://www.garlic.com/~lynn/99.html#217

press release at the show

https://www.garlic.com/~lynn/99.html#224

AADS references

https://www.garlic.com/~lynn/x959.html#aads

X9.59 references

https://www.garlic.com/~lynn/x959.html#x959

X9.59 posts

https://www.garlic.com/~lynn/subpubkey.html#x959

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: TCP/IP and Mid-range market Date: 12 Feb 2022 Blog: Facebook

re:

other trivia: there are periodic posts by former DEC people in (usenet) alt.folklore.computers about the person behind DEC VMS (Cutler) going to Redmond to do NT for m'soft. other trivia: the IBM FS project was completely different from 370 and was going to completely replace it (internal politics was killing off 370 efforts, and the lack of new 370s is credited with giving the clone 370 makers their market foothold).

other FS trivia:

http://www.jfsowa.com/computer/memo125.htm

When FS implodes, there is a mad rush to get stuff back into the 370 product pipelines, including kicking off the quick&dirty 3033 & 3081 efforts. Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books, 1993

http://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

.... reference to the "Future System" project 1st half of the 70s:

and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrong headedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive.

... snip ...

The head of IBM POK (mainframes) also convinced corporate to kill the vm370 (virtual machine) product, shutdown the development location (burlington mall, mass, off 128, former SBC bldg) and transfer all the people to POK to support MVS/XA development (Endicott eventually manages to save the vm370 product mission, but had to reconstitute a development group from scratch). They weren't going to tell the VM group until the very last minute, to minimize those that might escape. The information managed to leak early and several managed to escape ... including to the infant VMS effort at DEC. Joke was one of the largest contributors to VMS was the head of IBM POK. There was also a witch hunt for the source of the leak, fortunately for me ... nobody gave up the source.

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 360 Performance Date: 13 Feb 2022 Blog: Facebook

Within two semester hrs of taking intro to fortran/computers, I was hired fulltime at the univ to be responsible for os/360. The univ. had been sold a 360/67 for tss/360 (to replace 709/1401), but it never quite came to production fruition, so it ran as a 360/65 (w/os360). The univ. shut down the datacenter over the weekend and I had the whole place to myself (although 48hrs w/o sleep could make monday morning classes hard). When CSC came out (Jan1968) to install CP67 (3rd location after CSC & Lincoln Labs), I got to play with it also on the weekends (rewriting lots of the code). I was then part of the CP67 announcement at SHARE, Houston, spring 1968.

Originally, on the 709 (tape->tape), student jobs ran in less than a second; on the 360/65 they ran over a minute. I installed HASP, which cut that in half. Then I started redoing SYSGEN to carefully place datasets and PDS members to optimize arm seek and PDS (multi-track) directory member search, cutting it by another 2/3rds to 12.9secs. It never got better than the 709 until I installed Univ. of Waterloo's WATFOR. For CP67, I started by rewriting a lot of code for running OS/360 in a virtual machine. Part of Fall68 SHARE presentation:

https://www.garlic.com/~lynn/94.html#18

OS/360 benchmark ran 322sec bare machine, originally under CP67 ran 856sec (534sec CP67 CPU). Rewriting lots of code got it down to 435sec (113sec CP67 CPU), reduced CP67 CPU from 534sec to 113sec, or reduction of 421sec.
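The following is a quick check of the arithmetic behind those figures. It assumes that CP67 overhead is simply elapsed time under CP67 minus bare-machine time, which is how the 534sec and 113sec numbers work out.

```python
# Figures quoted above for the OS/360 benchmark, in seconds.
BARE_MACHINE = 322
UNDER_CP67_ORIGINAL = 856
UNDER_CP67_REWRITTEN = 435

def cp67_overhead(elapsed: int, bare: int = BARE_MACHINE) -> int:
    """CP67 CPU overhead, taken as elapsed time under CP67 minus bare-machine time."""
    return elapsed - bare

before = cp67_overhead(UNDER_CP67_ORIGINAL)   # 534
after = cp67_overhead(UNDER_CP67_REWRITTEN)   # 113
print(f"CP67 overhead before: {before}s, after: {after}s, saved: {before - after}s")
print(f"elapsed relative to bare machine: {UNDER_CP67_ORIGINAL / BARE_MACHINE:.2f}x -> "
      f"{UNDER_CP67_REWRITTEN / BARE_MACHINE:.2f}x")
```

Put another way, the benchmark went from roughly 2.66 times bare-machine elapsed time to roughly 1.35 times.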

science center (& cp67) posts

https://www.garlic.com/~lynn/subtopic.html#545tech

A 2011 post, History of VM Performance (originally Oct86 SEAS, European SHARE) ... w/copy of presentation being regiven at 2011 DC Hillgang user group meeting

https://www.garlic.com/~lynn/2011c.html#72

some other past posts mentioning oct86 SEAS:

https://www.garlic.com/~lynn/2022.html#94 VM/370 Interactive Response

https://www.garlic.com/~lynn/2021j.html#59 Order of Knights VM

https://www.garlic.com/~lynn/2021h.html#82 IBM Internal network

https://www.garlic.com/~lynn/2021g.html#46 6-10Oct1986 SEAS

https://www.garlic.com/~lynn/2021e.html#65 SHARE (& GUIDE)

https://www.garlic.com/~lynn/2021c.html#41 Teaching IBM Class

https://www.garlic.com/~lynn/2021.html#17 Performance History, 5-10Oct1986, SEAS

https://www.garlic.com/~lynn/2019b.html#4 Oct1986 IBM user group SEAS history presentation

https://www.garlic.com/~lynn/2011e.html#22 Multiple Virtual Memory

https://www.garlic.com/~lynn/2011e.html#20 Multiple Virtual Memory

other early CP67 trivia: it had support for 1052 & 2741 with automagic terminal type recognition (using the terminal controller SAD CCW to change the terminal type line scanner for each port). The univ. had some number of ASCII terminals, so I added ASCII support (extending the automagic terminal type recognition to ASCII).

I then wanted to have a single dial-in number (hunt group) for all terminals

https://en.wikipedia.org/wiki/Line_hunting

It didn't quite work: while I could switch the line scanner type for each port (on the IBM telecommunication controller), IBM had taken a short cut and hard-wired the line speed for each port (TTY ran at a different line speed from 2741&1052). Thus was born a univ. project to do a clone controller: we built a mainframe channel interface board for an Interdata/3 programmed to emulate the mainframe telecommunication controller, with the addition that it could also do dynamic line speed determination. Later it was enhanced with an Interdata/4 for the channel interface and a cluster of Interdata/3s for the port interfaces. Interdata (and later Perkin/Elmer) sell it commercially as an IBM clone controller. Four of us at the univ. get written up as responsible for (some part of the) clone controller business.

https://en.wikipedia.org/wiki/Interdata

https://en.wikipedia.org/wiki/Perkin-Elmer
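Dynamic line speed determination can be sketched as an "autobaud" check: time the first start bit on the line and pick the candidate speed whose bit time is closest. This is a hypothetical illustration of the general technique, not how the Interdata clone actually did it. The candidate table and the function name `detect_line_speed` are assumptions. The sketch also assumes the first character's low-order data bit is a 1 (e.g. a carriage return), so that the start bit appears as an isolated pulse.

```python
from typing import Dict

# Hypothetical candidate line speeds for a port, in bits per second.
CANDIDATE_RATES: Dict[str, float] = {
    "tty (110 baud)": 110.0,
    "2741 (134.5 baud)": 134.5,
}

def detect_line_speed(start_bit_us: float,
                      rates: Dict[str, float] = CANDIDATE_RATES) -> str:
    """Return the candidate whose bit time is closest to the measured start-bit width."""
    def error(item):
        _, baud = item
        expected_us = 1_000_000 / baud  # duration of one bit at this speed
        return abs(start_bit_us - expected_us)
    name, _ = min(rates.items(), key=error)
    return name

print(detect_line_speed(9100))  # close to 9091us  -> tty (110 baud)
print(detect_line_speed(7400))  # close to 7435us  -> 2741 (134.5 baud)
```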

clone controller posts

https://www.garlic.com/~lynn/submain.html#360pcm

other 360 terminal controller line scanner trivia ... when the ascii/tty terminal line scanner arrived (for CE to install in the controller) ... it was in a box from "heathkit".

The clone controller business is claimed to be the major motivation for the IBM Future System effort in the 70s (make the interface so complex that clone makers couldn't keep up). From the law of unintended consequences: FS was completely different from 370 and was going to completely replace it, and internal politics was shutting down the 370 projects ... the lack of new IBM 370 offerings is claimed to have given the clone 370 processor makers their market foothold (FS as countermeasure to clone controllers becomes responsible for the rise of clone processors). Some FS details

http://www.jfsowa.com/computer/memo125.htm

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

IBM selectric terminals ran with tilt-rotate code (so needed a translate from EBCDIC to tilt-rotate code) ... got a table of what characters were located where on the ball. Whatever characters you think you have in the computer have to be translated into the tilt-rotate code for that character on the ball. There were standard balls making a lot of tilt-rotate codes common. But it was possible to have things like the APL-ball with lots of special characters, needing to know where they were on the ball and the corresponding tilt-rotate codes (changing the selectric ball could require having the corresponding table for character positions on that ball). So there really wasn't EBCDIC code for selectric terminals ... it was "tilt-rotate" code.
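The per-ball translate table described above can be sketched as a lookup from EBCDIC byte to (tilt, rotate) position. Mounting a different ball means swapping in a different table. All position values here are invented for illustration and are NOT real Selectric codes. `to_tilt_rotate`, `STANDARD_BALL` and `APL_BALL` are hypothetical names. Python's standard `cp037` codec (EBCDIC US/Canada) stands in for the EBCDIC data in the computer.

```python
from typing import Dict, List, Tuple

TiltRotate = Tuple[int, int]  # (tilt row, rotate step) of a character on the ball

# Invented positions for illustration only; these are NOT real Selectric codes.
# Keys are EBCDIC byte values: 0xC1 = 'A', 0xC2 = 'B', 0x40 = space.
STANDARD_BALL: Dict[int, TiltRotate] = {0xC1: (0, 1), 0xC2: (1, 3), 0x40: (3, 0)}
# A different ball (think APL) puts characters in different places, or leaves them off.
APL_BALL: Dict[int, TiltRotate] = {0xC1: (2, 5), 0x40: (3, 0)}

def to_tilt_rotate(ebcdic: bytes, ball: Dict[int, TiltRotate]) -> List[TiltRotate]:
    """Translate EBCDIC bytes into tilt-rotate codes for the ball that is mounted."""
    codes = []
    for byte in ebcdic:  # iterating over bytes yields ints
        if byte not in ball:
            raise ValueError(f"byte 0x{byte:02X} has no position on this ball")
        codes.append(ball[byte])
    return codes

print(to_tilt_rotate("AB A".encode("cp037"), STANDARD_BALL))
# [(0, 1), (1, 3), (3, 0), (0, 1)]
print(to_tilt_rotate("A".encode("cp037"), APL_BALL))
# [(2, 5)]
```

The same EBCDIC byte yields a different code depending on which ball's table is in use. That is why there was no fixed "EBCDIC code" for the terminal itself.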

Teletype ASCII terminals did run with direct ASCII code (on ASCII computers, just send the same data directly to the terminal ... no need to change it into tilt-rotate code). "AND" the greatest computer "goof": 360 was supposed to be ASCII, per the (IBM) father of ASCII (his page has gone 404, but is preserved at the wayback machine):

https://web.archive.org/web/20180513184025/http://www.bobbemer.com/P-BIT.HTM

Who Goofed?

The culprit was T. Vincent Learson. The only thing for his defense is that he had no idea of what he had done. It was when he was an IBM Vice President, prior to tenure as Chairman of the Board, those lofty positions where you believe that, if you order it done, it actually will be done. I've mentioned this fiasco elsewhere. Here are some direct extracts:

... snip ...

i.e. the ascii unit record equipment wasn't ready for the 360 announce so had to rely (supposedly temporarily?) on BCD machines (adapted for EBCDIC)

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Arbitration Antics: Warren, Porter, Press Regulator to Explain Yet Another Way Wells Fargo Found to Game the System Date: 13 Feb 2022 Blog: Facebook

Arbitration Antics: Warren, Porter, Press Regulator to Explain Yet Another Way Wells Fargo Found to Game the System

fraud, risk, exploits, threats, vulnerability posts

https://www.garlic.com/~lynn/subintegrity.html#fraud

other past posts mentioning Wells Fargo

https://www.garlic.com/~lynn/2019d.html#64 How the Supreme Court Is Rebranding Corruption

https://www.garlic.com/~lynn/2019b.html#99 Student Loan Forgiveness Program Offers False Hope, Rejects 99% of Applications

https://www.garlic.com/~lynn/2019b.html#9 England: South Sea Bubble - The Sharp Mind of John Blunt

https://www.garlic.com/~lynn/2018d.html#60 Dirty Money, Shiny Architecture

https://www.garlic.com/~lynn/2018d.html#50 OCC Covering Up for Wells Fargo Type Abuses at Other Banks

https://www.garlic.com/~lynn/2017j.html#64 Wages and Productivity

https://www.garlic.com/~lynn/2017j.html#59 Wall Street Wants to Kill the Agency Protecting Americans From Financial Scams

https://www.garlic.com/~lynn/2017e.html#93 Ransomware on Mainframe application ?

https://www.garlic.com/~lynn/2017b.html#52 when to get out???

https://www.garlic.com/~lynn/2017b.html#6 OT: Trump Moves to Roll Back Obama-Era Financial Regulations

https://www.garlic.com/~lynn/2017b.html#0 Trump to sign cyber security order

https://www.garlic.com/~lynn/2016f.html#54 U.S. Big Banks: A Culture of Crime

https://www.garlic.com/~lynn/2016f.html#52 U.S. Big Banks: A Culture of Crime

https://www.garlic.com/~lynn/2016c.html#92 Goldman and Wells Fargo FINALLY Admit They Committed Fraud

https://www.garlic.com/~lynn/2016c.html#86 Wells Fargo "Admits Deceiving" U.S. Government, Pays Record $1.2 Billion Settlement

https://www.garlic.com/~lynn/2016c.html#85 Wells Fargo "Admits Deceiving" U.S. Government, Pays Record $1.2 Billion Settlement

https://www.garlic.com/~lynn/2016c.html#84 Wells Fargo "Admits Deceiving" U.S. Government, Pays Record $1.2 Billion Settlement

https://www.garlic.com/~lynn/2015h.html#25 Hillary Clinton's Glass-Steagall

https://www.garlic.com/~lynn/2015g.html#70 AIG freezes defined-benefit pension plan

https://www.garlic.com/~lynn/2015f.html#64 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015.html#90 NY Judge Slams Wells Fargo For Forging Documents... And Why Nothing Will Change

https://www.garlic.com/~lynn/2014d.html#78 Wells Fargo made up on-demand foreclosure papers plan: court filing charges

https://www.garlic.com/~lynn/2014d.html#64 Wells Fargo made up on-demand foreclosure papers plan: court filing charges

https://www.garlic.com/~lynn/2014d.html#47 Stolen F-35 Secrets Now Showing Up in China's Stealth Fighter

https://www.garlic.com/~lynn/2014d.html#46 Wells Fargo made up on-demand foreclosure papers plan: court filing charges

https://www.garlic.com/~lynn/2014c.html#57 Royal Pardon For Turing

https://www.garlic.com/~lynn/2013l.html#38 OT: NYT article--the rich get richer

https://www.garlic.com/~lynn/2013l.html#37 Money Laundering Exposed As A Key Component Of The Housing Bubble's "All Cash" Bid

https://www.garlic.com/~lynn/2013h.html#36 CLECs, Barbara, and the Phone Geek

https://www.garlic.com/~lynn/2013e.html#42 More Whistleblower Leaks on Foreclosure Settlement Show Both Suppression of Evidence and Gross Incompetence

https://www.garlic.com/~lynn/2013d.html#63 What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2013c.html#58 More Whistleblower Leaks on Foreclosure Settlement Show Both Suppression of Evidence and Gross Incompetence

https://www.garlic.com/~lynn/2013c.html#43 More Whistleblower Leaks on Foreclosure Settlement Show Both Suppression of Evidence and Gross Incompetence

https://www.garlic.com/~lynn/2013.html#50 How to Cut Megabanks Down to Size

https://www.garlic.com/~lynn/2012p.html#49 Regulator Tells Banks to Share Cyber Attack Information

https://www.garlic.com/~lynn/2012n.html#55 U.S. Sues Wells Fargo, Accusing It of Lying About Mortgages

https://www.garlic.com/~lynn/2012n.html#12 Why Auditors Fail To Detect Frauds?

https://www.garlic.com/~lynn/2012l.html#85 Singer Cartons of Punch Cards

https://www.garlic.com/~lynn/2012l.html#48 The Payoff: Why Wall Street Always Wins

https://www.garlic.com/~lynn/2012e.html#37 The $30 billion Social Security hack

https://www.garlic.com/~lynn/2011n.html#49 The men who crashed the world

https://www.garlic.com/~lynn/2011k.html#56 50th anniversary of BASIC, COBOL?

https://www.garlic.com/~lynn/2011g.html#30 Bank email archives thrown open in financial crash report

https://www.garlic.com/~lynn/2011f.html#52 Are Americans serious about dealing with money laundering and the drug cartels?

https://www.garlic.com/~lynn/2011b.html#42 Productivity And Bubbles

https://www.garlic.com/~lynn/2011.html#50 What do you think about fraud prevention in the governments?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Channel I/O Date: 14 Feb 2022 Blog: Facebook

In 1980, STL was bursting at the seams and was moving 300 people from the IMS group to an offsite bldg. They had tried "remote 3270" and found the human factors unacceptable. I get con'ed into doing channel extender support so they could place channel-attached 3270 controllers at the offsite bldg ... with no perceptible human factors difference between offsite and in STL. The hardware vendor then tries to get IBM to release my support, but there is a group in POK playing with some serial stuff and they get it veto'ed (afraid that if it was in the market, it would make it harder to justify their stuff). A side effect: the (really slow, high channel busy) 3270 controllers had been spread around all the mainframe (disk) channels; moving the channel-attached 3270 controllers remotely and replacing them with a really high-speed box (for all the 3270 activity) drastically cut the 3270-related channel busy (for the same amount of traffic) ... allowing more disk throughput and improving overall system throughput by 10-15%.

channel-extender posts

https://www.garlic.com/~lynn/submisc.html#channel.extender

IBM channels were 3mbyte/sec half-duplex. Mid-80s, LANL (national lab) was behind standardization of the Cray 100mbyte/sec channel, which becomes HIPPI

https://en.wikipedia.org/wiki/HIPPI

In 1988, I was asked to help LLNL (national lab) standardize some serial stuff they were playing with, which quickly becomes the fibre channel standard (including some stuff I had done in 1980) ... started out 1gbit/sec, full-duplex, 2gbit/sec aggregate, 200mbyte/sec.

https://en.wikipedia.org/wiki/Fibre_Channel

For a time the HIPPI group was doing a serial HIPPI in competition with FCS (I have a bunch of meeting notes from both efforts).

The POK group finally gets their serial stuff released in 1990 with ES/9000 as ESCON, when it is already obsolete (17mbytes/sec, FCS aggregate 200mbytes/sec). Then some POK engineers become involved in FCS and define an enormously heavy-weight protocol that radically reduces the native throughput, which is eventually released as FICON.
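The following sketch shows where the FCS "200mbyte/sec" aggregate comes from. It assumes "1gbit/sec" is the line (baud) rate and that Fibre Channel's 8b/10b encoding carries 8 data bits in every 10 line bits. Real 1G Fibre Channel signals at 1.0625 Gbaud, and framing overhead brings it back to the nominal 100mbyte/sec per direction. The constant names are illustrative only.

```python
LINE_RATE_BAUD = 1_000_000_000   # "1gbit/sec", treated here as the line rate
ESCON_MBYTES_PER_SEC = 17

def payload_mbytes_per_sec(baud: int) -> float:
    """Data rate in one direction after 8b/10b encoding."""
    data_bits = baud * 8 // 10   # 8b/10b: 8 data bits per 10 line bits
    return data_bits / 8 / 1_000_000

per_direction = payload_mbytes_per_sec(LINE_RATE_BAUD)  # 100.0
aggregate = 2 * per_direction                            # full-duplex: 200.0
print(per_direction, aggregate, round(aggregate / ESCON_MBYTES_PER_SEC, 1))
# 100.0 200.0 11.8
```

On these assumptions, FCS aggregate throughput is close to twelve times ESCON's 17mbytes/sec.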

The most recent published "peak I/O" benchmark I can find is for a max-configured z196 getting 2M IOPS with 104 FICON (running over 104 FCS) ... using emulated CKD disks on industry standard fixed-block disks (no real CKD disks have been made for decades). About the same time, an FCS was announced for E5-2600 blades (standard in cloud megadatacenters at the time) claiming over a million IOPS (two such FCS having higher throughput than 104 FICON running over 104 FCS) using industry standard disks.
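The per-link comparison implied above works out as follows. The sketch treats "over million IOPS" as a lower bound of exactly 1M, so the ratios shown are conservative.

```python
Z196_IOPS = 2_000_000
FICON_LINKS = 104
FCS_IOPS_CLAIM = 1_000_000  # "over million IOPS", treated as a lower bound

per_ficon = Z196_IOPS / FICON_LINKS
print(f"{per_ficon:,.0f} IOPS per FICON link")                    # 19,231
print(f"one native FCS vs one FICON: {FCS_IOPS_CLAIM / per_ficon:.0f}x")  # 52x
print(2 * FCS_IOPS_CLAIM >= Z196_IOPS)  # two FCS at least match all 104 FICON: True
```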

FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

cloud megadatacenter posts

https://www.garlic.com/~lynn/submisc.html#megadatacenter

Other trivia: the last product we did at IBM was HA/CMP, working with national labs on technical/scientific cluster scale-up (including porting the LLNL Cray filesystem to HA/CMP) and with RDBMS vendors on commercial cluster scale-up. HA/CMP was also doing configurations with Hursley 9333 ... full-duplex 80mbit/sec serial copper. I wanted 9333 to evolve into (slower-speed) interoperable FCS ... but instead it evolves into 160mbit/sec (later faster) incompatible SSA.

https://en.wikipedia.org/wiki/Serial_Storage_Architecture

old post mentioning cluster scale-up meeting Jan1992 in (oracle ceo) Ellison's conference room (16-system loosely-coupled/cluster by my92, 128-system loosely-coupled/cluster by ye92)

https://www.garlic.com/~lynn/95.html#13

Within a few weeks of the Ellison meeting, cluster scale-up is transferred, announced as IBM supercomputer (for scientific/technical *ONLY*) and we are told we couldn't work on anything with more than four processors. We leave IBM a few months later.

ha/cmp posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

3880/3090 trivia: originally, the 3090 configured the number of channels assuming the 3880 controller would have channel busy similar to the 3830 controller, but with 3mbyte/sec channel throughput. However, while the 3880 had special hardware to handle data transfer, the 3880 microprocessor was really slow ... a combination of the IBM half-duplex channel protocol and the slow 3880 microprocessor drastically drove up channel busy. As a result they had to drastically increase the number of channels (to offset the drastic increase in channel busy), which had the side-effect of needing an extra TCM (3090 joke that they were going to bill the 3880 group for the extra 3090 manufacturing cost). The marketing people eventually respin the significant increase in 3090 channels as an extraordinary I/O machine (rather than just offsetting the extraordinary increase in channel busy).
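The following is a simplified sketch of why higher per-operation channel busy forces more channels for the same workload. All figures are hypothetical: the 1000 I/Os per second, 2ms vs 5ms busy per operation, and the 30% per-channel utilization target are NOT actual 3830/3880/3090 numbers. `channels_needed` is an illustrative name. Integer arithmetic is used so the ceiling is exact.

```python
def channels_needed(ops_per_sec: int, busy_us_per_op: int, max_util_pct: int = 30) -> int:
    """Smallest channel count keeping average per-channel busy at or below max_util_pct."""
    busy_us_per_sec = ops_per_sec * busy_us_per_op            # total channel busy per second
    usable_us_per_channel = 1_000_000 * max_util_pct // 100   # busy one channel may absorb
    return -(-busy_us_per_sec // usable_us_per_channel)       # ceiling division

# Hypothetical: same 1000 I/Os per second, only the controller's channel busy changes.
print(channels_needed(1000, 2000))  # 2ms busy per I/O -> 7 channels
print(channels_needed(1000, 5000))  # 5ms busy per I/O -> 17 channels
```

The data moved is the same in both cases. Only the time the channel is held busy per operation changes, and the channel count scales with it.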

posts getting to play disk engineer

https://www.garlic.com/~lynn/subtopic.html#disk

some 3880/jib-prime microprocessor posts

https://www.garlic.com/~lynn/2021f.html#23 IBM Zcloud - is it just outsourcing ?

https://www.garlic.com/~lynn/2021c.html#66 ACP/TPF 3083

https://www.garlic.com/~lynn/2021.html#60 San Jose bldg 50 and 3380 manufacturing

https://www.garlic.com/~lynn/2021.html#6 3880 & 3380

https://www.garlic.com/~lynn/2020.html#42 If Memory Had Been Cheaper

https://www.garlic.com/~lynn/2019b.html#80 TCM

https://www.garlic.com/~lynn/2017g.html#61 What is the most epic computer glitch you have ever seen?

https://www.garlic.com/~lynn/2017d.html#1 GREAT presentation on the history of the mainframe

https://www.garlic.com/~lynn/2016g.html#76 IBM disk capacity

https://www.garlic.com/~lynn/2016e.html#56 IBM 1401 vs. 360/30 emulation?

https://www.garlic.com/~lynn/2016e.html#45 How the internet was invented

https://www.garlic.com/~lynn/2016b.html#81 Asynchronous Interrupts

https://www.garlic.com/~lynn/2016b.html#79 Asynchronous Interrupts

https://www.garlic.com/~lynn/2013n.html#69 'Free Unix!': The world-changing proclamation made30yearsagotoday

https://www.garlic.com/~lynn/2013n.html#57 rebuild 1403 printer chain

https://www.garlic.com/~lynn/2013i.html#0 By Any Other Name

https://www.garlic.com/~lynn/2013h.html#86 By Any Other Name

https://www.garlic.com/~lynn/2013d.html#16 relative mainframe speeds, was What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2012p.html#17 What is a Mainframe?

https://www.garlic.com/~lynn/2012o.html#28 IBM mainframe evolves to serve the digital world

https://www.garlic.com/~lynn/2012e.html#27 NASA unplugs their last mainframe

https://www.garlic.com/~lynn/2012d.html#75 megabytes per second

https://www.garlic.com/~lynn/2012d.html#28 NASA unplugs their last mainframe

https://www.garlic.com/~lynn/2011p.html#128 Start Interpretive Execution

https://www.garlic.com/~lynn/2011j.html#54 Graph of total world disk space over time?

https://www.garlic.com/~lynn/2011.html#37 CKD DASD

https://www.garlic.com/~lynn/2011.html#36 CKD DASD

https://www.garlic.com/~lynn/2010n.html#14 Mainframe Slang terms

https://www.garlic.com/~lynn/2010h.html#62 25 reasons why hardware is still hot at IBM

https://www.garlic.com/~lynn/2010e.html#30 SHAREWARE at Its Finest

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Channel I/O Date: 14 Feb 2022 Blog: Facebook

re:

Starting in the early 80s, had the HSDT project, T1 and faster computer links (including the 1980 STL channel-extender mentioned in the previous post, using NSC hardware). NCAR had a supercomputer hierarchical filesystem controlled by an IBM system with IBM disks. Supercomputers would send a request to the IBM system, the IBM system would make sure the data was staged to IBM disks, download the appropriate disk channel program to an A515 channel emulator ... and return the "handle" for the channel program to the supercomputer to execute directly. In the move to HIPPI and HIPPI switch ... there were provisions to do similar "3rd party transfers" via HIPPI in support of the NCAR filesystem (and also to add "3rd party transfers" to FCS switches). I had become the IBM expert on NSC gear and the branch would periodically call me to help with issues at NCAR.
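The "3rd party transfer" flow above can be sketched as follows. The control system stages the data and prepares a channel program but stays out of the data path. The requester then gets back only a handle, which it uses to run the transfer itself. This is a hypothetical model of the control flow only. The class names, the `location` map and the SEEK/READ strings are placeholders, not real CCWs or the NCAR/NSC interfaces.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ChannelEmulator:
    """Hypothetical stand-in for the channel emulator: holds downloaded channel programs."""
    programs: Dict[int, List[str]] = field(default_factory=dict)
    _handles: itertools.count = field(default_factory=lambda: itertools.count(1),
                                      init=False, repr=False)

    def load(self, program: List[str]) -> int:
        """Store a channel program and return the handle the requester will use."""
        handle = next(self._handles)
        self.programs[handle] = program
        return handle

    def execute(self, handle: int) -> List[str]:
        """Run (here: hand back and discard) the channel program for this handle."""
        return self.programs.pop(handle)

@dataclass
class FileServer:
    """Hypothetical control system: stages files and builds channel programs,
    but never sits in the data path itself."""
    emulator: ChannelEmulator
    location: Dict[str, str]  # file name -> "tape" or "disk"

    def open_for_read(self, name: str) -> int:
        if self.location[name] != "disk":
            self.location[name] = "disk"  # stage the file from tape to disk first
        program = [f"SEEK {name}", f"READ {name}"]  # placeholder, not real CCWs
        return self.emulator.load(program)

emulator = ChannelEmulator()
server = FileServer(emulator, {"climate.dat": "tape"})
handle = server.open_for_read("climate.dat")  # request goes to the control system
print(emulator.execute(handle))               # requester runs the program directly
```

The design point is that the control system handles only metadata and staging. The bulk data moves directly between the disks and the requester.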

Was also working with the NSF director and was supposed to get $20M to interconnect NSF supercomputer centers. Then congress cuts the budget, some other things happen and finally an RFP is released. Preliminary announce (28Mar1986)

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers Program to provide Supercomputer cycles; the New Technologies Program to foster new supercomputer software and hardware developments; and the Networking Program to build a National Supercomputer Access Network - NSFnet.

... snip ...

... internal IBM politics prevent us from bidding on the RFP (in part based on what we already had running). The NSF director tries to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies, but that just makes the internal politics worse (as did claims that what we already had running was at least 5yrs ahead of the winning bid). The winning bid doesn't even install the T1 links called for ... they are 440kbit/sec links ... but apparently to make it look like it's meeting the requirements, they install telco multiplexors with T1 trunks (running multiple links/trunk). We periodically ridicule them, asking why they don't call it a T5 network (because some of those T1 trunks would in turn be multiplexed over T3 or even T5 trunks). As regional networks connect in, NSFnet becomes the NSFNET backbone, precursor to the modern internet

https://www.technologyreview.com/s/401444/grid-computing/
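The following shows how several 440kbit/sec links can ride one T1 trunk. It assumes a T1 carries 24 DS0 channels of 64kbit/sec (1536kbit/sec payload) and that each link is given whole DS0s. That allocation scheme is an assumption for illustration, not a detail of the actual NSFnet multiplexor configuration.

```python
DS0_KBPS = 64      # one T1 time slot
T1_DS0S = 24       # time slots per T1
LINK_KBPS = 440    # the links actually installed

ds0s_per_link = -(-LINK_KBPS // DS0_KBPS)   # ceiling division -> 7 DS0s (448kbit/sec)
links_per_t1 = T1_DS0S // ds0s_per_link     # 3 links share one T1 trunk
print(ds0s_per_link, links_per_t1, T1_DS0S * DS0_KBPS)
# 7 3 1536
```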

hsdt posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

... while doing high-speed links ... was also working on various processor clusters with national labs (had been doing it on&off dating back to getting con'ed into doing a CDC6600 benchmark on an engineering 4341 for a national lab that was looking at getting 70 for a compute farm, sort of the leading edge of the coming cluster supercomputing tsunami).

periodically reposted: senior disk engineer getting talk scheduled at internal, world-wide, annual communication group conference in the late 80s, supposedly on 3174 performance, but opens the talk with the statement that the communication group was going to be responsible for the demise of the disk division. The issue was that the communication group had stranglehold on datacenters with its corporate strategic ownership of everything that crossed datacenter wall and was fiercely fighting off client/server and distributed computing trying to preserve their dumb terminal paradigm. The disk division was seeing drop in disk sales with customers moving to more distributed computing friendly platforms. The disk division had come up with a number of solutions, but the communication group (with their datacenter stranglehold) would veto them.

dumb terminal emulation posts

https://www.garlic.com/~lynn/subnetwork.html#terminal

As a work-around to internal politics and the communication group, the GPD/ADstar VP of software was investing in distributed computing startups (that would use IBM disks) and would periodically ask us to drop by his investments to offer assistance. One was the NCAR spinoff, MESA Archival, porting the NCAR supercomputer filesystem to RS/6000 and HA/CMP.

ha/cmp posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

playing disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

other HSDT trivia: also had a T1 satellite link between Los Gatos and Clementi's

https://en.wikipedia.org/wiki/Enrico_Clementi

E&S lab in IBM Kingston (this was different from, and not related to, Kingston's "supercomputer" effort). His lab had a boatload of FPS boxes (with 40mbyte/sec disk arrays)

https://en.wikipedia.org/wiki/Floating_Point_Systems

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Channel I/O Date: 14 Feb 2022 Blog: Facebook

re:

other cray/4341 trivia: as part of the communication group fiercely fighting off client/server and distributed computing ... they were fighting hard to prevent release of mainframe tcp/ip support. When they lost that battle, they changed their tactic and said that since they had corporate strategic ownership of everything that crossed datacenter walls, it had to be released through them. What they released got 44kbytes/sec aggregate using nearly a whole 3090 processor. I then added support for RFC1044 and in some tuning tests at Cray Research between a 4341 and a Cray, got sustained 4341 channel throughput using only a modest amount of 4341 processor (nearly 500 times improvement in bytes moved per instruction executed)

rfc1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

disk&controller trivia: when I transfer to San Jose Research in the 70s, I get to wander around most datacenters (IBM and non-IBM) in silicon valley ... including the disk engineering (bldg14) and disk product test (bldg15) across the street. They were doing prescheduled stand-alone 7x24 mainframe testing. They had mentioned that they had recently tried MVS, but it had 15min mean-time-between-failure in that environment. I offered to rewrite the I/O supervisor making it bullet proof and never fail, allowing any amount of ondemand concurrent testing ... greatly improving productivity. Downside was that when they had a problem, they 1st tried to blame it on my software ... and I had to spend an increasing amount of time playing disk engineer and diagnosing their problems.

I then do an (internal only) research report describing all the work ... also happen to mention the MVS 15min MTBF ... bringing the wrath of the MVS group down on my head (offline, I was told that they tried to have me separated from IBM; when that didn't work, they tried ways to make my career unpleasant.)

playing disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Channel I/O Date: 14 Feb 2022 Blog: Facebook

re:

After leaving IBM, I was asked to come into the largest airline reservation system to look at the impossible things they couldn't do. They start with "routes", which represented about 25% of the total mainframe workload. They give me a tape with the full OAG (all scheduled commercial flt segments in the world) and I go away for two months. I come back with a rewritten ROUTES running on RS/6000 ... which they tested for doing all the impossible things. I had started out about 100 times faster than the mainframe version ... and then doing all the rest of the impossible things slowed it down to only about ten times faster. Basically, ten rack-mount RS6000/990s could handle all route requests for all commercial airlines in the world (including doing all the impossible things). A decade later, cellphone processors had as much compute power as those ten 990s.
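A "routes" request amounts to searching a timetable of flight segments for a connecting itinerary. The following is a minimal sketch of one standard technique for that: earliest-arrival search, Dijkstra-style on arrival time, with a minimum connection time. It is NOT the ROUTES rewrite described above, whose algorithms aren't given here. The segment data, the flight numbers, the 45-minute `MIN_CONNECT` and the single-day, single-time-zone times are all hypothetical simplifications of real OAG data.

```python
import heapq
from typing import Dict, List, NamedTuple, Optional, Tuple

class Segment(NamedTuple):
    flight: str
    origin: str
    dest: str
    depart: int  # minutes after midnight (single day, one time zone, for simplicity)
    arrive: int

MIN_CONNECT = 45  # hypothetical minimum connection time, in minutes

def earliest_itinerary(segments: List[Segment], origin: str, dest: str,
                       ready: int = 0) -> Optional[List[Segment]]:
    """Earliest-arrival itinerary: Dijkstra-style search keyed on arrival time."""
    by_origin: Dict[str, List[Segment]] = {}
    for seg in segments:
        by_origin.setdefault(seg.origin, []).append(seg)

    # Heap entries: (arrival time, tie-breaker, airport, legs flown so far).
    heap: List[Tuple[int, int, str, List[Segment]]] = [(ready, 0, origin, [])]
    settled: Dict[str, int] = {}
    tie = 1
    while heap:
        now, _, airport, legs = heapq.heappop(heap)
        if airport == dest:
            return legs
        if airport in settled:
            continue
        settled[airport] = now
        slack = MIN_CONNECT if legs else 0  # no connection time before the first leg
        for seg in by_origin.get(airport, []):
            if seg.depart >= now + slack and seg.dest not in settled:
                heapq.heappush(heap, (seg.arrive, tie, seg.dest, legs + [seg]))
                tie += 1
    return None

segs = [
    Segment("XX101", "SEA", "DEN", 480, 660),   # 08:00 -> 11:00
    Segment("XX202", "DEN", "JFK", 690, 990),   # 11:30 -> 16:30, 30min connect: too tight
    Segment("XX204", "DEN", "JFK", 750, 1050),  # 12:30 -> 17:30
    Segment("XX303", "SEA", "ORD", 420, 780),   # 07:00 -> 13:00
    Segment("XX404", "ORD", "JFK", 840, 1020),  # 14:00 -> 17:00
]
print([s.flight for s in earliest_itinerary(segs, "SEA", "JFK")])  # ['XX303', 'XX404']
```

The connection rule rules out XX202, even though it would arrive earliest. The via-ORD itinerary therefore beats the via-DEN one.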

Note: after the demo ... they started wringing their hands ... eventually saying they hadn't really wanted me to do all the impossible things ... they just wanted to be able to tell the parent company's board that I was working on it (in a prior life, I had known one of the board members at IBM). Part of doing the impossible things involved automating a bunch of work that was being done manually by some 800 people ... work which would then be made obsolete. They wouldn't let me at "fares", which represented about 40% of the mainframe workload.

some past posts mentioning ROUTES:

https://www.garlic.com/~lynn/2021f.html#8 Air Traffic System

https://www.garlic.com/~lynn/2021.html#71 Airline Reservation System

https://www.garlic.com/~lynn/2017k.html#63 SABRE after the 7090

https://www.garlic.com/~lynn/2016f.html#109 Airlines Reservation Systems

--

From: Lynn Wheeler <lynn@garlic.com> Subject: Channel I/O Date: 14 Feb 2022 Blog: Facebook re:

IBM Los Gatos VLSI lab was trying to help the IBM Burlington VLSI center. The problem was MVT->VS2/SVS->VS2/MVS ... In the transition to MVS, they gave every application its own 16mbyte virtual address space. However, OS/360 was a heavily pointer-passing API paradigm ... and so an 8mbyte image of the MVS kernel was mapped into every application's 16mbyte virtual address space (leaving just 8mbytes for applications). Then, because subsystems were also mapped into their own (separate) 16mbyte virtual address spaces ... they had to come up with the Common Segment Area (1mbyte CSA) for passing parameters (where the address is the same for both applications and subsystems); the CSA takes 1mbyte, leaving only 7mbytes for applications. However, the CSA requirement is somewhat proportional to the number of concurrent applications and subsystems, and by 3033 time, many customer CSAs (renamed Common System Area) were 5-6mbytes (and threatening to become eight), leaving only 2-3mbytes for application programs (threatening to become zero).
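As a rough illustration of the address-space squeeze described above, the arithmetic can be written out in a few lines of Python. This is a sketch only: the constants are the sizes quoted in the post (16mbyte address space from 24-bit addressing, 8mbyte kernel image), and the function name application_space_mb is hypothetical.

```python
# Hypothetical helper: mbytes left for an application in an MVS virtual
# address space, using the sizes quoted in the post (all values in mbytes).
ADDRESS_SPACE_MB = 16   # 24-bit addressing: 2**24 bytes = 16mbytes
KERNEL_IMAGE_MB = 8     # MVS kernel image mapped into every address space


def application_space_mb(csa_mb: int) -> int:
    """Return mbytes left for the application after the kernel image and CSA."""
    remaining = ADDRESS_SPACE_MB - KERNEL_IMAGE_MB - csa_mb
    return max(remaining, 0)  # cannot go below zero


# Original 1mbyte CSA, typical 3033-era 5-6mbyte CSAs, and the feared 8mbyte CSA.
for csa in (1, 5, 6, 8):
    print(f"CSA {csa}mbytes -> {application_space_mb(csa)}mbytes for applications")
```

With a 1mbyte CSA this gives the 7mbytes mentioned for the Burlington Fortran application, 5-6mbyte CSAs leave 2-3mbytes, and an 8mbyte CSA leaves nothing.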

IBM Burlington had custom MVS systems (168-3 & 3033) with only a one megabyte CSA, dedicated to the heavily used 7mbyte Fortran VLSI application (although there was a constant problem with any enhancement pushing the application over the 7mbytes available). Los Gatos did some experimentation porting to VM370/CMS (allowing almost the full 16mbytes for application use), but there was a problem with the CMS 64kbyte OS simulation implementation, which didn't fully support the Burlington VLSI Fortran application. Los Gatos then found that with 12kbytes more of OS simulation code, they could easily run the Burlington VLSI Fortran application and more than double the space available for its runtime execution (easily handling all the enhancements that had been deferred because of the lack of execution space under MVS).

recent posts mentioning MVS common segment/system area:

https://www.garlic.com/~lynn/2022.html#70 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2021k.html#113 IBM Future System

https://www.garlic.com/~lynn/2021i.html#17 Versatile Cache from IBM

https://www.garlic.com/~lynn/2021h.html#70 IBM Research, Adtech, Science Center

https://www.garlic.com/~lynn/2021g.html#34 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021b.html#63 Early Computer Use

https://www.garlic.com/~lynn/2020.html#36 IBM S/360 - 370

https://www.garlic.com/~lynn/2019d.html#115 Assembler :- PC Instruction

https://www.garlic.com/~lynn/2019b.html#94 MVS Boney Fingers

https://www.garlic.com/~lynn/2019b.html#25 Online Computer Conferencing

https://www.garlic.com/~lynn/2019.html#38 long-winded post thread, 3033, 3081, Future System

https://www.garlic.com/~lynn/2019.html#18 IBM assembler

https://www.garlic.com/~lynn/2018c.html#23 VS History

https://www.garlic.com/~lynn/2018.html#92 S/360 addressing, not Honeywell 200

https://www.garlic.com/~lynn/2017i.html#48 64 bit addressing into the future

https://www.garlic.com/~lynn/2017e.html#40 Mainframe Family tree and chronology 2

https://www.garlic.com/~lynn/2017d.html#61 Paging subsystems in the era of bigass memory

https://www.garlic.com/~lynn/2016.html#78 Mainframe Virtual Memory

https://www.garlic.com/~lynn/2015h.html#116 Is there a source for detailed, instruction-level performance info?

https://www.garlic.com/~lynn/2015g.html#90 IBM Embraces Virtual Memory -- Finally

https://www.garlic.com/~lynn/2015b.html#60 ou sont les VAXen d'antan, was Variable-Length Instructions that aren't

https://www.garlic.com/~lynn/2015b.html#46 Connecting memory to 370/145 with only 36 bits

https://www.garlic.com/~lynn/2015b.html#40 OS/360

https://www.garlic.com/~lynn/2014k.html#82 Do we really need 64-bit DP or is 48-bit enough?

https://www.garlic.com/~lynn/2014k.html#78 Do we really need 64-bit DP or is 48-bit enough?

https://www.garlic.com/~lynn/2014k.html#39 1950: Northrop's Digital Differential Analyzer

https://www.garlic.com/~lynn/2014k.html#36 1950: Northrop's Digital Differential Analyzer

https://www.garlic.com/~lynn/2014i.html#86 z/OS physical memory usage with multiple copies of same load module at different virtual addresses

https://www.garlic.com/~lynn/2014g.html#83 Costs of core

https://www.garlic.com/~lynn/2014d.html#62 Difference between MVS and z / OS systems

--

From: Lynn Wheeler <lynn@garlic.com> Subject: CP-67 Date: 15 Feb 2022 Blog: Facebook CP-67

some of the MIT CTSS/7094 people went to the 5th flr Project MAC to do MULTICS (operating system written in PLI).

https://en.wikipedia.org/wiki/Compatible_Time-Sharing_System

https://en.wikipedia.org/wiki/Multics

Others went to the IBM science center on 4th flr to do virtual machine (CP40/CMS & CP67/CMS), internal network, lots of performance tools, etc. CTSS RUNOFF was redone for CMS as SCRIPT. In 1969, GML was invented at CSC and GML tag processing added to SCRIPT (a decade later GML morphs into ISO standard SGML, and after another decade morphs into HTML at CERN)

https://en.wikipedia.org/wiki/Cambridge_Scientific_Center

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

GML posts

https://www.garlic.com/~lynn/submain.html#sgml

trivia: CSC wanted a model 50 for the virtual memory hardware changes ... but all the spare 50s were going to FAA ATC project ... so had to settle for 360/40 for the hardware changes ... and created cp40/cms. CP40/CMS morphs into CP67/CMS when 360/67 becomes available standard with virtual memory. Lots more history (including Les' CP40)

https://www.leeandmelindavarian.com/Melinda#VMHist

quote here

https://www.leeandmelindavarian.com/Melinda/25paper.pdf

Since the early time-sharing experiments used base and limit registers for relocation, they had to roll in and roll out entire programs when switching users....Virtual memory, with its paging technique, was expected to reduce significantly the time spent waiting for an exchange of user programs.

What was most significant was that the commitment to virtual memory was backed with no successful experience. A system of that period that had implemented virtual memory was the Ferranti Atlas computer, and that was known not to be working well. What was frightening is that nobody who was setting his virtual memory direction at IBM knew why Atlas didn't work.(23)

... from "CP/40 -- The Origin of VM/370"

https://www.leeandmelindavarian.com/Melinda/JimMarch/CP40_The_Origin_of_VM370.pdf

What was most significant to the CP/40 team was the commitment to virtual memory was backed with no successful experience. A system of that period that had implemented virtual memory was the Ferranti Atlas computer and that was known to not be working well. What was frightening is that nobody who was setting this virtual memory direction at IBM knew why Atlas didn't work.

.... snip ...

CSC delivered CP67 to the univ. in Jan68 (after CSC itself and Lincoln Labs); it had no page thrashing controls and a primitive page replacement algorithm. I added dynamic adaptive page thrashing controls, a highly efficient page replacement algorithm, and dynamic adaptive resource management and scheduling ... significantly rewrote lots of code to reduce pathlengths, redid DASD i/o for seek and rotational optimization ... and a few other things.
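The post doesn't spell out how the page replacement works, and the linked page management posts are filed under "clock". As a hedged illustration of that general family only, here is a generic textbook clock (second-chance) replacer in Python. The class and method names are hypothetical, and this is not the CP67 code.

```python
from typing import List, Optional


class ClockReplacer:
    """Generic clock (second-chance) page replacement over a fixed set of frames.

    Illustrative textbook sketch only; not the CP67 implementation.
    """

    def __init__(self, num_frames: int) -> None:
        self.frames: List[Optional[int]] = [None] * num_frames  # page held by each frame
        self.referenced: List[bool] = [False] * num_frames      # simulated reference bits
        self.hand = 0                                           # current clock hand position

    def access(self, page: int) -> bool:
        """Touch a page; return True if the access caused a page fault."""
        if page in self.frames:
            # Hit: just set the reference bit, as hardware would.
            self.referenced[self.frames.index(page)] = True
            return False
        # Fault: sweep the hand, giving referenced pages a second chance by
        # clearing their bits, until an empty or unreferenced frame is found.
        while self.frames[self.hand] is not None and self.referenced[self.hand]:
            self.referenced[self.hand] = False
            self.hand = (self.hand + 1) % len(self.frames)
        # Replace the victim (or fill the empty frame) and advance the hand.
        self.frames[self.hand] = page
        self.referenced[self.hand] = True
        self.hand = (self.hand + 1) % len(self.frames)
        return True


replacer = ClockReplacer(3)
faults = [replacer.access(p) for p in [1, 2, 3, 1, 4, 1, 5]]
print(sum(faults), "faults; frames now", replacer.frames)
```

The sweep always terminates: after at most one full revolution every reference bit has been cleared, so some frame becomes a victim. The appeal of this family is that a hit costs only a bit-set, with the scanning work deferred to fault time.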

recent post about some of the path length work

https://www.garlic.com/~lynn/2022b.html#13 360 Performance

part of my 1968 SHARE meeting presentation on the path length work

https://www.garlic.com/~lynn/94.html#18

also mentions old 2011 post

https://www.garlic.com/~lynn/2011c.html#72

about re-giving the oct86 presentation I made at a SEAS meeting on the history of VM370 performance

https://www.garlic.com/~lynn/hill0316g.pdf

dynamic adaptive resource management posts

https://www.garlic.com/~lynn/subtopic.html#fairshare

page management posts

https://www.garlic.com/~lynn/subtopic.html#clock

--

From: Lynn Wheeler <lynn@garlic.com> Subject: To Be Or To Do Date: 16 Feb 2022 Blog: Facebook Boyd Quote:

Boyd posts & URLs

https://www.garlic.com/~lynn/subboyd.html

past Rochefort reference

https://www.garlic.com/~lynn/2017i.html#86 WW II cryptography

military-industrial complex

https://www.garlic.com/~lynn/submisc.html#military.industrial.complex

past posts with "forgive you for being right" reference

https://www.garlic.com/~lynn/2021d.html#80 OSS in China: Prelude to Cold War

https://www.garlic.com/~lynn/2021.html#83 Kinder/Gentler IBM

https://www.garlic.com/~lynn/2015d.html#14 3033 & 3081 question

https://www.garlic.com/~lynn/2014f.html#78 Over in the Mainframe Experts Network LinkedIn group

https://www.garlic.com/~lynn/2014f.html#69 Is end of mainframe near ?

https://www.garlic.com/~lynn/2013f.html#78 The cloud is killing traditional hardware and software

https://www.garlic.com/~lynn/2013f.html#57 The cloud is killing traditional hardware and software

https://www.garlic.com/~lynn/2012d.html#40 Strategy subsumes culture

https://www.garlic.com/~lynn/2010b.html#38 Happy DEC-10 Day

https://www.garlic.com/~lynn/2009r.html#50 "Portable" data centers

https://www.garlic.com/~lynn/2009h.html#74 My Vintage Dream PC

https://www.garlic.com/~lynn/2008m.html#30 Taxes

https://www.garlic.com/~lynn/2003i.html#71 Offshore IT

https://www.garlic.com/~lynn/2002k.html#61 arrogance metrics (Benoits) was: general networking

--

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Cloud to offer Z-series mainframes for first time - albeit for test and dev. Date: 16 Feb 2022 Blog: Facebook IBM Cloud to offer Z-series mainframes for first time - albeit for test and dev. z/OS VMs coming sometime in the second half of 2022