From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM Quota
Date: 12 June 2022
Blog: Facebook

re:

trivia: IBM asks me to teach a one week cp67 class ... science center brought out CP67/CMS to install at univ ... and I got to play with it on my weekend time (univ. had been shutting down the datacenter on weekends and I had it all to myself ... back to when I was hired as student programmer to reimplement 1401 MPIO on 360/30 ... although 48hrs w/o sleep made monday classes a little hard). About 6 months after science center brought out cp67/cms to the univ ... they were having a cp67/cms one week class at the beverly hills hilton. I arrived sunday night and got asked to teach the cp67 class ... friday, two days before, the cp67 people had resigned to join a commercial online cp67 startup.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

virtual machine commercial online operations

https://www.garlic.com/~lynn/submain.html#timeshare

past posts mentioning beverly hills hilton class:

https://www.garlic.com/~lynn/2017g.html#11 Mainframe Networking problems

https://www.garlic.com/~lynn/2010g.html#68 What is the protocal for GMT offset in SMTP (e-mail) header

https://www.garlic.com/~lynn/2003d.html#72 cp/67 35th anniversary

https://www.garlic.com/~lynn/2001m.html#55 TSS/360

https://www.garlic.com/~lynn/99.html#131 early hardware

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM Games
Date: 12 June 2022
Blog: Facebook

Date: 08/04/80 16:18:25

MFC&CSP written by MFC in PLI ... multiuser client/server spacewar game (still have copy of mff2.pli), client 3270 display spacewar screen (above ref. was 3270 emulation on ascii glass teletype 3101) ... used the internal SPM (originally done by IBM Pisa Science Center for CP67 and ported to vm370 ... superset of combined VMCF, IUCV, and SMSG) ... was supported by RSCS/VNET ... so clients could access via the internal network, didn't have to be on same machine as server. However, almost immediately robot players started appearing, winning all the games ... Non-linear power increase was added for when move intervals dropped below human reaction time, in order to somewhat level the playing field.
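The post does not record the actual formula behind the non-linear power increase. As a minimal sketch of how such a penalty could work, the following assumes a 200 ms reaction threshold and a quadratic cost curve; both values, and the names `HUMAN_REACTION_MS`, `BASE_COST`, and `move_cost`, are hypothetical and not taken from the original game.

```python
# Hypothetical sketch: the real spacewar formula is not given in the post.
HUMAN_REACTION_MS = 200.0   # assumed threshold, not from the source
BASE_COST = 1.0             # assumed power cost of one move at human speed


def move_cost(interval_ms: float) -> float:
    """Power charged for a move made interval_ms after the previous move."""
    if interval_ms <= 0:
        raise ValueError("interval must be positive")
    if interval_ms >= HUMAN_REACTION_MS:
        return BASE_COST                      # human-speed moves: flat cost
    ratio = HUMAN_REACTION_MS / interval_ms   # > 1 for faster-than-human moves
    return BASE_COST * ratio ** 2             # cost grows non-linearly (quadratic here)


if __name__ == "__main__":
    for ms in (400, 200, 100, 20):
        print(ms, move_cost(ms))
```

With a curve like this, a robot moving ten times faster than the threshold pays a hundred times the power per move, so speed alone stops being a winning strategy.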

other "game" trivia ... after transferring to san jose research, I got to wander around ibm and non-ibm datacenters in silicon valley. One of the places was online commercial TYMSHARE

https://en.wikipedia.org/wiki/Tymshare

In aug1976, they had provided their CMS-based online computer conferencing system "free" to SHARE as VMSHARE (and later PCSHARE) ... archives

http://vm.marist.edu/~vmshare

and I had arranged to get monthly tape dump of all files for making available inside IBM (may have also contributed to having been blamed for online computer conferencing on the IBM internal network in the late 70s and early 80s ... precursor to TOOLSRUN). The biggest problem I had was with IBM lawyers worried that internal IBM employees might be contaminated by exposure to customer information (and/or find out that they were being fed customer misinformation). On one stop by TYMSHARE in the 70s, they demonstrated a game called ADVENTURE that they had found on (stanford) SAIL PDP10 and ported to VM370/CMS. I got a copy for making available inside IBM (accumulating quite a collection of "demo" programs ... i.e. games).

https://en.wikipedia.org/wiki/Adventure_game

Disclaimer: most IBM 3270 logon screens included "For IBM Business Use Only" ... the SJR 3270 logon screens had "For IBM Management Approved Use Only" (which could include "demo" programs).

some recent VMSHARE refs:

https://www.garlic.com/~lynn/2022c.html#62 IBM RESPOND

https://www.garlic.com/~lynn/2022c.html#28 IBM Cambridge Science Center

https://www.garlic.com/~lynn/2022c.html#8 Cloud Timesharing

https://www.garlic.com/~lynn/2022b.html#126 Google Cloud

https://www.garlic.com/~lynn/2022b.html#118 IBM Disks

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#93 IBM Color 3279

https://www.garlic.com/~lynn/2022b.html#49 4th generation language

https://www.garlic.com/~lynn/2022b.html#34 IBM Cloud to offer Z-series mainframes for first time - albeit for test and dev

https://www.garlic.com/~lynn/2022b.html#30 Online at home

https://www.garlic.com/~lynn/2022b.html#28 Early Online

https://www.garlic.com/~lynn/2022.html#123 SHARE LSRAD Report

https://www.garlic.com/~lynn/2022.html#66 HSDT, EARN, BITNET, Internet

https://www.garlic.com/~lynn/2022.html#65 CMSBACK

https://www.garlic.com/~lynn/2022.html#57 Computer Security

https://www.garlic.com/~lynn/2022.html#36 Error Handling

https://www.garlic.com/~lynn/2021k.html#104 DUMPRX

https://www.garlic.com/~lynn/2021k.html#102 IBM CSO

https://www.garlic.com/~lynn/2021k.html#98 BITNET XMAS EXEC

https://www.garlic.com/~lynn/2021k.html#50 VM/SP crashing all over the place

https://www.garlic.com/~lynn/2021j.html#77 IBM 370 and Future System

https://www.garlic.com/~lynn/2021j.html#71 book review: Broad Band: The Untold Story of the Women Who Made the Internet

https://www.garlic.com/~lynn/2021j.html#68 MTS, 360/67, FS, Internet, SNA

https://www.garlic.com/~lynn/2021j.html#59 Order of Knights VM

https://www.garlic.com/~lynn/2021j.html#48 SUSE Reviving Usenet

https://www.garlic.com/~lynn/2021i.html#99 SUSE Reviving Usenet

https://www.garlic.com/~lynn/2021i.html#95 SUSE Reviving Usenet

https://www.garlic.com/~lynn/2021i.html#77 IBM ACP/TPF

https://www.garlic.com/~lynn/2021i.html#75 IBM ITPS

https://www.garlic.com/~lynn/2021h.html#68 TYMSHARE, VMSHARE, and Adventure

https://www.garlic.com/~lynn/2021h.html#47 Dynamic Adaptive Resource Management

https://www.garlic.com/~lynn/2021h.html#1 Cloud computing's destiny

https://www.garlic.com/~lynn/2021g.html#90 Was E-mail a Mistake? The mathematics of distributed systems suggests that meetings might be better

https://www.garlic.com/~lynn/2021g.html#45 Cloud computing's destiny

https://www.garlic.com/~lynn/2021g.html#27 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021f.html#20 1401 MPIO

https://www.garlic.com/~lynn/2021e.html#55 SHARE (& GUIDE)

https://www.garlic.com/~lynn/2021e.html#30 Departure Email

https://www.garlic.com/~lynn/2021e.html#8 Online Computer Conferencing

https://www.garlic.com/~lynn/2021d.html#42 IBM Powerpoint sales presentations

https://www.garlic.com/~lynn/2021c.html#12 Z/VM

https://www.garlic.com/~lynn/2021c.html#5 Z/VM

https://www.garlic.com/~lynn/2021b.html#84 1977: Zork

https://www.garlic.com/~lynn/2021b.html#81 The Golden Age of computer user groups

https://www.garlic.com/~lynn/2021b.html#69 Fumble Finger Distribution list

https://www.garlic.com/~lynn/2021.html#85 IBM Auditors and Games

https://www.garlic.com/~lynn/2021.html#72 Airline Reservation System

https://www.garlic.com/~lynn/2021.html#25 IBM Acronyms

https://www.garlic.com/~lynn/2021.html#14 Unbundling and Kernel Software

https://www.garlic.com/~lynn/2020.html#28 50 years online at home

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM Games
Date: 13 June 2022
Blog: Facebook

re:

other SPM trivia ... it was also used for developing numerous automated operator functions.

Note: in the 60s, the science center and two commercial online service bureaus (spinoffs from the science center) did a lot of CP67 work for 7x24 availability operation (including darkroom, with no human present). At the time, IBM leased/rented machines with charges (even "funny money" for IBM internal datacenters) based on the processor "system meter" ... which ran whenever any processor and/or channel was busy. Special channel programs were done for online terminals ... which would go idle, but were instant on for arriving characters (allowing system meter to stop when system was idle). Trivia: all processors and channels had to be idle for at least 400ms before the system meter would stop; long after IBM had converted to sales, MVS still had a timer task that woke up every 400ms, guaranteeing system meter would never stop.
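The effect of the 400ms rule can be illustrated with a small simulation. This is only a sketch under stated assumptions: the meter is modeled as running during every busy interval and through up to 400ms of the idle time that follows, and the workloads (`metered_ms`, the interval lists, the 1ms timer wakeup) are made up for illustration; only the 400ms threshold comes from the text.

```python
IDLE_THRESHOLD_MS = 400  # from the text: idle time required before the meter stops


def metered_ms(busy_intervals, end_ms):
    """Total ms the meter runs up to end_ms.

    busy_intervals: sorted, non-overlapping (start_ms, end_ms) pairs during
    which some processor or channel is busy. Assumption of this sketch: the
    meter keeps running for the first IDLE_THRESHOLD_MS of each idle gap,
    then stops until the next busy interval begins.
    """
    total = 0
    for i, (start, end) in enumerate(busy_intervals):
        total += end - start                       # meter runs while busy
        if i + 1 < len(busy_intervals):
            next_start = busy_intervals[i + 1][0]
        else:
            next_start = end_ms
        total += min(next_start - end, IDLE_THRESHOLD_MS)  # idle tail before stop
    return total


if __name__ == "__main__":
    # Hypothetical terminal workload: two short bursts in 20 seconds.
    terminals = [(0, 100), (10_000, 10_100)]
    # Hypothetical timer task: 1 ms of work every 400 ms.
    timer = [(t, t + 1) for t in range(0, 20_000, 400)]
    print(metered_ms(terminals, 20_000))  # meter stops during the long idle gaps
    print(metered_ms(timer, 20_000))      # idle gaps never reach 400 ms
```

In the timer-task case every idle gap is shorter than the threshold, so the metered time equals the full wall-clock interval, which is the behavior described for MVS.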

Other 60s 7x24 work was system dump automatically written to disk file (instead of printer) and system would automagically re-ipl (with no human intervention).

IPCS, a large assembler application, was developed for processing dump files (1st cp67 and later vm370) ... although function was little different from dealing with real paper dump. With appearance of REX (before it was renamed REXX and released to customers), I wanted to demo that it wasn't just another pretty scripting language ... and chose to reimplement the (at the time vm370) dump application in REX with ten times the performance (compared to the assembler implementation, some sleight of hand for interpreted REX) and ten times the function ... working half time over three months. I finished early, so started implementation of automagic library that looked for most common failure signatures.

I thought it would be released to customers in place of the current application, but for various reasons it wasn't, even tho it was in use by nearly every PSR and internal datacenter. Eventually I got permission to give presentations at user group meetings on the implementation ... and within a few months, non-IBM implementations started to appear.

Trivia: old email from the 3090 service processor group (3092)

https://en.wikipedia.org/wiki/IBM_3090

https://web.archive.org/web/20230719145910/https://www.ibm.com/ibm/history/exhibits/mainframe/mainframe_PP3090.html

about including it as part of 3092 service processor

https://www.garlic.com/~lynn/2010e.html#email861031

https://www.garlic.com/~lynn/2010e.html#email861223

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

dumprx posts

https://www.garlic.com/~lynn/submain.html#dumprx

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM Games
Date: 13 June 2022
Blog: Facebook

re:

late 70s & early 80s, I had been blamed for online computer conferencing (precursor to TOOLSRUN and the internal IBM forums). It really took off spring 1981 when I distributed a trip report of visit to Jim Gray at Tandem (only about 300 directly participated, but claims upwards of 25,000 were reading; folklore is that when corporate executive committee was told, 5of6 wanted to fire me). From IBM Jargon Dictionary:

https://web.archive.org/web/20241204163110/https://comlay.net/ibmjarg.pdf

Tandem Memos - n. Something constructive but hard to control; a fresh of breath air (sic). That's another Tandem Memos. A phrase to worry middle management. It refers to the computer-based conference (widely distributed in 1981) in which many technical personnel expressed dissatisfaction with the tools available to them at that time, and also constructively criticized the way products were [are] developed. The memos are required reading for anyone with a serious interest in quality products. If you have not seen the memos, try reading the November 1981 Datamation summary.

... snip ...

online computer conferencing posts

https://www.garlic.com/~lynn/subnetwork.html#cmc

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: Retiree sues IBM alleging shortchanged benefits
Date: 14 June 2022
Blog: Facebook

Retiree sues IBM alleging shortchanged benefits

"Barbarians at the Gate"; AMEX was in competition with KKR for

(private equity) LBO (reverse IPO) of RJR and KKR wins. KKR runs into

trouble and hires away AMEX president to help with RJR (later goes on

to be CEO of IBM)

https://en.wikipedia.org/wiki/Barbarians_at_the_Gate:_The_Fall_of_RJR_Nabisco

IBM had one of the largest losses in history of US companies and was being reorged into the 13 baby blues in preparation for breaking up the company (article gone 404, but lives on at wayback machine).

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

may also work

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

I had already left IBM, but got a call from the bowels of Armonk asking if I could help with breakup of the company. Lots of business units were using supplier contracts in other units via MOUs. After the breakup, all of these contracts would be in different companies ... all of those MOUs would have to be cataloged and turned into their own contracts. However, before getting started, the board brings in a new CEO and reverses the breakup ... new CEO also used some of the same techniques used at RJR (gone 404, but lives on at wayback machine).

https://web.archive.org/web/20181019074906/http://www.ibmemployee.com/RetirementHeist.shtml

above has some IBM-related specifics from

https://www.amazon.com/Retirement-Heist-Companies-Plunder-American-ebook/dp/B003QMLC6K/

pension posts

https://www.garlic.com/~lynn/submisc.html#pensions

gerstner posts

https://www.garlic.com/~lynn/submisc.html#gerstner

private equity posts

https://www.garlic.com/~lynn/submisc.html#private.equity

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: RED and XEDIT fullscreen editors
Date: 15 June 2022
Blog: Facebook

RED and XEDIT fullscreen editors, some old email

RED is much more mature (in use around IBM for some time before XEDIT

existed), more feature/function, and faster

https://www.garlic.com/~lynn/2006u.html#email790606

apology to the RED author: I had sent email to Endicott about RED being better than XEDIT, and the response was that it wasn't Endicott's fault they shipped something not as good as RED, it was the RED author's fault that RED was much better than XEDIT.

https://www.garlic.com/~lynn/2006u.html#email800311

https://www.garlic.com/~lynn/2006u.html#email800312

after joining IBM, one of my hobbies was enhanced production operating systems for internal datacenters, originally for CP67 (one of the earliest & long time customers was online sales&marketing support HONE), then CSC/VM for VM370 and after transferring to San Jose Research, SJR/VM

https://www.garlic.com/~lynn/2006u.html#email800429

https://www.garlic.com/~lynn/2006u.html#email800501

originally in the morph from CP67->VM370 lots of stuff was dropped

(including multiprocessor support and lots of stuff I had done as

undergraduate) and/or greatly simplified, old email starting to

migrate lots of CP67 to VM370-R2PLC9 (for CSC/VM)

https://www.garlic.com/~lynn/2006w.html#email750102

https://www.garlic.com/~lynn/2006w.html#email750430

some of the first stuff I moved to VM370 was my CP67 stress test benchmarking (trivia: originally did "autolog" command for synthetic benchmarking, but it got picked up for a lot of automated operations) ... which consistently crashed VM370 ... so the next items were all the CP67 internal kernel serialization and other integrity features (in order to finish benchmarks w/o VM370 crashing). Later added multiprocessor support to CSC/VM (VM370 release3 base), originally for consolidated US HONE datacenter in Palo Alto, so they could add a 2nd processor to each of their 168 systems.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

csc/vm (&/or sjr/vm) posts

https://www.garlic.com/~lynn/submisc.html#cscvm

benchmarking posts

https://www.garlic.com/~lynn/submain.html#benchmark

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: RED and XEDIT fullscreen editors
Date: 15 June 2022
Blog: Facebook

re:

Note: co-worker at the science center was responsible for the internal

network ... later also used for the corporate sponsored

univ. "BITNET":

https://en.wikipedia.org/wiki/BITNET

the original distributed development project was between the science center and endicott to implement virtual memory 370 virtual machines in CP67 (running on 360/67). Then there was 370 virtual memory modification to CP67 ("CP67I") to run in those 370 virtual machines. This was in regular use a year before the first engineering 370 (370/145) with virtual memory was operational (in fact "CP67I" was first thing IPL'ed on that machine, which had hardware glitch ... which required a q&d CP67I temporary software change to get it up and running). Two people then came out from San Jose and did 3330 & 2305 device support for CP67I ... known as CP67SJ ... which was in regular use throughout the company on early 370s with virtual memory ... even for a time after VM370 became available. A lot of this was during the Future System period ... which was completely different from 370 and was going to completely replace 370 ... internal politics was also killing off 370 efforts ... and the lack of new 370 is credited with giving 370 clone makers their market foothold. I continued to work on 360&370 all during the FS period ... even periodically ridiculing what they were doing (which wasn't exactly career enhancing activity). When FS imploded, there was mad rush to get stuff back into the 370 product pipeline ... including quick&dirty 3033 and 3081; a lot more info

http://www.jfsowa.com/computer/memo125.htm

https://people.computing.clemson.edu/~mark/fs.html

reference to Future System failure resulted in major IBM culture

change, from Ferguson & Morris, "Computer Wars: The Post-IBM World",

Time Books, 1993

http://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

.... reference to "Future System" ...

"and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrong headedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive."

... snip ...

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

bitnet posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: RED and XEDIT fullscreen editors
Date: 15 June 2022
Blog: Facebook

re:

Note2: During VM370 Release 2, a lot of resources were diverted to FS ... so coming up for Release 3 ... there wasn't a lot of stuff ready ... as a result, a little of my R2PLC9-based internal CSC/VM was picked up for VM370 Release 3 ... this included a small subset of my shared segment executables (which is referenced in one of the previous "RED" emails ... about no work having yet been done to move "RED" into read-only "shared segment").

23Jun1969 unbundling announcement started to charge for SE services, maintenance and (application) software (but they were able to make the case that kernel software should still be free). In the wake of FS (contributing to rise of clone 370s and then FS imploding and mad rush to get stuff back into 370 product pipeline), there was decision to transition to charging for kernel software (starting out with charging for kernel add-ons, and then increasing until all kernel software was charged for). An additional selection of my internal VM370 changes was chosen as the guinea pig for "charged-for" kernel add-ons (initially for Release 3 VM370). Trivia: kernel structure reorg for multiprocessor support was included, but not the actual multiprocessor support. Then the decision was made to release actual multiprocessor support for Release 4. One of the initial "charged-for" rules was that new hardware support wouldn't be charged for (and couldn't have a pre-req of charged-for components). However, the (multiprocessor) kernel restructure was already part of my "charged-for" add-on ... the solution was to move around 90% of the code from my "charged-for" add-on into the "free" base (w/o changing the price of my kernel add-on) for Release 4.

Eventually in the 80s, all kernel code became charged-for ... along

with the onset of the "OCO-wars" (make all code "object-code only",

i.e. no free source). Some of the OCO-wars can be seen in VMSHARE

posts ... aka Aug1976 TYMSHARE

https://en.wikipedia.org/wiki/Tymshare

provided their CMS-based online, computer conference system "free" to

the IBM SHARE user group

https://www.share.org/

VMSHARE archives here

http://vm.marist.edu/~vmshare

search of "MEMO", "NOTE", & "PROB" turns up 21 discussions for "OCO

WARS" ... one of the comments

Append on 03/31/89 at 08:04 by Melinda Varian <BITNET: MAINT@PUCC>:

Steve, "VMFblah", "VMFbanana", etc., are generic terms to describe a

collection of "tools" all of whose names start with the letters "VMF".

Yes, we might just as well use "VMFxxxxx" here.

However, you should understand that these "tools" were introduced to

do object-level maintenance, thus replacing our beloved, very elegant,

extremely reliable source-level maintenance tools. The VMFxxxxx's

have proven to be terribly buggy and difficult to use. They have

greatly decreased both our peace of mind and the stability of our

systems while greatly increasing our work load, for no other purpose

than to allow IBM to introduce OCO, which many of us view as VM's

death warrant.

Thus, I fear, our resentment shows.

Although the terms we use here are mild compared to those we use in

private, there is no point in our gratuitously offending the IBMers

who listen here, so we'll try to lighten it up. At the same time, I

suspect that too many IBMers equate criticism with disloyalty. You

need to keep in mind that the people here are the ones who are still

loyal to IBM, who are still trying to keep it from shooting itself in

the foot.

Melinda

*** APPENDED 03/31/89 08:04:59 BY PU/MELINDA ***

... snip ...

... other trivia: Melinda's history works

https://www.leeandmelindavarian.com/Melinda#VMHist

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

csc/vm (&/or sjr/vm) posts

https://www.garlic.com/~lynn/submisc.html#cscvm

smp, multiprocessor, compare&swap, etc posts

https://www.garlic.com/~lynn/subtopic.html#smp

page mapped filesystem

https://www.garlic.com/~lynn/submain.html#mmap

location independent share segment posts

https://www.garlic.com/~lynn/submain.html#adcon

23jun69 unbundling announce posts

https://www.garlic.com/~lynn/submain.html#unbundle

some recent posts mentioning OCO-wars

https://www.garlic.com/~lynn/2022b.html#118 IBM Disks

https://www.garlic.com/~lynn/2022b.html#30 Online at home

https://www.garlic.com/~lynn/2021k.html#121 Computer Performance

https://www.garlic.com/~lynn/2021k.html#50 VM/SP crashing all over the place

https://www.garlic.com/~lynn/2021h.html#55 even an old mainframer can do it

https://www.garlic.com/~lynn/2021g.html#27 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021d.html#2 What's Fortran?!?!

https://www.garlic.com/~lynn/2021c.html#5 Z/VM

https://www.garlic.com/~lynn/2021.html#14 Unbundling and Kernel Software

https://www.garlic.com/~lynn/2018e.html#91 The (broken) economics of OSS

https://www.garlic.com/~lynn/2018d.html#48 IPCS, DUMPRX, 3092, EREP

https://www.garlic.com/~lynn/2018b.html#6 S/360 addressing, not Honeywell 200

https://www.garlic.com/~lynn/2018.html#43 VSAM usage for ancient disk models

https://www.garlic.com/~lynn/2017j.html#16 IBM open sources it's JVM and JIT code

https://www.garlic.com/~lynn/2017g.html#101 SEX

https://www.garlic.com/~lynn/2017g.html#23 Eliminating the systems programmer was Re: IBM cuts contractor billing by 15 percent (our else)

https://www.garlic.com/~lynn/2017e.html#18 [CM] What was your first home computer?

https://www.garlic.com/~lynn/2017.html#59 The ICL 2900

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: VM Workshop ... VM/370 50th birthday
Date: 16 June 2022
Blog: Facebook

from long ago and far away:

History Presentation was made at SEAS 5-10Oct1986 (European SHARE, IBM

mainframe user group), I gave it most recently at WashDC Hillgang user

group 16Mar2011

https://www.garlic.com/~lynn/hill0316g.pdf

HSDT spool file system was for RSCS/VNET which used VM370 spool file system with a synchronous diagnose to access 4k blocked records. On moderately loaded VM370 RSCS/VNET might get only 5-8 4k records/sec (aggregate both read&write). I needed 75 4k records/sec for just one T1 link. I implemented VM370 spool file system in Pascal running in virtual address space.
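The gap between what the spool file system delivered and what a T1 link needed can be checked with back-of-envelope arithmetic. The sketch below assumes a T1 payload rate of 1.536 Mbit/s (24 channels of 64 kbit/s, inside the 1.544 Mbit/s line rate) and that "4k" means 4096 bytes; the function name `records_per_sec` is illustrative only. The post does not say how the 75 records/sec figure was derived.

```python
T1_PAYLOAD_BPS = 1_536_000   # 24 channels x 64 kbit/s (line rate is 1.544 Mbit/s)
RECORD_BYTES = 4096          # assumption: "4k" spool record = 4096 bytes


def records_per_sec(bits_per_sec: float, record_bytes: int = RECORD_BYTES) -> float:
    """How many records per second are needed to keep a link of this rate full."""
    return bits_per_sec / 8 / record_bytes


if __name__ == "__main__":
    one_way = records_per_sec(T1_PAYLOAD_BPS)
    both_ways = 2 * one_way            # full-duplex link, both directions saturated
    print(f"one direction:   {one_way:.1f} records/sec")
    print(f"both directions: {both_ways:.1f} records/sec")
    # Figures quoted in the post, for comparison.
    needed, low, high = 75, 5, 8
    print(f"shortfall: {needed / high:.1f}x to {needed / low:.1f}x")
```

Under these assumptions the quoted requirement of 75 records/sec falls between the one-direction and both-direction figures, and is roughly an order of magnitude beyond the 5-8 records/sec the synchronous diagnose interface delivered on a moderately loaded system.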

I had started HSDT in the early 80s with T1 and faster computer links, both terrestrial and satellite, and working with NSF Director, was supposed to get $20M to interconnect the NSF Supercomputer Centers. Then congress cuts the budget, some other things happen and finally an RFP is released. Preliminary Announcement (28Mar1986)

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers

Program to provide Supercomputer cycles; the New Technologies Program

to foster new supercomputer software and hardware developments; and

the Networking Program to build a National Supercomputer Access

Network - NSFnet.

... snip ...

internal IBM politics prevent us from bidding on the RFP. the NSF director tries to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies, but that just makes the internal politics worse (as did claims that what we already had running was at least 5yrs ahead of the winning bid, RFP awarded 24Nov87). As regional networks connect in, NSFnet becomes the NSFNET backbone, precursor to modern internet

https://www.technologyreview.com/s/401444/grid-computing/

communication group (and other) executives were spreading all sorts of

misinformation about how SNA products could be used for NSFnet

... somebody collected lots of that misinformation email and

forwarded it to us ... old archived post with the email, heavily

redacted and clipped to protect the guilty

https://www.garlic.com/~lynn/2006w.html#email870109

another recent history thread

https://www.garlic.com/~lynn/2022e.html#5 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022e.html#6 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022e.html#7 RED and XEDIT fullscreen editors

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internal network

https://www.garlic.com/~lynn/subnetwork.html#internalnet

past posts mentioning HSDT spool file system (HSDTSFS)

https://www.garlic.com/~lynn/2021j.html#26 Programming Languages in IBM

https://www.garlic.com/~lynn/2021g.html#37 IBM Programming Projects

https://www.garlic.com/~lynn/2013n.html#91 rebuild 1403 printer chain

https://www.garlic.com/~lynn/2012g.html#24 Co-existance of z/OS and z/VM on same DASD farm

https://www.garlic.com/~lynn/2012g.html#23 VM Workshop 2012

https://www.garlic.com/~lynn/2012g.html#18 VM Workshop 2012

https://www.garlic.com/~lynn/2011e.html#25 Multiple Virtual Memory

past posts mentioning history presentation at (DC) hillgang meeting

https://www.garlic.com/~lynn/2022c.html#0 System Response

https://www.garlic.com/~lynn/2022b.html#13 360 Performance

https://www.garlic.com/~lynn/2022.html#93 HSDT Pitches

https://www.garlic.com/~lynn/2021j.html#59 Order of Knights VM

https://www.garlic.com/~lynn/2021h.html#82 IBM Internal network

https://www.garlic.com/~lynn/2021g.html#46 6-10Oct1986 SEAS

https://www.garlic.com/~lynn/2021e.html#65 SHARE (& GUIDE)

https://www.garlic.com/~lynn/2021c.html#41 Teaching IBM Class

https://www.garlic.com/~lynn/2021b.html#61 HSDT SFS (spool file rewrite)

https://www.garlic.com/~lynn/2021.html#17 Performance History, 5-10Oct1986, SEAS

https://www.garlic.com/~lynn/2019b.html#4 Oct1986 IBM user group SEAS history presentation

https://www.garlic.com/~lynn/2019.html#38 long-winded post thread, 3033, 3081, Future System

https://www.garlic.com/~lynn/2017d.html#52 Some IBM Research RJ reports

https://www.garlic.com/~lynn/2015h.html#93 OT: Electrician cuts wrong wire and downs 25,000 square foot data centre

https://www.garlic.com/~lynn/2012g.html#18 VM Workshop 2012

https://www.garlic.com/~lynn/2011e.html#3 Multiple Virtual Memory

https://www.garlic.com/~lynn/2011c.html#88 Hillgang -- VM Performance

https://www.garlic.com/~lynn/2011c.html#86 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2011c.html#72 A History of VM Performance

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: VM/370 Going Away
Date: 17 June 2022
Blog: Facebook

VM/370 Going Away(?)

Future System effort, 1st half 70s, completely different from 370 and

was going to completely replace 370 (internal politics was killing off

370 efforts during the period, lack of new 370 products is credited

with giving clone 370 makers their market foothold). with the demise

of FS, there was a mad rush to get stuff back into 370 product

pipelines, including kicking off quick&dirty 3033&3081 projects in

parallel. also head of POK managed to convince corporate to kill vm370

product, shutdown the development group and transfer all the people to

POK (or supposedly, MVS/XA wouldn't ship on time ... several yrs in

the future). Endicott eventually managed to save the vm370 product

mission, but had to reconstitute a development group from scratch

... can see comments about (poor) code quality during this period in

various comments in the vmshare archives:

http://vm.marist.edu/~vmshare

After graduating (and leaving Boeing CFO office), I join the ibm science center ... and one of my hobbies was enhanced production operating systems for internal datacenters ... and (world-wide sales&marketing support) HONE systems were long-time customers ... starting with CP67 and then moving to VM370 (I had continued 360&370 work all during the FS period, including periodically ridiculing what they were doing, which wasn't exactly career enhancing activity).

Later part of the 70s, HONE went through a series of new executives: a branch manager promoted to executive position in DPD hdqtrs would discover to their horror that HONE wasn't MVS based and figure that they could "make their career" by directing HONE to move to MVS, directing all hands to work on the port. After 9-12 months, it would be shown it wouldn't work, it would be declared a success, heads promoted uphill, and after a few months, it would repeat again. Also around the turn of that decade, a POK executive gave HONE a presentation on future of POK products and said that while Endicott had saved vm370 product, it would no longer be supported on POK machines. This caused such an uproar that he had to come back and explain how HONE had misunderstood what he had said.

Mid-70s, Endicott had con'ed me into helping with 138/148 microcode

assist (i.e. "ECPS"); the machines had 6kbytes of available microcode

space and I was to identify the 6kbytes of highest executed vm370

kernel paths ... for reprogramming in microcode (at approx

byte-for-byte with 10 times performance increase) ... which would

continue into 4300s. Old archived post with original analysis (6kbytes

accounted for 80% of kernel execution)

https://www.garlic.com/~lynn/94.html#21
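The selection problem described above (fit the most heavily executed kernel paths into a fixed microcode budget) can be sketched as a greedy pick by execution share per byte. This is not the original analysis, which is in the archived post; the path names and numbers below are made up, "6kbytes" is assumed to mean 6*1024 bytes, and `pick_paths` and `kernel_speedup` are hypothetical helpers. The speedup estimate is an idealized Amdahl-style calculation using the 10 times figure from the text.

```python
def pick_paths(paths, budget_bytes=6 * 1024):
    """Greedy selection of kernel paths to move into microcode.

    paths: list of (name, size_bytes, share_of_kernel_time) tuples.
    Paths are taken in order of time share per byte while they still fit.
    Returns (chosen names, bytes used, total share covered).
    """
    ranked = sorted(paths, key=lambda p: p[2] / p[1], reverse=True)
    chosen, used, covered = [], 0, 0.0
    for name, size, share in ranked:
        if used + size <= budget_bytes:
            chosen.append(name)
            used += size
            covered += share
    return chosen, used, covered


def kernel_speedup(covered, factor=10.0):
    """Idealized overall kernel speedup if `covered` of the time runs `factor` times faster."""
    return 1.0 / ((1.0 - covered) + covered / factor)


if __name__ == "__main__":
    # Hypothetical profile data, for illustration only.
    profile = [
        ("path_a", 2048, 0.30),
        ("path_b", 1024, 0.20),
        ("path_c", 3072, 0.25),
        ("path_d", 2048, 0.10),
        ("path_e", 4096, 0.05),
    ]
    print(pick_paths(profile))
    # Using the post's figures: 80% of kernel execution, 10 times faster.
    print(round(kernel_speedup(0.80), 2))
```

Greedy by density is a simple heuristic and is not guaranteed optimal for every profile; it is shown here because it matches the "highest executed paths first" description.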

Around the time POK was telling HONE that vm370 wouldn't run on new POK machines, I got approval to give presentations at monthly BAYBUNCH user group meetings (hosted at Stanford SLAC) on ECPS implementation. After the meetings, Amdahl people would corner me to provide more information on ECPS. They explained that they had done MACROCODE ... 370-similar instructions running in microcode mode (originally done to quickly respond to plethora of 3033 trivial microcode changes that were required by latest MVS to run). They were then implementing HYPERVISOR support (hardware support to run multiple logical machines w/o vm370 software) ... note high-end processors used horizontal microcode that was difficult and time-consuming to implement; Amdahl MACROCODE drastically reduced that difficulty (note IBM's response to HYPERVISOR, LPAR&PR/SM for 3090, was nearly 8yrs later).

Note that while POK had killed VM370 development group and moved them to POK to work on MVS/XA, they did develop the VMTOOL, which was a small VM370 subset w/o function, performance and features of vm370 ... *only* intended for MVS/XA development (*never* to ship to customers). Later when customers weren't converting from MVS to MVS/XA as planned on 3081 (except Amdahl machines where they concurrently run MVS & MVS/XA under HYPERVISOR), they decided to provide VMTOOL as VM/MA and VM/SF for "migration aid" ... old email

https://www.garlic.com/~lynn/2007.html#email850304

https://www.garlic.com/~lynn/2008c.html#email850419

... the VMTOOL had 3081 SIE microcode instruction to help ... however SIE was also never intended for customer use ... 3081 not having sufficient microcode space ... so the SIE microcode had to be paged in each time it was invoked ... old email about how "trout" (aka 3090) did implement SIE for production use:

https://www.garlic.com/~lynn/2006j.html#email810630

3081 trivia: 3081 was supposed to be a multiprocessor "only" machine; in the original 3081D, each processor was slower than 3033, they then doubled processor cache size to make it faster than 3033 (however, the latest Amdahl single processor had at least the throughput of the two processor 3081K). some FS, 3033, & 3081 info

http://www.jfsowa.com/computer/memo125.htm

https://people.computing.clemson.edu/~mark/fs.html

Then there was a big POK/Kingston push for a large development group to upgrade VMTOOL to feature, performance and function of VM370 ... Endicott's only alternative was to pick up the full 370/XA support that Rochester had added to vm370. Some old reference to Rochester's VM370 XA support

https://www.garlic.com/~lynn/2011c.html#email860122

https://www.garlic.com/~lynn/2011c.html#email860123

My recent (IBM Retirees) discussion post

https://www.facebook.com/groups/62822320855/posts/10159776681680856/

has this email reference to this post

https://www.garlic.com/~lynn/2006u.html#26

with email from Rochester sending tape for copy of my SJR/VM system

https://www.garlic.com/~lynn/2006u.html#email800501

Then there was also a different attack moving HONE to MVS ... claiming the reason HONE couldn't be moved to MVS was they were running my enhanced system. HONE would be required to move to standard supported product (because what would HONE do if I was hit by a bus?) ... after which, then it would be possible for HONE to be migrated to MVS.

I had done (CP67) dynamic adaptive resource management algorithms as an undergraduate in the 60s ... which the science center picked up and shipped as part of cp67. In the morph of CP67->VM370 lots of stuff was dropped (including multiprocessor support and lots of stuff I had done as undergraduate) and/or greatly simplified. Then there were SHARE resolutions to put the "wheeler scheduler" back.

VM/HPO 3.4 (w/o "wheeler scheduler") from vmshare

https://www.garlic.com/~lynn/2007b.html#email860111

https://www.garlic.com/~lynn/2007b.html#email860113

also putting (my, originally done as undergraduate in the 60s) global LRU back into VM/HPO

https://www.garlic.com/~lynn/2006y.html#email860119

Science Center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

dynamic adaptive resource manager posts

https://www.garlic.com/~lynn/subtopic.html#fairshare

global LRU page replacement posts

https://www.garlic.com/~lynn/subtopic.html#clock

a few threads this year, touching on same &/or similar subjects:

https://www.garlic.com/~lynn/2022e.html#8 VM Workship ... VM/370 50th birthday

https://www.garlic.com/~lynn/2022e.html#7 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022e.html#6 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022e.html#5 RED and XEDIT fullscreen editors

https://www.garlic.com/~lynn/2022e.html#1 IBM Games

https://www.garlic.com/~lynn/2022d.html#94 Operating System File/Dataset I/O

https://www.garlic.com/~lynn/2022d.html#58 IBM 360/50 Simulation From Its Microcode

https://www.garlic.com/~lynn/2022d.html#44 CMS Personal Computing Precursor

https://www.garlic.com/~lynn/2022d.html#30 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022d.html#17 Computer Server Market

https://www.garlic.com/~lynn/2022c.html#83 VMworkshop.og 2022

https://www.garlic.com/~lynn/2022c.html#62 IBM RESPOND

https://www.garlic.com/~lynn/2022c.html#28 IBM Cambridge Science Center

https://www.garlic.com/~lynn/2022c.html#8 Cloud Timesharing

https://www.garlic.com/~lynn/2022b.html#126 Google Cloud

https://www.garlic.com/~lynn/2022b.html#118 IBM Disks

https://www.garlic.com/~lynn/2022b.html#111 The Rise of DOS: How Microsoft Got the IBM PC OS Contract

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#93 IBM Color 3279

https://www.garlic.com/~lynn/2022b.html#54 IBM History

https://www.garlic.com/~lynn/2022b.html#49 4th generation language

https://www.garlic.com/~lynn/2022b.html#34 IBM Cloud to offer Z-series mainframes for first time - albeit for test and dev

https://www.garlic.com/~lynn/2022b.html#30 Online at home

https://www.garlic.com/~lynn/2022b.html#28 Early Online

https://www.garlic.com/~lynn/2022b.html#22 IBM Cloud to offer Z-series mainframes for first time - albeit for test and dev

https://www.garlic.com/~lynn/2022b.html#20 CP-67

https://www.garlic.com/~lynn/2022.html#123 SHARE LSRAD Report

https://www.garlic.com/~lynn/2022.html#105 IBM PLI

https://www.garlic.com/~lynn/2022.html#89 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#71 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#66 HSDT, EARN, BITNET, Internet

https://www.garlic.com/~lynn/2022.html#65 CMSBACK

https://www.garlic.com/~lynn/2022.html#62 File Backup

https://www.garlic.com/~lynn/2022.html#57 Computer Security

https://www.garlic.com/~lynn/2022.html#36 Error Handling

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM/370 Going Away Date: 18 June 2022 Blog: Facebookre:

For a time my wife reported to manager of one of the FS technology sectors ... she somewhat implies in group meetings most of the other sections would talk about way out blue sky ideas ... but no idea how to make any production implementation.

One of the final nails in the FS coffin was analysis by the IBM Houston Science Center that 370/195 applications moved to FS machine made out of the fastest available hardware ... would have throughput of 370/145 (about 30 times slowdown). Folklore is that Rochester did greatly simplified version of FS for the S/38 (plenty of performance headroom between technology and the low-end office market). Then Rochester does AS/400 as follow-on for combination of S/34, S/36, & S/38 ... eliminating some of the S/38 FS features.

https://en.wikipedia.org/wiki/IBM_AS/400

Part of my FS ridicule was filesystem "single level store" architecture ... somewhat from TSS/360. I was responsible for OS/360 at the univ. running 360/67 as 360/65 (360/67 sold for TSS/360 which never came to production fruition, later before I graduate I was hired into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services, when I graduate, I leave Boeing and join the IBM science center). The univ would shutdown the datacenter over the weekends and I had the whole place dedicated to myself for 48hrs straight.

The science center came out and installed CP67 (3rd location after science center and MIT Lincoln labs) which I could play with on weekends. The IBM TSS/360 SE was still around and we put together a simulated interactive fortran program edit, compile, and execute. The benchmark running CP67/CMS with 35users had better throughput and interactive response than TSS/360 (on same exact hardware) with only four users. Later I did a page mapped filesystem for CMS and would claim I learned what "not to do" from observing TSS/360 (for moderate benchmarks exercising the filesystem, had three times throughput the standard CMS filesystem).

However, I blamed the inability to get my CMS page mapped filesystem shipped on the horrible reputation that paged filesystems got from the FS failure (although it was deployed on internal datacenters ... including the sales&marketing support HONE systems).

Part of S/38 filesystem simplification was all disks were part of same filesystem and a dataset might have pieces across all disks ... backing up meant the whole filesystem had to be backed up as a single entity (all disks) ... and any single disk failure ... required that the whole filesystem be restored (possibly taking 24hrs elapsed as disks were added). The implementation was totally impractical in a large mainframe datacenter with scores or even hundreds of disks. Trivia: backup/restore of S/38 was so disastrous that S/38 was an early adopter of RAID.

https://en.wikipedia.org/wiki/RAID#History

In 1977, Norman Ken Ouchi at IBM filed a patent disclosing what was subsequently named RAID 4.

... snip ...

disk trivia: when I transferred to San Jose Research, I got to wander around IBM and non-IBM datacenters including bldg14 (disk engineering) and bldg15 (disk product test) across the street. At the time, bldg14 was running 7x24 around the clock, pre-scheduled, stand-alone, mainframe testing. They said that they had recently tried MVS, but MVS had 15min mean-time-between-failure, requiring manual re-ipl. I offered to rewrite the I/O subsystem, making it bullet proof and never fail, allowing any amount of on-demand concurrent testing ... greatly improving productivity. Downside was I was increasingly asked to play disk engineer ... and would periodically run into Ken. I wrote up what was done as an internal research report and happened to mention the MVS 15min MTBF ... bringing the wrath of the MVS organization down on my head. Informally I was told they tried unsuccessfully to have me separated from the company ... but then would make my career as unpleasant as possible ... the joke was on them ... I had already been told a number of times that I had no career, promotions, raises in IBM ... for offending various IBM careerists and bureaucrats, including at least for ridiculing FS ... periodically repeated from Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books, 1993

http://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

.... reference to "Future System" ...

"and perhaps most damaging, the old culture under Watson Snr and Jr of

free and vigorous debate was replaced with *SYNCOPHANCY* and *MAKE NO

WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived

in the shadow of defeat ... But because of the heavy investment of

face by the top management, F/S took years to kill, although its wrong

headedness was obvious from the very outset. "For the first time,

during F/S, outspoken criticism became politically dangerous," recalls

a former top executive."

... snip ...

In any case, later, just before 3380 drives were about to ship ... FE had hardware regression test of 57 simulated 3380 errors likely to occur, in all 57 cases, MVS would fail (requiring re-ipl) and in 2/3rds of the cases there was no indication of what caused the failure (I didn't feel badly at all).

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

getting to play disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

CMS paged mapped filesystem

https://www.garlic.com/~lynn/submain.html#mmap

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM/370 Going Away Date: 18 June 2022 Blog: Facebookre:

AS/400 PowerPC trivia:

https://en.wikipedia.org/wiki/IBM_AS/400#History

and

https://en.wikipedia.org/wiki/IBM_AS/400#The_move_to_PowerPC

The last product we did at IBM was HA/CMP. It started out as HA/6000 for the NYTimes to move their newspaper system (ATEX) off (DEC) Vaxcluster to RS/6000. I then started doing technical/scientific cluster scale-up with national labs and commercial cluster scale-up with RDBMS vendors (Ingres, Informix, Sybase, Oracle, all had vax/cluster support in the same source base with unix support ... lots of discussion on improving over vaxcluster and easing RDBMS port to HA/CMP). However, nearly every time we visited national labs (including LANL & LLNL) we would get hate mail from the IBM Kingston supercomputer group (working on more traditional supercomputer design).

During HA/CMP, the executive we reported to transfers over to head up Somerset (he had previously come from Motorola) ... to do power/pc ... single chip 801/risc. Power was a large multichip undertaking; I would periodically claim that a lot of the transition to single chip power/pc bore heavy influence from Motorola's 88k RISC single chip.

https://en.wikipedia.org/wiki/AIM_alliance

https://en.wikipedia.org/wiki/AIM_alliance#Launch

The development of the PowerPC is centered at an Austin, Texas, facility called the Somerset Design Center. The building is named after the site in Arthurian legend where warring forces put aside their swords, and members of the three teams that staff the building say the spirit that inspired the name has been a key factor in the project's success thus far.

... snip ...

Also, Oct1991 senior VP backing the IBM Kingston group, retires and there are audits of his projects. Shortly later there is announcement of internal supercomputing conference (effectively trolling the company for technology). Early Jan1992 we have meeting in Ellison's (Oracle CEO) conference room on (commercial) cluster/scale-up, 16way by mid92, 128way by ye92. Then within a few weeks of the Ellison meeting, cluster scale-up is transferred, announced as IBM supercomputer (for technical/scientific *ONLY*) and we are told we can't work on anything with more than four processors. We leave IBM a few months later. Possibly contributing was mainframe DB2 group complaining that if we were allowed to continue, it would be years ahead of them.

Note: Jan1979 I had been con'ed into doing vm/4341 benchmarks for national lab that was looking at getting 70 for compute farm ... sort of the leading edge of the coming cluster supercomputing tsunami.

ha/cmp posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

801/risc, iliad, romp, rios, power, power/pc

https://www.garlic.com/~lynn/subtopic.html#801

posts mentioning some work on original sql/relational, System/R

https://www.garlic.com/~lynn/submain.html#systemr

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM/370 Going Away Date: 18 June 2022 Blog: Facebookre:

from (already posted more on as/400)

http://www.jfsowa.com/computer/memo125.htm

[GRAD was the code name for IBM's Future Systems project of the 1970s. The code names for its components were taken from various colleges and universities: RIPON was the hardware architecture, COLBY was the operating system, HOFSTRA/TULANE were the system programming language and library, and VANDERBILT was the largest of the three planned implementations. The smallest of the three, with considerable simplification, was eventually released as System/38, which evolved into the AS/400.

... snip ...

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

801/risc, iliad, romp, rios, power, power/pc, etc

https://www.garlic.com/~lynn/subtopic.html#801

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM/370 Going Away Date: 18 June 2022 Blog: Facebookre:

as I've mentioned, as undergraduate, I was hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into independent business unit to better monetize the investment, including offering services to non-Boeing entities). I thought Renton datacenter possibly largest in the world, lots of politics between Renton manager and CFO, who just had a 360/30 for payroll up at Boeing field (although they enlarge the room for a 360/67 that I can play with when I'm not doing other stuff).

Later one of the science center people that had written a lot of CMS\APL software left IBM and joined BCS in DC. On one visit he talked about they had contract with USPS and he was using CMS\APL to do the analysis to justify raising the postal rate.

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

other recent posts mentioning Boeing CFO:

https://www.garlic.com/~lynn/2022d.html#110 Window Display Ground Floor Datacenters

https://www.garlic.com/~lynn/2022d.html#106 IBM Quota

https://www.garlic.com/~lynn/2022d.html#100 IBM Stretch (7030) -- Aggressive Uniprocessor Parallelism

https://www.garlic.com/~lynn/2022d.html#95 Operating System File/Dataset I/O

https://www.garlic.com/~lynn/2022d.html#91 Short-term profits and long-term consequences -- did Jack Welch break capitalism?

https://www.garlic.com/~lynn/2022d.html#57 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022d.html#19 COMPUTER HISTORY: REMEMBERING THE IBM SYSTEM/360 MAINFRAME, its Origin and Technology

https://www.garlic.com/~lynn/2022c.html#72 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022c.html#42 Why did Dennis Ritchie write that UNIX was a modern implementation of CTSS?

https://www.garlic.com/~lynn/2022c.html#8 Cloud Timesharing

https://www.garlic.com/~lynn/2022c.html#2 IBM 2250 Graphics Display

https://www.garlic.com/~lynn/2022c.html#0 System Response

https://www.garlic.com/~lynn/2022b.html#126 Google Cloud

https://www.garlic.com/~lynn/2022b.html#117 Downfall: The Case Against Boeing

https://www.garlic.com/~lynn/2022b.html#27 Dataprocessing Career

https://www.garlic.com/~lynn/2022b.html#10 Seattle Dataprocessing

https://www.garlic.com/~lynn/2022.html#120 Series/1 VTAM/NCP

https://www.garlic.com/~lynn/2022.html#109 Not counting dividends IBM delivered an annualized yearly loss of 2.27%

https://www.garlic.com/~lynn/2022.html#73 MVT storage management issues

https://www.garlic.com/~lynn/2022.html#48 Mainframe Career

https://www.garlic.com/~lynn/2022.html#30 CP67 and BPS Loader

https://www.garlic.com/~lynn/2022.html#22 IBM IBU (Independent Business Unit)

https://www.garlic.com/~lynn/2022.html#12 Programming Skills

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM "Fast-Track" Bureaucrats Date: 18 June 2022 Blog: Facebook

Mid-80s, top executives were predicting that IBM revenue would shortly double, mostly based on mainframe sales ... and they were looking at needing to double (mainframe) manufacturing capacity and double the number of executives (needed to run the businesses). A side-effect was a large number of "fast-track" newly minted MBAs (rapidly rotating through positions running different victim business units).

It reminded me of a decade earlier when a prominent branch manager horribly offended one of IBM's largest financial industry customers. After joining IBM I got to wander IBM and customer locations, and the manager of this particular datacenter liked me to stop by and talk technology. The customer orders an Amdahl machine in retaliation (up until then clone mainframe makers were selling into the technical, scientific, univ market ... but had yet to break into the true-blue commercial market and this would be the first). I was asked to go sit onsite at the customer to help obfuscate the reason for the order. I talked it over with the customer and then told IBM I declined the offer. I was then told the branch manager was a good sailing buddy of the IBM CEO and if I refused, I could forget any career, promotion, and/or raises (it wasn't the only time I was fed that line), reminding me of the epidemic "old boys network" and Learson's Management Briefing ZZ04-1312 about the IBM bureaucrats and careerists. Also from Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books, 1993

http://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

.... reference to "Future System" failure:

"and perhaps most damaging, the old culture under Watson Snr and Jr of

free and vigorous debate was replaced with *SYNCOPHANCY* and *MAKE NO

WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived

in the shadow of defeat ... But because of the heavy investment of

face by the top management, F/S took years to kill, although its wrong

headedness was obvious from the very outset. "For the first time,

during F/S, outspoken criticism became politically dangerous," recalls

a former top executive."

... snip ...

In my executive exit interview, I was told that they could have forgiven me for being wrong, but they would never forgive me for being right.

past posts referencing Learson's Management Briefing ZZ04-1312:

https://www.garlic.com/~lynn/2022d.html#89 Short-term profits and long-term consequences -- did Jack Welch break capitalism?

https://www.garlic.com/~lynn/2022d.html#76 "12 O'clock High" In IBM Management School

https://www.garlic.com/~lynn/2022d.html#71 IBM Z16 - The Mainframe Is Dead, Long Live The Mainframe

https://www.garlic.com/~lynn/2022d.html#52 Another IBM Down Fall thread

https://www.garlic.com/~lynn/2022d.html#35 IBM Business Conduct Guidelines

https://www.garlic.com/~lynn/2021g.html#51 Intel rumored to be in talks to buy chip manufacturer GlobalFoundries for $30B

https://www.garlic.com/~lynn/2021g.html#32 Big Blue's big email blues signal terminal decline - unless it learns to migrate itself

https://www.garlic.com/~lynn/2021e.html#62 IBM / How To Stuff A Wild Duck

https://www.garlic.com/~lynn/2021d.html#51 IBM Hardest Problem(s)

https://www.garlic.com/~lynn/2021.html#0 IBM "Wild Ducks"

https://www.garlic.com/~lynn/2017j.html#23 How to Stuff a Wild Duck

https://www.garlic.com/~lynn/2017f.html#109 IBM downfall

https://www.garlic.com/~lynn/2017b.html#56 Wild Ducks

https://www.garlic.com/~lynn/2015d.html#19 Where to Flatten the Officer Corps

https://www.garlic.com/~lynn/2013.html#11 How do we fight bureaucracy and bureaucrats in IBM?

https://www.garlic.com/~lynn/2012f.html#92 How do you feel about the fact that India has more employees than US?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM/370 Going Away Date: 18 June 2022 Blog: Facebookre:

... periodically posted ... Learson about the IBM careerists and bureaucrats before FS implosion (and *SYNCOPHANCY* and *MAKE NO WAVES* under Opel and Akers)

Management Briefing

Number 1-72: January 18,1972

ZZ04-1312

TO ALL IBM MANAGERS:

Once again, I'm writing you a Management Briefing on the subject of bureaucracy. Evidently the earlier ones haven't worked. So this time I'm taking a further step: I'm going directly to the individual employees in the company. You will be reading this poster and my comment on it in the forthcoming issue of THINK magazine. But I wanted each one of you to have an advance copy because rooting out bureaucracy rests principally with the way each of us runs his own shop.

We've got to make a dent in this problem. By the time the THINK piece comes out, I want the correction process already to have begun. And that job starts with you and with me.

Vin Learson

... and ...

+-----------------------------------------+
|           "BUSINESS ECOLOGY"            |
|                                         |
|                                         |
|            +---------------+            |
|            |  BUREAUCRACY  |            |
|            +---------------+            |
|                                         |
|           is your worst enemy           |
|              because it -               |
|                                         |
|      POISONS the mind                   |
|      STIFLES the spirit                 |
|      POLLUTES self-motivation           |
|             and finally                 |
|      KILLS the individual.              |
+-----------------------------------------+

"I'M Going To Do All I Can to Fight This Problem . . ."
by T. Vincent Learson, Chairman

... snip ...

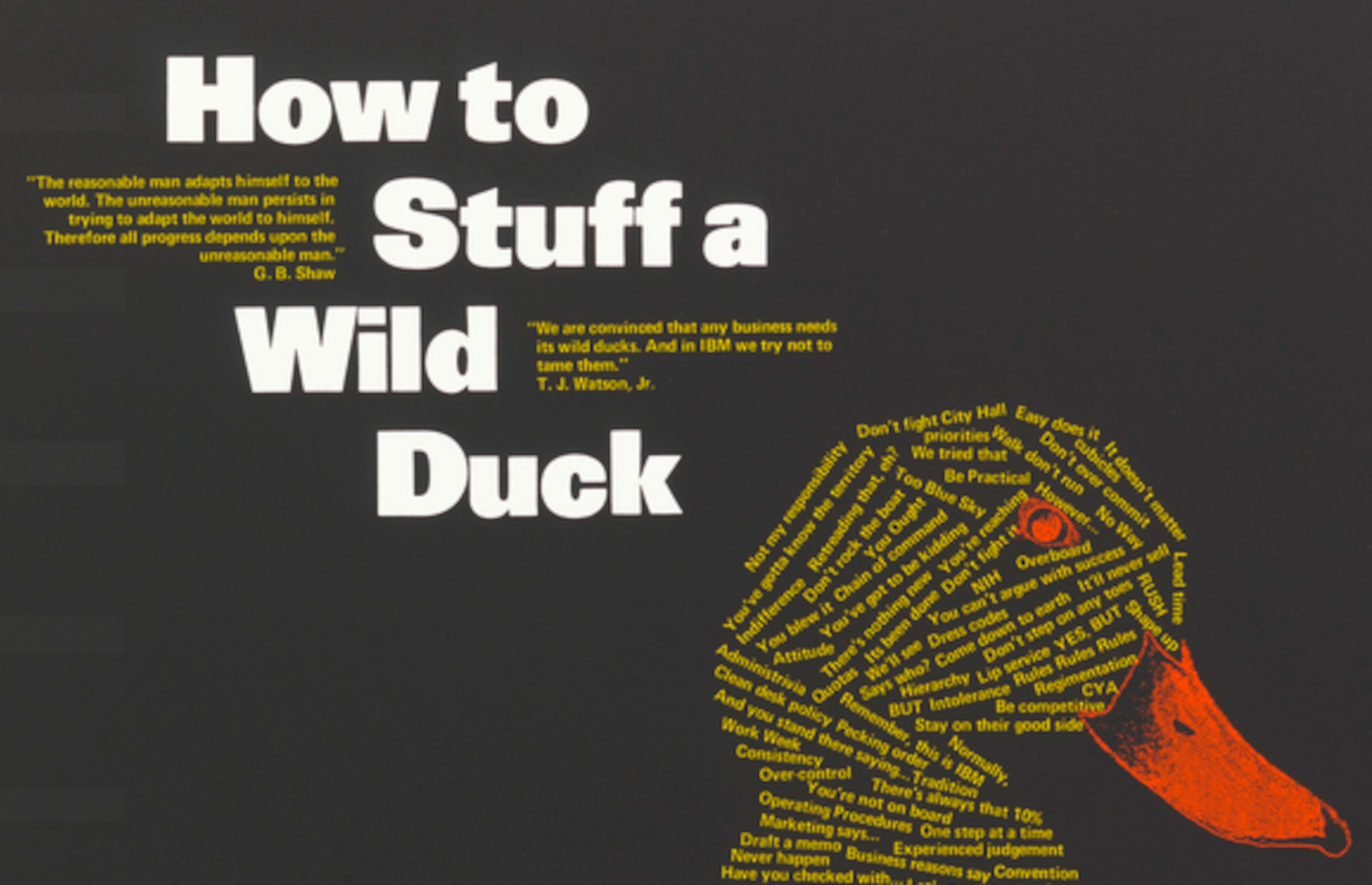

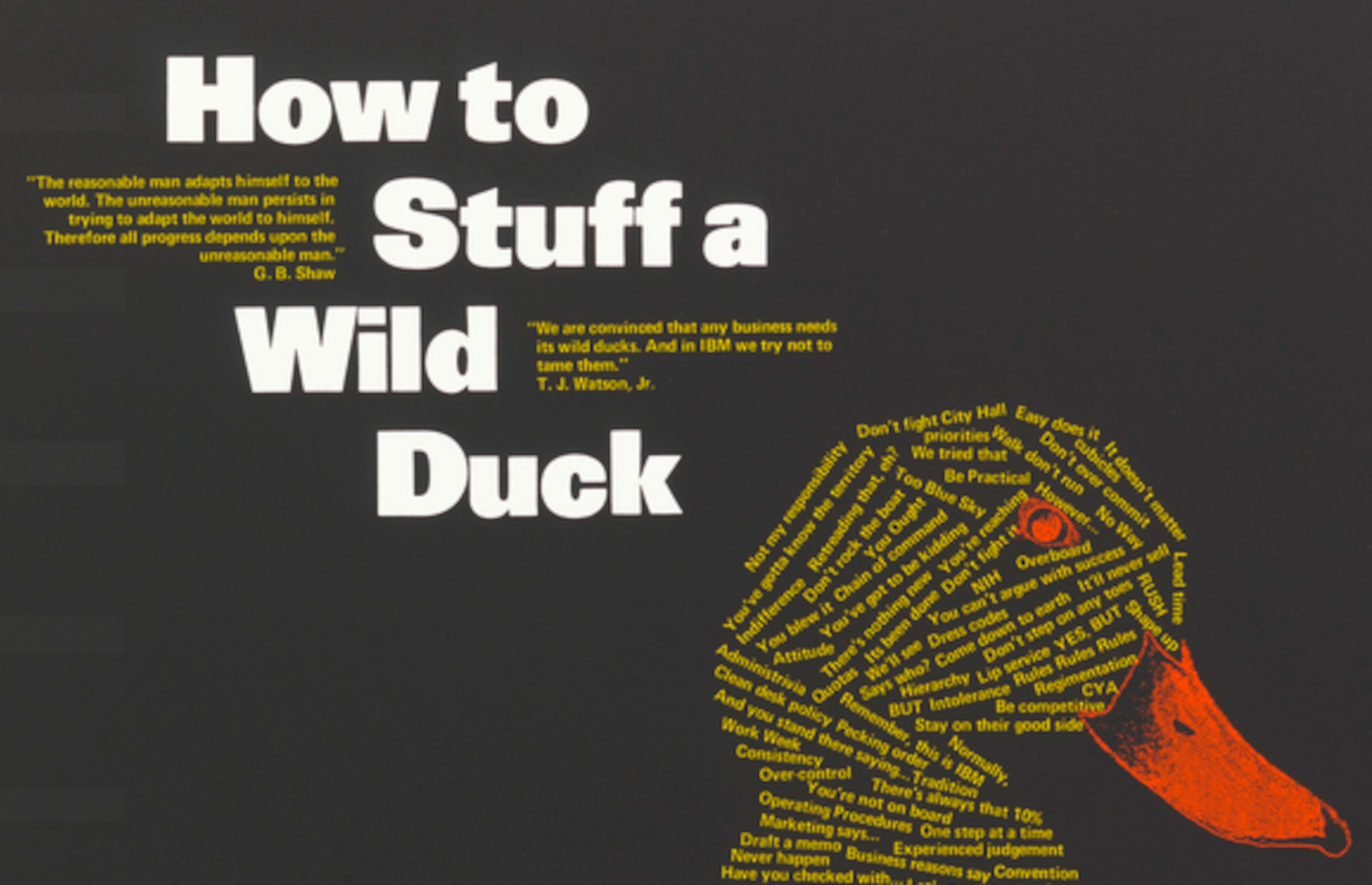

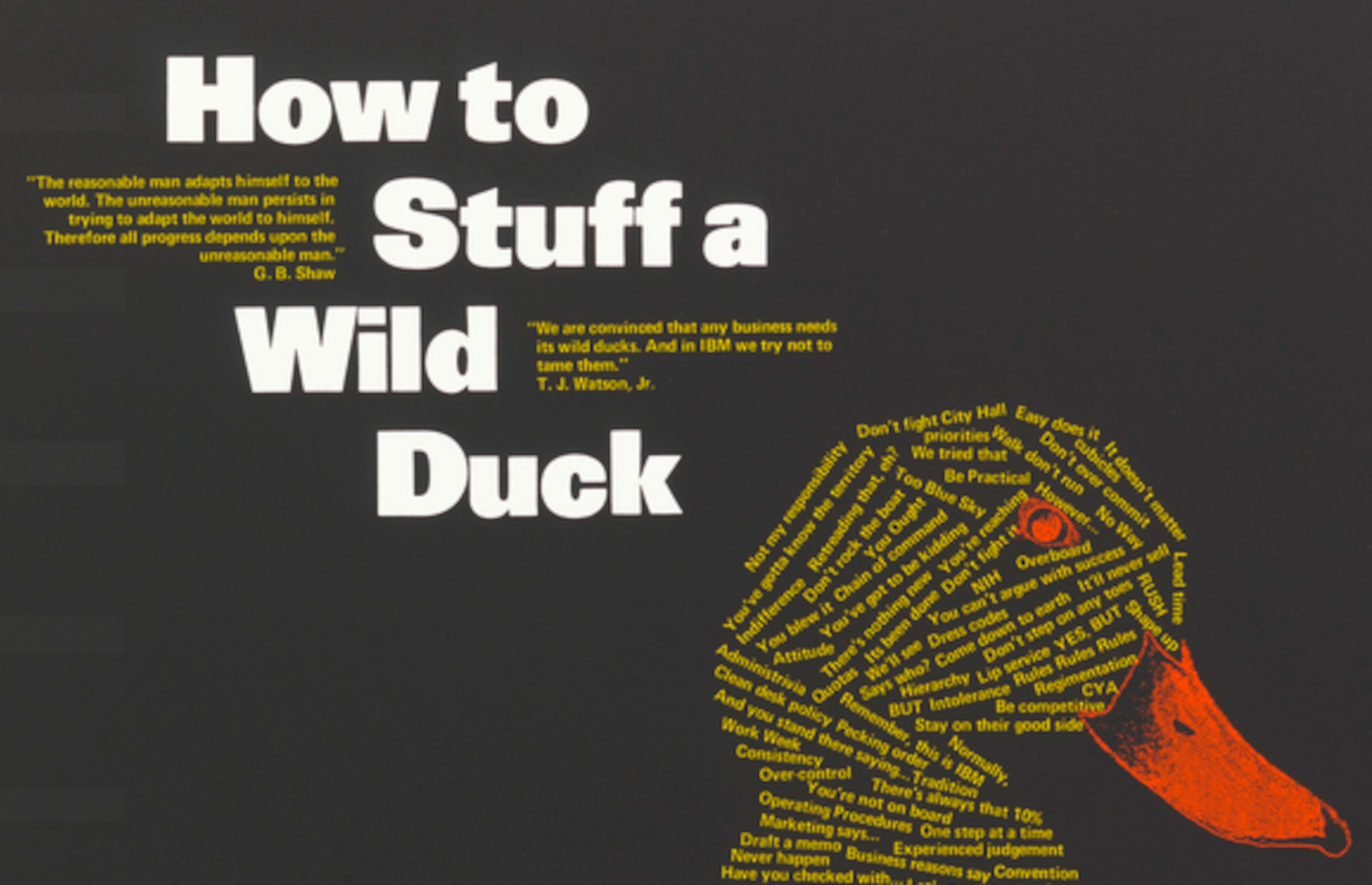

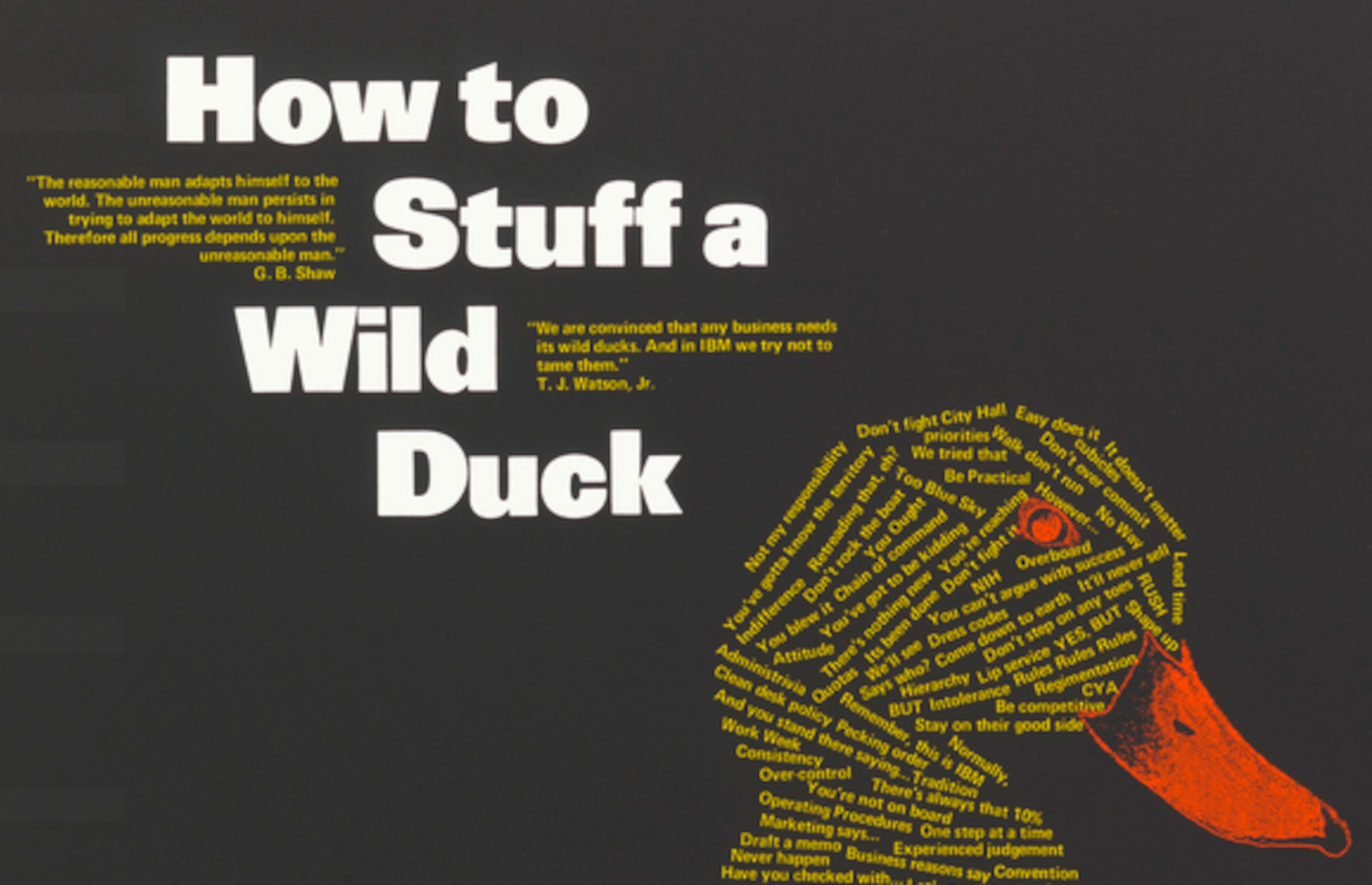

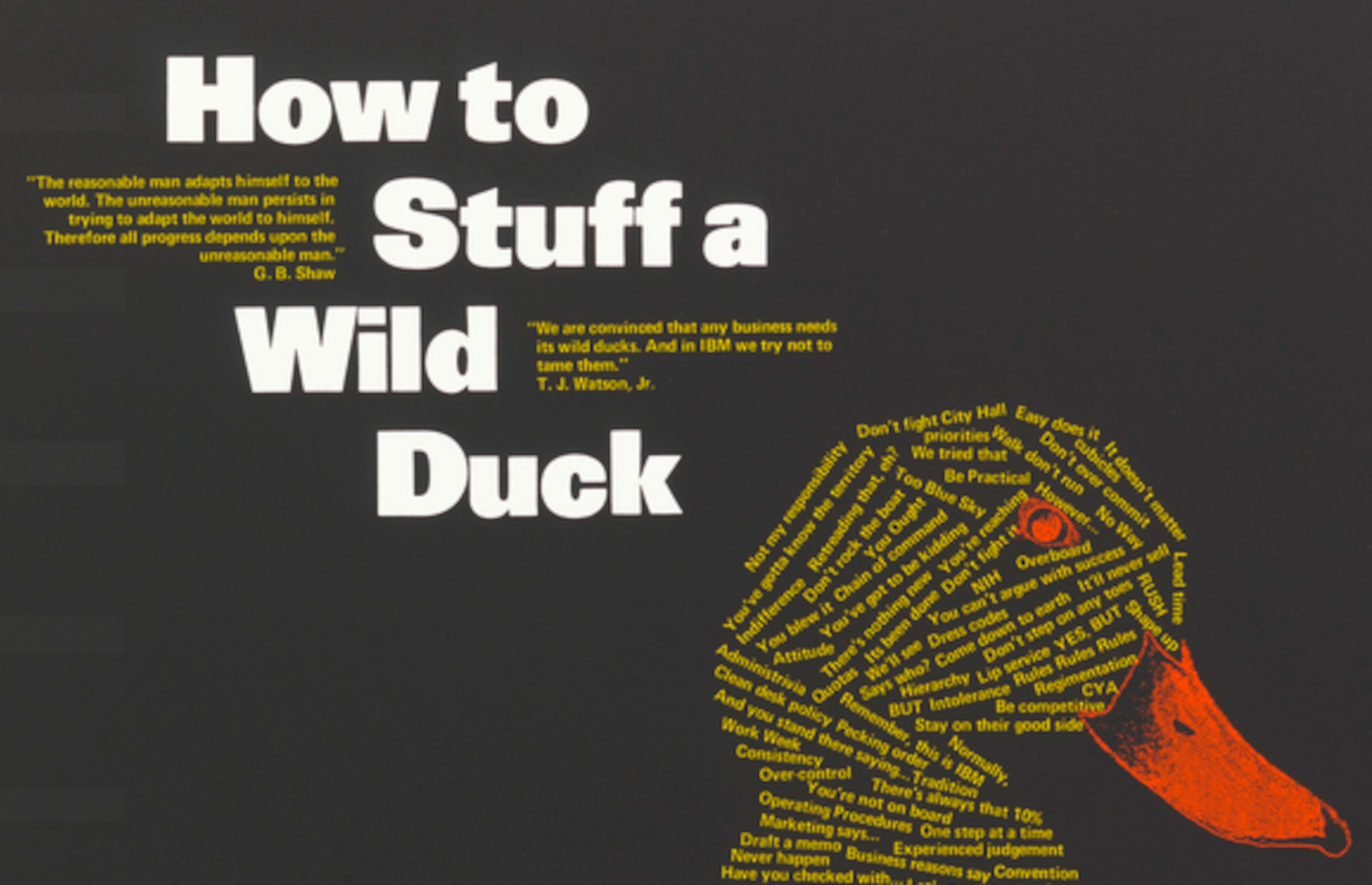

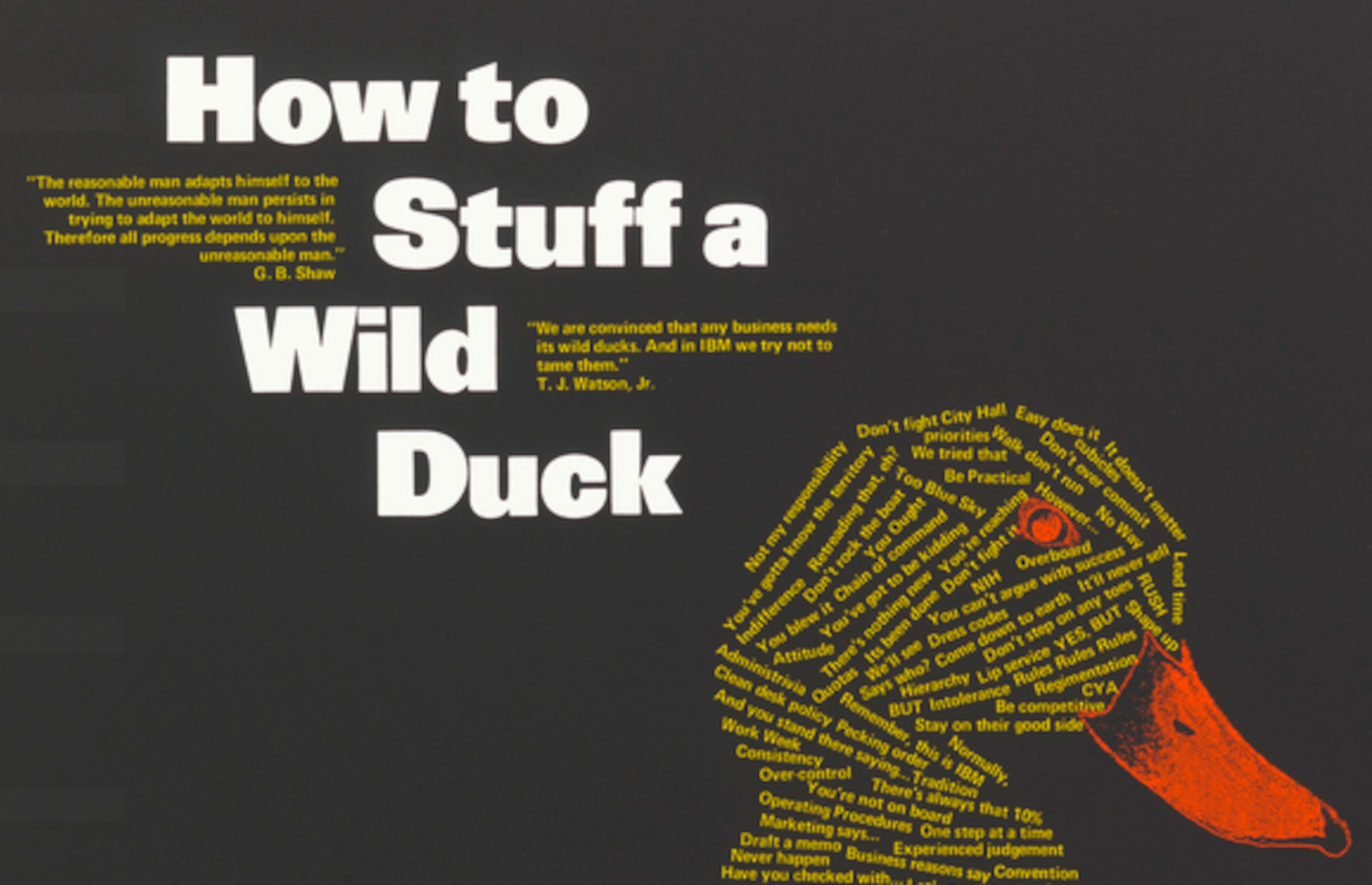

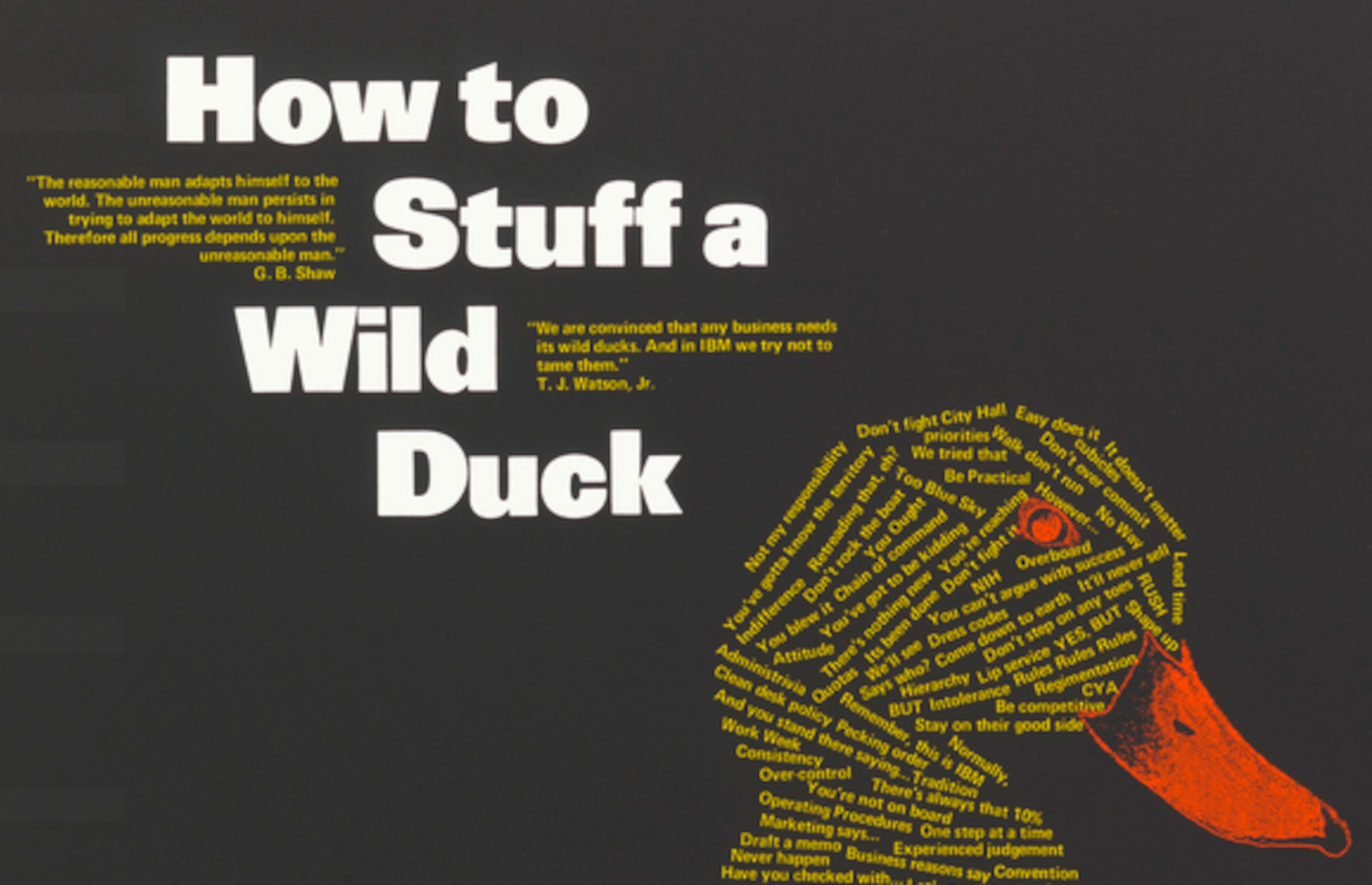

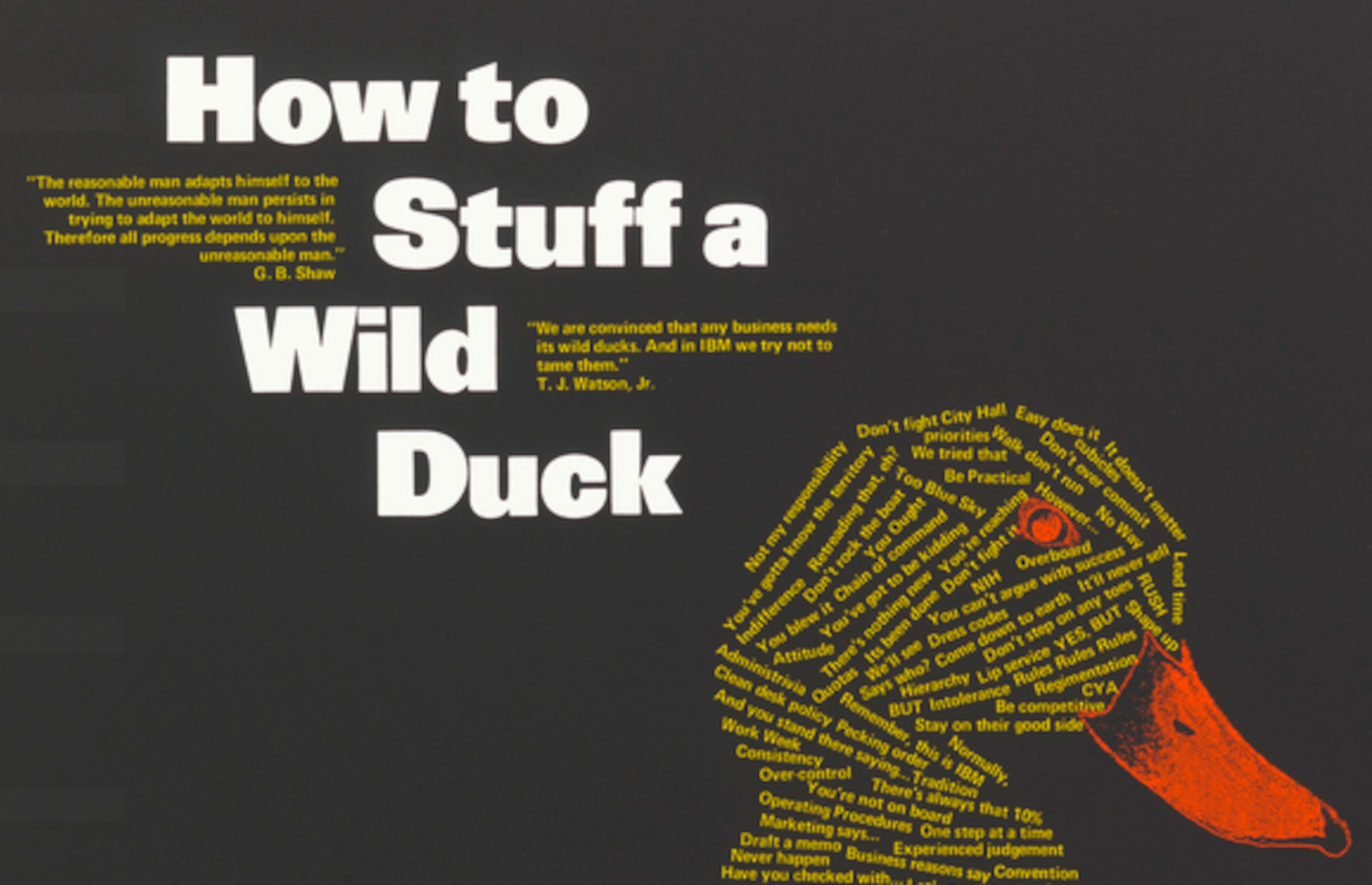

"How To Stuff A Wild Duck", 1973, IBM poster

https://collection.cooperhewitt.org/objects/18618011/

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Context switch cost Newsgroups: comp.arch Date: Sat, 18 Jun 2022 19:07:34 -1000

> I believe POWER uses a variation of the same scheme.

ROMP, 16 segment registers, each with 12bit segment id (top 4bits of 32bit virtual address indexed a segment register) ... they referred to it as 40bit addressing ... the 12bit segment id plus the 28bit address (within segment). no address space id ... designed for CP.r ... and would change segment register values as needed. originally ROMP had no protection domain, inline code could change segment register values as easily as general registers could be changed ... however that had to be "fixed" when moving to a unix process model & programming environment.

POWER (RIOS) just doubled the segment id to 24bits ... and some documentation would still refer to it as 24+28=52bit addressing ... even tho program model had changed to unix with different processes and simulating virtual address space IDs with sets of segment ids.
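To make the "40bit" and "52bit" counting concrete, here is a minimal Python sketch of the address formation described above: the top 4 bits of the 32bit address pick one of 16 segment registers, and the segment id from that register is concatenated with the remaining 28bit offset. The function name and the register contents are made up for illustration; this shows only the bit arithmetic, not page tables or protection.

```python
def virtual_address(effective_addr, segment_regs, seg_id_bits):
    """Concatenate the selected segment id with the 28bit offset within the segment."""
    assert len(segment_regs) == 16
    reg_index = (effective_addr >> 28) & 0xF   # top 4 bits select a segment register
    offset = effective_addr & 0x0FFF_FFFF      # low 28 bits: address within segment
    seg_id = segment_regs[reg_index] & ((1 << seg_id_bits) - 1)
    return (seg_id << 28) | offset


# ROMP-style: 12bit segment ids, 12 + 28 = 40 bits (register contents are made up)
romp_regs = [0] * 16
romp_regs[3] = 0xABC
romp_va = virtual_address(0x3000_1234, romp_regs, seg_id_bits=12)
print(hex(romp_va), romp_va.bit_length())

# RIOS-style: 24bit segment ids, 24 + 28 = 52 bits
rios_regs = [0] * 16
rios_regs[3] = 0xABCDEF
rios_va = virtual_address(0x3000_1234, rios_regs, seg_id_bits=24)
print(hex(rios_va), rios_va.bit_length())
```

Changing which segment a program sees is just a matter of loading a different segment id into one of the 16 registers, which is why unprotected inline changes had to be "fixed" for the unix process model.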

370 table look-asides could be completely reset anytime the address space id (segment table address) changed; higher-end 370s kept the most recent address space mappings, and table lookaside entries were address space id (segment table address) associative. romp/rios entries were segment id associative (not address space associative).

801/risc, iliad, romp, rios, power, power/pc, etc

https://www.garlic.com/~lynn/subtopic.html#801

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: VM Workshop Date: 18 June 2022 Blog: Facebook

hope everybody had a good time. Old (archived) 2012 postings from Linkedin (original tiny "URLs" no longer work) discussion groups about 40th, includes some older stuff about 1987 workshop

other trivia:

VM Knights

http://mvmua.org/knights.html

Mainframe Hall of Fame (alphabetical order)

https://www.enterprisesystemsmedia.com/mainframehalloffame

2005 esystems article (although they garbled some of the details), since gone through name changes and more recently 404

https://web.archive.org/web/20200103152517/http://archive.ibmsystemsmag.com/mainframe/stoprun/stop-run/making-history/

Performance History originally given at Oct86 SEAS (European SHARE), repeat presentation at 2011 (DC) Hillgang meeting

https://www.garlic.com/~lynn/hill0316g.pdf

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 3270 Trivia Date: 18 June 2022 Blog: Facebook

3277 had .086sec hardware response ... 3278 moved a large amount of electronics back to the 3274 controller (cutting manufacturing cost) ... resulting in huge amount of protocol chatter over the coax driving hardware response to .3sec-.5sec ... this was in the period when there were lots of published studies about increase in productivity with quarter sec (or better) response ... i.e. 3277 needed .25-.086=.164sec system response for human to see (.164+.086) quarter sec response ... in order to have quarter sec response with 3278, needed a time machine with negative system response ... i.e. .5sec (hardware response) plus negative .25sec (system response). Letters to the 3278 product administration about 3278 being much worse for interactive computing resulted in a reply that 3278 wasn't meant for interactive computing, but for data entry (aka electronic key punch).
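The response arithmetic above can be written out as a small Python sketch: whatever the terminal hardware uses up comes out of the quarter sec the human sees, and the remainder is the budget left for system response. The function name is made up for illustration; the numbers are the ones quoted above.

```python
TARGET = 0.25  # quarter sec response as seen by the human


def system_response_budget(hardware_response, target=TARGET):
    """System response time left after subtracting the terminal's hardware response."""
    return target - hardware_response


for name, hw in [("3277", 0.086), ("3278 best case", 0.3), ("3278 worst case", 0.5)]:
    budget = system_response_budget(hw)
    verdict = "achievable" if budget > 0 else "needs a time machine"
    print(f"{name}: {budget:+.3f} sec system response ({verdict})")
```

Both ends of the 3278 range come out negative, which is the "time machine" point: no system response, however fast, gets a 3278 user to a quarter sec.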

3277 had logic in the keyboard such that a little soldering could change the repeat key delay and repeat key rate. I had the repeat key rate faster than the cursor update on screen ... had to learn the timing of when to lift my finger so the cursor would coast to a stop at the desired location. 3270 also was half-duplex and the keyboard would lock up if typing at the same time as a screen write update. A fifo box was built: unplug the keyboard from the screen, plug the fifo box into the screen and plug the keyboard into the fifo box ... no more keyboard lockup (which had been horrible for interactive computing) ... none of this worked with 3278. Also 3277 had enuf electronics to be able to wire a large Tektronix graphics screen into 3277 for 3277GA ... sort of inexpensive 2250/3250

... 3278 protocol so degraded thruput that a later ibmpc 3277 hardware emulation card had 3-4 times the upload/download thruput of a 3278 hardware emulation card

some 3270 (FCS and other) trivia

1980 STL (now SVL) was bursting at the seams and they were moving 300 people from the IMS group (and 300 3270 terminals) to an offsite bldg with dataprocessing service back to the STL datacenter. They had tried "remote" 3270 (over telco lines), but found the human factors totally unacceptable (compared to channel connected 3270 controllers and my enhanced production operating systems). I get con'ed into doing channel-extender support to the offsite bldg so they could have channel attached controllers at the offsite bldg with no perceptible difference in response. The hardware vendor then tries to get IBM to release my support, but there are some engineers in POK playing with some serial stuff who were afraid that it would make it harder to release their stuff ... and get it veto'ed.

Note that 3270 controllers were relatively slow with exceptional channel busy ... getting them off the real IBM channels, replaced with a fast channel-extender interface box, increased STL 370/168 throughput by 10-15% (the 3270 controllers had been spread across the same channels shared with DASD ... and were interfering with DASD throughput, the fast channel-extender box radically cut the channel busy for the same amount of 3270 I/O). STL was considering using the channel extender box for all their 3270 controllers (even those purely in house).

In 1988, the IBM branch office asks me to help LLNL (national lab) get some serial stuff LLNL is playing with, released as standard ... which quickly becomes fibre channel standard (including some stuff I had done in 1980) ... started full-duplex 1gbit, 2gbit aggregate, 200mbyte/sec. Then in 1990, the POK engineers get their stuff released (when it is already obsolete) with ES/9000 as ESCON (17mbytes/sec).

Then some POK engineers become involved in FCS and define a protocol that radically reduces throughput, which is eventually released as FICON. The latest published FICON numbers is z196 "peak I/O" benchmark which got 2M IOPS with 104 FICON (running over 104 FCS). About the same time a FCS was announced for E5-2600 blades (commonly used in cloud datacenters) getting over a million IOPS (two such native FCS having higher throughput than 104 FICON running over 104 FCS).
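A quick Python sketch of the per-channel arithmetic behind that comparison, using only the figures quoted above and treating "over a million" as a lower bound of exactly one million (an assumption for the example); the function name is made up.

```python
def per_channel_iops(total_iops, channels):
    """Average IOPS carried by each channel in an aggregate benchmark."""
    return total_iops / channels


ficon_each = per_channel_iops(2_000_000, 104)  # z196 "peak I/O": 2M IOPS over 104 FICON
fcs_each = 1_000_000                           # native FCS, lower bound for "over a million"

print(f"per FICON: about {ficon_each:,.0f} IOPS")
print(f"one native FCS is at least {fcs_each / ficon_each:.0f} times one FICON")
print(f"two native FCS: at least {2 * fcs_each:,} IOPS vs 2,000,000 for 104 FICON")
```

On those numbers each FICON averages under 20K IOPS, so a single native FCS does the work of at least 52 of them.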

... after joining IBM, one of my hobbies was production operating systems for internal datacenters. After transferring to san jose research in the 70s, I got to wander around IBM and non-IBM datacenters in silicon valley ... including bldg14 (disk engineering) and bldg15 (disk product test) across the street. At the time they were running around the clock, 7x24, prescheduled, stand-alone mainframe time. They said they had recently tried MVS, but it had 15min mean-time-between-failure (in that environment). I offered to rewrite the I/O supervisor to be bullet proof and never fail, allowing any amount of on-demand, concurrent testing (greatly improving productivity). Downside was they kept calling and I had to spend increasing amount of time playing disk engineer (diagnose problems). I was also getting .11sec trivial interactive system response for my SJR/VM systems ... when it was rare for the normal production MVS systems to even get 1sec trivial interactive system response.

getting to play disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

channel-extender posts

https://www.garlic.com/~lynn/submisc.html#channel.extender

FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM "Fast-Track" Bureaucrats Date: 19 June 2022 Blog: Facebook

In the early 80s, I was introduced to John Boyd and would sponsor his briefings at IBM. The first time, I tried to do it through plant site employee education. At first they agreed, but as I provided more information about how to prevail/win in competitive situations, they changed their mind. They said that IBM spends a great deal of money training managers on how to handle employees and it wouldn't be in IBM's best interest to expose general employees to Boyd, I should limit audience to senior members of competitive analysis departments. First briefing in bldg28 auditorium open to all. One of his quotes:

"There are two career paths in front of you, and you have to choose which path you will follow. One path leads to promotions, titles, and positions of distinction.... The other path leads to doing things that are truly significant for the Air Force, but the rewards will quite often be a kick in the stomach because you may have to cross swords with the party line on occasion. You can't go down both paths, you have to choose. Do you want to be a man of distinction or do you want to do things that really influence the shape of the Air Force? To Be or To Do, that is the question."

... snip ...

Trivia: In 89/90 the commandant of the Marine Corps leverages Boyd for a make-over of the corps ... at a time when IBM was desperately in need of a make-over ... in 1992 IBM has one of the largest losses in the history of US companies and was being reorg'ed into the 13 baby blues in preparation for breaking up the company (board brings in new CEO who reverses the breakup)

ref gone behind paywall, mostly free at wayback machine

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

may also work

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

When Boyd passes in 1997, the USAF had pretty much disowned him and it was the Marines at Arlington. There have continued to be Boyd conferences at Marine Corps Univ. in Quantico ... including lots of discussions about careerists and bureaucrats (as well as the "old boy networks" and "risk averse").

Chuck's tribute to John

http://www.usni.org/magazines/proceedings/1997-07/genghis-john

for those w/o subscription

http://radio-weblogs.com/0107127/stories/2002/12/23/genghisJohnChuckSpinneysBioOfJohnBoyd.html

John Boyd - USAF. The Fighter Pilot Who Changed the Art of Air Warfare

http://www.aviation-history.com/airmen/boyd.htm

"John Boyd's Art of War; Why our greatest military theorist only made colonel"

http://www.theamericanconservative.com/articles/john-boyds-art-of-war/

40 Years of the 'Fighter Mafia'

https://www.theamericanconservative.com/articles/40-years-of-the-fighter-mafia/

Fighter Mafia: Colonel John Boyd, The Brain Behind Fighter Dominance

https://www.avgeekery.com/fighter-mafia-colonel-john-boyd-the-brain-behind-fighter-dominance/

Updated version of Boyd's Aerial Attack Study

https://tacticalprofessor.wordpress.com/2018/04/27/updated-version-of-boyds-aerial-attack-study/

A New Conception of War

https://www.usmcu.edu/Outreach/Marine-Corps-University-Press/Books-by-topic/MCUP-Titles-A-Z/A-New-Conception-of-War/

Boyd posts & web URLs

https://www.garlic.com/~lynn/subboyd.html

specific past posts mentioning To Be or To Do:

https://www.garlic.com/~lynn/2022d.html#75 "12 O'clock High" In IBM Management School

https://www.garlic.com/~lynn/2022b.html#0 Dataprocessing Career

https://www.garlic.com/~lynn/2022b.html#21 To Be Or To Do

https://www.garlic.com/~lynn/2022b.html#26 To Be Or To Do

https://www.garlic.com/~lynn/2021h.html#80 Warthog/A-10

https://www.garlic.com/~lynn/2022.html#48 Mainframe Career

https://www.garlic.com/~lynn/2021f.html#36 The Blind Strategist: John Boyd and the American Art of War

https://www.garlic.com/~lynn/2021f.html#51 Martial Arts "OODA-loop"

https://www.garlic.com/~lynn/2020.html#44 Watch AI-controlled virtual fighters take on an Air Force pilot on August 18th

https://www.garlic.com/~lynn/2021.html#0 IBM "Wild Ducks"

https://www.garlic.com/~lynn/2021.html#8 IBM CEOs

https://www.garlic.com/~lynn/2019c.html#25 virtual memory

https://www.garlic.com/~lynn/2019.html#12 Employees Come First

https://www.garlic.com/~lynn/2019.html#61 Employees Come First

https://www.garlic.com/~lynn/2019.html#82 The Sublime: Is it the same for IBM and Special Ops?

https://www.garlic.com/~lynn/2017k.html#13 Now Hear This-Prepare For The "To Be Or To Do" Moment

https://www.garlic.com/~lynn/2017k.html#68 Innovation?, Government, Military, Commercial

https://www.garlic.com/~lynn/2019e.html#138 Half an operating system: The triumph and tragedy of OS/2

https://www.garlic.com/~lynn/2017j.html#104 Now Hear This-Prepare For The "To Be Or To Do" Moment

https://www.garlic.com/~lynn/2019d.html#69 Decline of IBM

https://www.garlic.com/~lynn/2017g.html#47 The rise and fall of IBM

https://www.garlic.com/~lynn/2017f.html#14 Fast OODA-Loops increase Maneuverability

https://www.garlic.com/~lynn/2018.html#55 Now Hear This--Prepare For The "To Be Or To Do" Moment

https://www.garlic.com/~lynn/2018e.html#29 These Are the Best Companies to Work For in the U.S

https://www.garlic.com/~lynn/2017b.html#2 IBM 1970s

https://www.garlic.com/~lynn/2017c.html#64 Most people are secretly threatened by creativity

https://www.garlic.com/~lynn/2017c.html#74 Being Lazy Is the Key to Success, According to the Best-Selling Author of 'Moneyball'

https://www.garlic.com/~lynn/2017c.html#92 An OODA-loop is a far-from-equilibrium, non-linear system with feedback

https://www.garlic.com/~lynn/2018c.html#4 Cutting 'Old Heads' at IBM

https://www.garlic.com/~lynn/2017.html#89 The ICL 2900

https://www.garlic.com/~lynn/2016f.html#41 Misc. Success of Failure

https://www.garlic.com/~lynn/2016e.html#14 Leaked IBM email says cutting 'redundant' jobs is a 'permanent and ongoing' part of its business model

https://www.garlic.com/~lynn/2016c.html#20 To Be or To Do

https://www.garlic.com/~lynn/2016d.html#8 What Does School Really Teach Children

https://www.garlic.com/~lynn/2016.html#49 Strategy

https://www.garlic.com/~lynn/2015.html#54 How do we take political considerations into account in the OODA-Loop?

https://www.garlic.com/~lynn/2014h.html#52 EBFAS

https://www.garlic.com/~lynn/2014i.html#7 You can make your workplace 'happy'

https://www.garlic.com/~lynn/2014i.html#12 Let's Face It--It's the Cyber Era and We're Cyber Dumb

https://www.garlic.com/~lynn/2014i.html#13 IBM & Boyd

https://www.garlic.com/~lynn/2014d.html#91 IBM layoffs strike first in India; workers describe cuts as 'slaughter' and 'massive'

https://www.garlic.com/~lynn/2014e.html#9 Boyd for Business & Innovation Conference

https://www.garlic.com/~lynn/2014c.html#83 11 Years to Catch Up with Seymour

https://www.garlic.com/~lynn/2013m.html#23 "There IS no force, just inertia"

https://www.garlic.com/~lynn/2013m.html#28 The Reformers

https://www.garlic.com/~lynn/2013k.html#48 John Boyd's Art of War

https://www.garlic.com/~lynn/2013g.html#63 What Makes collecting sales taxes Bizarre?

https://www.garlic.com/~lynn/2014m.html#7 Information Dominance Corps Self Synchronization

https://www.garlic.com/~lynn/2014m.html#56 The Road Not Taken: Knowing When to Keep Your Mouth Shut

https://www.garlic.com/~lynn/2014m.html#61 Decimation of the valuation of IBM

https://www.garlic.com/~lynn/2013e.html#10 The Knowledge Economy Two Classes of Workers

https://www.garlic.com/~lynn/2013e.html#39 As an IBM'er just like the Marines only a few good men and women make the cut,

https://www.garlic.com/~lynn/2012o.html#65 ISO documentation of IBM 3375, 3380 and 3390 track format

https://www.garlic.com/~lynn/2012o.html#71 Is orientation always because what has been observed? What are your 'direct' experiences?

https://www.garlic.com/~lynn/2012j.html#32 Microsoft's Downfall: Inside the Executive E-mails and Cannibalistic Culture That Felled a Tech Giant

https://www.garlic.com/~lynn/2012i.html#51 Is this Boyd's fundamental postulate, 'to improve our capacity for independent action'? thoughts please

https://www.garlic.com/~lynn/2012k.html#40 Core characteristics of resilience

https://www.garlic.com/~lynn/2012k.html#66 The Perils of Content, the Perils of the Information Age

https://www.garlic.com/~lynn/2012k.html#67 Coping With the Bounds: Speculations on Nonlinearity in Military Affairs

https://www.garlic.com/~lynn/2012h.html#17 Hierarchy

https://www.garlic.com/~lynn/2012h.html#21 The Age of Unsatisfying Wars

https://www.garlic.com/~lynn/2012h.html#24 Baby Boomer Guys -- Do you look old? Part II

https://www.garlic.com/~lynn/2012h.html#63 Is this Boyd's fundamental postulate, 'to improve our capacity for independent action'?

https://www.garlic.com/~lynn/2012f.html#23 Time to competency for new software language?

https://www.garlic.com/~lynn/2012f.html#52 Does the Experiencing Self "Out-OODA" the Remembering Self?

https://www.garlic.com/~lynn/2012e.html#14 Are mothers naturally better at OODA because they always have the Win in mind?

https://www.garlic.com/~lynn/2012e.html#20 Are mothers naturally better at OODA because they always have the Win in mind?

https://www.garlic.com/~lynn/2012e.html#60 Candid Communications & Tweaking Curiosity, Tools to Consider

https://www.garlic.com/~lynn/2012e.html#70 Disruptive Thinkers: Defining the Problem

https://www.garlic.com/~lynn/2012e.html#72 Sunday Book Review: Mind of War

https://www.garlic.com/~lynn/2012c.html#14 Strategy subsumes culture

https://www.garlic.com/~lynn/2012c.html#51 How would you succinctly desribe maneuver warfare?

https://www.garlic.com/~lynn/2012d.html#40 Strategy subsumes culture

https://www.garlic.com/~lynn/2012b.html#42 Strategy subsumes culture

https://www.garlic.com/~lynn/2012b.html#68 Original Thinking Is Hard, Where Good Ideas Come From

https://www.garlic.com/~lynn/2011k.html#88 Justifying application of Boyd to a project manager

https://www.garlic.com/~lynn/2011i.html#57 Low Carb Mavericks, John Boyd and the Art of War

https://www.garlic.com/~lynn/2011d.html#3 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2011d.html#6 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2011d.html#12 I actually miss working at IBM

https://www.garlic.com/~lynn/2011d.html#49 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2011d.html#79 Mainframe technology in 2011 and beyond; who is going to run these Mainframes?