From: Lynn Wheeler <lynn@garlic.com>

Subject: Financial Engineering

Date: 01 Mar, 2025

Blog: Facebook

The last product we did was HA/6000, approved by Nick Donofrio in 1988 (before RS/6000 was announced), for the NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I rename it HA/CMP.

Early Jan1992 have a meeting with Oracle CEO and IBM/AWD Hester tells Ellison we would have 16-system clusters by mid92 and 128-system clusters by ye92. Then late Jan92, cluster scale-up is transferred for announce as IBM Supercomputer (for technical/scientific *ONLY*) and we are told we can't work on anything with more than four processors (we leave IBM a few months later).

1992, IBM has one of the largest losses in the history of US companies and was in the process of being re-orged into the 13 baby blues in preparation for breaking up the company (take off on the "baby bell" breakup a decade earlier)

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but get a call from the bowels of Armonk asking if we could help with the breakup. Before we get started, the board brings in the former AMEX president as CEO to try and save the company, who (somewhat) reverses the breakup.

Not long after leaving IBM, I was brought in as a consultant to a small client/server startup. Two of the former Oracle people (that we had worked with on HA/CMP cluster scale-up) were there, responsible for something they called "commerce server", and they wanted to do payment transactions. The startup had also invented a technology they called "SSL" that they wanted to use; the result is now frequently called "electronic commerce" (or ecommerce).

I had complete responsibility for everything between "web servers" and gateways to the financial industry payment networks. Payment network trouble desks had 5min initial problem diagnoses ... all circuit based. I had to do a lot of procedures, documentation and software to bring packet-based internet up to that level. I then did a talk (based on ecommerce work) "Why Internet Wasn't Business Critical Dataprocessing" ... which Postel (Internet standards editor) sponsored at ISI/USC.

Stockman and IBM financial engineering company:

https://www.amazon.com/Great-Deformation-Corruption-Capitalism-America-ebook/dp/B00B3M3UK6/

pg464/loc9995-10000:

IBM was not the born-again growth machine trumpeted by the mob of Wall Street momo traders. It was actually a stock buyback contraption on steroids. During the five years ending in fiscal 2011, the company spent a staggering $67 billion repurchasing its own shares, a figure that was equal to 100 percent of its net income.

pg465/loc10014-17:

Total shareholder distributions, including dividends, amounted to $82 billion, or 122 percent, of net income over this five-year period. Likewise, during the last five years IBM spent less on capital investment than its depreciation and amortization charges, and also shrank its constant dollar spending for research and development by nearly 2 percent annually.

... snip ...

(2013) New IBM Buyback Plan Is For Over 10 Percent Of Its Stock

http://247wallst.com/technology-3/2013/10/29/new-ibm-buyback-plan-is-for-over-10-percent-of-its-stock/

(2014) IBM Asian Revenues Crash, Adjusted Earnings Beat On Tax Rate Fudge; Debt Rises 20% To Fund Stock Buybacks (gone behind paywall)

https://web.archive.org/web/20140201174151/http://www.zerohedge.com/news/2014-01-21/ibm-asian-revenues-crash-adjusted-earnings-beat-tax-rate-fudge-debt-rises-20-fund-st

The company has represented that its dividends and share repurchases have come to a total of over $159 billion since 2000.

(2016) After Forking Out $110 Billion on Stock Buybacks, IBM Shifts Its Spending Focus

https://www.fool.com/investing/general/2016/04/25/after-forking-out-110-billion-on-stock-buybacks-ib.aspx

(2018) ... still doing buybacks ... but will (now?, finally?, a little?) shift focus, needing the money for the Red Hat purchase.

https://www.bloomberg.com/news/articles/2018-10-30/ibm-to-buy-back-up-to-4-billion-of-its-own-shares

(2019) IBM Tumbles After Reporting Worst Revenue In 17 Years As Cloud Hits Air Pocket (gone behind paywall)

https://web.archive.org/web/20190417002701/https://www.zerohedge.com/news/2019-04-16/ibm-tumbles-after-reporting-worst-revenue-17-years-cloud-hits-air-pocket

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

ecommerce gateways

https://www.garlic.com/~lynn/subnetwork.html#gateway

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

stock buyback posts

https://www.garlic.com/~lynn/submisc.html#stock.buyback

former AMEX president posts

https://www.garlic.com/~lynn/submisc.html#gerstner

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>

Subject: Large Datacenters

Date: 01 Mar, 2025

Blog: Facebook

I had taken a 2 credit-hr intro to fortran/computers and at the end of the semester was hired to rewrite 1401 MPIO in 360 assembler for 360/30. Univ was getting 360/67 for tss/360 to replace 709/1401 and got a 360/30 temporarily (replacing 1401) pending availability of 360/67. Univ shut down the datacenter on weekends and I got the whole place dedicated (although 48hrs w/o sleep made Mondays hard). I was given a bunch of hardware & software manuals and got to design and implement my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc ... and within a few weeks had a 2000 card assembler program. The 360/67 arrives within a year of taking the intro class and I was hired fulltime responsible for os/360 (tss/360 never came to production).

Then before I graduate, I'm hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into an independent business unit). I think the Renton datacenter was the largest in the world, with 360/65s arriving faster than they could be installed, boxes constantly staged in the hallways around the machine room. Lots of politics between the Renton director and the CFO, who only had a 360/30 up at Boeing field (although they enlarge the machine room to install a 360/67 for me to play with when I'm not doing other stuff). Then when I graduate, instead of staying with the CFO, I join the IBM science center.

I was introduced to John Boyd in the early 80s and would sponsor his briefings at IBM. He had lots of stories, including being very vocal that the electronics across the trail wouldn't work. Possibly as punishment he was put in command of "spook base" (Boyd would say it had the largest air conditioned bldg in that part of the world) about the same time I'm at Boeing

https://en.wikipedia.org/wiki/Operation_Igloo_White

https://web.archive.org/web/20030212092342/http://home.att.net/~c.jeppeson/igloo_white.html

Access to the environmentally controlled building was afforded via the main security lobby that also doubled as an airlock entrance and changing-room, where twelve inch-square pidgeon-hole bins stored individually name-labeled white KEDS sneakers for all TFA personnel. As with any comparable data processing facility of that era, positive pressurization was necessary to prevent contamination and corrosion of sensitive electro-mechanical data processing equipment. Reel-to-reel tape drives, removable hard-disk drives, storage vaults, punch-card readers, and inumerable relays in 1960's-era computers made for high-maintainence systems. Paper dust and chaff from fan-fold printers and the teletypes in the communications vault produced a lot of contamination. The super-fine red clay dust and humidity of northeast Thailand made it even more important to maintain a well-controlled and clean working environment. Maintenance of air-conditioning filters and chiller pumps was always a high-priority for the facility Central Plant, but because of the 24-hour nature of operations, some important systems were run to failure rather than taken off-line to meet scheduled preventative maintenance requirements. For security reasons, only off-duty TFA personnel of rank E-5 and above were allowed to perform the housekeeping in the facility, where they constantly mopped floors and cleaned the consoles and work areas. Contract civilian IBM computer maintenance staff were constantly accessing the computer sub-floor area for equipment maintenance or cable routing, with the numerous systems upgrades, and the underfloor plenum areas remained much cleaner than the average data processing facility. Poisonous snakes still found a way in, causing some excitement, and staff were occasionally reprimanded for shooting rubber bands at the flies during the moments of boredom that is every soldier's fate. Consuming beverages, food or smoking was not allowed on the computer floors, but only in the break area outside. Staff seldom left the compound for lunch. Most either ate C-rations, boxed lunches assembled and delivered from the base chow hall, or sandwiches and sodas purchased from a small snack bar installed in later years.

... snip ...

Boyd biography says "spook base" was a $2.5B "windfall" for IBM (ten times Renton).

In 89/90 the Commandant of the Marine Corps leverages Boyd for a make-over of the corps (at a time when IBM was desperately in need of a make-over).

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

1992 IBM has one of the largest losses in history of US companies and was being reorganized into the 13 baby blues in preparation for breaking up the company (take off on "baby bells" breakup a decade earlier).

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but get a call from the bowels of Armonk asking if we could help with the breakup. Before we get started, the board brings in the former AMEX president as CEO to try and save the company, who (somewhat) reverses the breakup.

Boyd passes in 1997. The USAF had pretty much disowned him; it was the Marines who were at Arlington, and his effects go to Quantico. The 89/90 commandant continued to sponsor regular Boyd-themed conferences at Marine Corps Univ. In one, the (former) commandant wanders in after lunch and speaks for two hrs (totally throwing the schedule off, but nobody complains). I'm in the back corner of the room and when he is done, he makes a beeline straight for me (and all I could think of was that I had been set up by Marines I've offended in the past, including the former head of the DaNang datacenter and later Quantico).

IBM Cambridge Science Center

https://www.garlic.com/~lynn/subtopic.html#545tech

Boyd posts and web URLs

https://www.garlic.com/~lynn/subboyd.html

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

former AMEX president posts

https://www.garlic.com/~lynn/submisc.html#gerstner

some recent 709/1401, MPIO, 360/67, univ, Boeing CFO, Renton posts

https://www.garlic.com/~lynn/2025.html#111 Computers, Online, And Internet Long Time Ago

https://www.garlic.com/~lynn/2025.html#91 IBM Computers

https://www.garlic.com/~lynn/2024g.html#106 IBM 370 Virtual Storage

https://www.garlic.com/~lynn/2024g.html#39 Applications That Survive

https://www.garlic.com/~lynn/2024g.html#17 60s Computers

https://www.garlic.com/~lynn/2024f.html#124 Any interesting PDP/TECO photos out there?

https://www.garlic.com/~lynn/2024f.html#110 360/65 and 360/67

https://www.garlic.com/~lynn/2024f.html#88 SHARE User Group Meeting October 1968 Film Restoration, IBM 360

https://www.garlic.com/~lynn/2024f.html#69 The joy of FORTH (not)

https://www.garlic.com/~lynn/2024f.html#20 IBM 360/30, 360/65, 360/67 Work

https://www.garlic.com/~lynn/2024e.html#136 HASP, JES2, NJE, VNET/RSCS

https://www.garlic.com/~lynn/2024e.html#67 The IBM Way by Buck Rogers

https://www.garlic.com/~lynn/2024e.html#24 Public Facebook Mainframe Group

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#79 Other Silicon Valley

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024d.html#63 360/65, 360/67, 360/75 750ns memory

https://www.garlic.com/~lynn/2024d.html#25 IBM 23June1969 Unbundling Announcement

https://www.garlic.com/~lynn/2024d.html#22 Early Computer Use

https://www.garlic.com/~lynn/2024c.html#93 ASCII/TTY33 Support

https://www.garlic.com/~lynn/2024c.html#15 360&370 Unix (and other history)

https://www.garlic.com/~lynn/2024c.html#9 Boeing and the Dark Age of American Manufacturing

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#49 Vintage 2250

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#87 IBM 360

--


From: Lynn Wheeler <lynn@garlic.com>

Subject: Re: Why VAX Was the Ultimate CISC and Not RISC

Newsgroups: comp.arch

Date: Sat, 01 Mar 2025 18:29:50 -1000

anton@mips.complang.tuwien.ac.at (Anton Ertl) writes:

When follow-on to DISPLAYWRITER was canceled, they pivoted to UNIX workstation market and got the company that had done AT&T unix port to IBM/PC for PC/IX ... to do AIX. Now ROMP needed supervisor/problem mode and inline code could no longer change segment register values ... needed to have supervisor call.

Folklore is they also had 200 PL.8 programmers and needed something for them to do, so they gen'ed an abstract virtual machine system ("VRM") (implemented in PL.8) and had the AIX port be done to the abstract virtual machine definition (instead of real hardware) .... claiming that the combined effort would be less (total effort) than having the outside company do the AIX port to the real hardware (also putting in a lot of IBM SNA communication support).

The IBM Palo Alto group had been working on a UCB BSD port to 370, but was redirected to do it instead to bare ROMP hardware ... doing it with enormously fewer resources than the VRM+AIX+SNA effort.

The move to RS/6000 & RIOS (large multi-chip) doubled the 12bit segment-id to 24bit segment-id (and some left-over description talked about it being 52bit addressing) and eliminated the VRM ... and added in some amount of BSDisms.

AWD had done their own cards for the PC/RT (16bit AT) bus, including a 4mbit token-ring card. Then for RS/6000 microchannel, AWD was told they couldn't do their own cards, but had to use PS2 microchannel cards. The communication group was fiercely fighting off client/server and distributed computing and had seriously performance knee-capped the PS2 cards, including the ($800) 16mbit token-ring card (the PS2 microchannel card had lower throughput than the PC/RT 4mbit TR card). There was a joke that a PC/RT 4mbit TR server had higher throughput than an RS/6000 16mbit TR server. There was also a joke that the RS6000/730 with VMEbus was a workaround for corporate politics, making it possible to install high-performance workstation cards.

We got the HA/6000 project in 1988 (approved by Nick Donofrio), originally for NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I rename it HA/CMP.

https://en.wikipedia.org/wiki/IBM_High_Availability_Cluster_Multiprocessing

when I start doing technical/scientific cluster scale-up with national labs (LLNL, LANL, NCAR, etc) and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Ingres, Informix that had vaxcluster support in same source base with unix). The S/88 product administrator then starts taking us around to their customers and also has me do a section for the corporate continuous availability strategy document ... it gets pulled when both Rochester/AS400 and POK/(high-end mainframe) complain they couldn't meet the requirements.

Early Jan1992 have a meeting with Oracle CEO and IBM/AWD Hester tells Ellison we would have 16-system clusters by mid92 and 128-system clusters by ye92. Then late Jan92, cluster scale-up is transferred for announce as IBM Supercomputer (for technical/scientific *ONLY*) and we are told we can't work on anything with more than four processors (we leave IBM a few months later). Contributing was the mainframe DB2 DBMS group complaining that if we were allowed to continue, it would be at least five years ahead of them.

Neither ROMP nor RIOS supported bus/cache consistency for multiprocessor operation. The executive we reported to went over to head up ("AIM" - Apple, IBM, Motorola) Somerset for single chip 801/risc ... but also adopts the Motorola 88k bus, enabling multiprocessor configurations. He later leaves Somerset to be president of (SGI owned) MIPS.

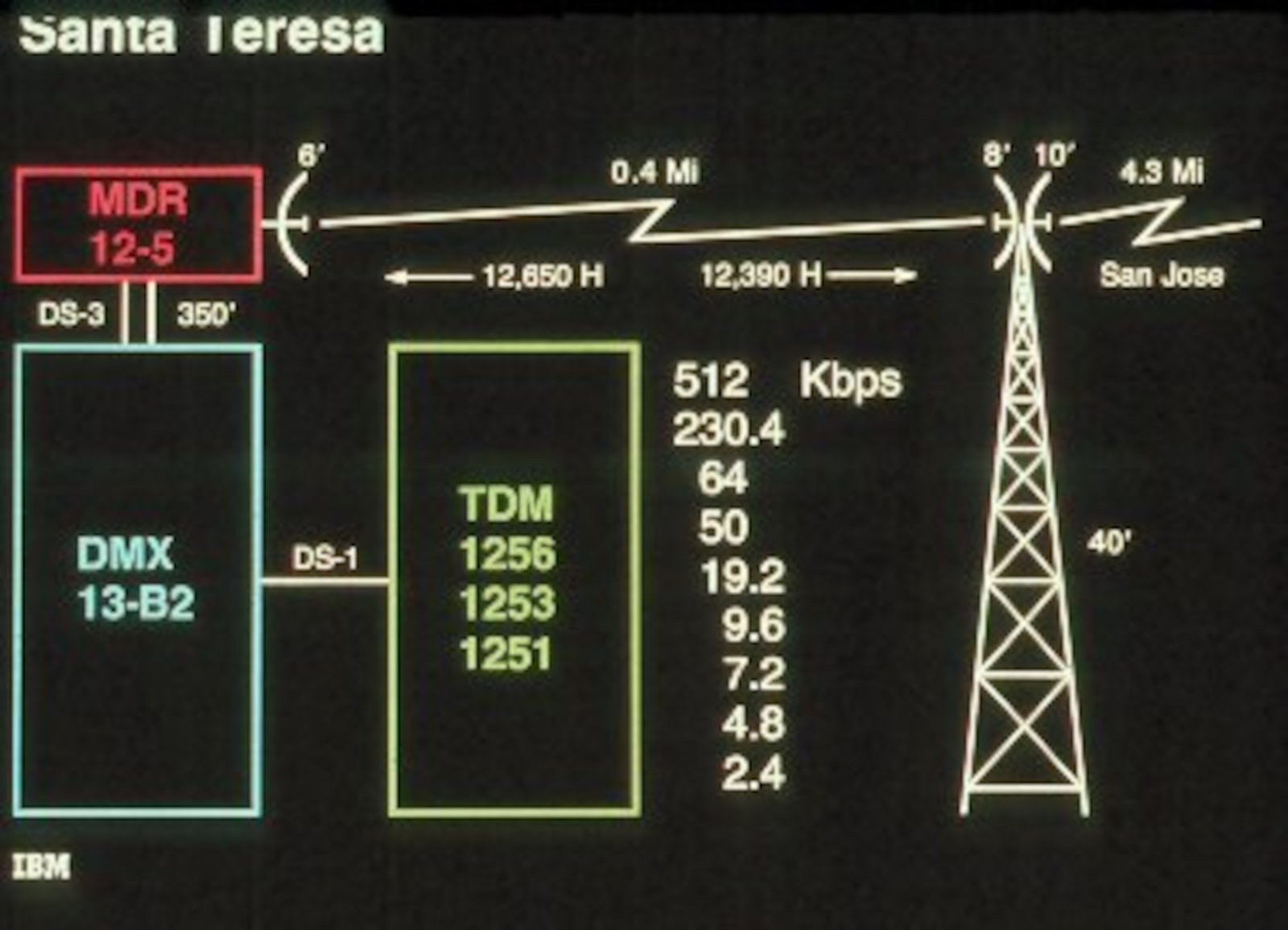

trivia: I also had the HSDT project (started in early 80s), T1 and faster computer links, both terrestrial and satellite ... which included a custom designed TDMA satellite system done on the other side of the pacific ... and put in a 3-node system: two 4.5M dishes, one in San Jose and one in Yorktown Research (hdqtrs, east coast), and a 7M dish in Austin (where much of the RIOS design was going on). San Jose also got an EVE, a superfast hardware VLSI logic simulator (scores of times faster than existing simulation) ... and it was claimed that Austin being able to use the EVE in San Jose helped bring RIOS in a year early.

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

ha/cmp posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

hsdt posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

--


From: Lynn Wheeler <lynn@garlic.com>

Subject: Clone 370 System Makers

Date: 02 Mar, 2025

Blog: Facebook

re:

Note: several years after the Amdahl incident (and being told to say goodbye to career, promotions, raises), I wrote an IBM "speakup" about being underpaid, with some supporting documents. I got a written reply from the head of HR that after a detailed review of my whole career, I was being paid exactly what I was supposed to be paid. I then made a copy of the original "speakup" and the head of HR's reply and wrote a cover note stating that I had recently been asked to help interview some number of students that would be shortly graduating, for positions in a new group that I would be technically directing ... and found out that they were being offered starting salaries that were 1/3rd more than I was currently making. I never got a written reply, but a few weeks later I got a 33% raise (putting me on a level playing field with new graduate hires). Several people then reminded me that "Business Ethics" was an oxymoron.

some past posts mentioning the speakup

https://www.garlic.com/~lynn/2023c.html#89 More Dataprocessing Career

https://www.garlic.com/~lynn/2023b.html#101 IBM Oxymoron

https://www.garlic.com/~lynn/2022h.html#24 Inventing the Internet

https://www.garlic.com/~lynn/2022f.html#42 IBM Bureaucrats

https://www.garlic.com/~lynn/2022e.html#59 IBM CEO: Only 60% of office workers will ever return full-time

https://www.garlic.com/~lynn/2022d.html#35 IBM Business Conduct Guidelines

https://www.garlic.com/~lynn/2022b.html#95 IBM Salary

https://www.garlic.com/~lynn/2022b.html#27 Dataprocessing Career

https://www.garlic.com/~lynn/2021k.html#125 IBM Clone Controllers

https://www.garlic.com/~lynn/2021j.html#39 IBM Registered Confidential

https://www.garlic.com/~lynn/2021j.html#12 Home Computing

https://www.garlic.com/~lynn/2021i.html#82 IBM Downturn

https://www.garlic.com/~lynn/2021h.html#61 IBM Starting Salary

https://www.garlic.com/~lynn/2021e.html#15 IBM Internal Network

https://www.garlic.com/~lynn/2021d.html#86 Bizarre Career Events

https://www.garlic.com/~lynn/2021c.html#40 Teaching IBM class

https://www.garlic.com/~lynn/2021b.html#12 IBM "811", 370/xa architecture

https://www.garlic.com/~lynn/2017d.html#49 IBM Career

https://www.garlic.com/~lynn/2017.html#78 IBM Disk Engineering

https://www.garlic.com/~lynn/2014i.html#47 IBM Programmer Aptitude Test

https://www.garlic.com/~lynn/2014h.html#81 The Tragedy of Rapid Evolution?

https://www.garlic.com/~lynn/2014c.html#65 IBM layoffs strike first in India; workers describe cuts as 'slaughter' and 'massive'

https://www.garlic.com/~lynn/2012k.html#42 The IBM "Open Door" policy

https://www.garlic.com/~lynn/2012k.html#28 How to Stuff a Wild Duck

https://www.garlic.com/~lynn/2011g.html#12 Clone Processors

https://www.garlic.com/~lynn/2011g.html#2 WHAT WAS THE PROJECT YOU WERE INVOLVED/PARTICIPATED AT IBM THAT YOU WILL ALWAYS REMEMBER?

https://www.garlic.com/~lynn/2010c.html#82 search engine history, was Happy DEC-10 Day

https://www.garlic.com/~lynn/2009h.html#74 My Vintage Dream PC

https://www.garlic.com/~lynn/2007j.html#94 IBM Unionization

https://www.garlic.com/~lynn/2007j.html#83 IBM Unionization

https://www.garlic.com/~lynn/2007j.html#75 IBM Unionization

https://www.garlic.com/~lynn/2007e.html#48 time spent/day on a computer

--


From: Lynn Wheeler <lynn@garlic.com>

Subject: Re: Why VAX Was the Ultimate CISC and Not RISC

Newsgroups: comp.arch

Date: Sun, 02 Mar 2025 09:03:53 -1000

Robert Swindells <rjs@fdy2.co.uk> writes:

from long ago and far away:

Date: 79/07/11 11:00:03

To: wheeler

i heard a funny story: seems the MIT LISP machine people proposed that IBM furnish them with an 801 to be the engine for their prototype. B.O. Evans considered their request, and turned them down.. offered them an 8100 instead! (I hope they told him properly what they thought of that)

... snip ...

... trivia: Evans had asked my wife to review/audit the 8100 (it had a really slow, anemic processor) and shortly afterwards it was canceled ("decommitted").

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

misc past posts with same email

https://www.garlic.com/~lynn/2023e.html#84 memory speeds, Solving the Floating-Point Conundrum

https://www.garlic.com/~lynn/2006t.html#9 32 or even 64 registers for x86-64?

https://www.garlic.com/~lynn/2006o.html#45 "25th Anniversary of the Personal Computer"

https://www.garlic.com/~lynn/2006c.html#3 Architectural support for programming languages

https://www.garlic.com/~lynn/2003e.html#65 801 (was Re: Reviving Multics

--


From: Lynn Wheeler <lynn@garlic.com>

Subject: RDBMS, SQL/DS, DB2, HA/CMP

Date: 02 Mar, 2025

Blog: Facebook

Vern Watts responsible for IMS

SQL/Relational started 1974 at San Jose Research (main plant site) as System/R, implementing on VM370

Some of the MIT CTSS/7094 people went to the 5th flr to do Multics, others went to the IBM Cambridge Science Center ("CSC") on the 4th flr and did virtual machines (initially CP40/CMS on 360/40 with virtual memory hardware mods, which morphs into CP67/CMS when 360/67 standard with virtual memory becomes available), the internal network, invented GML in 1969, and lots of online apps. When the decision was made to add virtual memory to all 370s, some of the CSC people split off and take over the IBM Boston Programming Center on the 3rd flr for the VM370 development group (and CP67/CMS morphs into VM370/CMS).

Multics releases the 1st relational RDBMS (non-SQL) in June 1976

https://www.mcjones.org/System_R/mrds.html

STL (since renamed SVL) didn't appear until 1977. It was originally going to be called Coyote, after the convention of naming for the closest Post Office. However, that spring the San Francisco Coyote Organization demonstrated on the steps of the capitol and it was quickly decided to choose a different name (prior to the opening), eventually the closest cross street. Vern and IMS move up from the LA area to STL. It was the same year that I transferred from CSC to San Jose Research and would work on some of System/R with Jim Gray and Vera Watson. There was some amount of criticism from the IMS group about System/R, including the index requiring lots more I/O and double the disk space.

First SQL/RDBMS ships, Oracle

https://www.mcjones.org/System_R/SQL_Reunion_95/sqlr95-Oracle.html

STL was in the process of doing the next great DBMS, "EAGLE" and we were able to do technology transfer to Endicott (under the "radar", while company pre-occupied with "EAGLE") for SQL/DS

https://www.mcjones.org/System_R/SQL_Reunion_95/sqlr95-SQL_DS.html

Later "EAGLE" implodes and there is a request for how fast could System/R be ported to MVS ... eventually released as DB2, originally for decision-support.

https://www.mcjones.org/System_R/SQL_Reunion_95/sqlr95-DB2.html

Trivia: Jim Gray departs for Tandem fall 1980, palming off some things on me. The last product at IBM was HA/6000 starting 1988, originally for NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I rename it HA/CMP

https://en.wikipedia.org/wiki/IBM_High_Availability_Cluster_Multiprocessing

when I start doing technical/scientific cluster scale-up with national labs (LLNL, LANL, NCAR, etc) and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Ingres, Informix that had vaxcluster support in same source base with unix). The S/88 product administrator then starts taking us around to their customers and also has me do a section for the corporate continuous availability strategy document ... it gets pulled when both Rochester/AS400 and POK/(high-end mainframe) complain they couldn't meet the requirements.

Early Jan1992 have a meeting with Oracle CEO and IBM/AWD Hester tells Ellison we would have 16-system clusters by mid92 and 128-system clusters by ye92. Then late Jan92, cluster scale-up is transferred for announce as IBM Supercomputer (for technical/scientific *ONLY*) and we are told we can't work on anything with more than four processors (we leave IBM a few months later).

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

System/R posts

https://www.garlic.com/~lynn/submain.html#systemr

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

continuous availability, disaster survivability, geographic survivability posts

https://www.garlic.com/~lynn/submain.html#available

posts mentioning HA/CMP, S/88, Continuous Availability Strategy document:

https://www.garlic.com/~lynn/2025b.html#2 Why VAX Was the Ultimate CISC and Not RISC

https://www.garlic.com/~lynn/2025b.html#0 Financial Engineering

https://www.garlic.com/~lynn/2025.html#119 Consumer and Commercial Computers

https://www.garlic.com/~lynn/2025.html#106 Giant Steps for IBM?

https://www.garlic.com/~lynn/2025.html#104 Mainframe dumps and debugging

https://www.garlic.com/~lynn/2025.html#89 Wang Terminals (Re: old pharts, Multics vs Unix)

https://www.garlic.com/~lynn/2025.html#57 Multics vs Unix

https://www.garlic.com/~lynn/2025.html#24 IBM Mainframe Comparison

https://www.garlic.com/~lynn/2024g.html#82 IBM S/38

https://www.garlic.com/~lynn/2024g.html#5 IBM Transformational Change

https://www.garlic.com/~lynn/2024f.html#67 IBM "THINK"

https://www.garlic.com/~lynn/2024f.html#36 IBM 801/RISC, PC/RT, AS/400

https://www.garlic.com/~lynn/2024f.html#25 Future System, Single-Level-Store, S/38

https://www.garlic.com/~lynn/2024f.html#3 Emulating vintage computers

https://www.garlic.com/~lynn/2024e.html#138 IBM - Making The World Work Better

https://www.garlic.com/~lynn/2024e.html#55 Article on new mainframe use

https://www.garlic.com/~lynn/2024d.html#12 ADA, FAA ATC, FSD

https://www.garlic.com/~lynn/2024d.html#4 Disconnect Between Coursework And Real-World Computers

https://www.garlic.com/~lynn/2024c.html#105 Financial/ATM Processing

https://www.garlic.com/~lynn/2024c.html#79 Mainframe and Blade Servers

https://www.garlic.com/~lynn/2024c.html#60 IBM "Winchester" Disk

https://www.garlic.com/~lynn/2024c.html#54 IBM 3705 & 3725

https://www.garlic.com/~lynn/2024c.html#7 Testing

https://www.garlic.com/~lynn/2024c.html#3 ReBoot Hill Revisited

https://www.garlic.com/~lynn/2024b.html#111 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#84 IBM DBMS/RDBMS

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024b.html#29 DB2

https://www.garlic.com/~lynn/2024b.html#22 HA/CMP

https://www.garlic.com/~lynn/2024.html#93 IBM, Unix, editors

https://www.garlic.com/~lynn/2024.html#35 RS/6000 Mainframe

https://www.garlic.com/~lynn/2023f.html#115 IBM RAS

https://www.garlic.com/~lynn/2023f.html#72 Vintage RS/6000 Mainframe

https://www.garlic.com/~lynn/2023f.html#70 Vintage RS/6000 Mainframe

https://www.garlic.com/~lynn/2023f.html#38 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2022b.html#55 IBM History

https://www.garlic.com/~lynn/2021d.html#53 IMS Stories

https://www.garlic.com/~lynn/2021.html#3 How an obscure British PC maker invented ARM and changed the world

--


From: Lynn Wheeler <lynn@garlic.com>

Subject: 2301 Fixed-Head Drum

Date: 05 Mar, 2025

Blog: Facebook

re:

late 70s, I tried to get 2305-like "multiple exposure" (aka multiple subchannel addresses, where the controller could do real-time scheduling of requests queued at the different subchannel addresses) for the 3350 fixed-head feature, so I could do (paging) data transfer overlapped with 3350 arm seek. There was a group in POK doing "VULCAN", an electronic disk ... and they got the 3350 "multiple exposure" work vetoed. Then VULCAN was told that IBM was selling every memory chip it made as (higher markup) processor memory ... and VULCAN was canceled; however, by then it was too late to resurrect 3350 multiple exposure (and went ahead with non-IBM 1655).

trivia: after decision to add virtual memory to all 370s, some of science center (4th flr) splits off and takes over the IBM Boston Programming Center (3rd flr) for the VM370 Development group (morph CP67->VM370). At the same time there was joint effort between Endicott and Science Center to add 370 virtual machines to CP67 ("CP67H", the new 370 instructions and the different format for 370 virtual memory). When that was done there was then further CP67 mods for CP67I which ran on 370 architecture (in CP67H 370 virtual machines for a year before the first engineering 370 with virtual memory was ready to test ... by trying to IPL CP67I). As more and more 370s w/virtual memory became available, three engineers from San Jose came out to add 3330 and 2305 device support to CP67I for CP67SJ. CP67SJ was in regular use inside IBM, even after VM370 became available.

CSC posts:

https://www.garlic.com/~lynn/subtopic.html#545tech

getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

recent posts mentioning cp/67h, cp/67i cp/67sj

https://www.garlic.com/~lynn/2025.html#122 Clone 370 System Makers

https://www.garlic.com/~lynn/2025.html#121 Clone 370 System Makers

https://www.garlic.com/~lynn/2025.html#120 Microcode and Virtual Machine

https://www.garlic.com/~lynn/2025.html#10 IBM 37x5

https://www.garlic.com/~lynn/2024g.html#108 IBM 370 Virtual Storage

https://www.garlic.com/~lynn/2024g.html#73 Early Email

https://www.garlic.com/~lynn/2024f.html#112 IBM Email and PROFS

https://www.garlic.com/~lynn/2024f.html#80 CP67 And Source Update

https://www.garlic.com/~lynn/2024f.html#29 IBM 370 Virtual memory

https://www.garlic.com/~lynn/2024d.html#68 ARPANET & IBM Internal Network

https://www.garlic.com/~lynn/2024c.html#88 Virtual Machines

https://www.garlic.com/~lynn/2023g.html#63 CP67 support for 370 virtual memory

https://www.garlic.com/~lynn/2023g.html#4 Vintage Future System

https://www.garlic.com/~lynn/2023f.html#47 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023e.html#70 The IBM System/360 Revolution

https://www.garlic.com/~lynn/2023e.html#44 IBM 360/65 & 360/67 Multiprocessors

https://www.garlic.com/~lynn/2023d.html#98 IBM DASD, Virtual Memory

https://www.garlic.com/~lynn/2023d.html#24 VM370, SMP, HONE

https://www.garlic.com/~lynn/2023.html#74 IBM 4341

https://www.garlic.com/~lynn/2023.html#71 IBM 4341

https://www.garlic.com/~lynn/2022h.html#22 370 virtual memory

https://www.garlic.com/~lynn/2022e.html#94 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022e.html#80 IBM Quota

https://www.garlic.com/~lynn/2022d.html#59 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022.html#55 Precursor to current virtual machines and containers

https://www.garlic.com/~lynn/2022.html#12 Programming Skills

https://www.garlic.com/~lynn/2021k.html#23 MS/DOS for IBM/PC

https://www.garlic.com/~lynn/2021g.html#34 IBM Fan-fold cards

https://www.garlic.com/~lynn/2021g.html#6 IBM 370

https://www.garlic.com/~lynn/2021d.html#39 IBM 370/155

https://www.garlic.com/~lynn/2021c.html#5 Z/VM

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Why VAX Was the Ultimate CISC and Not RISC Newsgroups: comp.arch Date: Thu, 06 Mar 2025 16:11:15 -1000

John Levine <johnl@taugh.com> writes:

long ago and far away ... comparing pascal to pascal front-end with

pl.8 back-end (3033 is 370 about 4.5MIPS)

Date: 8 August 1981, 16:47:28 EDT

To: wheeler

the 801 group here has run a program under several different PASCAL

"systems". The program was about 350 statements and basically

"solved" SOMA (block puzzle..). Although this is only one test, and

all of the usual caveats apply, I thought the numbers were

interesting... The numbers given in each case are EXECUTION TIME ONLY

(Virtual on 3033).

6m 30 secs PERQ (with PERQ's Pascal compiler, of course)

4m 55 secs 68000 with PASCAL/PL.8 compiler at OPT 2

0m 21.5 secs 3033 PASCAL/VS with Optimization

0m 10.5 secs 3033 with PASCAL/PL.8 at OPT 0

0m 5.9 secs 3033 with PASCAL/PL.8 at OPT 3

... snip ...
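The timings in the email are easier to compare as ratios. The following is only a small sketch of that arithmetic; the dictionary labels and the `ratios` helper are made up for illustration, and the seconds are taken directly from the quoted email.

```python
# Execution times quoted in the 8 August 1981 email, converted to seconds.
timings = {
    "PERQ (PERQ Pascal)": 6 * 60 + 30,
    "68000 PASCAL/PL.8 OPT 2": 4 * 60 + 55,
    "3033 PASCAL/VS optimized": 21.5,
    "3033 PASCAL/PL.8 OPT 0": 10.5,
    "3033 PASCAL/PL.8 OPT 3": 5.9,
}


def ratios(times, baseline):
    """Return how many times longer each run took than the baseline run."""
    base = times[baseline]
    return {name: secs / base for name, secs in times.items()}


if __name__ == "__main__":
    # Compare every run against the fastest one in the email.
    for name, r in ratios(timings, "3033 PASCAL/PL.8 OPT 3").items():
        print(f"{name:28s} {r:6.1f}x")
```

As the email itself says, this is a single test program, so the ratios are indicative at best.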

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: The joy of FORTRAN Newsgroups: alt.folklore.computers, comp.os.linux.misc Date: Fri, 07 Mar 2025 06:46:48 -1000

cross@spitfire.i.gajendra.net (Dan Cross) writes:

IBM 4300s competed with VAX in the mid-range market and sold in approx

same numbers in small unit orders ... big difference was large

corporations with orders for hundreds of vm/4300s (in at least one

case almost 1000) at a time for placing out in departmental areas

(sort of the leading edge of distributed computing tsunami). old afc

post with decade of VAX sales, sliced&diced by year, model, US/non-US.

https://www.garlic.com/~lynn/2002f.html#0

Inside IBM, conference rooms were becoming scarce since so many were

being converted to vm4341 rooms. IBM was expecting to see same

explosion in 4361/4381 orders (as 4331/4341), but by 2nd half of 80s,

market was moving to workstations and large PCs, 30yrs of pc market

share (original articles were separate URLs, now condensed to single

web page, with original URLs remapped to displacements)

https://arstechnica.com/features/2005/12/total-share/

I got availability of early engineering 4341 in 1978 and IBM branch heard about it and in jan1979 conned me into doing national lab benchmark (60s cdc6600 "rain/rain4" fortran) looking at getting 70 for compute farm (sort of leading edge of the coming cluster supercomputing tsunami). Then BofA was getting 60 VM/4341s for distributed System/R (original SQL/relational) pilot.

upthread mentioned doing HA/CMP (targeted for both technical/scientific and commercial) cluster scale-up (and then it is transferred for announce as IBM Supercomputer for technical/scientific *ONLY*) and we were told we couldn't work on anything with more than four processors.

801/risc (PC/RT, RS/6000) didn't have coherent cache so didn't have SMP scale-up ... only scale-up method was cluster ...

1993 large mainframe compared to RS/6000

• ES/9000-982 : 8CPU 408MIPS, 51MIPS/CPU

• RS6000/990 : 126MIPS; 16-system: 2BIPS; 128-system: 16BIPS
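The cluster figures above are simple multiples of the single-system number. This is a sketch of that arithmetic, assuming ideal linear scaling with no interconnect or coordination overhead (the function and constant names are illustrative only):

```python
# Figures from the 1993 comparison above.
ES9000_982_TOTAL_MIPS = 408
ES9000_982_CPUS = 8
RS6000_990_MIPS = 126


def per_cpu_mips(total_mips, cpus):
    """Average MIPS per processor for a shared-memory multiprocessor."""
    return total_mips / cpus


def cluster_mips(per_system_mips, systems):
    """Aggregate MIPS for a cluster, assuming ideal linear scaling."""
    return per_system_mips * systems


if __name__ == "__main__":
    print(per_cpu_mips(ES9000_982_TOTAL_MIPS, ES9000_982_CPUS))  # MIPS per CPU
    print(cluster_mips(RS6000_990_MIPS, 16))    # roughly 2 BIPS
    print(cluster_mips(RS6000_990_MIPS, 128))   # roughly 16 BIPS
```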

executive we reported to went over to head up AIM/Somerset to do

single-chip power/pc ... and picked up Motorola 88k bus ... so could

then do SMP configs (and/or clusters of SMP)

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: HSDT Date: 07 Mar, 2025 Blog: Facebook

long winded:

In early 80s, got IBM HSDT project, T1 and faster computer links (terrestrial and satellite) and some amount of conflicts with the communication group (note in 60s, IBM had 2701 controller that supported T1 computer links, but going into 70s and uptake of SNA/VTAM, issues appeared to cap controller links at 56kbits/sec). I was working with NSF director and was to get $20M to interconnect NSF Supercomputer Centers. Then congress cuts the budget, some other things happen and eventually an RFP is released (in part based on what we already have running).

NSF 28Mar1986 Preliminary Announcement

https://www.garlic.com/~lynn/2002k.html#12

The OASC has initiated three programs: The Supercomputer Centers

Program to provide Supercomputer cycles; the New Technologies Program

to foster new supercomputer software and hardware developments; and

the Networking Program to build a National Supercomputer Access

Network - NSFnet.

... snip ...

IBM internal politics was not allowing us to bid (being blamed for online computer conferencing inside IBM likely contributed, folklore was when corporate executive committee was told, 5of6 wanted to fire me). The NSF director tried to help by writing the company a letter (3Apr1986, NSF Director to IBM Chief Scientist and IBM Senior VP and director of Research, copying IBM CEO) with support from other gov. agencies ... but that just made the internal politics worse (as did claims that what we already had operational was at least 5yrs ahead of the winning bid). As regional networks connect in, it becomes the NSFNET backbone, precursor to modern internet.

in between, NSF was asking me to do presentations at some current

and/or possible future NSF Supercomputer locations (old archived

email)

https://www.garlic.com/~lynn/2011b.html#email850325

https://www.garlic.com/~lynn/2011b.html#email850325b

https://www.garlic.com/~lynn/2011b.html#email850326

https://www.garlic.com/~lynn/2011b.html#email850402

https://www.garlic.com/~lynn/2015c.html#email850408

https://www.garlic.com/~lynn/2011c.html#email850425

https://www.garlic.com/~lynn/2011c.html#email850425b

https://www.garlic.com/~lynn/2006w.html#email850607

https://www.garlic.com/~lynn/2006t.html#email850930

https://www.garlic.com/~lynn/2011c.html#email851001

https://www.garlic.com/~lynn/2011b.html#email851106

https://www.garlic.com/~lynn/2011b.html#email851114

https://www.garlic.com/~lynn/2006t.html#email860407

https://www.garlic.com/~lynn/2007.html#email860428

https://www.garlic.com/~lynn/2007.html#email860428b

https://www.garlic.com/~lynn/2007.html#email860430

had some exchanges with Melinda (at princeton)

https://www.leeandmelindavarian.com/Melinda#VMHist

from or to Melinda/Princeton (pucc)

https://www.garlic.com/~lynn/2007b.html#email860111

https://www.garlic.com/~lynn/2007b.html#email860113

https://www.garlic.com/~lynn/2007b.html#email860114

https://www.garlic.com/~lynn/2011b.html#email860217

https://www.garlic.com/~lynn/2011b.html#email860217b

https://www.garlic.com/~lynn/2011c.html#email860407

related

https://www.garlic.com/~lynn/2011c.html#email850426

https://www.garlic.com/~lynn/2006t.html#email850506

https://www.garlic.com/~lynn/2007b.html#email860124

earlier IBM branch brings me into Berkeley "10M" looking at doing

remote viewing

https://www.garlic.com/~lynn/2004h.html#email830804

https://www.garlic.com/~lynn/2004h.html#email830822

https://www.garlic.com/~lynn/2004h.html#email830830

https://www.garlic.com/~lynn/2004h.html#email841121

https://www.garlic.com/~lynn/2011b.html#email850409

https://www.garlic.com/~lynn/2004h.html#email860519

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Token-Ring Date: 08 Mar, 2025 Blog: Facebook

The communication group dumb 3270s (and PC 3270 emulators) had point-to-point coax from machine room to each terminal. Several large corporations were starting to exceed building load limits from the weight of all that coax, so needed much lighter and easier to manage solution ... leading to CAT (shielded twisted pair) and token-ring LAN technology (trivia: my wife was co-inventor on early token passing patent used for the IBM Series/1 "chat ring")

IBM workstation division did their own cards for the PC/RT (16bit PC/AT bus), including 4mbit token-ring card. Then for RS/6000 w/microchannel, they were told they couldn't do their own cards and had to use PS2 microchannel cards. The communication group was fiercely fighting off client/server and distributed computing (trying to preserve their dumb terminal paradigm and install base) and had severely performance kneecapped microchannel cards. The PS2 microchannel 16mbit token-ring card had lower card throughput than the PC/RT 4mbit token-ring card (joke was PC/RT 4mbit T/R server would have higher throughput than RS/6000 16mbit T/R server) ... PS2 microchannel 16mbit T/R card design point was something like 300 dumb terminal stations sharing single LAN.

The new IBM Almaden research bldg had been heavily provisioned with IBM wiring, but they found a $69 10mbit ethernet card had higher throughput than the $800 16mbit T/R card (same IBM wiring) ... and 10mbit ethernet LAN also had higher aggregate throughput and lower latency. For the price difference for 300 stations, could get several high-performance TCP/IP routers with channel interfaces, dozen or more ethernet interfaces along with FDDI and telco T1 & T3 options.

1988 ACM SIGCOMM had article analyzing 30 station ethernet getting aggregate 8.5mbit throughput, dropping to effective 8mbit throughput when all device drivers were put in low level loop constantly transmitting minimum size packets.

posts about communication group dumb terminal strategies

https://www.garlic.com/~lynn/subnetwork.html#terminal

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

recent posts mentioning token-ring:

https://www.garlic.com/~lynn/2025b.html#2 Why VAX Was the Ultimate CISC and Not RISC

https://www.garlic.com/~lynn/2025.html#106 Giant Steps for IBM?

https://www.garlic.com/~lynn/2025.html#97 IBM Token-Ring

https://www.garlic.com/~lynn/2025.html#96 IBM Token-Ring

https://www.garlic.com/~lynn/2025.html#95 IBM Token-Ring

https://www.garlic.com/~lynn/2025.html#23 IBM NY Buildings

https://www.garlic.com/~lynn/2024g.html#101 IBM Token-Ring versus Ethernet

https://www.garlic.com/~lynn/2024g.html#53 IBM RS/6000

https://www.garlic.com/~lynn/2024g.html#18 PS2 Microchannel

https://www.garlic.com/~lynn/2024f.html#42 IBM/PC

https://www.garlic.com/~lynn/2024f.html#39 IBM 801/RISC, PC/RT, AS/400

https://www.garlic.com/~lynn/2024f.html#27 The Fall Of OS/2

https://www.garlic.com/~lynn/2024f.html#6 IBM (Empty) Suits

https://www.garlic.com/~lynn/2024e.html#138 IBM - Making The World Work Better

https://www.garlic.com/~lynn/2024e.html#102 Rise and Fall IBM/PC

https://www.garlic.com/~lynn/2024e.html#81 IBM/PC

https://www.garlic.com/~lynn/2024e.html#71 The IBM Way by Buck Rogers

https://www.garlic.com/~lynn/2024e.html#64 RS/6000, PowerPC, AS/400

https://www.garlic.com/~lynn/2024e.html#56 IBM SAA and Somers

https://www.garlic.com/~lynn/2024e.html#52 IBM Token-Ring, Ethernet, FCS

https://www.garlic.com/~lynn/2024d.html#30 Future System and S/38

https://www.garlic.com/~lynn/2024d.html#7 TCP/IP Protocol

https://www.garlic.com/~lynn/2024c.html#69 IBM Token-Ring

https://www.garlic.com/~lynn/2024c.html#57 IBM Mainframe, TCP/IP, Token-ring, Ethernet

https://www.garlic.com/~lynn/2024c.html#56 Token-Ring Again

https://www.garlic.com/~lynn/2024c.html#47 IBM Mainframe LAN Support

https://www.garlic.com/~lynn/2024c.html#33 Old adage "Nobody ever got fired for buying IBM"

https://www.garlic.com/~lynn/2024b.html#50 IBM Token-Ring

https://www.garlic.com/~lynn/2024b.html#47 OS2

https://www.garlic.com/~lynn/2024b.html#41 Vintage Mainframe

https://www.garlic.com/~lynn/2024b.html#22 HA/CMP

https://www.garlic.com/~lynn/2024b.html#0 Assembler language and code optimization

https://www.garlic.com/~lynn/2024.html#117 IBM Downfall

https://www.garlic.com/~lynn/2024.html#68 IBM 3270

https://www.garlic.com/~lynn/2024.html#41 RS/6000 Mainframe

https://www.garlic.com/~lynn/2024.html#37 RS/6000 Mainframe

https://www.garlic.com/~lynn/2024.html#5 How IBM Stumbled onto RISC

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Token-Ring Date: 08 Mar, 2025 Blog: Facebook

re:

other trivia: In early 80s, I got HSDT, T1 and faster computer links (both terrestrial and satellite) with lots of conflict with SNA/VTAM org (note in the 60s, IBM had 2701 controller that supported T1 links, but the transition to SNA/VTAM in the 70s and associated issues appeared to cap all controllers at 56kbit/sec links).

2nd half of 80s, I was on Greg Chesson's XTP TAB and there were some gov. operations involved ... so we took XTP "HSP" to ISO chartered ANSI X3S3.3 for standardization ... eventually being told that ISO only did network standards work on things that corresponded to OSI ... and "HSP" didn't because 1) was internetworking ... not in OSI sitting between layer 3&4 (network & transport), 2) bypassed layer 3/4 interface and 3) went directly to MAC LAN interface also not in OSI, sitting in middle of layer3. There was a joke that while IETF required two interoperable implementations for standards progression, ISO didn't even require a standard to be implementable.

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

XTP/HSP posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

NSFNET posts

https://www.garlic.com/~lynn/subnetwork.html#nsfnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 3880, 3380, Data-streaming Date: 08 Mar, 2025 Blog: Facebook

when I transfer to San Jose Research in 2nd half of 70s, was allowed to wander around IBM (& non-IBM) datacenters in silicon valley, including disk bldg14/engineering & bldg15/product test across the street. They were running 7x24, prescheduled, stand-alone testing and mentioned they had recently tried MVS for concurrent testing, but it had 15min MTBF (requiring manual re-ipl) in that environment. I offered to rewrite I/O supervisor to make it bullet proof and never fail so they could do any amount of on-demand, concurrent testing, greatly improving productivity. Downside: they started calling me anytime they had a problem and I had to increasingly spend time playing disk engineer.

Bldg15 got early engineering systems for I/O product testing, including 1st engineering 3033 outside POK processor development flr. Testing was only taking a couple percent of 3033 CPU, so we scrounge up 3830 and 3330 string and setup our own private online service. One morning I get a call asking what I had done over the weekend to completely destroy online response and throughput. I said nothing, and asked what had they done. They say nothing, but eventually find out somebody had replaced the 3830 with early 3880. Problem was the 3880 had replaced the really fast 3830 horizontal microcode processor with a really slow vertical microcode processor (the only way it could handle 3mbyte/sec transfer was when switched to data streaming protocol channels; instead of end-to-end handshake for every byte transferred, it transferred multiple bytes per end-to-end handshake). There was then something like six months of microcode hacks to try to do a better masking of how slow 3880 actually was.
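The difference between a handshake per byte and data streaming can be illustrated by counting end-to-end handshakes for a transfer. This is only a toy count, not a model of the actual channel protocol; the `bytes_per_handshake` value used for data streaming is a made-up parameter, since the number of bytes moved per handshake isn't given here.

```python
import math


def handshakes(total_bytes, bytes_per_handshake=1):
    """Count end-to-end handshakes needed to move total_bytes.

    bytes_per_handshake=1 corresponds to a handshake for every byte;
    larger values stand in for data streaming (hypothetical values).
    """
    if total_bytes < 0 or bytes_per_handshake < 1:
        raise ValueError("invalid transfer parameters")
    return math.ceil(total_bytes / bytes_per_handshake)


if __name__ == "__main__":
    block = 4096  # one 4Kbyte block, chosen only as an example size
    print(handshakes(block))      # one handshake per byte
    print(handshakes(block, 8))   # assumed 8 bytes per handshake
```

Each handshake costs a cable round trip, so fewer handshakes per block means the transfer rate is less bound by cable length and controller response time.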

Then 3090 was going to have all data streaming channels and initially figured that 3880 was just like 3830 but with data streaming 3mbyte/sec transfer and configured number of channels based on that assumption to meet target system throughput. When they found out how bad channel busy (increase) was (unable to totally mask), they realized they would have to significantly increase the number of channels (which required extra TCM, they semi-facetiously claimed they would bill the 3880 group for the extra 3090 manufacturing cost).

Bldg15 also gets early engineering 4341 in 1978 ... and with some tweaking of the 4341 integrated channels, it was fast enough to handle 3380 3mbyte/sec data streaming testing (the 303x channel directors were slow 158 engines with just the 158 integrated channel microcode and no 370 microcode). To otherwise allow 3380 3mbyte/sec to be attached to 370 block-mux 1.5mbyte/sec channels, the 3880 "Calypso" speed matching & ECKD channel programs were created.

Other trivia: people doing air bearing thin-film head simulation were

only getting a couple turn arounds/month on the SJR 370/195. We set

things up on the bldg15 3033 where they could get multiple turn

arounds/day (even though 3033 was not quite half the MIPs of the 195).

https://www.computerhistory.org/storageengine/thin-film-heads-introduced-for-large-disks/

https://en.wikipedia.org/wiki/History_of_IBM_magnetic_disk_drives#IBM_3370

trivia: there haven't been any CKD DASD made for decades, all being simulated on industry standard fixed-block devices (dating back to 3375 on 3370 and can be seen in 3380 records/track formulas where record size is rounded up to 3380 fixed cell size).

posts getting to play disk engineering in 14&15:

https://www.garlic.com/~lynn/subtopic.html#disk

DASD, CKD, FBA, multi-track search posts

https://www.garlic.com/~lynn/submain.html#dasd

posts mentioning Calypso and ECKD

https://www.garlic.com/~lynn/2024g.html#3 IBM CKD DASD

https://www.garlic.com/~lynn/2024c.html#74 Mainframe and Blade Servers

https://www.garlic.com/~lynn/2023d.html#117 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#111 3380 Capacity compared to 1TB micro-SD

https://www.garlic.com/~lynn/2023d.html#105 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023c.html#103 IBM Term "DASD"

https://www.garlic.com/~lynn/2018.html#81 CKD details

https://www.garlic.com/~lynn/2015g.html#15 Formal definition of Speed Matching Buffer

https://www.garlic.com/~lynn/2015f.html#89 Formal definition of Speed Matching Buffer

https://www.garlic.com/~lynn/2015f.html#86 Formal definition of Speed Matching Buffer

https://www.garlic.com/~lynn/2012o.html#64 Random thoughts: Low power, High performance

https://www.garlic.com/~lynn/2012j.html#12 Can anybody give me a clear idea about Cloud Computing in MAINFRAME ?

https://www.garlic.com/~lynn/2011e.html#35 junking CKD; was "Social Security Confronts IT Obsolescence"

https://www.garlic.com/~lynn/2010h.html#30 45 years of Mainframe

https://www.garlic.com/~lynn/2010e.html#36 What was old is new again (water chilled)

https://www.garlic.com/~lynn/2009p.html#11 Secret Service plans IT reboot

https://www.garlic.com/~lynn/2007e.html#40 FBA rant

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Learson Tries To Save Watson IBM Date: 08 Mar, 2025 Blog: Facebook

Learson tried (& failed) to block the bureaucrats, careerists, and MBAs from destroying Watson culture&legacy, pg160-163, 30yrs of management briefings 1958-1988

20 yrs later, IBM has one of the largest losses in the history of US

companies and was being reorged into the 13 baby blues in

preparation for breaking up the company (take-off on "baby bell"

breakup a decade earlier).

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but get a call from the bowels of Armonk

asking if we could help with the breakup. Before we get started, the

board brings in the former AMEX president as CEO to try and save the

company, who (somewhat) reverses the breakup

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

former AMEX president posts

https://www.garlic.com/~lynn/submisc.html#gerstner

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Token-Ring Date: 09 Mar, 2025 Blog: Facebook

re:

In the 60s, there were a couple commercial online CP67-based spin-offs

of the science center, also the science center network morphs into the

corporate internal network and technology also used for the corporate

sponsored univ. BITNET.

https://en.wikipedia.org/wiki/BITNET

Quote from one of the 1969 inventors of "GML" at the Science Center

https://web.archive.org/web/20230402212558/http://www.sgmlsource.com/history/jasis.htm

Actually, the law office application was the original motivation for

the project, something I was allowed to do part-time because of my

knowledge of the user requirements. My real job was to encourage the

staffs of the various scientific centers to make use of the

CP-67-based Wide Area Network that was centered in Cambridge.

... snip ...

Science-Center/corporate network was larger than ARPANET/Internet from

just about the beginning until sometime mid/late 80s (about the time

it was forced to move to SNA/VTAM). At the 1Jan1983 morph of ARPANET

to internetworking, there were approx 100 IMPs and 255 hosts ... at a

time the internal network was rapidly approaching 1000. I've

periodically commented that ARPANET was somewhat limited by

requirement for IMPs and associated approvals. Somewhat equivalent for

the corporate network was requirement that all links be encrypted and

various gov. resistance especially when links crossed national

boundaries. Old archive post with list of corporate locations that

added one or more nodes during 1983:

https://www.garlic.com/~lynn/2006k.html#8

After decision was made to add virtual memory to all IBM 370s, CP67

morphs into VM370 ... and TYMSHARE is providing commercial online

VM370 services

https://en.wikipedia.org/wiki/Tymshare

and in Aug1976 started offering its CMS-based online computer

conferencing for free to the (user group) SHARE

https://en.wikipedia.org/wiki/SHARE_(computing)

as "VMSHARE" ... archives here

http://vm.marist.edu/~vmshare

accessed via Tymnet:

https://en.wikipedia.org/wiki/Tymnet

after M/D buys TYMSHARE in the early 80s and discontinues some number

of things, VMSHARE service is moved to a univ. computer.

co-worker at science center responsible for early CP67-based wide-area

network and early days of the corporate internal network through much

of the 70s

https://en.wikipedia.org/wiki/Edson_Hendricks

Trivia: a decade after "GML" was invented, it morphs into ISO standard

"SGML", and after another decade morphs into "HTML" at CERN; first

webserver in the US is at CERN-sister institution, Stanford SLAC on

their VM370 system:

https://ahro.slac.stanford.edu/wwwslac-exhibit

https://ahro.slac.stanford.edu/wwwslac-exhibit/early-web-chronology-and-documents-1991-1994

science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

commercial, online virtual machine based services

https://www.garlic.com/~lynn/submain.html#online

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

GML, SGML, HTML, etc posts

https://www.garlic.com/~lynn/submain.html#sgml

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Token-Ring Date: 10 Mar, 2025 Blog: Facebook

re:

also on the OSI subject:

OSI: The Internet That Wasn't. How TCP/IP eclipsed the Open Systems

Interconnection standards to become the global protocol for computer

networking

https://spectrum.ieee.org/osi-the-internet-that-wasnt

Meanwhile, IBM representatives, led by the company's capable director

of standards, Joseph De Blasi, masterfully steered the discussion,

keeping OSI's development in line with IBM's own business interests.

Computer scientist John Day, who designed protocols for the ARPANET,

was a key member of the U.S. delegation. In his 2008 book Patterns in

Network Architecture(Prentice Hall), Day recalled that IBM

representatives expertly intervened in disputes between delegates

"fighting over who would get a piece of the pie.... IBM played them

like a violin. It was truly magical to watch."

... snip ...

On the 60s 2701 T1 subject, IBM FSD (Federal Systems Division) had some number of gov. customers with 2701s that were failing in the 80s and came up with (special bid) "T1 Zirpel" card for the IBM Series/1.

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

XTP/HSP posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

some posts mentioning "OSI: The Internet That Wasn't"

https://www.garlic.com/~lynn/2025.html#33 IBM ATM Protocol?

https://www.garlic.com/~lynn/2025.html#13 IBM APPN

https://www.garlic.com/~lynn/2024b.html#113 EBCDIC

https://www.garlic.com/~lynn/2024b.html#99 OSI: The Internet That Wasn't

https://www.garlic.com/~lynn/2013j.html#65 OSI: The Internet That Wasn't

https://www.garlic.com/~lynn/2013j.html#64 OSI: The Internet That Wasn't

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM VM/CMS Mainframe Date: 10 Mar, 2025 Blog: Facebook

Predated VM370, originally 60s CP67 wide-area science center network (RSCS/VNET) .... comment by one of the cambridge science center inventors of GML in 1969 ...

It then morphs into the corporate internal network (larger than

arpanet/internet from just about the beginning until sometime mid/late

80s, about the time internal network was forced to convert to

SNA/VTAM) ... technology also used for the corporate sponsored univ

BITNET

https://en.wikipedia.org/wiki/BITNET

when decision was made to add virtual memory to all 370s, then CP67

morphs into VM370.

Co-worker at science center responsible for RSCS/VNET

https://en.wikipedia.org/wiki/Edson_Hendricks

RSCS/VNET used CP internal synchronous "diagnose" interface to spool file system transferring 4Kbyte blocks ... on large loaded system, spool file contention could limit it to 6-8 4k blocks/sec ... or 24k-32k bytes/sec (240k-320k bits/sec). I got HSDT in early 80s, with T1 and faster computer links (and lots of battles with communication group, aka 60s IBM had 2701 controller supporting T1 links, but 70s transition to SNA/VTAM, issues capped controllers at 56kbit/sec links) .... supporting T1 links needed 3mbits/sec (300kbytes/sec) for each RSCS/VNET full-duplex T1. I did a rewrite of CP spool file system in VS/Pascal running in a virtual memory, supporting asynchronous interface, contiguous allocation, write-behind, and read-ahead, able to provide RSCS/VNET with multi-mbyte/sec throughput.
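The gap between the spool interface and a T1 link can be worked through as rough arithmetic. The sketch below assumes a rule of thumb of 10 bits per byte, which is what the numbers in the text imply (24k bytes given as 240k bits), and the rounded 1.5mbit/sec per T1 direction (nominal T1 is 1.544mbit/sec). The names are illustrative only.

```python
BLOCK_BYTES = 4096           # spool blocks are 4Kbytes
BITS_PER_BYTE_RULE = 10      # assumption: rule of thumb implied by the text
T1_BITS_PER_SEC = 1_500_000  # rounded; nominal T1 is 1.544 Mbit/s


def spool_bytes_per_sec(blocks_per_sec):
    """Bytes/sec through the synchronous spool interface."""
    return blocks_per_sec * BLOCK_BYTES


def full_duplex_bytes_per_sec(link_bits_per_sec):
    """Bytes/sec needed to keep both directions of a link busy."""
    return 2 * link_bits_per_sec / BITS_PER_BYTE_RULE


if __name__ == "__main__":
    low, high = spool_bytes_per_sec(6), spool_bytes_per_sec(8)
    need = full_duplex_bytes_per_sec(T1_BITS_PER_SEC)
    print(f"spool limit: {low}-{high} bytes/sec")
    print(f"full-duplex T1 needs: {need:.0f} bytes/sec")
    print(f"shortfall factor at best case: {need / high:.1f}x")
```

Even at the best case of 8 blocks/sec, the synchronous interface falls short of a single full-duplex T1 by roughly an order of magnitude.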

Also, releasing internal mainframe TCP/IP (implemented in VS/Pascal) was being blocked by the communication group. When that eventually is overturned, they changed their strategy ... because the communication group had corporate strategic ownership of everything that crossed datacenter walls, it had to be released through them. What shipped got aggregate 44kbytes/sec using nearly whole 3090 CPU. I do RFC1044 support and in some tuning tests at Cray Research between Cray and 4341, got sustained 4341 channel throughput using only modest amount of 4341 processor (something like 500 times throughput in bytes moved per instruction executed). Later in the 90s, communication group hired a silicon valley contractor to implement TCP/IP support directly in VTAM. What he demo'ed had TCP running much faster than LU6.2. He was then told that everybody knows that a "proper" TCP/IP implementation is much slower than LU6.2 and they would only be paying for a "proper" implementation.

trivia: The Pisa Science Center had done "SPM" for CP67 (inter virtual machine protocol, a superset of later VM370 VMCF, IUCV and SMSG combination) which was ported to internal VM370 ... and was also supported by the product RSCS/VNET (even though "SPM" never shipped to customers). Late 70s, there was multi-user spacewar client/server game done using "SPM" between CMS 3270 users and the server ... and since RSCS/VNET supported the protocol, users didn't have to be on same system as the server. An early problem was people started doing robot players beating human players (and server was modified to increase power use non-linearly as interval between user moves dropped below human threshold).

some VM (customer/product) history at Melinda's site

https://www.leeandmelindavarian.com/Melinda#VMHist

trivia, most of JES2 network came from HASP that had "TUCC" in cols68-71 of the source, problem was it defined network nodes in unused entries in the 255 pseudo spool device table ... typically limit of 160-180 definitions ... and somewhat intermixed network fields with job control fields in the header. RSCS/VNET had clean layered implementation so was able to do a JES2 emulation driver w/o much trouble. However the internal corporate network had quickly/early passed 256 nodes and JES2 would trash traffic where origin or destination wasn't in local table ... so JES2 systems typically had to be restricted to boundary nodes behind protective RSCS/VNET nodes. Also because of intermixing of fields, traffic between JES2 systems at different release levels could crash the destination MVS system. As a result a large body of RSCS/VNET JES2 emulation driver code grew up that understood different origin and destination JES2 formats and adjusted fields for the directly connected JES2 destination.
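The node-table limit can be illustrated with a toy model. This is not JES2 code; the class, method names and the figure of 85 slots taken by pseudo spool devices are all hypothetical, chosen only so the remaining capacity (170) falls in the 160-180 range mentioned above.

```python
TABLE_SLOTS = 255  # size of the pseudo spool device table


class NodeTable:
    """Toy model: network nodes share a fixed table with spool devices."""

    def __init__(self, slots_used_by_devices):
        if not 0 <= slots_used_by_devices <= TABLE_SLOTS:
            raise ValueError("device slots out of range")
        self.capacity = TABLE_SLOTS - slots_used_by_devices
        self.nodes = set()

    def define(self, name):
        """Define a node if a free slot remains; report success."""
        if name in self.nodes:
            return True
        if len(self.nodes) >= self.capacity:
            return False
        self.nodes.add(name)
        return True

    def accepts(self, origin, destination):
        """Traffic is kept only if both ends are in the local table."""
        return origin in self.nodes and destination in self.nodes


if __name__ == "__main__":
    table = NodeTable(slots_used_by_devices=85)  # hypothetical split
    defined = sum(table.define(f"NODE{i}") for i in range(300))
    print(defined, "of 300 nodes defined")
    print(table.accepts("NODE0", "NODE200"))  # unknown destination is dropped
```

With more nodes in the network than free table entries, some traffic always has an unknown origin or destination, which is why such systems were kept at the boundary.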

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

internet posts

https://www.garlic.com/~lynn/subnetwork.html#internet

RFC1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

HASP, ASP, JES2, JES3, NJE, NJI posts

https://www.garlic.com/~lynn/submain.html#hasp

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM VM/CMS Mainframe Date: 10 Mar, 2025 Blog: Facebook

re:

Aka ... some of the MIT CTSS/7094 people went to the 5th flr for MULTICS and others went to the IBM science center on 4th flr and did virtual machines (initially CP40 on 360/40 with virtual memory hardware mods, morphs into CP67 when 360/67 standard with virtual memory became available), internal network, lots of online apps, inventing "GML" in 1969, etc. When decision was made to add virtual memory to all 370s, some of the people split off from CSC and take-over the IBM Boston Programming Center on the 3rd flr for VM370 (and cambridge monitor system becomes conversational monitor system).

I had taken two credit hr intro to fortran/computers and at the end of

semester was hired to do some 360 assembler on 360/30. The univ was

getting 360/67 for tss/360 replacing 709/1401; got a 360/30 temporary

replacing 1401 until 360/67 arrives (univ shutdown datacenter on

weekends and I had it all dedicated, but 48hrs w/o sleep made monday

classes hard). Within a year of taking intro class, the 360/67 comes

in and I was hired fulltime responsible for os/360 (tss/360 didn't

make it to production) and I still had the whole datacenter dedicated

weekends. CSC comes out to install CP67 (3rd after CSC itself and MIT

Lincoln Labs) and I rewrite large amounts of CP67 code ... as well as

adding TTY/ASCII terminal support (all picked up and shipped by

CSC). Tale of CP67 across tech sq quad at MIT Urban lab ... my

TTY/ASCII had max line length of 80chars ... they do a quick hack for

1200 chars (new ASCII device down at harvard) but don't catch all

dependencies ... and CP67 crashes 27 times in single day.

https://www.multicians.org/thvv/360-67.html

other history by Melinda

https://www.leeandmelindavarian.com/Melinda#VMHist

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

posts mentioning Urban lab and 27 crashes

https://www.garlic.com/~lynn/2024g.html#92 CP/67 Multics vs Unix

https://www.garlic.com/~lynn/2024c.html#16 CTSS, Multicis, CP67/CMS

https://www.garlic.com/~lynn/2024.html#100 Multicians

https://www.garlic.com/~lynn/2023f.html#66 Vintage TSS/360

https://www.garlic.com/~lynn/2023f.html#61 The Most Important Computer You've Never Heard Of

https://www.garlic.com/~lynn/2022c.html#42 Why did Dennis Ritchie write that UNIX was a modern implementation of CTSS?

https://www.garlic.com/~lynn/2022.html#127 On why it's CR+LF and not LF+CR [ASR33]

https://www.garlic.com/~lynn/2016e.html#78 Honeywell 200

https://www.garlic.com/~lynn/2015c.html#57 The Stack Depth

https://www.garlic.com/~lynn/2013c.html#30 What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2010c.html#40 PC history, was search engine history, was Happy DEC-10 Day

https://www.garlic.com/~lynn/2006c.html#28 Mount DASD as read-only

https://www.garlic.com/~lynn/2004j.html#47 Vintage computers are better than modern crap !

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM VM/CMS Mainframe Date: 11 Mar, 2025 Blog: Facebookre:

In the 60s, IBM had the 2701 that supported T1; then in the 70s, with the move to SNA/VTAM, issues capped the controllers at 56kbits. I got the HSDT project in the early 80s, T1 and faster computer links (both terrestrial and satellite) and lots of conflict with the communication group. Mid-80s, the communication group prepared a report for the corporate executive committee that customers wouldn't be needing T1 before sometime in the 90s. What they had done was a survey of 37x5 "fat pipes", multiple parallel 56kbit links treated as a single logical link ... declining numbers of customers from 2-5 parallel links, dropping to zero by 6 or 7. What they didn't know (or didn't want to tell the corporate executive committee) was that the typical telco tariff for T1 was about the same as six or seven 56kbit links. A trivial HSDT survey found 200 customers with T1 links that had just moved to non-communication group hardware & software (mostly non-IBM, but for gov. customers with failing 2701s, FSD had Zirpel T1 cards for Series/1s).
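The tariff arithmetic behind the survey gap can be sketched in a few lines. This is only an illustration: the 1.544mbit T1 line rate is the standard figure (not from the text above), the break-even of 6.5 links is an assumed midpoint of the "six or seven" quoted above, and the function names are made up for the example.

```python
# Rough arithmetic for the "fat pipe" survey gap (illustrative only).
T1_KBITS = 1544          # standard T1 line rate, kbit/sec (not from the text)
LINK_KBITS = 56          # one 56kbit link
T1_COST_IN_LINKS = 6.5   # ASSUMPTION: midpoint of "six or seven 56kbit links"


def fat_pipe_kbits(n_links: int) -> int:
    """Aggregate raw capacity of n parallel 56kbit links."""
    return n_links * LINK_KBITS


def cheaper_option(n_links: int) -> str:
    """Which tariff is lower for a customer who would need n parallel links."""
    return "fat pipe" if n_links < T1_COST_IN_LINKS else "T1"


if __name__ == "__main__":
    for n in range(2, 9):
        print(n, fat_pipe_kbits(n), cheaper_option(n))
    # How many 56kbit links of raw capacity one T1 represents.
    print(round(T1_KBITS / LINK_KBITS, 1))
```

Under those assumptions, a customer at six or seven links is paying T1 prices for a fraction of T1 capacity, which is consistent with the survey seeing no fat pipes that large.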

Later in the 80s, the communication group had the 3737 that ran a T1 link: a whole boatload of Motorola 68k processors and memories, with a mini-VTAM emulation simulating CTCA to the real local host VTAM. The 3737 would immediately reflect an ACK to the local host (to keep transmission flowing) before transmitting traffic to the remote 3737, which reversed the process at the remote end to the remote host. The trouble was that host VTAM would hit max outstanding transmission long before ACKs started coming back. Even with short-haul, terrestrial T1, the latency for returning ACKs resulted in VTAM only being able to use a trivial amount of the T1. HSDT had early gone to dynamic adaptive rate-based pacing, easily adapting to much higher transmission rates than T1, including much longer latency satellite links (and gbit terrestrial cross-country links).
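The window-versus-latency effect described above can be shown with a minimal sketch. The window size and round-trip times below are hypothetical numbers chosen only for illustration (they are not VTAM's actual limits), and the pacing function is a generic stand-in, not HSDT's actual algorithm.

```python
# Window-limited sending vs. rate-based pacing (illustrative sketch).
T1_BYTES_PER_SEC = 1_544_000 / 8   # raw T1 line rate in bytes/sec


def window_limited_bytes_per_sec(link_bytes_per_sec: float,
                                 window_bytes: float,
                                 rtt_sec: float) -> float:
    """Throughput when the sender stalls after window_bytes until an ACK returns."""
    return min(link_bytes_per_sec, window_bytes / rtt_sec)


def pacing_interval_sec(packet_bytes: float, target_bytes_per_sec: float) -> float:
    """Rate-based pacing: gap between packet sends, independent of ACK latency."""
    return packet_bytes / target_bytes_per_sec


if __name__ == "__main__":
    WINDOW = 2048  # HYPOTHETICAL max outstanding bytes
    for label, rtt in (("terrestrial", 0.1), ("satellite", 0.6)):  # HYPOTHETICAL RTTs
        rate = window_limited_bytes_per_sec(T1_BYTES_PER_SEC, WINDOW, rtt)
        print(label, round(rate), f"{rate / T1_BYTES_PER_SEC:.1%} of T1")
    # A paced sender just schedules sends at the target rate.
    print(pacing_interval_sec(1024, T1_BYTES_PER_SEC))
```

With a fixed window, throughput falls as round-trip latency grows; with pacing, the send schedule depends only on the target rate, which is why it carries over to satellite and faster links.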

Trivia: 1988, IBM branch office asks me if I could help LLNL standardize some serial stuff they were working with, which quickly becomes the fibre-channel standard ("FCS", including some stuff I had done in 1980; initially 1gbit/sec, full-duplex, 200mbyte/sec aggregate). Eventually IBM releases their serial channel with ES/9000 as ESCON (when it is already obsolete, 17mbytes/sec). Then some POK engineers become involved with FCS and define a protocol that radically limits throughput, eventually released as FICON. The most recent public benchmark I've found was z196 "Peak I/O" getting 2M IOPS using 104 FICONs (20K IOPS/FICON). At the same time there was a (native) FCS announced for E5-2600 server blades claiming over a million IOPS (two such FCS having higher throughput than 104 FICONs).

Even if SNA was saturating T1, it would be about 150kbytes/sec (late 80s w/o 3737 spoofing host VTAM, lucky to be 10kbytes/sec) ... HSDT saturating cross-country 80s native 1gbit FCS would be 100mbytes/sec ... IBM 3380 at 3mbyte/sec ... would need a 33-drive 3380 disk RAID at both ends. Native FCS 3590 tape was 42mbyte/sec (with 3:1 compression).
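A back-of-envelope check of the figures quoted above, as a small sketch. All rates except the 1.544mbit T1 line rate (the standard figure, an addition here) are taken from the text; the function names are made up for the example.

```python
# Back-of-envelope check of the throughput figures quoted above.
T1_BITS_PER_SEC = 1_544_000   # standard T1 line rate (not from the text)
SNA_T1_KBYTES = 150           # text: SNA saturating a T1
SNA_ACTUAL_KBYTES = 10        # text: late 80s, w/o 3737 spoofing
FCS_MBYTES = 100              # text: native 1gbit FCS
DISK_3380_MBYTES = 3          # text: IBM 3380 transfer rate


def raw_t1_kbytes_per_sec() -> float:
    """Raw T1 rate in kbytes/sec, before any framing or protocol overhead."""
    return T1_BITS_PER_SEC / 8 / 1000


def drives_to_match(link_mbytes: float, drive_mbytes: float) -> float:
    """How many drives streaming in parallel it takes to keep a link busy."""
    return link_mbytes / drive_mbytes


if __name__ == "__main__":
    print(raw_t1_kbytes_per_sec())                        # raw T1, kbytes/sec
    print(drives_to_match(FCS_MBYTES, DISK_3380_MBYTES))  # roughly 33 drives
    print(FCS_MBYTES * 1000 / SNA_T1_KBYTES)              # FCS vs. saturated SNA T1
    print(FCS_MBYTES * 1000 / SNA_ACTUAL_KBYTES)          # FCS vs. actual late-80s SNA
```

The raw T1 rate comes out somewhat above the 150kbytes/sec in the text, which presumably allows for framing and protocol overhead.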

2005 TS1120, IBM & non-IBM, "native" data transfer up to 104mbytes/sec (up to 1.5tbytes at 3:1 compressed)

https://asset.fujifilm.com/www/us/files/2020-03/71d28509834324b81a79d77b21af8977/359X_Data_Tape_Seminar.pdf

Other trivia: the internal mainframe tcp/ip implementation was done in vs/pascal ... and mid-80s the communication group was blocking its release. When that got overturned, they changed their tactic and said that since they had corporate strategic responsibility for everything that crossed datacenter walls, it had to be released through them. What shipped got aggregate 44kbytes/sec using nearly a whole 3090 CPU. I then did the changes to support RFC1044 and in some tuning tests at Cray Research between a Cray and a 4341, got sustained 4341 channel throughput using only a modest amount of 4341 CPU (something like 500 times improvement in bytes moved per instruction executed).

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

FICON and FCS posts

https://www.garlic.com/~lynn/submisc.html#ficon

RFC1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

some old 3737 email:

https://www.garlic.com/~lynn/2011g.html#email880130

https://www.garlic.com/~lynn/2011g.html#email880606

https://www.garlic.com/~lynn/2018f.html#email880715

https://www.garlic.com/~lynn/2011g.html#email881005

some posts mentioning 3737:

https://www.garlic.com/~lynn/2025.html#35 IBM ATM Protocol?

https://www.garlic.com/~lynn/2024f.html#116 NASA Shuttle & SBS

https://www.garlic.com/~lynn/2024e.html#95 RFC33 New HOST-HOST Protocol

https://www.garlic.com/~lynn/2024e.html#91 When Did "Internet" Come Into Common Use

https://www.garlic.com/~lynn/2024d.html#7 TCP/IP Protocol

https://www.garlic.com/~lynn/2024c.html#44 IBM Mainframe LAN Support

https://www.garlic.com/~lynn/2024b.html#62 Vintage Series/1

https://www.garlic.com/~lynn/2023e.html#41 Systems Network Architecture

https://www.garlic.com/~lynn/2023d.html#120 Science Center, SCRIPT, GML, SGML, HTML, RSCS/VNET

https://www.garlic.com/~lynn/2023d.html#31 IBM 3278

https://www.garlic.com/~lynn/2023c.html#57 Conflicts with IBM Communication Group

https://www.garlic.com/~lynn/2022c.html#80 Peer-Coupled Shared Data

https://www.garlic.com/~lynn/2022c.html#14 IBM z16: Built to Build the Future of Your Business

https://www.garlic.com/~lynn/2021j.html#32 IBM Downturn

https://www.garlic.com/~lynn/2021j.html#31 IBM Downturn

https://www.garlic.com/~lynn/2021j.html#16 IBM SNA ARB

https://www.garlic.com/~lynn/2021h.html#49 Dynamic Adaptive Resource Management

https://www.garlic.com/~lynn/2021d.html#14 The Rise of the Internet

https://www.garlic.com/~lynn/2021c.html#97 What's Fortran?!?!

https://www.garlic.com/~lynn/2021c.html#83 IBM SNA/VTAM (& HSDT)

https://www.garlic.com/~lynn/2019d.html#117 IBM HONE

https://www.garlic.com/~lynn/2019c.html#35 Transition to cloud computing

https://www.garlic.com/~lynn/2019b.html#16 Tandem Memo

https://www.garlic.com/~lynn/2018f.html#110 IBM Token-RIng

https://www.garlic.com/~lynn/2018b.html#9 Important US technology companies sold to foreigners

https://www.garlic.com/~lynn/2017.html#57 TV Show "Hill Street Blues"

https://www.garlic.com/~lynn/2016b.html#82 Qbasic - lies about Medicare

https://www.garlic.com/~lynn/2015g.html#42 20 Things Incoming College Freshmen Will Never Understand

https://www.garlic.com/~lynn/2015e.html#31 Western Union envisioned internet functionality

https://www.garlic.com/~lynn/2015e.html#2 Western Union envisioned internet functionality

https://www.garlic.com/~lynn/2015d.html#47 Western Union envisioned internet functionality

https://www.garlic.com/~lynn/2014j.html#66 No Internet. No Microsoft Windows. No iPods. This Is What Tech Was Like In 1984

https://www.garlic.com/~lynn/2014e.html#7 Last Gasp for Hard Disk Drives

https://www.garlic.com/~lynn/2014b.html#46 Resistance to Java

https://www.garlic.com/~lynn/2013n.html#16 z/OS is antique WAS: Aging Sysprogs = Aging Farmers

https://www.garlic.com/~lynn/2013j.html#66 OSI: The Internet That Wasn't

https://www.garlic.com/~lynn/2012o.html#47 PC/mainframe browser(s) was Re: 360/20, was 1132 printerhistory

https://www.garlic.com/~lynn/2012m.html#24 Does the IBM System z Mainframe rely on Security by Obscurity or is it Secure by Design

https://www.garlic.com/~lynn/2012j.html#89 Gordon Crovitz: Who Really Invented the Internet?

https://www.garlic.com/~lynn/2012j.html#87 Gordon Crovitz: Who Really Invented the Internet?

https://www.garlic.com/~lynn/2012g.html#57 VM Workshop 2012

https://www.garlic.com/~lynn/2012f.html#92 How do you feel about the fact that India has more employees than US?

https://www.garlic.com/~lynn/2012d.html#20 Writing article on telework/telecommuting

https://www.garlic.com/~lynn/2011p.html#103 Has anyone successfully migrated off mainframes?

https://www.garlic.com/~lynn/2011g.html#77 Is the magic and romance killed by Windows (and Linux)?

https://www.garlic.com/~lynn/2011g.html#75 We list every company in the world that has a mainframe computer

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM VM/CMS Mainframe Date: 11 Mar, 2025 Blog: Facebookre:

Trivia: source code card sequence numbers were used by the source update system (CMS update program). As an undergraduate in the 60s, I was changing so much code that I created the "$" convention ... a preprocessor (to the update program) would generate the sequence numbers for new statements before passing a work/temp file to the update command. After joining the science center and the decision to add virtual memory to all 370s, a joint project with endicott was to 1) add virtual 370 machine support to CP67 (running on real 360/67) and 2) modify CP67 to run on virtual memory 370 ... which included implementing multi-level source update (originally done in EXEC, recursively applying source updates) ... it was running in a CP67 370 virtual machine for a year before the 1st engineering 370 (w/virtual memory) was operational (ipl'ing the 370 CP67 was used to help verify that machine).
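The idea of sequence-number-keyed updates applied level by level can be sketched roughly as follows. This is a toy model, not the CMS update program or its control-statement syntax: the tuple-based operations, the function names, and the numbering rule for new statements are all assumptions made for illustration, and the levels are applied in a loop rather than recursively.

```python
from typing import List, Tuple

Line = Tuple[int, str]  # (sequence number, statement text)


def number_new_lines(after_seq: int, next_seq: int, texts: List[str]) -> List[Line]:
    """Generate sequence numbers for new statements (the preprocessor's job)."""
    step = (next_seq - after_seq) // (len(texts) + 1)
    if step < 1:
        raise ValueError("no room between sequence numbers; resequence first")
    return [(after_seq + step * (i + 1), t) for i, t in enumerate(texts)]


def apply_update(source: List[Line], update: list) -> List[Line]:
    """Apply one update level; every operation addresses lines by sequence number."""
    result = list(source)
    for op in update:
        kind = op[0]
        if kind == "insert":            # ("insert", after_seq, [texts])
            _, after_seq, texts = op
            idx = next(i for i, (s, _) in enumerate(result) if s == after_seq)
            # Past the last line, leave an arbitrary gap of 1000 for numbering.
            next_seq = result[idx + 1][0] if idx + 1 < len(result) else after_seq + 1000
            result[idx + 1:idx + 1] = number_new_lines(after_seq, next_seq, texts)
        elif kind == "delete":          # ("delete", first_seq, last_seq)
            _, first, last = op
            result = [(s, t) for s, t in result if not first <= s <= last]
        else:
            raise ValueError(f"unknown op {kind!r}")
    return result


def apply_levels(source: List[Line], levels: List[list]) -> List[Line]:
    """Multi-level update: each level is applied to the output of the previous one."""
    for update in levels:
        source = apply_update(source, update)
    return source


base = [(1000, "L R1,A"), (2000, "A R1,B"), (3000, "ST R1,C")]
level1 = [("insert", 1000, ["LR R2,R1"])]
level2 = [("delete", 2000, 2000), ("insert", 1500, ["SR R1,R2"])]

if __name__ == "__main__":
    for seq, text in apply_levels(base, [level1, level2]):
        print(f"{text:<20}{seq:08d}")
```

Note that the second level addresses a sequence number that only exists because the first level generated it, which is why new statements need stable sequence numbers before the next level can be written against them.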

trivia: mid-80s, got a request from Melinda

https://www.leeandmelindavarian.com/Melinda#VMHist

asking for a copy of the original multi-level source update done in exec. I had triple-redundant tapes of archived files from the 60s&70s ... and was able to pull it off an archive tape. It was fortunate because not long later, Almaden Research had an operational problem mounting random tapes as scratch and I lost nearly a dozen tapes ... including all three replicated tapes with the 60s&70s archive.