From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM Numeric Intensive
Date: 25 Sept, 2024
Blog: Facebook

After the Future System implosion, I got sucked into helping with a 16-processor multiprocessor and we con'ed the 3033 processor engineers into working on it in their spare time (a lot more interesting than remapping 168 logic to 20% faster chips). Everybody thought it was great until somebody told the head of POK that it could be decades before the POK favorite son operating system (MVS) had (effective) 16-way support (at the time, MVS documentation said that MVS 2-processor support only had 1.2-1.5 times the throughput of a single processor). Then some of us were invited to never visit POK again and the 3033 processor engineers were told to keep heads down on 3033 and stop being distracted. POK doesn't ship a 16-processor machine until after the turn of the century. Once the 3033 was out the door, the processor engineers start on trout/3090. Later the processor engineers had improved 3090 scalar floating point processing so it ran as fast as memory (and complained vector was purely marketing since it would be limited by memory throughput).

A decade after 16-processor project, Nick Donofrio approved our HA/6000 project, originally for NYTimes to move their newspaper system (ATEX) off DEC VAXCluster to RS/6000. I rename it HA/CMP when I start doing numeric/scientific cluster scale-up with the national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Informix, and Ingres that had RDBMS VAXCluster support in the same source base with Unix).

IBM had been marketing a fault tolerant system as S/88 and the S/88 product administrator started taking us around to their customers ... and also got me to write a section for the corporate strategic continuous availability document (section got pulled when both Rochester/AS400 and POK/mainframe complained that they couldn't meet the requirements). Early Jan1992, in meeting with Oracle CEO, AWD/Hester told Ellison that we would have 16-system clusters by mid-92 and 128-system clusters by ye92 ... however by end of Jan1992, cluster scale-up had been transferred for announce as IBM Supercomputer and we were told we couldn't work on anything with more than four processors (we leave IBM a few months later). Complaints from the other IBM groups likely contributed to the decision.

(benchmarks are number of program iterations compared to reference platform, not actual instruction count)

1993: eight processor ES/9000-982 : 408MIPS, 51MIPS/processor

1993: RS6000/990 : 126MIPS; 16-system: 2,016MIPS, 128-system: 16,128MIPS

trivia: in the later half of the 90s, the i86 processor chip vendors do a hardware layer that translates i86 instructions into RISC micro-ops for execution.

1999: single IBM PowerPC 440 hits 1,000MIPS

1999: single Pentium3 (translation to RISC micro-ops for execution) hits 2,054MIPS (twice PowerPC 440)

2003: single Pentium4 processor 9.7BIPS (9,700MIPS)

2010: E5-2600 XEON server blade, two chip, 16 processor, aggregate 500BIPS (31BIPS/processor)

The 2010-era mainframe was 80 processor z196 rated at 50BIPS aggregate (625MIPS/processor), 1/10th XEON server blade
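As a rough check on the per-processor and cluster figures above, here is a minimal Python sketch. It uses only the ratings quoted in the post; the function names are made up for illustration, and the cluster numbers are plain linear multiples that assume no scale-up overhead.

```python
# Ratings quoted in the post (benchmark MIPS, not actual instruction counts).
ES9000_982_MIPS = 408      # eight-processor ES/9000-982, 1993
ES9000_982_CPUS = 8
RS6000_990_MIPS = 126      # single RS6000/990, 1993


def per_processor(aggregate, processors):
    """Aggregate rating divided evenly across processors."""
    return aggregate / processors


def cluster_aggregate(per_system, systems):
    """Linear scale-up; assumes no clustering overhead (an idealization)."""
    return per_system * systems


print(per_processor(ES9000_982_MIPS, ES9000_982_CPUS))  # 51.0
print(cluster_aggregate(RS6000_990_MIPS, 16))           # 2016
print(cluster_aggregate(RS6000_990_MIPS, 128))          # 16128
print(per_processor(50_000, 80))                        # 625.0 (z196, in MIPS)
print(50 / 500)                                         # 0.1 (z196 vs. XEON blade, in BIPS)
```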

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

SMP, tightly-coupled, shared memory multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

continuous availability, disaster survivability, geographic survivability posts

https://www.garlic.com/~lynn/submain.html#available

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM (Empty) Suits
Date: 25 Sept, 2024
Blog: Facebook

I drank the kool-aid when I graduated and joined IBM ... got white shirts and 3-piece suits. Even tho I was in the science center, lots of customers liked me to stop by and shoot the breeze, including the manager of one of the largest, all-blue financial dataprocessing centers on the east coast. Then the branch manager did something that horribly offended the customer and the customer announced they were ordering an Amdahl system (would be the only one in large sea of IBM systems) in retribution.

I was asked to go sit onsite for 6-12 months to obfuscate why the customer was ordering an Amdahl system (this was back when Amdahl was only selling into the technical/university market and had yet to crack the commercial market) as part of the branch manager cover-up. I talked to the customer and they said they would enjoy having me onsite, but that wouldn't change their decision to order an Amdahl machine, so I declined IBM's offer. I was told that the branch manager was a good sailing buddy of the IBM CEO, and if I didn't do it, I could forget having an IBM career, promotions, raises. Last time I wore suits for IBM. Later found customers commenting it was a refreshing change from the usual empty suits.

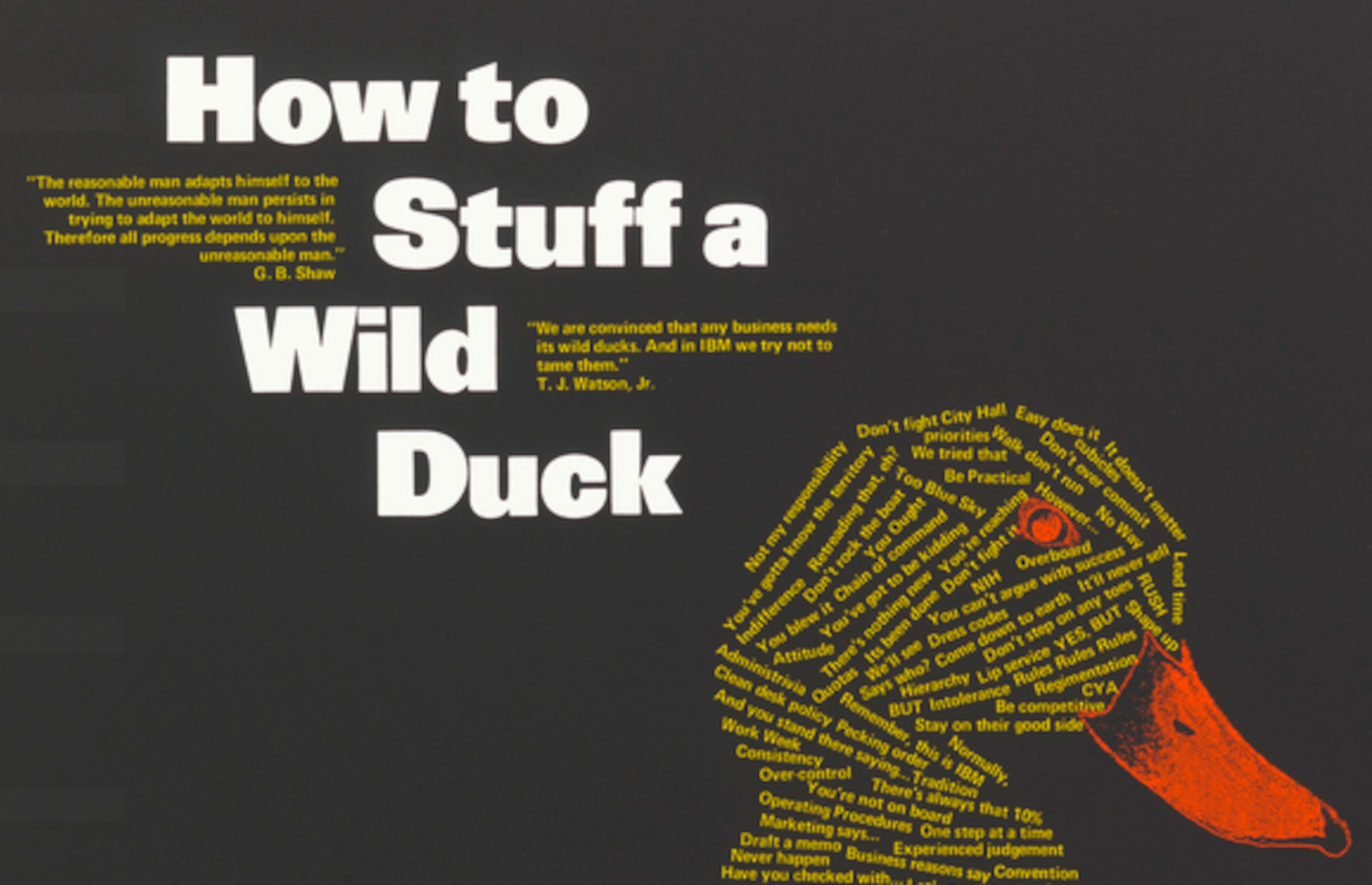

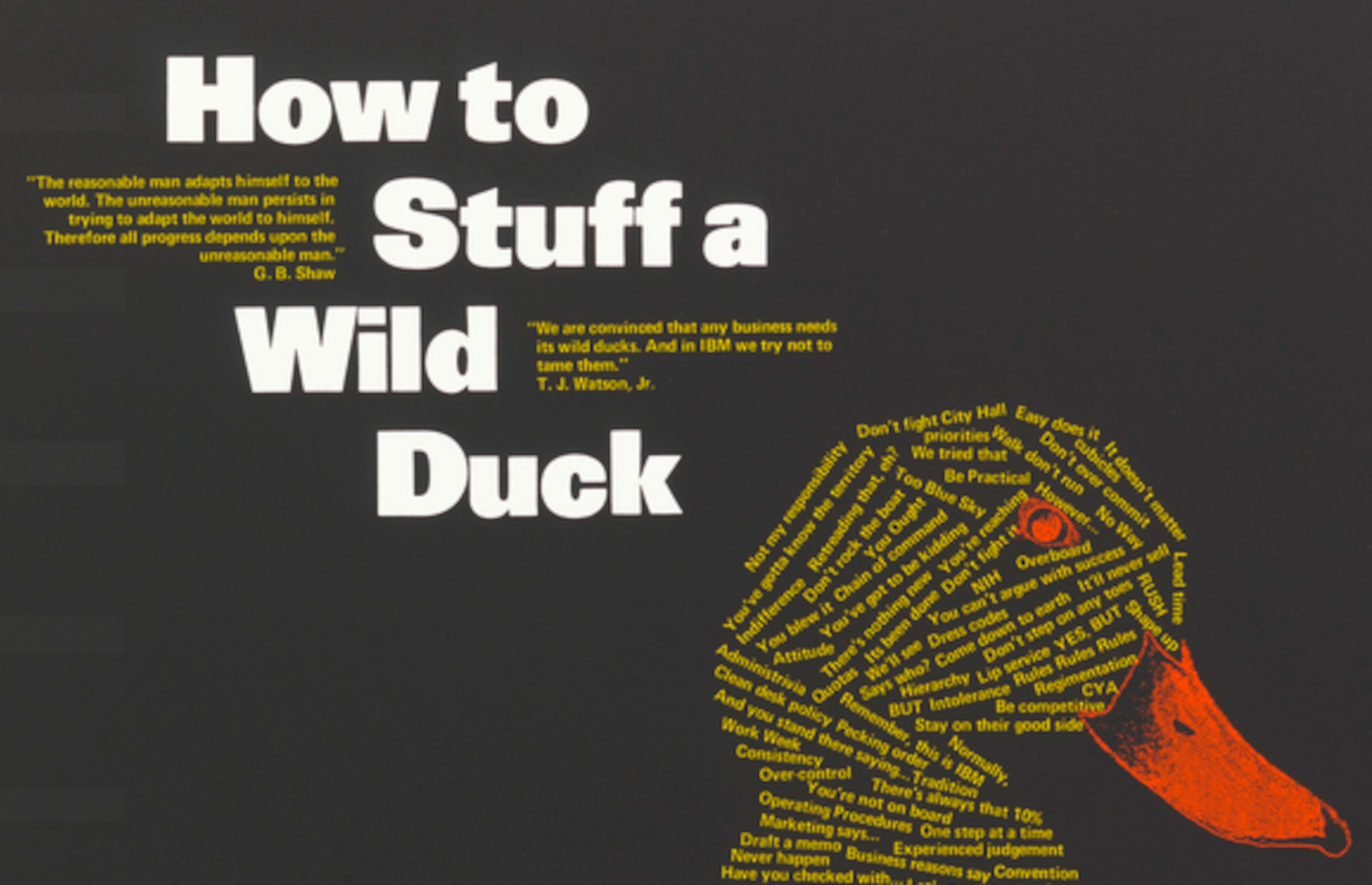

This was after (earlier) CEO Learson had tried & failed to block the bureaucrats, careerists and MBAs from destroying Watsons' culture/legacy

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

which was accelerated during the failing Future System project

"Computer Wars: The Post-IBM World", Time Books

https://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

... and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrong headedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive

... snip ...

some more FS detail:

http://www.jfsowa.com/computer/memo125.htm

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

some past post mentioning "suit kool-aid":

https://www.garlic.com/~lynn/2023g.html#42 IBM Koolaid

https://www.garlic.com/~lynn/2023c.html#56 IBM Empty Suits

https://www.garlic.com/~lynn/2023.html#51 IBM Bureaucrats, Careerists, MBAs (and Empty Suits)

https://www.garlic.com/~lynn/2022g.html#66 IBM Dress Code

https://www.garlic.com/~lynn/2021j.html#93 IBM 3278

https://www.garlic.com/~lynn/2021i.html#81 IBM Downturn

https://www.garlic.com/~lynn/2021.html#82 Kinder/Gentler IBM

https://www.garlic.com/~lynn/2018f.html#68 IBM Suits

https://www.garlic.com/~lynn/2018e.html#27 Wearing a tie cuts circulation to your brain

https://www.garlic.com/~lynn/2018d.html#6 Workplace Advice I Wish I Had Known

https://www.garlic.com/~lynn/2018.html#55 Now Hear This--Prepare For The "To Be Or To Do" Moment

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: The joy of FORTRAN
Newsgroups: comp.os.linux.misc, alt.folklore.computers
Date: Thu, 26 Sep 2024 07:49:14 -1000

Lynn Wheeler <lynn@garlic.com> writes:

trivia: 1972, CEO Learson tried (& failed) to block the bureaucrats, careerists, and MBAs from destroying Watsons' culture/legacy

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

it was greatly accelerated during the failing Future System effort, Ferguson & Morris, "Computer Wars: The Post-IBM World", Time Books

https://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

... and perhaps most damaging, the old culture under Watson Snr and Jr of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM lived in the shadow of defeat ... But because of the heavy investment of face by the top management, F/S took years to kill, although its wrong headedness was obvious from the very outset. "For the first time, during F/S, outspoken criticism became politically dangerous," recalls a former top executive

... snip ...

more FS info

http://www.jfsowa.com/computer/memo125.htm

then 1992, IBM has one of the largest losses in the history of US companies and was being reorged into the 13 baby blues (take-off on AT&T "baby bells" breakup a decade earlier) in preparation for breakup

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

we had already left IBM but get a call from the bowels of Armonk asking if we could help with the company breakup. Before we get started, the board brings in the former president of Amex as CEO, who (somewhat) reverses the breakup ... but it was difficult time saving a company that was on the verge of going under ... IBM somewhat barely surviving as financial engineering company

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: Emulating vintage computers
Newsgroups: alt.folklore.computers
Date: Thu, 26 Sep 2024 08:39:41 -1000

Lars Poulsen <lars@beagle-ears.com> writes:

A little over a decade ago I was asked to track down the IBM decision to add virtual memory to all 370s, and found a staff member to the executive making the decision. Basically MVT storage management was so bad that region sizes had to be specified four times larger than used ... as a result a typical 1mbyte 370/165 only ran four concurrent regions, insufficient to keep the system busy and justified. Mapping MVT to 16mbyte virtual memory would allow concurrent regions to be increased by a factor of four (capped at 15 by the 4bit storage protect keys) with little or no paging (aka VS2/SVS), sort of like running MVT in a CP/67 16mbyte virtual machine.
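The region arithmetic can be sketched in a few lines of Python. The constants come from the post; the function name is hypothetical, and the code assumes that one of the sixteen storage protect key values (key 0) is reserved for the system, which is what leaves 15 usable regions.

```python
REAL_REGIONS = 4       # concurrent regions on a typical 1mbyte 370/165 (from the post)
INCREASE_FACTOR = 4    # 16mbyte virtual memory allows four times as many regions
STORAGE_KEY_BITS = 4   # width of the 370 storage protect key


def max_regions(real_regions, factor, key_bits):
    # Assumption: key 0 is reserved for the system, so only the remaining
    # 2**key_bits - 1 key values can each protect a distinct region.
    usable_keys = 2 ** key_bits - 1
    return min(real_regions * factor, usable_keys)


print(max_regions(REAL_REGIONS, INCREASE_FACTOR, STORAGE_KEY_BITS))  # 15
```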

Late 80s got approval for HA/6000 project, originally for NYTimes to move their newspaper system (ATEX) off DEC VAXCluster to RS/6000. I rename it HA/CMP when I start doing numeric/scientific cluster scale-up with the national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Informix, and Ingres that had RDBMS VAXCluster support in the same source base with Unix).

IBM had been marketing a fault tolerant system as S/88 and the S/88 product administrator started taking us around to their customers ... and also got me to write a section for the corporate continuous availability strategy document, section got pulled when both Rochester (AS/400) and POK (mainframe) complained that they couldn't meet the requirements.

Early Jan1992, in meeting with Oracle CEO, AWD/Hester told Ellison that we would have 16-system clusters by mid-92 and 128-system clusters by ye-92 ... however by end of Jan1992, cluster scale-up had been transferred for announce as IBM Supercomputer and we were told we couldn't work on anything with more than four processors (we leave IBM a few months later). Complaints from the other IBM groups likely contributed to the decision.

(benchmarks are number of program iterations compared to reference platform, not actual instruction count)

1993: eight processor ES/9000-982 : 408MIPS, 51MIPS/processor

1993: RS6000/990 : 126MIPS; 16-system: 2,016MIPS, 128-system: 16,128MIPS

trivia: in the later half of the 90s, the i86 processor chip vendors do a hardware layer that translates i86 instructions into RISC micro-ops for execution.

1999: single IBM PowerPC 440 hits 1,000MIPS

1999: single Pentium3 (translation to RISC micro-ops for execution) hits 2,054MIPS (twice PowerPC 440)

2003: single Pentium4 processor 9.7BIPS (9,700MIPS)

2010: E5-2600 XEON server blade, two chip, 16 processor, aggregate 500BIPS (31BIPS/processor)

The 2010-era mainframe was 80 processor z196 rated at 50BIPS aggregate (625MIPS/processor), 1/10th XEON server blade

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

801/risc, iliad, romp, rios, pc/rt, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM (Empty) Suits
Date: 27 Sept, 2024
Blog: Facebook

re:

After transfer to SJR got to wander around silicon valley, would periodically drop in on TYMSHARE and/or see lots of people at the monthly meetings sponsored by Stanford SLAC. In Aug1976, TYMSHARE makes their CMS-based online computer conferencing (precursor to social media) "free" to (ibm user group) SHARE as VMSHARE ... archives here:

http://vm.marist.edu/~vmshare

I cut a deal with TYMSHARE to get monthly tape dump of all VMSHARE (and later PCSHARE) files for putting up on internal systems and the internal network. One of the biggest problems was IBM lawyers concerned that internal employees would be contaminated by direct exposure to unfiltered customer information.

recent posts mentioning TYMSHARE, VMSHARE, SLAC:

https://www.garlic.com/~lynn/2024e.html#143 The joy of FORTRAN

https://www.garlic.com/~lynn/2024e.html#139 RPG Game Master's Guide

https://www.garlic.com/~lynn/2024d.html#77 Other Silicon Valley

https://www.garlic.com/~lynn/2024c.html#43 TYMSHARE, VMSHARE, ADVENTURE

https://www.garlic.com/~lynn/2023f.html#116 Computer Games

https://www.garlic.com/~lynn/2023f.html#64 Online Computer Conferencing

https://www.garlic.com/~lynn/2023f.html#60 The Many Ways To Play Colossal Cave Adventure After Nearly Half A Century

https://www.garlic.com/~lynn/2023e.html#9 Tymshare

https://www.garlic.com/~lynn/2023e.html#6 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023d.html#115 ADVENTURE

https://www.garlic.com/~lynn/2023d.html#62 Online Before The Cloud

https://www.garlic.com/~lynn/2023d.html#37 Online Forums and Information

https://www.garlic.com/~lynn/2023d.html#16 Grace Hopper (& Ann Hardy)

https://www.garlic.com/~lynn/2023c.html#25 IBM Downfall

https://www.garlic.com/~lynn/2023c.html#14 Adventure

https://www.garlic.com/~lynn/2022f.html#50 z/VM 50th - part 3

https://www.garlic.com/~lynn/2022f.html#37 What's something from the early days of the Internet which younger generations may not know about?

https://www.garlic.com/~lynn/2022c.html#8 Cloud Timesharing

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#28 Early Online

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM (Empty) Suits
Date: 27 Sept, 2024
Blog: Facebook

re:

... also wandering around datacenters in silicon valley, including bldg14 (disk engineering) and bldg15 (disk product test) across the street. They were running 7x24, stand-alone, pre-scheduled testing and had mentioned trying MVS, but it had 15min MTBF (requiring manual re-ipl). I offer to rewrite the I/O supervisor to enable any amount of concurrent, on-demand testing (greatly improving productivity). Downside was they started blaming me anytime they had a problem and I had to spend an increasing amount of time playing disk engineer and diagnosing their problems. I then write an (internal only) research report and happened to mention the MVS 15min MTBF, bringing the wrath of the POK MVS group down on my head.

1980, STL (since renamed SVL) was bursting at the seams and was moving 300 people from the IMS group to an offsite bldg with dataprocessing service back to the STL datacenter. They had tried "remote 3270" but found the human factors unacceptable. I get con'ed into doing channel-extender support so they can place channel-attached 3270 controllers at the offsite bldg, so there is no perceptible difference in human factors between offsite and STL. Side-effect was 168-3 system throughput increased by 10-15%; 3270 controllers had been spread across all channels with DASD controllers, and the channel-extender significantly reduced channel busy (for the same 3270 terminal traffic) compared to the (really slow) 3270 controllers (improving DASD I/O throughput) ... and STL considered using channel-extender for all 3270s.

Then there was an attempt to release my support, but there was a group in POK playing with some serial stuff who were afraid that if it was in the market, it would make it more difficult to release their stuff (and got it vetoed).

Mid-80s, the father of 801/RISC wants me to help him get disk "wide-head" released. The original 3380 had 20 track spacing between each data track, which was cut in half, doubling tracks&cylinders; then it was cut again, tripling tracks&cylinders. Disk wide-head would transfer 16 closely placed data tracks in parallel ... however it required a 50mbytes/sec channel ... and mainframe channels were still 3mbytes/sec.

Then in 1988, the IBM branch office asks if I could help LLNL standardize some serial stuff they were working with, which quickly becomes fibre-channel standard (FCS, 1gbit/sec, full-duplex, aggregate 200mbyte/sec, including some stuff I had done in 1980) and do FCS for RS/6000. Then in the 1990s, POK gets their serial stuff released as ESCON, when it is already obsolete (17mbytes/sec). Then POK engineers become involved in FCS and define a heavy-weight protocol that significantly cuts the throughput ... which is eventually released as FICON. The most recent public benchmark I've found is the z196 "Peak I/O" benchmark getting 2M IOPS using 104 FICON. About the same time, an FCS was announced for E5-2600 server blades claiming over a million IOPS (two such FCS having higher throughput than 104 FICON). Also IBM pubs recommended keeping SAPs (system assist processors that do actual I/O) to 70% CPU (which would be 1.5M IOPS). Also with regard to mainframe CKD DASD throughput, no CKD DASD have been made for decades, all being simulated on industry standard fixed-block disks.
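To put the FICON and FCS figures side by side, here is a small Python sketch of the per-link arithmetic. The inputs are the numbers quoted in the post; "over a million IOPS" is treated as exactly one million, which is an assumption that makes it a floor for the FCS side.

```python
Z196_PEAK_IOPS = 2_000_000   # z196 "Peak I/O" benchmark (from the post)
Z196_FICON_LINKS = 104       # FICON links used in that benchmark
FCS_IOPS = 1_000_000         # "over a million IOPS" per FCS, taken as a floor

per_ficon = Z196_PEAK_IOPS / Z196_FICON_LINKS

print(round(per_ficon))                  # 19231 IOPS per FICON link
print(2 * FCS_IOPS >= Z196_PEAK_IOPS)    # True: two FCS match or beat 104 FICON
print(round(FCS_IOPS / per_ficon))       # 52: one FCS is worth about 52 FICON links
```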

One of the problems was the 3880 controller ... which people assumed would be like the 3830 controller but able to support 3mbyte/sec transfer ... which 3090 planned on. However while the 3880 had special hardware for 3mbyte/sec transfer, it had a really slow processor for everything else ... which significantly drove up channel busy. When 3090 found out how bad it really was, they realized they had to significantly increase the number of (3mbyte/sec) channels to achieve target system throughput. The increase in number of channels required an additional TCM (and the 3090 group semi-facetiously said they would bill the 3880 group for the increase in 3090 manufacturing costs). Marketing eventually respun the large increase in 3090 channel numbers as making it a great I/O machine (but it actually was to offset the 3880 increase in channel busy).

trivia: bldg15 got early engineering systems (for I/O testing) and received an early engineering 3033 (#3 or #4?). Since product testing was only taking a percent or two of CPU, we scrounge up a 3830 controller and string of 3330s, putting up our own private online service (including running 3270 coax under the street to my office in bldg28). Then, since the air-bearing simulation (originally for 3370 FBA thin-film floating head design, but also used later for 3380 CKD heads) was getting multiple week turn-around on the SJR 370/195 (even with high priority designation), we set it up on the bldg15 3033 and the air-bearing simulation was able to get multiple turn-arounds/day. Note that 3380 CKD was already transitioning to fixed-block (can be seen in records/track calculations that had record size rounded up to fixed cell-size).

getting to play disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

channel-extender posts

https://www.garlic.com/~lynn/submisc.html#channel.extender

FCS &/or FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: IBM (Empty) Suits
Date: 27 Sept, 2024
Blog: Facebook

re:

I was repeatedly being told I had no career, no promotions, no raises ... so when a head hunter asked me to interview for assistant to the president of a clone 370 maker (sort of subsidiary of a company on the other side of the pacific), I thought why not. It was going along well until one of the staff broached the subject of 370/xa documents (I had a whole drawer full of the documents, registered ibm confidential, kept under double lock&key and subject to surprise audits by local security). In response I mentioned that I had recently submitted some text to upgrade ethics in the Business Conduct Guidelines (had to be read and signed once a year) ... that ended the interview. That wasn't the end of it, later had a 3hr interview with an FBI agent, the gov. was suing the foreign parent company for industrial espionage (and I was on the building visitor log). I told the agent I wondered if somebody in plant site security might have leaked names of individuals who had registered ibm confidential documents.

Somebody in Endicott cons me into helping them with 138/148 ECPS microcode (also used for 4331/4341 follow-on), finding the 6kbytes of most heavily executed vm370 kernel code to be moved to microcode (running ten times faster) ... archived usenet/afc post with the analysis, 6kbytes represented 79.55% of kernel execution

https://www.garlic.com/~lynn/94.html#21
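One way to picture that kind of selection is a greedy pick of the hottest kernel paths until the microcode space runs out. The Python sketch below is only an illustration and is not taken from the archived analysis: the path names, sizes and percentages in `profile` are invented, and ranking by execution share per byte is an assumption. Only the 6kbyte budget, the ten times speedup and the 79.55% figure come from the post.

```python
def pick_for_microcode(paths, budget_bytes):
    """Greedy selection: highest share of kernel time per byte goes first.

    paths: list of (name, size_bytes, percent_of_kernel_time) tuples.
    Returns (chosen names, bytes used, percent of kernel time covered).
    """
    ranked = sorted(paths, key=lambda p: p[2] / p[1], reverse=True)
    chosen, used, covered = [], 0, 0.0
    for name, size, pct in ranked:
        if used + size <= budget_bytes:   # skip anything that no longer fits
            chosen.append(name)
            used += size
            covered += pct
    return chosen, used, covered


def kernel_time_after(fraction_moved, speedup=10):
    """Relative kernel time once the moved fraction runs `speedup` times faster."""
    return (1 - fraction_moved) + fraction_moved / speedup


# Hypothetical profile: names, sizes and percentages are invented for illustration.
profile = [
    ("dispatch", 1200, 22.0),
    ("free_storage", 800, 18.0),
    ("page_fault", 2500, 20.0),
    ("ccw_translate", 3000, 15.0),
    ("untranslate", 1500, 10.0),
    ("misc", 9000, 15.0),
]

chosen, used, covered = pick_for_microcode(profile, budget_bytes=6 * 1024)
print(chosen, used, covered)
print(kernel_time_after(0.7955))  # roughly 0.284 of the original kernel time
```

With the 79.55% figure from the post and a ten times speedup, the kernel would spend a bit under 30% of its former time, assuming the rest of the kernel is unchanged.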

then he cons me into running around the world presenting the ECPS business case to local planners and forecasters. I'm told WT forecasters can get fired for bad forecasts because they effectively are firm orders to the manufacturing plants for delivery to countries, while US region forecasters get promoted for forecasting whatever corporate tells them are strategic (and plants have to "eat" bad US forecasts, as a result plants will regularly redo US forecasts)

much later my wife had been con'ed into co-authoring a response to a gov. agency RFI for a campus-like, super-secure operation where she included 3-tier architecture. We were then out doing customer executive presentations on Ethernet, TCP/IP, Internet, high-speed routers, super-secure operation and 3-tier architecture (at a time when the communication group was fiercely fighting off client/server and distributed computing) and the communication group, token-ring, SNA and SAA forces were attacking us with all sorts of misinformation. The Endicott individual then had a top floor, large corner office in Somers running SAA and we would drop by periodically and tell him how badly his people were behaving.

3-tier architecture posts

https://www.garlic.com/~lynn/subnetwork.html#3tier

some posts mentioning Business Conduct Guidelines

https://www.garlic.com/~lynn/2022d.html#35 IBM Business Conduct Guidelines

https://www.garlic.com/~lynn/2022c.html#4 Industrial Espionage

https://www.garlic.com/~lynn/2022.html#48 Mainframe Career

https://www.garlic.com/~lynn/2022.html#47 IBM Conduct

https://www.garlic.com/~lynn/2021k.html#125 IBM Clone Controllers

https://www.garlic.com/~lynn/2021j.html#38 IBM Registered Confidential

https://www.garlic.com/~lynn/2021g.html#42 IBM Token-Ring

https://www.garlic.com/~lynn/2021d.html#86 Bizarre Career Events

https://www.garlic.com/~lynn/2021b.html#12 IBM "811", 370/xa architecture

https://www.garlic.com/~lynn/2019e.html#29 IBM History

https://www.garlic.com/~lynn/2019.html#83 The Sublime: Is it the same for IBM and Special Ops?

https://www.garlic.com/~lynn/2013e.html#42 More Whistleblower Leaks on Foreclosure Settlement Show Both Suppression of Evidence and Gross Incompetence

https://www.garlic.com/~lynn/2009h.html#66 "Guardrails For the Internet"

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: The joy of FORTRAN
Newsgroups: alt.folklore.computers, comp.os.linux.misc
Date: Fri, 27 Sep 2024 09:55:38 -1000

Peter Flass <peter_flass@yahoo.com> writes:

The IBM communication group was fiercely fighting off client/server and distributed computing and trying to block the mainframe TCP/IP release. When that got overturned they changed their tactic and claimed that since they had corporate strategic responsibility for everything that crossed datacenter walls, it had to be released through them. What shipped got aggregate 44kbytes/sec using nearly a whole 3090 processor. It was also made available on MVS by doing MVS simulation of the VM370 "diagnose" instruction.

I then do RFC1044 implementation and in some tuning tests at Cray Research between Cray and IBM 4341, get 4341 sustained channel throughput using only modest amount of 4341 CPU (something like 500 times improvement in bytes moved per instruction executed).

In the 90s, the IBM communication group hires a silicon valley contractor to implement tcp/ip support directly in VTAM, what he demo'ed had TCP running much faster than LU6.2. He was then told that everybody knows that LU6.2 is much faster than a "proper" TCP/IP implementation and they would only be paying for a "proper" implementation.

RFC1044 posts

https://www.garlic.com/~lynn/subnetwork.html#1044

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: The joy of FORTRAN
Newsgroups: alt.folklore.computers, comp.os.linux.misc
Date: Fri, 27 Sep 2024 11:13:06 -1000

re:

trivia: a late 80s univ study found the mainframe VTAM LU6.2 implementation had a 160k instruction pathlength (and 15 buffer copies) compared to unix/bsd (4.3 tahoe/reno) TCP with a 5k instruction pathlength (and 5 buffer copies).

I was on Greg Chesson's XTP TAB and did further optimization with a CRC trailer protocol, with an outboard XTP LAN chip where the CRC was calculated as the packet flowed through and added/checked in the trailer. It also allowed for no buffer copy with scatter/gather (aka doing packet I/O directly from user memory).
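The point of a trailer (rather than header) check value is that the sender can compute it while the data streams out, without a separate pass over the packet. The Python sketch below illustrates just that idea. It is not the XTP wire format: CRC-32 from `zlib` is a stand-in check function, the 4-byte big-endian trailer layout and both function names are assumptions, and the receiver joins the chunks for brevity where hardware would check on the fly.

```python
import zlib


def send_with_crc_trailer(chunks):
    """Yield payload chunks as they pass through, then a 4-byte CRC trailer."""
    crc = 0
    for chunk in chunks:
        crc = zlib.crc32(chunk, crc)   # running CRC, updated as each chunk flows by
        yield chunk                    # chunk goes out without being re-buffered
    yield crc.to_bytes(4, "big")       # trailer is known only after the last chunk


def receive_and_check(stream):
    """Recompute the CRC over the payload and compare it with the trailer."""
    data = b"".join(stream)            # joined here only to keep the sketch short
    payload, trailer = data[:-4], data[-4:]
    return payload, zlib.crc32(payload) == int.from_bytes(trailer, "big")


chunks = [b"header", b"payload-part-1", b"payload-part-2"]
payload, ok = receive_and_check(send_with_crc_trailer(chunks))
print(ok)  # True
```

Passing a list of separate chunks loosely mirrors scatter/gather: the pieces are never copied into one contiguous send buffer on the sending side.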

XTP/HSP posts

https://www.garlic.com/~lynn/subnetwork.html#xtphsp

posts mention VTAM/BSD-tcp/ip study

https://www.garlic.com/~lynn/2024e.html#71 The IBM Way by Buck Rogers

https://www.garlic.com/~lynn/2022h.html#94 IBM 360

https://www.garlic.com/~lynn/2022h.html#86 Mainframe TCP/IP

https://www.garlic.com/~lynn/2022h.html#71 The CHRISTMA EXEC network worm - 35 years and counting!

https://www.garlic.com/~lynn/2022g.html#48 Some BITNET (& other) History

https://www.garlic.com/~lynn/2006l.html#53 Mainframe Linux Mythbusting (Was: Using Java in batch on z/OS?)

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: Emulating vintage computers
Newsgroups: alt.folklore.computers
Date: Sat, 28 Sep 2024 13:02:48 -1000

antispam@fricas.org (Waldek Hebisch) writes:

from original post:

(benchmarks are number of program iterations compared to reference platform, not actual instruction count)

...

industry standard MIPS benchmark had been number of program iterations compared to one of the reference platforms (370/158-3 assumed to be one MIPS) ... not actual instruction count ... sort of normalizes across large number of different architectures.
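A minimal Python sketch of that kind of rating: the "MIPS" figure is just the iteration rate relative to the reference platform, scaled by the reference platform's assumed rating. The function name and the iteration rates in the example are hypothetical; only the convention (370/158-3 taken as one MIPS) comes from the post.

```python
def benchmark_mips(iterations_per_sec, reference_iterations_per_sec, reference_mips=1.0):
    """Rating relative to a reference platform (e.g. 370/158-3 assumed to be 1 MIPS).

    No instructions are counted; only benchmark iterations are compared.
    """
    return reference_mips * iterations_per_sec / reference_iterations_per_sec


# Hypothetical rates: the reference runs 200 iterations/sec, the machine under test 25,200.
print(benchmark_mips(25_200, 200))  # 126.0
```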

consideration has been increasing processor rates w/o corresponding improvement in memory latency. For instance IBM documentation claimed that half of the per processor throughput increase going from z10 to z196 was the introduction of some out-of-order execution (attempting some compensation for cache miss and memory latency, features that have been in other platforms for decades).

z10, 64 processors, 30BIPS (469MIPS/proc), Feb2008

z196, 80 processors, 50BIPS (625MIPS/proc), Jul2010

aka half of the 469MIPS/proc to 625MIPS/proc increase ... (625-469)/2; aka 78MIPS per processor from z10 to z196 due to some out-of-order execution.
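The same arithmetic as a short Python sketch, using only the aggregate ratings and processor counts quoted above (variable names are illustrative):

```python
z10_per_proc = round(30_000 / 64)     # 30BIPS over 64 processors -> 469 MIPS
z196_per_proc = round(50_000 / 80)    # 50BIPS over 80 processors -> 625 MIPS

gain = z196_per_proc - z10_per_proc   # per-processor increase, z10 to z196
out_of_order_share = gain / 2         # half attributed to out-of-order execution

print(z10_per_proc, z196_per_proc, gain, out_of_order_share)  # 469 625 156 78.0
```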

There have been some pubs about recent memory latency when measured in terms of processor clock cycles is similar to 60s disk latency when measured in terms of 60s processor clock cycles.

trivia: early 80s, I wrote a tome that disk relative system throughput had declined by an order of magnitude since the mid-60s (i.e. disks got 3-5 times faster while systems got 40-50 times faster). A disk division executive took exception and assigned the performance group to refute the claims. After a few weeks they came back and effectively said I had slightly understated the problem. They then respun the analysis into a presentation about configuring disks to increase system throughput (16Aug1984, SHARE 63, B874).
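The "order of magnitude" follows directly from the two ranges. A small Python sketch, taking the 3-5 and 40-50 figures from the post as the only inputs:

```python
disk_speedup = (3, 5)       # disks got 3-5 times faster
system_speedup = (40, 50)   # systems got 40-50 times faster

# Relative decline = how much faster systems got compared with disks.
smallest_decline = system_speedup[0] / disk_speedup[1]   # 40 / 5
largest_decline = system_speedup[1] / disk_speedup[0]    # 50 / 3

print(smallest_decline, round(largest_decline, 1))  # 8.0 16.7
```

The range of roughly 8 to 17 times brackets the factor of ten in the original claim.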

trivia2: a little over a decade ago, I was asked to track down the decision to add virtual memory to all IBM 370s. I found a staff member to the executive making the decision. Basically MVT storage management was so bad that region sizes had to be specified four times larger than used. As a result a typical 1mbyte 370/165 only ran four concurrent regions at a time, insufficient to keep the 165 busy and justified. Going to MVT in 16mbyte virtual memory (VS2/SVS) allowed increasing the number of regions by a factor of four (capped at 15 because of 4bit storage protect keys) with little or no paging ... similar to running MVT in a CP67 16mbyte virtual machine (aka increasing overlapped execution while waiting on disk I/O, and out-of-order execution increasing overlapped execution while waiting on memory). post with some email extracts about adding virtual memory to all 370s

https://www.garlic.com/~lynn/2011d.html#73

some recent post mentioning B874 share presentation

https://www.garlic.com/~lynn/2024e.html#116 what's a mainframe, was is Vax addressing sane today

https://www.garlic.com/~lynn/2024e.html#24 Public Facebook Mainframe Group

https://www.garlic.com/~lynn/2024d.html#88 Computer Virtual Memory

https://www.garlic.com/~lynn/2024d.html#32 ancient OS history, ARM is sort of channeling the IBM 360

https://www.garlic.com/~lynn/2024d.html#24 ARM is sort of channeling the IBM 360

https://www.garlic.com/~lynn/2024c.html#109 Old adage "Nobody ever got fired for buying IBM"

https://www.garlic.com/~lynn/2024c.html#55 backward architecture, The Design of Design

https://www.garlic.com/~lynn/2023g.html#32 Storage Management

https://www.garlic.com/~lynn/2023e.html#92 IBM DASD 3380

https://www.garlic.com/~lynn/2023e.html#7 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023b.html#26 DISK Performance and Reliability

https://www.garlic.com/~lynn/2023b.html#16 IBM User Group, SHARE

https://www.garlic.com/~lynn/2023.html#33 IBM Punch Cards

https://www.garlic.com/~lynn/2023.html#6 Mainrame Channel Redrive

PC-based IBM mainframe-compatible systems

https://en.wikipedia.org/wiki/PC-based_IBM_mainframe-compatible_systems

flex-es (gone 404 but lives on at wayback machine)

https://web.archive.org/web/20240130182226/https://www.funsoft.com/

some posts mention both hercules and flex-es

https://www.garlic.com/~lynn/2017e.html#82 does linux scatter daemons on multicore CPU?

https://www.garlic.com/~lynn/2012p.html#13 AMC proposes 1980s computer TV series Halt & Catch Fire

https://www.garlic.com/~lynn/2010e.html#71 Entry point for a Mainframe?

https://www.garlic.com/~lynn/2009q.html#29 Check out Computer glitch to cause flight delays across U.S. - MarketWatch

https://www.garlic.com/~lynn/2009q.html#26 Check out Computer glitch to cause flight delays across U.S. - MarketWatch

https://www.garlic.com/~lynn/2003d.html#10 Low-end processors (again)

https://www.garlic.com/~lynn/2003.html#39 Flex Question

--


From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: Emulating vintage computers
Newsgroups: alt.folklore.computers
Date: Sat, 28 Sep 2024 14:01:37 -1000

re:

emulation trivia

Note upthread mentions helping endicott do 138/148 ECPS ... basically

manual compiling selected code into "native" (micro)code running ten

times faster. Then in the late 90s did some consulting for Fundamental

Software

https://web.archive.org/web/20240130182226/https://www.funsoft.com/

What is this zPDT? (and how does it fit in?)

https://www.itconline.com/wp-content/uploads/2017/07/What-is-zPDT.pdf

More recent versions of zPDT have added a "Just-In-Time" (JIT)

compiled mode to this. Some algorithm determines whether a section of

code should be interpreted or whether it would be better to invest

some more initial cycles to compile the System z instructions into

equivalent x86 instructions to simplify the process somewhat). This

interpreter plus JIT compiler is what FLEX-ES used to achieve its high

performance. FLEX-ES also cached the compiled sections of code for

later reuse. I have not been able to verify that zPDT does this

caching also, but I suspect so.

... snip ...
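A minimal sketch of the interpret-or-compile decision and the compiled-code cache described in the quote above. The toy two-instruction set, the `HOT_THRESHOLD` value, and every name here are assumptions made up for illustration; none of it reflects actual zPDT or FLEX-ES internals.

```python
# Toy sketch: interpret cold code blocks, "compile" hot ones, cache the result.
# Instruction set, threshold and names are hypothetical, not zPDT/FLEX-ES internals.

HOT_THRESHOLD = 3  # assumed: executions before a block is judged worth compiling


def interpret(block, acc):
    """Slow path: execute a block one instruction at a time."""
    for op, arg in block:
        if op == "ADD":
            acc += arg
        elif op == "MUL":
            acc *= arg
        else:
            raise ValueError(f"unknown op {op}")
    return acc


def compile_block(block):
    """Fast path: translate the whole block into one host-level function."""
    lines = ["def compiled(acc):"]
    for op, arg in block:
        if op == "ADD":
            lines.append(f"    acc += {arg!r}")
        elif op == "MUL":
            lines.append(f"    acc *= {arg!r}")
        else:
            raise ValueError(f"unknown op {op}")
    lines.append("    return acc")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["compiled"]


class Emulator:
    def __init__(self):
        self.exec_counts = {}   # block address -> times interpreted so far
        self.code_cache = {}    # block address -> compiled function
        self.compilations = 0

    def run_block(self, addr, block, acc):
        compiled = self.code_cache.get(addr)
        if compiled is not None:
            return compiled(acc)            # reuse the cached translation
        count = self.exec_counts.get(addr, 0) + 1
        self.exec_counts[addr] = count
        if count >= HOT_THRESHOLD:          # hot: invest cycles in compiling
            self.code_cache[addr] = compile_block(block)
            self.compilations += 1
            return self.code_cache[addr](acc)
        return interpret(block, acc)        # cold: just interpret
```

Running the same block five times interprets it twice, compiles it once on the third pass, and serves the last two passes from the cache.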

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: TYMSHARE, Engelbart, Ann Hardy Date: 29 Sept, 2024 Blog: Facebook

When M/D (McDonnell-Douglas) was buying TYMSHARE,

I was also brought in to evaluate GNOSIS for its spin-off as KeyKOS to

Key Logic. Ann Hardy at Computer History Museum

https://www.computerhistory.org/collections/catalog/102717167

Ann rose up to become Vice President of the Integrated Systems

Division at Tymshare, from 1976 to 1984, which did online airline

reservations, home banking, and other applications. When Tymshare was

acquired by McDonnell-Douglas in 1984, Ann's position as a female VP

became untenable, and was eased out of the company by being encouraged

to spin out Gnosis, a secure, capabilities-based operating system

developed at Tymshare. Ann founded Key Logic, with funding from Gene

Amdahl, which produced KeyKOS, based on Gnosis, for IBM and Amdahl

mainframes. After closing Key Logic, Ann became a consultant, leading

to her cofounding Agorics with members of Ted Nelson's Xanadu project.

... snip ...

GNOSIS

http://cap-lore.com/CapTheory/upenn/Gnosis/Gnosis.html

Tymshare (, IBM) & Ann Hardy

https://medium.com/chmcore/someone-elses-computer-the-prehistory-of-cloud-computing-bca25645f89

Ann Hardy is a crucial figure in the story of Tymshare and

time-sharing. She began programming in the 1950s, developing software

for the IBM Stretch supercomputer. Frustrated at the lack of

opportunity and pay inequality for women at IBM -- at one point she

discovered she was paid less than half of what the lowest-paid man

reporting to her was paid -- Hardy left to study at the University of

California, Berkeley, and then joined the Lawrence Livermore National

Laboratory in 1962. At the lab, one of her projects involved an early

and surprisingly successful time-sharing operating system.

... snip ...

note: TYMSHARE made their VM370/CMS-based online computer conferencing

system "free" to (ibm mainframe user group) SHARE in Aug1976 as

VMSHARE, archives

http://vm.marist.edu/~vmshare

I would regularly drop in on TYMSHARE (and/or see them at monthly meetings hosted by Stanford SLAC) and cut a deal with them to get a monthly tape dump of all VMSHARE (and later PCSHARE) files for putting up on internal IBM systems and network (the biggest hassle was lawyers concerned that internal IBM employees would be contaminated by being directly exposed to unfiltered customer information). This probably contributed to my being blamed for online computer conferencing on the IBM internal network in the late 70s and early 80s (folklore is that when the corporate executive committee was told, 5 of 6 wanted to fire me).

On one TYMSHARE visit, they demoed ADVENTURE, which somebody had found on the Stanford SAIL PDP10 system and ported to VM370/CMS, and I got a full source copy for putting up an executable on internal IBM systems (I used to send full source to anybody who demonstrated that they got all points, and shortly there were versions that had more points as well as a PLI port).

online computer conferencing posts

https://www.garlic.com/~lynn/subnetwork.html#cmc

some past posts mentioning Tymshare, Ann Hardy, Engelbart

https://www.garlic.com/~lynn/2024c.html#25 Tymshare & Ann Hardy

https://www.garlic.com/~lynn/2023e.html#9 Tymshare

https://www.garlic.com/~lynn/2008s.html#3 New machine code

https://www.garlic.com/~lynn/aadsm17.htm#31 Payment system and security conference

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: 3270 Terminals Date: 29 Sept, 2024 Blog: Facebook

3277/3272 had hardware response of .086sec ... it was followed by the 3278, which moved a lot of electronics back into the 3274 controller (reducing 3278 manufacturing cost) ... drastically increasing protocol chatter and latency, increasing hardware response to .3-.5sec (depending on amount of data). At the time there were studies showing quarter sec response improved productivity. Some number of internal VM datacenters were claiming quarter second system response ... but you needed .164sec or better system response with a 3277 terminal to get quarter sec response for the person (I was shipping an enhanced production operating system internally, getting .11sec system response). A complaint written to the 3278 Product Administrator got back a response that the 3278 wasn't for interactive computing but for "data entry" (aka electronic keypunch). The MVS/TSO crowd never even noticed; it was a really rare TSO operation that even saw 1sec system response. Later the IBM/PC 3277 hardware emulation card would get 4-5 times the upload/download throughput of the 3278 card.
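The response-time arithmetic above can be checked with a few lines of Python. This is only a sketch: it assumes perceived response is simply terminal hardware response plus system response, and the variable names are made up; the numbers are the ones quoted in the post.

```python
# Perceived response = terminal hardware response + system response (assumed model).
# Figures are the ones quoted in the post.

TARGET = 0.25            # quarter-second response as seen by the person
HW_3277 = 0.086          # 3272/3277 hardware response (sec)
HW_3278 = (0.3, 0.5)     # 3274/3278 hardware response range (sec)


def perceived(hardware, system):
    """Response seen at the terminal under the additive model."""
    return hardware + system


def system_budget(hardware, target=TARGET):
    """System response allowed to still hit the target; negative = unattainable."""
    return target - hardware


budget_3277 = system_budget(HW_3277)                  # about 0.164 sec
with_enhanced_system = perceived(HW_3277, 0.11)       # about 0.196 sec
budget_3278 = [system_budget(hw) for hw in HW_3278]   # both negative

print(f"3277 system budget: {budget_3277:.3f}s")
print(f"3277 with .11s system response: {with_enhanced_system:.3f}s")
print("3278 system budgets:", [f"{b:.2f}s" for b in budget_3278])
```

Under this model the 3278 budget is negative at both ends of its range, i.e. no system response, however fast, gets a 3278 user to quarter second.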

Also 3270 protocol was half-duplex ... so if you tried hitting a key while the screen was being updated, it would lock the keyboard and you would have to stop and reset. YKT did a FIFO box: unplug the 3277 keyboard from the 3277 display, plug the FIFO box into the display and plug the keyboard into the FIFO box, eliminating the keyboard lock scenario (there was enough electronics in the 3277 terminal that it was possible to do a number of adaptations, including the 3277GA).

trivia: 1980, IBM STL (since renamed SVL) was bursting at the seams and 300 people (w/3270 terminals) from the IMS group were being moved to an offsite bldg with dataprocessing service back to the STL datacenter. They had tried "remote 3270" but found the human factors totally unacceptable. I get con'ed into doing channel-extender service (STL was running my enhanced systems) so that channel-attached 3270 controllers could be placed at the offsite bldg with no perceptible difference in human factors between offsite and in STL. Note a side-effect was that 168 system throughput increased by 10-15%. The 3270 controllers had previously been spread across 168 channels with DASD; moving the 3270 channel-attached controllers to channel-extenders significantly reduced the channel busy (for the same amount of 3270 traffic), improving DASD (& system) throughput. There was consideration of moving all their 3270 controllers to channel-extenders.

channel-extender posts

https://www.garlic.com/~lynn/submisc.html#channel.extender

recent posts mentioning 3272/3277 and 3274/3278 interactive

https://www.garlic.com/~lynn/2024e.html#26 VMNETMAP

https://www.garlic.com/~lynn/2024d.html#13 MVS/ISPF Editor

https://www.garlic.com/~lynn/2024c.html#19 IBM Millicode

https://www.garlic.com/~lynn/2024b.html#31 HONE, Performance Predictor, and Configurators

https://www.garlic.com/~lynn/2024.html#68 IBM 3270

https://www.garlic.com/~lynn/2024.html#42 Los Gatos Lab, Calma, 3277GA

https://www.garlic.com/~lynn/2023g.html#70 MVS/TSO and VM370/CMS Interactive Response

https://www.garlic.com/~lynn/2023f.html#78 Vintage Mainframe PROFS

https://www.garlic.com/~lynn/2023e.html#0 3270

https://www.garlic.com/~lynn/2023c.html#42 VM/370 3270 Terminal

https://www.garlic.com/~lynn/2023b.html#4 IBM 370

https://www.garlic.com/~lynn/2023.html#2 big and little, Can BCD and binary multipliers share circuitry?

https://www.garlic.com/~lynn/2022h.html#96 IBM 3270

https://www.garlic.com/~lynn/2022c.html#68 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2022b.html#123 System Response

https://www.garlic.com/~lynn/2022b.html#110 IBM 4341 & 3270

https://www.garlic.com/~lynn/2022b.html#33 IBM 3270 Terminals

https://www.garlic.com/~lynn/2022.html#94 VM/370 Interactive Response

https://www.garlic.com/~lynn/2021j.html#74 IBM 3278

https://www.garlic.com/~lynn/2021i.html#69 IBM MYTE

https://www.garlic.com/~lynn/2021c.html#0 Colours on screen (mainframe history question) [EXTERNAL]

https://www.garlic.com/~lynn/2021.html#84 3272/3277 interactive computing

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: The boomer generation hit the economic jackpot Date: 29 Sept, 2024 Blog: Facebook

The boomer generation hit the economic jackpot. Young people will inherit their massive debts

Early last decade, the new (US) Republican speaker of the house publicly said he was cutting the budget for the agency responsible for recovering $400B in taxes on funds illegally stashed in overseas tax havens by 52,000 wealthy Americans (over and above new legislation after the turn of century that provided for legal stashing funds overseas). Later there was news on a few billion in fines for banks responsible for facilitating the illegal tax evasion ... but nothing on recovering the owed taxes or associated fines or jail sentences (significantly contributing to congress being considered most corrupt institution on earth).

Earlier the previous decade, 2002 (shortly after turn of the century), congress had let the fiscal responsibility act lapse (spending couldn't exceed revenue, on its way to eliminating all federal debt). A 2010 CBO report found that for 2003-2009, spending increased $6T and taxes were cut $6T, for a $12T gap compared to a fiscally responsible budget (1st time taxes were cut to not pay for two wars) ... sort of confluence of special interests wanting huge tax cut, military-industrial complex wanting huge spending increase, and Too-Big-To-Fail wanting huge debt increase (since then the US federal debt has close to tripled).

more refs:

https://www.icij.org/investigations/paradise-papers/

http://www.amazon.com/Treasure-Islands-Havens-Stole-ebook/dp/B004OA6420/

tax fraud, tax evasion, tax loopholes, tax abuse, tax avoidance, tax

haven posts

https://www.garlic.com/~lynn/submisc.html#tax.evasion

too-big-to-fail (too-big-to-prosecute, too-big-to-jail) posts

https://www.garlic.com/~lynn/submisc.html#too-big-to-fail

military-industrial(-congressional) complex posts

https://www.garlic.com/~lynn/submisc.html#military.industrial.complex

capitalism posts

https://www.garlic.com/~lynn/submisc.html#capitalism

past specific posts mentioning Paradise papers and Treasure Island Havens

https://www.garlic.com/~lynn/2021j.html#44 The City of London Is Hiding the World's Stolen Money

https://www.garlic.com/~lynn/2021f.html#56 U.K. Pushes for Finance Exemption From Global Taxation Deal

https://www.garlic.com/~lynn/2019e.html#99 Is America ready to tackle economic inequality?

https://www.garlic.com/~lynn/2019e.html#93 Trump Administration Scaling Back Rules Meant to Stop Corporate Inversions

https://www.garlic.com/~lynn/2018f.html#8 The LLC Loophole; In New York, where an LLC is legally a person, companies can use the vehicles to blast through campaign finance limits

https://www.garlic.com/~lynn/2018e.html#107 The LLC Loophole; In New York, where an LLC is legally a person

https://www.garlic.com/~lynn/2017h.html#64 endless medical arguments, Disregard post (another screwup)

https://www.garlic.com/~lynn/2017.html#52 TV Show "Hill Street Blues"

https://www.garlic.com/~lynn/2017.html#35 Hammond threatens EU with aggressive tax changes after Brexit

https://www.garlic.com/~lynn/2016f.html#103 Chain of Title: How Three Ordinary Americans Uncovered Wall Street's Great Foreclosure Fraud

https://www.garlic.com/~lynn/2016f.html#35 Deutsche Bank and a $10Bn Money Laundering Nightmare: More Context Than You Can Shake a Stick at

https://www.garlic.com/~lynn/2016.html#92 Thanks Obama

https://www.garlic.com/~lynn/2015e.html#94 1973--TI 8 digit electric calculator--$99.95

https://www.garlic.com/~lynn/2015c.html#56 past of nukes, was Future of support for telephone rotary dial ?

https://www.garlic.com/~lynn/2014m.html#2 weird apple trivia

https://www.garlic.com/~lynn/2013m.html#66 NSA Revelations Kill IBM Hardware Sales In China

https://www.garlic.com/~lynn/2013l.html#60 Retirement Heist

https://www.garlic.com/~lynn/2013l.html#1 What Makes a Tax System Bizarre?

https://www.garlic.com/~lynn/2013k.html#60 spacewar

https://www.garlic.com/~lynn/2013k.html#57 The agency problem and how to create a criminogenic environment

https://www.garlic.com/~lynn/2013k.html#2 IBM Relevancy in the IT World

https://www.garlic.com/~lynn/2013j.html#26 What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2013j.html#3 What Makes an Architecture Bizarre?

https://www.garlic.com/~lynn/2013i.html#81 What Makes a Tax System Bizarre?

https://www.garlic.com/~lynn/2013i.html#65 The Real Snowden Question

https://www.garlic.com/~lynn/2013i.html#54 How do you feel about the fact that India has more employees than US?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Computer Wars: The Post-IBM World Date: 29 Sept, 2024 Blog: Facebook

CEO Learson had tried&failed to block the bureaucrats, careerists and MBAs from destroying Watsons' culture/legacy

... was greatly accelerated by the failing Future System project from

"Computer Wars: The Post-IBM World", Time Books

https://www.amazon.com/Computer-Wars-The-Post-IBM-World/dp/1587981394

... and perhaps most damaging, the old culture under Watson Snr and Jr

of free and vigorous debate was replaced with *SYCOPHANCY* and *MAKE

NO WAVES* under Opel and Akers. It's claimed that thereafter, IBM

lived in the shadow of defeat ... But because of the heavy investment

of face by the top management, F/S took years to kill, although its

wrong headedness was obvious from the very outset. "For the first

time, during F/S, outspoken criticism became politically dangerous,"

recalls a former top executive

... snip ...

... note: FS was completely different and was going to replace all

370s (during FS, internal politics was killing off 370 efforts, claim

is that the lack of new 370 during FS is credited with giving the

clone 370 makers their market foothold). When FS implodes, there is

mad rush to get stuff back into the 370 product pipelines, including

kicking off the quick&dirty 3033&3081 efforts in

parallel. Trivia: I continued to work on 360&370 stuff all during

FS, including periodically ridiculing what they were doing. Some more

FS detail:

http://www.jfsowa.com/computer/memo125.htm

https://people.computing.clemson.edu/~mark/fs.html

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

The damage was done in the 20yrs between Learson's failed effort and

1992 when IBM has one of the largest losses in the history of US

companies and was being reorged into the 13 baby blues (take off on

AT&T "baby bells" breakup a decade earlier) in preparation for

breaking up the company

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

we had already left IBM but get a call from the bowels of Armonk

asking if we could help with the company breakup. Before we get

started, the board brings in the former president of Amex as CEO, who

(somewhat) reverses the breakup ... and uses some of the techniques

used at RJR (ref gone 404, but lives on at wayback machine).

https://web.archive.org/web/20181019074906/http://www.ibmemployee.com/RetirementHeist.shtml

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

Former AMEX President posts

https://www.garlic.com/~lynn/submisc.html#gerstner

pension posts

https://www.garlic.com/~lynn/submisc.html#pensions

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: CSC Virtual Machine Work Date: 29 Sept, 2024 Blog: Facebook

As far as I know, science center reports were trashed when the science centers were shut down.

I got hard copy of Comeau's presentation at SEAS and OCR'ed it:

https://www.garlic.com/~lynn/cp40seas1982.txt

Also another version at Melinda's history site:

https://www.leeandmelindavarian.com/Melinda#VMHist

and

https://www.leeandmelindavarian.com/Melinda/JimMarch/CP40_The_Origin_of_VM370.pdf

We had the IBM HA/CMP product and subcontracted a lot of work out to CLaM; Comeau had left IBM and was doing a number of things, including founding CLaM. When Cambridge was shut down, CLaM took over the science center space.

CSC had wanted a 360/50 to modify with virtual memory, but all the spare 360/50s were going to the FAA ATC project ... so they got a 360/40 to modify and did CP40/CMS. It morphs into CP67/CMS when the 360/67, standard with virtual memory, becomes available.

I take a two credit hr intro to fortran/computers. The univ was getting a 360/67 for tss/360 to replace 709/1401; temporarily, pending availability of the 360/67, the 1401 was replaced with a 360/30, and at the end of the intro class I was hired to rewrite 1401 MPIO (unit record front-end for 709) in 360 assembler for the 360/30 (OS360/PCP). The univ shut down the datacenter on weekends and I would have the place dedicated (although 48hrs w/o sleep made monday classes hard). I was given a lot of software&hardware manuals and got to design and implement my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc ... and within a few weeks had a 2000 card assembler program.

Within a year of taking the intro class, the 360/67 arrives and the univ hires me fulltime responsible for OS/360 (running on 360/67 as 360/65, tss/360 never came to production fruition). Student fortran had run under a second on 709 but well over a minute with os360/MFT. I install HASP and it cuts the time in half. First sysgen was MFTR9.5. Then I start redoing stage2 sysgen to carefully place datasets and PDS members (to optimize arm seek and multi-track search), cutting another 2/3rds to 12.9 secs (student fortran never gets better than 709 until I install univ of waterloo Watfor).
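Working backwards from the 12.9 sec figure gives a rough idea of the starting point. This is a back-of-envelope sketch only: the post gives just "well over a minute", "in half", "another 2/3rds" and 12.9 secs, so the intermediate values below are inferences and the variable names are made up.

```python
# Back out the earlier student-fortran job times from the quoted 12.9 secs.
# Only the 12.9 figure and the two fractions come from the post; the rest is inferred.

AFTER_SYSGEN = 12.9   # secs, after careful dataset/PDS member placement
SYSGEN_CUT = 2 / 3    # stage2 sysgen rework cut "another 2/3rds"
HASP_CUT = 1 / 2      # HASP "cuts the time in half"

after_hasp = AFTER_SYSGEN / (1 - SYSGEN_CUT)   # about 38.7 secs
original_mft = after_hasp / (1 - HASP_CUT)     # about 77.4 secs

print(f"after HASP: about {after_hasp:.1f}s")
print(f"original MFT: about {original_mft:.1f}s")
```

The inferred starting point of roughly 77 secs is consistent with the "well over a minute" in the text.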

Jan1968, CSC came out to install CP67 at the univ (3rd after CSC itself and MIT Lincoln Labs) and I mostly got to play with it during my dedicated weekend time, spending the first few months rewriting lots of CP67 for running OS/360 in a virtual machine. My OS360 test job stream ran 322secs on the bare machine, initially 856secs virtually (534secs CP67 CPU); I managed to get CP67 CPU down to 113secs. I then redo dispatching/scheduling (dynamic adaptive resource management), page replacement, thrashing controls, ordered disk arm seek, 2301/drum multi-page rotational ordered transfers (from 70-80 4k transfers/sec to 270/sec peak), bunch of other stuff ... for CMS interactive computing. Then to further cut CMS CP67 CPU overhead I do a special CCW. Bob Adair criticizes it because it violates 360 architecture ... and it has to be redone as a DIAGNOSE instruction (which is defined to be "model" dependent ... and so has the facade of a virtual machine model diagnose).
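The overhead numbers above work out as follows. A minimal sketch: the three timings are from the post, while the tuned elapsed figure assumes the guest's own 322secs was unchanged by the CP67 rewrites, which the post does not state.

```python
# CP67 overhead for the OS360 test job stream, using the timings quoted in the post.

BARE = 322              # secs on the bare machine
VIRTUAL_INITIAL = 856   # secs in a CP67 virtual machine, before the rewrites
CP67_TUNED = 113        # secs of CP67 CPU after the rewrites

cp67_initial = VIRTUAL_INITIAL - BARE             # 534 secs of CP67 CPU
virtual_tuned = BARE + CP67_TUNED                 # assumes guest time unchanged
overhead_reduction = 1 - CP67_TUNED / cp67_initial

print(f"initial CP67 CPU: {cp67_initial}s")
print(f"tuned virtual run (inferred): {virtual_tuned}s")
print(f"CP67 CPU reduced by about {overhead_reduction:.0%}")
print(f"slowdown vs bare machine: {VIRTUAL_INITIAL / BARE:.2f}x -> {virtual_tuned / BARE:.2f}x")
```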

cambridge science center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

some posts mentioning computer work as undergraduate

https://www.garlic.com/~lynn/2024d.html#111 GNOME bans Manjaro Core Team Member for uttering "Lunduke"

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024d.html#36 This New Internet Thing, Chapter 8

https://www.garlic.com/~lynn/2024b.html#114 EBCDIC

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2023f.html#83 360 CARD IPL

https://www.garlic.com/~lynn/2023f.html#34 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2022e.html#95 Enhanced Production Operating Systems II

https://www.garlic.com/~lynn/2022d.html#57 CMS OS/360 Simulation

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: The joy of FORTRAN Newsgroups: alt.folklore.computers, comp.os.linux.misc Date: Tue, 01 Oct 2024 09:29:37 -1000

rbowman <bowman@montana.com> writes:

somewhat like IBM's failed "Future System" effort (replacing all 370s)

http://www.jfsowa.com/computer/memo125.htm

https://people.computing.clemson.edu/~mark/fs.html

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

except FS was enormous amounts of microcode. The i432 group gave a talk at an early 80s ACM SIGOPS at Asilomar ... one of their issues was that really complex stuff was in silicon and nearly every fix required new silicon.

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

some posts mentioning i432

https://www.garlic.com/~lynn/2023f.html#114 Copyright Software

https://www.garlic.com/~lynn/2023e.html#22 Copyright Software

https://www.garlic.com/~lynn/2021k.html#38 IBM Boeblingen

https://www.garlic.com/~lynn/2021h.html#91 IBM XT/370

https://www.garlic.com/~lynn/2019e.html#27 PC Market

https://www.garlic.com/~lynn/2019c.html#33 IBM Future System

https://www.garlic.com/~lynn/2018f.html#52 All programmers that developed in machine code and Assembly in the 1940s, 1950s and 1960s died?

https://www.garlic.com/~lynn/2018e.html#95 The (broken) economics of OSS

https://www.garlic.com/~lynn/2017j.html#99 OS-9

https://www.garlic.com/~lynn/2017j.html#98 OS-9

https://www.garlic.com/~lynn/2017g.html#28 Eliminating the systems programmer was Re: IBM cuts contractor bil ling by 15 percent (our else)

https://www.garlic.com/~lynn/2017e.html#61 Typesetting

https://www.garlic.com/~lynn/2016f.html#38 British socialism / anti-trust

https://www.garlic.com/~lynn/2016e.html#115 IBM History

https://www.garlic.com/~lynn/2016d.html#63 PL/I advertising

https://www.garlic.com/~lynn/2016d.html#62 PL/I advertising

https://www.garlic.com/~lynn/2014m.html#107 IBM 360/85 vs. 370/165

https://www.garlic.com/~lynn/2014k.html#23 1950: Northrop's Digital Differential Analyzer

https://www.garlic.com/~lynn/2014c.html#75 Bloat

https://www.garlic.com/~lynn/2013f.html#33 Delay between idea and implementation

https://www.garlic.com/~lynn/2012n.html#40 history of Programming language and CPU in relation to each other

https://www.garlic.com/~lynn/2012k.html#57 1132 printer history

https://www.garlic.com/~lynn/2012k.html#14 International Business Marionette

https://www.garlic.com/~lynn/2011l.html#42 i432 on Bitsavers?

https://www.garlic.com/~lynn/2011l.html#15 Selectric Typewriter--50th Anniversary

https://www.garlic.com/~lynn/2011l.html#2 68000 assembly language programming

https://www.garlic.com/~lynn/2011k.html#79 Selectric Typewriter--50th Anniversary

https://www.garlic.com/~lynn/2011c.html#91 If IBM Hadn't Bet the Company

https://www.garlic.com/~lynn/2011c.html#7 RISCversus CISC

https://www.garlic.com/~lynn/2010j.html#22 Personal use z/OS machines was Re: Multiprise 3k for personal Use?

https://www.garlic.com/~lynn/2010h.html#40 Faster image rotation

https://www.garlic.com/~lynn/2010h.html#8 Far and near pointers on the 80286 and later

https://www.garlic.com/~lynn/2010g.html#45 IA64

https://www.garlic.com/~lynn/2010g.html#1 IA64

https://www.garlic.com/~lynn/2009q.html#74 Now is time for banks to replace core system according to Accenture

https://www.garlic.com/~lynn/2009o.html#46 U.S. begins inquiry of IBM in mainframe market

https://www.garlic.com/~lynn/2009o.html#18 Microprocessors with Definable MIcrocode

https://www.garlic.com/~lynn/2009o.html#13 Microprocessors with Definable MIcrocode

https://www.garlic.com/~lynn/2009d.html#52 Lack of bit field instructions in x86 instruction set because of patents ?

https://www.garlic.com/~lynn/2008k.html#22 CLIs and GUIs

https://www.garlic.com/~lynn/2008e.html#32 CPU time differences for the same job

https://www.garlic.com/~lynn/2008d.html#54 Throwaway cores

https://www.garlic.com/~lynn/2007s.html#36 Oracle Introduces Oracle VM As It Leaps Into Virtualization

https://www.garlic.com/~lynn/2006t.html#7 32 or even 64 registers for x86-64?

https://www.garlic.com/~lynn/2006p.html#15 "25th Anniversary of the Personal Computer"

https://www.garlic.com/~lynn/2006n.html#44 Any resources on VLIW?

https://www.garlic.com/~lynn/2006n.html#42 Why is zSeries so CPU poor?

https://www.garlic.com/~lynn/2006c.html#47 IBM 610 workstation computer

https://www.garlic.com/~lynn/2005q.html#31 Intel strikes back with a parallel x86 design

https://www.garlic.com/~lynn/2005k.html#46 Performance and Capacity Planning

https://www.garlic.com/~lynn/2005d.html#64 Misuse of word "microcode"

https://www.garlic.com/~lynn/2004q.html#73 Athlon cache question

https://www.garlic.com/~lynn/2004q.html#64 Will multicore CPUs have identical cores?

https://www.garlic.com/~lynn/2004q.html#60 Will multicore CPUs have identical cores?

https://www.garlic.com/~lynn/2004e.html#52 Infiniband - practicalities for small clusters

https://www.garlic.com/~lynn/2003e.html#54 Reviving Multics

https://www.garlic.com/~lynn/2002o.html#5 Anyone here ever use the iAPX432 ?

https://www.garlic.com/~lynn/2002l.html#19 Computer Architectures

https://www.garlic.com/~lynn/2002d.html#46 IBM Mainframe at home

https://www.garlic.com/~lynn/2002d.html#27 iAPX432 today?

https://www.garlic.com/~lynn/2000f.html#48 Famous Machines and Software that didn't

https://www.garlic.com/~lynn/2000e.html#6 Ridiculous

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: The joy of FORTRAN Newsgroups: alt.folklore.computers, comp.os.linux.misc Date: Tue, 01 Oct 2024 13:30:58 -1000

Lynn Wheeler <lynn@garlic.com> writes:

I continued to work on 360/370 all during FS, even periodically ridiculing what they were doing (which wasn't exactly career enhancing) ... during FS, 370 stuff was being killed off and claims lack of new 370 during FS gave the clone 370 system makers their market foothold. when FS finally imploded (one of the final nails was analysis that if 370/195 applications were redone for FS machine made out of the fastest hardware available, they would have throughput of 370/145 ... about 30times slowdown) there was mad rush to get stuff back into the 370 product pipelines, including kicking off the Q&D 3033&3081 efforts in parallel.

I have periodically claimed that John did 801/RISC to go the extreme opposite of Future System (mid-70s there was internal adtech conference where we presented 370 16-cpu multiprocessor and the 801/RISC group presented RISC).

I got dragged into helping with a 370 16-cpu multiprocessor and we con the 3033 processor engineers into working on it in their spare time (a lot more interesting than remapping 370/168 logic to 20% faster chips). Everybody thought it was great until somebody tells the head of POK that it could be decades before the POK favorite son operating system ("MVS") had effective 16-cpu support (at the time MVS docs had 2-cpu system support with only 1.2-1.5 times the throughput of a 1-cpu system; I had a number of 2-cpu systems that had twice the throughput of a single-cpu system). The head of POK then invites some of us to never visit POK again ... and tells the 3033 processor engineers to keep their heads down with no distractions. Note: POK doesn't ship a 16-cpu system until after the turn of the century (more than two decades later).

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

SMP, tightly-coupled, multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: The joy of RISC Newsgroups: alt.folklore.computers, comp.os.linux.misc Date: Tue, 01 Oct 2024 15:39:23 -1000

Lawrence D'Oliveiro <ldo@nz.invalid> writes:

ROMP was originally targeted to be DISPLAYWRITER follow-on, written in PL.8 and running cp.r ... when that was canceled (market moving to personal computing), they decided to pivot to the UNIX workstation market and got the company that had done AT&T Unix port to IBM/PC for PC/IX ... to do one for ROMP ... some claim they had 200 PL.8 programmers and decided to use them to implement a ROMP abstract virtual machine and tell the company doing AIX that it would be much faster and easier to do it to VM, than to the bare hardware.

However, there was the IBM Palo Alto group doing UCB BSD port to 370 that got redirected to do BSD port to the (bare hardware) ROMP instead (in much less time and resources than either the abstract virtual machine or the AIX effort) as "AOS".

Late 80s, my wife and I got the HA/6000 project, originally for the NYTimes to move their newspaper system (ATEX) off VAXCluster to RS/6000. I rename it HA/CMP when I start doing scientific/technical cluster scale-up with national labs and commercial cluster scale-up with RDBMS vendors (Oracle, Sybase, Informix, Ingres that had VAXCluster support in same source base with UNIX). Then the executive we reported to went over to head up Somerset (AIM; apple, ibm, motorola; single-chip RISC)

Early Jan92 in meeting with Oracle CEO, AWD/Hester tells Ellison we would have 16-system clusters by mid92 and 128-system clusters by ye92, however by end jan92, cluster scale-up was transferred for announce as IBM supercomputer (for technical/scientific *ONLY*) and we were told we couldn't work on anything with more than four systems (we leave IBM a few months later).

There had been complaints by commercial mainframe possibly contributing

to the decision:

1993: eight processor ES/9000-982 : 408MIPS, 51MIPS/processor

1993: RS6000/990 : 126MIPS; 16-system: 2BIPS/2,016MIPS, 128-system: 16BIPS/16,128MIPS
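The aggregate figures above are straight multiplication, as this short Python sketch shows. It assumes perfectly linear cluster scaling, which is the best case and not something a real cluster workload would see; the variable names are made up, the MIPS figures are from the post.

```python
# Aggregate MIPS comparison using the 1993 figures quoted in the post.
# Linear scaling across cluster members is an idealizing assumption.

ES9000_982_MIPS = 408    # eight-processor mainframe
ES9000_982_CPUS = 8
RS6000_990_MIPS = 126    # single system

mips_per_processor = ES9000_982_MIPS / ES9000_982_CPUS
cluster_mips = {n: n * RS6000_990_MIPS for n in (16, 128)}
vs_mainframe = {n: mips / ES9000_982_MIPS for n, mips in cluster_mips.items()}

print(f"ES/9000-982: {mips_per_processor:.0f} MIPS/processor")
for n, mips in cluster_mips.items():
    print(f"{n}-system RS6000/990 cluster: {mips:,} MIPS, "
          f"about {vs_mainframe[n]:.1f}x the ES/9000-982")
```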

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: CSC Virtual Machine Work Date: 02 Oct, 2024 Blog: Facebook

re:

Trivia: A little over a decade ago, I was asked to track down the decision to add virtual memory to all 370s and found the staff member to the executive making the decision. Basically, MVT storage management was so bad that regions had to be specified four times larger than used, so a typical 1mbyte 370/165 would only run four regions concurrently, insufficient to keep the 165 busy (and justified). Going to MVT in a 16mbyte virtual address space (VS2/SVS) allowed the number of regions to be increased by four times (capped at 15 because of 4bit storage protect keys) with little or no paging (sort of like running MVT in a CP67 16mbyte virtual machine). Some of the email exchange tracking down virtual memory for all 370s:

https://www.garlic.com/~lynn/2011d.html#73 Multiple Virtual Memory
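The region count works out as below. A small sketch under one stated assumption: that the cap of 15 comes from one of the sixteen 4-bit storage protect key values (key 0) being reserved for the system, which is my reading and is not spelled out in the post. Names are made up for illustration.

```python
# Why a 4x increase in regions ends up capped at 15.
# Assumption: key 0 of the sixteen 4-bit storage keys is reserved for the system.

REGIONS_ON_REAL_1MB = 4     # typical 1mbyte 370/165 under MVT
INCREASE_FACTOR = 4         # regions were specified 4x larger than actually used
STORAGE_KEY_BITS = 4

key_values = 2 ** STORAGE_KEY_BITS        # 16 possible keys
usable_region_keys = key_values - 1       # 15, with key 0 set aside (assumed)

uncapped = REGIONS_ON_REAL_1MB * INCREASE_FACTOR
concurrent_regions = min(uncapped, usable_region_keys)

print(f"uncapped: {uncapped}, usable keys: {usable_region_keys}, "
      f"concurrent regions: {concurrent_regions}")
```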

We were visiting POK a lot ... part was trying to get CAS justified in 370 architecture, but I was also hammering the MVT performance group about their proposed page replacement algorithm (eventually they claimed that it didn't make any difference because paging rate would be 5/sec or less). I would also drop in offshift on Ludlow, who was implementing the VS2/SVS prototype on a 360/67. It involved a little bit of code to build the 16mbyte virtual address table, enter/exit virtual address mode, and some simple paging. The biggest effort was EXCP/SVC0, where channel programs built in application space were passed to the supervisor for execution. Since they had virtual addresses, EXCP/SVC0 faced the same problem as CP67: making a copy of the channel program, replacing virtual addresses with real ... and he borrows a copy of CP67 CCWTRAN for the implementation.
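The channel program problem described above (copy the program, replace virtual addresses with real) can be sketched in Python. This is a simplified illustration, not CP67 CCWTRAN: the `CCW` tuple, the dict-based `page_table`, and the choice to split page-crossing transfers into data-chained pieces are all assumptions for the example, and faulting in and pinning pages for the duration of the I/O is omitted.

```python
# Simplified sketch of channel program translation: copy the CCWs and replace
# virtual data addresses with real ones. Not the actual CCWTRAN logic.
from collections import namedtuple

PAGE = 4096
CD = 0x80  # chain-data flag bit (the next CCW continues the same transfer)

# Hypothetical simplified CCW: command code, data address, byte count, flags.
CCW = namedtuple("CCW", "cmd addr count flags")


def translate(virtual_ccws, page_table):
    """Return a new channel program with real addresses.

    page_table maps virtual page number -> real page frame number.
    A buffer that is contiguous in virtual memory may land in scattered real
    frames, so a transfer crossing a page boundary is split into pieces that
    are data-chained together. The caller's program is left untouched.
    """
    real_ccws = []
    for ccw in virtual_ccws:
        addr, remaining = ccw.addr, ccw.count
        while remaining > 0:
            vpage, offset = divmod(addr, PAGE)
            chunk = min(remaining, PAGE - offset)
            real_addr = page_table[vpage] * PAGE + offset
            remaining -= chunk
            addr += chunk
            # every piece except the last chains data to the next piece
            flags = ccw.flags | CD if remaining > 0 else ccw.flags
            real_ccws.append(CCW(ccw.cmd, real_addr, chunk, flags))
    return real_ccws


# Usage: a 200-byte read starting 96 bytes before the end of virtual page 2.
page_table = {2: 7, 3: 1}                        # virtual page -> real frame
program = [CCW(0x02, 2 * PAGE + 4000, 200, 0)]
shadow = translate(program, page_table)
for c in shadow:
    print(c)
```

The point of making a copy is that the channel only understands real addresses, while the application's original program, with its virtual addresses, must stay as the application built it.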

Near the end of the 70s, somebody in POK gets an award for fixing the MVS page replacement algorithm (that I complained about in the early 70s, aka by late 70s, the paging rate had significantly increased and it had started to make a difference).

SMP, tightly-coupled, multiprocessor (and/or compare&swap) posts

https://www.garlic.com/~lynn/subtopic.html#smp

posts mentioning Ludlow, EXCP, CP67 CCWTRAN

https://www.garlic.com/~lynn/2024e.html#24 Public Facebook Mainframe Group

https://www.garlic.com/~lynn/2024d.html#24 ARM is sort of channeling the IBM 360

https://www.garlic.com/~lynn/2024.html#27 HASP, ASP, JES2, JES3

https://www.garlic.com/~lynn/2023g.html#77 MVT, MVS, MVS/XA & Posix support

https://www.garlic.com/~lynn/2023f.html#69 Vintage TSS/360

https://www.garlic.com/~lynn/2023f.html#47 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023f.html#40 Rise and Fall of IBM

https://www.garlic.com/~lynn/2023f.html#26 Ferranti Atlas

https://www.garlic.com/~lynn/2023e.html#43 IBM 360/65 & 360/67 Multiprocessors

https://www.garlic.com/~lynn/2023e.html#15 Copyright Software

https://www.garlic.com/~lynn/2023e.html#4 HASP, JES, MVT, 370 Virtual Memory, VS2

https://www.garlic.com/~lynn/2023b.html#103 2023 IBM Poughkeepsie, NY

https://www.garlic.com/~lynn/2022h.html#93 IBM 360

https://www.garlic.com/~lynn/2022h.html#22 370 virtual memory

https://www.garlic.com/~lynn/2022f.html#41 MVS

https://www.garlic.com/~lynn/2022f.html#7 Vintage Computing

https://www.garlic.com/~lynn/2022e.html#91 Enhanced Production Operating Systems

https://www.garlic.com/~lynn/2022d.html#55 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022.html#70 165/168/3033 & 370 virtual memory

https://www.garlic.com/~lynn/2022.html#58 Computer Security

https://www.garlic.com/~lynn/2022.html#10 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2021h.html#48 Dynamic Adaptive Resource Management

https://www.garlic.com/~lynn/2021g.html#6 IBM 370

https://www.garlic.com/~lynn/2021b.html#63 Early Computer Use

https://www.garlic.com/~lynn/2021b.html#59 370 Virtual Memory

https://www.garlic.com/~lynn/2019.html#18 IBM assembler

https://www.garlic.com/~lynn/2016.html#78 Mainframe Virtual Memory

https://www.garlic.com/~lynn/2014d.html#54 Difference between MVS and z / OS systems

https://www.garlic.com/~lynn/2013l.html#18 A Brief History of Cloud Computing

https://www.garlic.com/~lynn/2013i.html#47 Making mainframe technology hip again

https://www.garlic.com/~lynn/2013.html#22 Is Microsoft becoming folklore?

https://www.garlic.com/~lynn/2012l.html#73 PDP-10 system calls, was 1132 printer history

https://www.garlic.com/~lynn/2012i.html#55 Operating System, what is it?

https://www.garlic.com/~lynn/2011o.html#92 Question regarding PSW correction after translation exceptions on old IBM hardware

https://www.garlic.com/~lynn/2011d.html#72 Multiple Virtual Memory

https://www.garlic.com/~lynn/2011.html#90 Two terrific writers .. are going to write a book

https://www.garlic.com/~lynn/2007f.html#6 IBM S/360 series operating systems history

https://www.garlic.com/~lynn/2005s.html#25 MVCIN instruction

https://www.garlic.com/~lynn/2004e.html#40 Infiniband - practicalities for small clusters

https://www.garlic.com/~lynn/2002p.html#51 Linux paging

https://www.garlic.com/~lynn/2002p.html#49 Linux paging

https://www.garlic.com/~lynn/2000c.html#34 What level of computer is needed for a computer to Love?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM 360/30, 360/65, 360/67 Work Date: 03 Oct, 2024 Blog: Facebook

I take a two credit hr intro to fortran/computers. Univ was getting 360/67 for tss/360 to replace 709/1401; temporarily, pending availability of 360/67, the 1401 was replaced with 360/30, and at the end of the intro class I was hired to rewrite 1401 MPIO (unit record front-end for 709) in 360 assembler for 360/30 (OS360/PCP). The univ shut down the datacenter on weekends and I would have the place dedicated (although 48hrs w/o sleep made monday classes hard). I was given a lot of software&hardware manuals and got to design and implement my own monitor, device drivers, interrupt handlers, error recovery, storage management, etc ... and within a few weeks had a 2000 card assembler program ... ran stand-alone, loaded with the BPS card loader. I then add an assembler option and OS/360 system services I/O. The stand-alone version took 30mins to assemble, the OS/360 version took an hour to assemble (each DCB macro taking over five minutes to assemble). Later somebody claimed that the people implementing the assembler were told they only had 256bytes to implement op-code lookup ... so enormous amount of disk I/O.

trivia: periodically I would come in Sat. morning and find production had finished early, the machine room was dark and everything was turned off. I would try to power on the 360/30 and it wouldn't complete. After lots of poring over documents and trial and error, I found I could put all controllers in CE-mode, power on the 360/30, power on individual controllers and then return each controller to normal mode.

Within a year of taking intro class, the 360/67 shows up and I was hired fulltime responsible for OS/360 (TSS/360 hadn't come to production fruition) ... and I continued to have my dedicated weekend 48hrs (and monday classes still difficult). My first sysgen was MFT9.5. Note student fortran jobs took under a second on 709 (tape->tape), but over a minute with OS/360. I install HASP, cutting the time in half. I then start redoing stage2 sysgen, carefully placing datasets and PDS members to optimize arm seek and multi-track search, cutting another 2/3rds to 12.9secs. Student Fortran never got better than 709 until I install Univ. of Waterloo Watfor.
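
Working backwards from the 12.9sec figure gives a rough idea of the earlier elapsed times. The post only gives "over a minute", "in half" and "another 2/3rds", so the intermediate numbers below are inferred from those fractions rather than measured values, and the variable names are just for illustration.

```python
# Back-computing the student fortran job times from the fractions above.
# Only the 12.9sec result is given in the post; the other two values are
# inferred, treating "in half" and "another 2/3rds" as exact fractions.

after_sysgen = 12.9                        # secs, after redoing stage2 sysgen
before_sysgen = after_sysgen / (1 - 2 / 3) # undo the "another 2/3rds" cut
before_hasp = before_sysgen / (1 - 1 / 2)  # undo HASP "cutting the time in half"

print(f"with HASP only:  about {before_sysgen:.1f} secs")
print(f"before HASP:     about {before_hasp:.1f} secs")
print(f"overall speedup: about {before_hasp / after_sysgen:.0f}x")
```

That works out to roughly 38.7secs with HASP alone and roughly 77.4secs originally, consistent with "over a minute", for about a sixfold improvement overall.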

Before I graduate, I'm hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing in an independent business unit). I think the Renton datacenter was possibly the largest in the world (couple hundred million in 360 systems), 360/65s arriving faster than they could be installed, boxes constantly staged in the hallways around the machine room. Renton did have one 360/75; there was black rope around the area perimeter and guards when it was running classified jobs (and heavy black velvet draped over console lights and 1403 printers). Lots of politics between the Renton director and the CFO, who only had a 360/30 up at Boeing Field for payroll (although they enlarge the machine room and install a 360/67 for me to play with when I wasn't doing other stuff). When I graduate, I join IBM (instead of staying with Boeing CFO).

Boeing Huntsville had a two-processor 360/67 SMP (and several 2250 displays for CAD/CAM) that was brought up to Seattle. It had been acquired (also) for TSS/360 but ran as two systems with MVT. They had also run into the MVT storage management problem (that later resulted in the decision to add virtual memory to all 370s). Boeing Huntsville had modified MVTR13 to run in virtual memory mode (but no paging), using virtual memory to partially compensate for the MVT storage management problems. A little over a decade ago, I was asked to track down the 370 virtual memory decision and found a staff member to the executive making the decision. Basically MVT storage management was so bad that region sizes had to be specified four times larger than used, limiting a typical 1mbyte 370/165 to four concurrently running regions, insufficient to keep the 165 busy and justified. They found that by moving MVT to a 16mbyte virtual address space (VS2/SVS, similar to running MVT in a CP67 16mbyte virtual machine), they could increase the number of concurrent regions by a factor of four (capped at 15 because of the 4bit storage protect key) with little or no paging.

trivia: in the early 80s, I was introduced to John Boyd and would sponsor his briefings at IBM.

https://www.linkedin.com/pulse/john-boyd-ibm-wild-ducks-lynn-wheeler/

One of his stories was about being very vocal that the electronics across the trail wouldn't work. Then (possibly as punishment) he is put in command of "spook base" (about the same time I'm at Boeing). His biography says "spook base" was a $2.5B "windfall" for IBM (ten times Renton) ... some ref:

https://web.archive.org/web/20030212092342/http://home.att.net/~c.jeppeson/igloo_white.html

https://en.wikipedia.org/wiki/Operation_Igloo_White

Before TSS/360 was decommitted, there was a claim that there were 1200 people on TSS at a time when there were 12 people (including secretary) in the Cambridge Science Center group on CP67/CMS. CSC had wanted a 360/50 to hardware modify with virtual memory support, but all the spare 50s were going to the FAA ATC project, and so had to settle for a 360/40. CP40/CMS morphs into CP67/CMS when the 360/67, standard with virtual memory, becomes available. Some CSC people come out to the univ to install CP67/CMS (3rd installation after Cambridge itself and MIT Lincoln Labs) and I mostly get to play with it in my dedicated weekend time. Initially I rewrite a lot of CP67 to improve overhead running OS/360 in a virtual machine. My OS/360 test jobstream ran 322 seconds on real hardware and initially 856secs virtually (534secs CP67 CPU). After a few months I managed to get it down to 435secs (CP67 CPU 113secs).
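
The overhead figures quoted above are consistent with each other, which a few lines of Python can check. The function and variable names are only for illustration; the three elapsed times are the ones given in the paragraph, and the CP67 CPU figure is taken to be the virtual elapsed time minus the real-hardware time.

```python
# Checking the CP67 overhead numbers quoted above.
# Assumption: CP67 CPU overhead = virtual elapsed time - real elapsed time.

REAL_SECS = 322            # OS/360 test jobstream on real hardware


def cp67_overhead(virtual_secs, real_secs=REAL_SECS):
    """CPU seconds added by running the jobstream in a virtual machine."""
    return virtual_secs - real_secs


initial = cp67_overhead(856)    # before the CP67 rewrites
improved = cp67_overhead(435)   # after a few months of rewrites

reduction = 1 - improved / initial
print(f"overhead: {initial} -> {improved} secs "
      f"({reduction:.0%} reduction)")
print(f"slowdown vs real: {856 / REAL_SECS:.2f}x -> {435 / REAL_SECS:.2f}x")
```

The differences come out to exactly the 534secs and 113secs in the text, a reduction of a little under 80 percent in CP67 CPU time.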

I then redo dispatching/scheduling (dynamic adaptive resource management), page replacement, thrashing controls, ordered disk arm seek, 2301/drum multi-page rotational ordered transfers (from 70-80 4k/sec to 270/sec peak), bunch of other stuff ... for CMS interactive computing. Then to further cut CMS CP67 CPU overhead I do a special CCW. Bob Adair criticizes it because it violates 360 architecture ... and it has to be redone as DIAGNOSE instruction (which is defined to be "model" dependent ... and so have facade of virtual machine model diagnose).

Boyd postings and URL refs

https://www.garlic.com/~lynn/subboyd.html

Cambridge Scientific Center posts

https://www.garlic.com/~lynn/subtopic.html#545tech

some recent posts mentioning 709/1401, MPIO, 360/30, 360/67, CP67/CMS, Boeing CFO, Renton datacenter

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2023f.html#83 360 CARD IPL

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Byte ordering Newsgroups: comp.arch Date: Thu, 03 Oct 2024 15:33:54 -1000

Lawrence D'Oliveiro <ldo@nz.invalid> writes:

... note that RS/6000 didn't have a design that supported cache consistency, shared-memory multiprocessing ... (one of the reasons ha/cmp had to resort to cluster operation for scale-up)

https://en.wikipedia.org/wiki/PowerPC_600#PowerPC_620

https://wiki.preterhuman.net/The_Somerset_Design_Center

the executive we reported to when we were doing HA/CMP

https://en.wikipedia.org/wiki/IBM_High_Availability_Cluster_Multiprocessing

went over to head up Somerset

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp