From: Lynn Wheeler <lynn@garlic.com>
Subject: Mainframe and Cloud
Date: 06 Oct, 2025
Blog: Facebook

... note online/cloud tends to have capacity much greater than avg use ... to meet peak on-demand use, which could be an order of magnitude greater. Cloud operators had heavily optimized server blade system costs (including assembling their own systems for a fraction of the cost of brand name servers) ... and power consumption was increasingly becoming a major expense. There was then increasing pressure on makers of server components to optimize power use as well as allowing power use to drop to zero when idle ... but instant on to meet on-demand requirements.

large cloud operation can have a score (or more) of megadatacenters around the world, each with half million or more server blades, and each server blade with ten times processing of max. configured mainframe .... and enormous automation; a megadatacenter with 70-80 staff (upwards of 10,000 or more systems per staff). In the past there were articles about being able to use a credit card to spin up, on-demand for a couple of hrs, a cloud ("cluster") supercomputer (that ranked in the top 40 in the world).

megadatacenter posts

https://www.garlic.com/~lynn/submisc.html#megadatacenter

GML was invented at the IBM Cambridge Science Center in 1969 (about the same time that CICS product appeared) .... after decade morphs into ISO standard SGML and after another decade morphs into HTML at CERN.

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

GML, SGML, HTML posts

https://www.garlic.com/~lynn/submain.html#sgml

CICS/BDAM posts

https://www.garlic.com/~lynn/submain.html#bdam

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: Mainframe skills
Date: 06 Oct, 2025
Blog: Facebook

In CP67->VM370 (after decision to add virtual memory to all 370s), lots of stuff was simplified and/or dropped (including multiprocessor support).

Then in 1974 with a VM370R2, I start adding a bunch of stuff back in for my internal CSC/VM (including kernel re-org for multiprocessor, but not the actual SMP support). Then with a VM370R3, I add SMP back in, originally for (online sales&marketing support) US HONE so they could upgrade all their 168s to 2-CPU 168s (with a little sleight of hand getting twice the throughput).

Then with the implosion of Future System

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

https://people.computing.clemson.edu/~mark/fs.html

http://www.jfsowa.com/computer/memo125.htm

I get asked to help with a 16-CPU 370, and we con the 3033 processor engineers into helping in their spare time (a lot more interesting than remapping 168 logic to 20% faster chips). Everybody thought it was great until somebody tells the head of POK that it could be decades before POK favorite son operating system ("MVS") had (effective) 16-CPU support (MVS docs at the time saying 2-CPU systems had 1.2-1.5 times throughput of single CPU; POK doesn't ship 16-CPU system until after turn of century). Then head of POK invites some of us to never visit POK again and directs 3033 processor engineers heads down and no distractions.

2nd half of 70s transferring to SJR on west coast, I worked with Jim Gray and Vera Watson on original SQL/relational, System/R (done on VM370); we were able to tech transfer ("under the radar" while corporation was pre-occupied with "EAGLE") to Endicott for SQL/DS. Then when "EAGLE" implodes, there was request for how fast could System/R be ported to MVS ... which was eventually released as DB2, originally for decision-support *only*.

I also got to wander around IBM (and non-IBM) datacenters in silicon valley, including DISK bldg14 (engineering) and bldg15 (product test) across the street. They were running pre-scheduled, 7x24, stand-alone testing and had mentioned recently trying MVS, but it had 15min MTBF (requiring manual re-ipl) in that environment. I offer to redo I/O system to make it bullet proof and never fail, allowing any amount of on-demand testing, greatly improving productivity. Bldg15 then gets 1st engineering 3033 outside POK processor engineering ... and since testing only took a percent or two of CPU, we scrounge up a 3830 controller and 3330 string to set up our own private online service. Then bldg15 also gets engineering 4341 in 1978 and somehow the branch hears about it and in Jan1979 I'm con'ed into doing a 4341 benchmark for a national lab that was looking at getting 70 for a compute farm (leading edge of the coming cluster supercomputing tsunami).

Decade later in 1988, got approval for HA/6000 originally for NYTimes to port their newspaper system (ATEX) from DEC VAXCluster to RS/6000. I rename it HA/CMP

https://en.wikipedia.org/wiki/IBM_High_Availability_Cluster_Multiprocessing

when start doing technical/scientific cluster scale-up with national labs (LANL, LLNL, NCAR, etc) and commercial cluster scale-up with RDBMS vendors (Oracle, Ingres, Sybase, Informix that have DEC VAXCluster support in same source base with UNIX). IBM S/88 Product Administrator was also taking us around to their customers and also had me write a section for corporate continuous availability strategy document (it gets pulled when both Rochester/AS400 and POK/mainframe complain).

Early Jan92 meeting with Oracle CEO, AWD executive Hester tells Ellison that we would have 16-system clusters by mid92 and 128-system clusters by ye92. Mid-jan92 convince FSD to bid HA/CMP for gov. supercomputers. Late-jan92, cluster scale-up is transferred for announce as IBM Supercomputer (for technical/scientific *only*) and we were told we couldn't work on clusters with more than four systems (we leave IBM a few months later).

Some speculation that it would eat the mainframe in the commercial market. 1993 benchmarks (number of program iterations compared to the industry MIPS reference platform):

• ES/9000-982 : 8CPU 408MIPS, 51MIPS/CPU

• RS6000/990 : 126MIPS, 16-systems: 2BIPS, 128-systems: 16BIPS
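The cluster figures above follow from multiplying the single-system number by the system count. A minimal sketch of that arithmetic, assuming perfectly linear scale-up (an idealization that real clusters only approximate); the function and constant names are made up for illustration:

```python
RS6000_990_MIPS = 126   # single-system figure quoted above
ES9000_982_MIPS = 408   # 8-CPU total quoted above
ES9000_982_CPUS = 8


def cluster_mips(systems, per_system=RS6000_990_MIPS):
    """Aggregate MIPS assuming linear scale-up across systems (idealized)."""
    return systems * per_system


def per_cpu_mips(total=ES9000_982_MIPS, cpus=ES9000_982_CPUS):
    """Average MIPS per CPU for a multiprocessor total."""
    return total / cpus


if __name__ == "__main__":
    print("ES/9000-982 per CPU:", per_cpu_mips())
    for n in (16, 128):
        total = cluster_mips(n)
        ratio = total / ES9000_982_MIPS
        print(f"{n}-system cluster: {total} MIPS, {ratio:.1f}x ES/9000-982")
```

16 x 126 is 2,016 MIPS (the "2BIPS" above) and 128 x 126 is 16,128 MIPS (the "16BIPS").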

Former executive we had reported to, goes over to head up Somerset/AIM (Apple, IBM, Motorola), single chip RISC with M88k bus/cache (enabling clusters of shared memory multiprocessors)

i86 chip makers then do hardware layer that translate i86 instructions into RISC micro-ops for actual execution (largely negating throughput difference between RISC and i86); 1999 industry benchmark:

• IBM PowerPC 440: 1,000MIPS

• Pentium3: 2,054MIPS (twice PowerPC 440)

early numbers actual industry benchmarks, later used IBM pubs giving percent change since previous:

z900, 16 processors 2.5BIPS (156MIPS/core), Dec2000

z990, 32 cores, 9BIPS, (281MIPS/core), 2003

z9, 54 cores, 18BIPS (333MIPS/core), July2005

z10, 64 cores, 30BIPS (469MIPS/core), Feb2008

z196, 80 cores, 50BIPS (625MIPS/core), Jul2010

EC12, 101 cores, 75BIPS (743MIPS/core), Aug2012

z13, 140 cores, 100BIPS (710MIPS/core), Jan2015

z14, 170 cores, 150BIPS (862MIPS/core), Aug2017

z15, 190 cores, 190BIPS (1000MIPS/core), Sep2019

z16, 200 cores, 222BIPS (1111MIPS/core), Sep2022

z17, 208 cores, 260BIPS (1250MIPS/core), Jun2025
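The per-core figures in the list can be rechecked by dividing total BIPS by core count. A small sketch of that check (names are illustrative, the tuples are copied from the list above). A few recomputed values differ slightly from the quoted ones (z13, z14, z16), possibly because the BIPS totals are themselves rounded:

```python
# (model, cores, total BIPS, quoted MIPS/core) copied from the list above
SYSTEMS = [
    ("z900", 16, 2.5, 156),
    ("z990", 32, 9, 281),
    ("z9", 54, 18, 333),
    ("z10", 64, 30, 469),
    ("z196", 80, 50, 625),
    ("EC12", 101, 75, 743),
    ("z13", 140, 100, 710),
    ("z14", 170, 150, 862),
    ("z15", 190, 190, 1000),
    ("z16", 200, 222, 1111),
    ("z17", 208, 260, 1250),
]


def mips_per_core(cores, bips):
    """Convert a total BIPS rating into average MIPS per core."""
    return bips * 1000 / cores


def report():
    """Return (model, recomputed, quoted, difference) for every entry."""
    rows = []
    for model, cores, bips, quoted in SYSTEMS:
        computed = round(mips_per_core(cores, bips))
        rows.append((model, computed, quoted, computed - quoted))
    return rows


if __name__ == "__main__":
    for model, computed, quoted, delta in report():
        print(f"{model:5} computed {computed:5} quoted {quoted:5} delta {delta:+}")
```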

Also in 1988, the branch office asks if I could help LLNL (national lab) standardize some serial stuff they were working with, which quickly becomes fibre-channel standard, "FCS" (not First Customer Ship), initially 1gbit/sec transfer, full-duplex, aggregate 200mbyte/sec (including some stuff I had done in 1980). Then POK gets some of their serial stuff released with ES/9000 as ESCON (when it was already obsolete, initially 10mbytes/sec, later increased to 17mbytes/sec). Then some POK engineers become involved with FCS and define a heavy-weight protocol that drastically cuts throughput (eventually released as FICON).

2010, a z196 "Peak I/O" benchmark released, getting 2M IOPS using 104 FICON (20K IOPS/FICON). About the same time a FCS is announced for E5-2600 server blade claiming over million IOPS (two such FCS having higher throughput than 104 FICON). Also IBM pubs recommend that SAPs (system assist processors that actually do I/O) be kept to 70% CPU (or 1.5M IOPS) and no new CKD DASD has been made for decades, all being simulated on industry standard fixed-block devices. Note: 2010 E5-2600 server blade (16 cores, 31BIPS/core) benchmarked at 500BIPS (ten times max configured Z196).
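The comparison above is simple division. A rough sketch using only the figures quoted in the paragraph; the names are illustrative, and "over million IOPS" is treated as a floor of exactly 1M, which is an assumption:

```python
import math

Z196_PEAK_IOPS = 2_000_000   # "Peak I/O" benchmark figure quoted above
FICON_CHANNELS = 104
FCS_IOPS_FLOOR = 1_000_000   # "over million IOPS", taken as a lower bound


def iops_per_channel(total_iops=Z196_PEAK_IOPS, channels=FICON_CHANNELS):
    """Average IOPS carried by each FICON in the benchmark."""
    return total_iops / channels


def fcs_needed(total_iops=Z196_PEAK_IOPS, fcs_iops=FCS_IOPS_FLOOR):
    """Number of FCS adapters needed to reach the same total at the floor rate."""
    return math.ceil(total_iops / fcs_iops)


def blade_to_z196_ratio(blade_bips=500, z196_bips=50):
    """Throughput ratio of the quoted server blade to a max configured z196."""
    return blade_bips / z196_bips


if __name__ == "__main__":
    print(f"IOPS per FICON: {iops_per_channel():,.0f}")
    print("FCS adapters to match:", fcs_needed())
    print("blade / z196:", blade_to_z196_ratio())
```

2M/104 comes out a little over 19K IOPS per FICON, which the text rounds to 20K.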

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

SMP, tightly-coupled, shared-memory multiprocessor

https://www.garlic.com/~lynn/subtopic.html#smp

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

original SQL/relational posts

https://www.garlic.com/~lynn/submain.html#systemr

getting to play disk engineer posts

https://www.garlic.com/~lynn/subtopic.html#disk

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

Fibre Channel Standard ("FCS") &/or FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

DASD, CKD, FBA, multi-track posts

https://www.garlic.com/~lynn/submain.html#dasd

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: PS2 Microchannel
Date: 07 Oct, 2025
Blog: Facebook

IBM AWD had done their own cards for PC/RT workstation (16bit AT-bus), including a token-ring 4mbit T/R card. For RS/6000 w/microchannel, they were told they couldn't do their own cards, but had to use PS2 microchannel cards (heavily performance kneecapped by the communication group). It turned out that the PC/RT 4mbit token ring card had higher card throughput than the PS2 16mbit token ring card (joke that PC/RT 4mbit T/R server would have higher throughput than RS/6000 16mbit T/R server).

Almaden research had been heavily provisioned with IBM CAT wiring, assuming 16mbit T/R use. However they found that 10mbit Ethernet LAN (running over IBM CAT wiring) had lower latency and higher aggregate throughput than 16mbit T/R LAN. Also $69 10mbit Ethernet cards had much higher throughput than $800 PS2 microchannel 16mbit T/R cards (joke communication group trying to severely hobble anything other than 3270 terminal emulation).

30yrs of PC market

https://arstechnica.com/features/2005/12/total-share/

Note above article makes reference to success of IBM PC clones emulating IBM mainframe clones. Big part of the IBM mainframe clone success was the IBM Future System effort in 1st half of 70s (going to completely replace 370 mainframes, internal politics was killing off 370 efforts and claims are that the lack of new 370s during the period is what gave the 370 clone makers their market foothold)

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

https://people.computing.clemson.edu/~mark/fs.html

http://www.jfsowa.com/computer/memo125.htm

801/risc, iliad, romp, rios, pc/rt, rs/6000, power, power/pc posts

https://www.garlic.com/~lynn/subtopic.html#801

future system posts

https://www.garlic.com/~lynn/submain.html#futuresys

PC/RT 4mbit T/R, PS2 16mbit T/R, 10mbit Ethernet

https://www.garlic.com/~lynn/2025d.html#81 Token-Ring

https://www.garlic.com/~lynn/2025d.html#8 IBM ES/9000

https://www.garlic.com/~lynn/2025d.html#2 Mainframe Networking and LANs

https://www.garlic.com/~lynn/2025c.html#114 IBM VNET/RSCS

https://www.garlic.com/~lynn/2025c.html#88 IBM SNA

https://www.garlic.com/~lynn/2025c.html#56 IBM OS/2

https://www.garlic.com/~lynn/2025c.html#53 IBM 3270 Terminals

https://www.garlic.com/~lynn/2025c.html#41 SNA & TCP/IP

https://www.garlic.com/~lynn/2025b.html#10 IBM Token-Ring

https://www.garlic.com/~lynn/2024g.html#101 IBM Token-Ring versus Ethernet

https://www.garlic.com/~lynn/2024f.html#27 The Fall Of OS/2

https://www.garlic.com/~lynn/2024e.html#64 RS/6000, PowerPC, AS/400

https://www.garlic.com/~lynn/2024e.html#52 IBM Token-Ring, Ethernet, FCS

https://www.garlic.com/~lynn/2024d.html#7 TCP/IP Protocol

https://www.garlic.com/~lynn/2024c.html#69 IBM Token-Ring

https://www.garlic.com/~lynn/2024c.html#56 Token-Ring Again

https://www.garlic.com/~lynn/2024b.html#50 IBM Token-Ring

https://www.garlic.com/~lynn/2024b.html#41 Vintage Mainframe

https://www.garlic.com/~lynn/2024b.html#22 HA/CMP

https://www.garlic.com/~lynn/2024.html#117 IBM Downfall

https://www.garlic.com/~lynn/2024.html#5 How IBM Stumbled onto RISC

https://www.garlic.com/~lynn/2023g.html#76 Another IBM Downturn

https://www.garlic.com/~lynn/2023c.html#91 TCP/IP, Internet, Ethernett, 3Tier

https://www.garlic.com/~lynn/2023c.html#49 Conflicts with IBM Communication Group

https://www.garlic.com/~lynn/2023c.html#6 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#83 IBM's Near Demise

https://www.garlic.com/~lynn/2023b.html#50 Ethernet (& CAT5)

https://www.garlic.com/~lynn/2023.html#77 IBM/PC and Microchannel

https://www.garlic.com/~lynn/2022h.html#57 Christmas 1989

https://www.garlic.com/~lynn/2022f.html#18 Strange chip: Teardown of a vintage IBM token ring controller

https://www.garlic.com/~lynn/2021g.html#42 IBM Token-Ring

https://www.garlic.com/~lynn/2021d.html#15 The Rise of the Internet

https://www.garlic.com/~lynn/2021c.html#87 IBM SNA/VTAM (& HSDT)

https://www.garlic.com/~lynn/2018f.html#109 IBM Token-Ring

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: Re: Switching On A VAX
Newsgroups: alt.folklore.computers
Date: Tue, 07 Oct 2025 16:49:38 -1000

Lars Poulsen <lars@beagle-ears.com> writes:

Some of the CTSS/7094 people went to the 5th flr to do Multics, others went to the 4th flr to the IBM science center and did virtual machines, internal network, lots of other stuff. CSC originally wanted 360/50 to do virtual memory hardware mods, but all the spare 50s were going to FAA/ATC and had to settle for 360/40 to modify and did (virtual machine) CP40/CMS. Then when 360/67 standard with virtual memory came available, CP40/CMS morphs into CP67/CMS.

Univ was getting 360/67 to replace 709/1401 and I had taken two credit hr intro to fortran/computers; at end of semester was hired to rewrite 1401 MPIO for 360/30 (temporarily replacing 1401 pending 360/67). Within a yr of taking intro class, the 360/67 arrives and I'm hired fulltime responsible for OS/360 (Univ. shuts down datacenter on weekends and I got it dedicated, however 48hrs w/o sleep made monday classes hard).

Eventually CSC comes out to install CP67 (3rd after CSC itself and MIT Lincoln Labs) and I mostly get to play with it during my 48hr weekend dedicated time. I initially work on pathlengths for running OS/360 in virtual machine. Test stream ran 322secs on real machine, initially 856secs in virtual machine (CP67 CPU 534secs), after a couple months I have reduced CP67 CPU from 534secs to 113secs. I then start rewriting the dispatcher, (dynamic adaptive resource manager/default fair share policy) scheduler, paging, adding ordered seek queuing (from FIFO) and multi-page transfer channel programs (from FIFO and optimized for transfers/revolution, getting 2301 paging drum from 70-80 4k transfers/sec to channel transfer peak of 270). Six months after univ initial install, CSC was giving one week class in LA. I arrive on Sunday afternoon and am asked to teach the class, it turns out that the people that were going to teach it had resigned the Friday before to join one of the 60s CP67 commercial online spin-offs.
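The change from FIFO to ordered seek queuing mentioned above can be illustrated with a toy model. This is only a sketch of the general idea, not the CP67 code: the cylinder numbers and starting arm position are invented, and the ordering used (sweep upward from the arm, then back down) is one common variant chosen as an assumption:

```python
def total_seek_distance(start, cylinders):
    """Total arm movement (in cylinders) when servicing requests in list order."""
    position, travelled = start, 0
    for cyl in cylinders:
        travelled += abs(cyl - position)
        position = cyl
    return travelled


def ordered_seek(start, cylinders):
    """Service requests at or above the arm in ascending order, then the rest descending."""
    upward = sorted(c for c in cylinders if c >= start)
    downward = sorted((c for c in cylinders if c < start), reverse=True)
    return upward + downward


if __name__ == "__main__":
    queue = [183, 37, 122, 14, 124, 65, 67]   # hypothetical request cylinders
    start = 53                                # hypothetical arm position
    print("FIFO   :", total_seek_distance(start, queue))
    print("ordered:", total_seek_distance(start, ordered_seek(start, queue)))
```

For this made-up queue, FIFO moves the arm 640 cylinders and the ordered queue 299, which is the kind of saving that makes ordering worthwhile under load.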

Original CP67 came with 1052 & 2741 terminal support with automagic terminal identification, used SAD CCW to switch controller's port terminal type scanner. Univ. had some number of TTY33&TTY35 terminals and I add TTY ASCII terminal support integrated with automagic terminal type. I then wanted to have a single dial-in number ("hunt group") for all terminals. It didn't quite work, IBM had taken short cut and had hard-wired line speed for each port. This kicks off univ effort to do our own clone controller, built channel interface board for Interdata/3 programmed to emulate IBM controller with the addition it could do auto line-speed/(dynamic auto-baud). It was later upgraded to Interdata/4 for channel interface with cluster of Interdata/3s for port interfaces. Interdata (and later Perkin-Elmer) was selling it as clone controller and four of us get written up responsible for (some part of) the IBM clone controller business.

https://en.wikipedia.org/wiki/Interdata

https://en.wikipedia.org/wiki/Perkin-Elmer#Computer_Systems_Division

Univ. library gets an ONR grant to do online catalog and some of the money is used for a 2321 datacell. IBM also selects it as betatest for the original CICS product and supporting CICS added to my tasks.

Then before I graduate, I'm hired fulltime into a small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into independent business unit). I think Renton datacenter largest in the world (360/65s arriving faster than they could be installed, boxes constantly staged in hallways around machine room; joke that Boeing was getting 360/65s like other companies got keypunch machines). Lots of politics between Renton director and CFO, who only had a 360/30 up at Boeing field for payroll (although they enlarge the machine room to install 360/67 for me to play with when I wasn't doing other stuff). Renton did have a (lonely) 360/75 (among all the 360/65s) that was used for classified work (black rope around the area, heavy black felt draped over console lights & 1403s with guards at perimeter when running classified). After I graduate, I join IBM CSC in cambridge (rather than staying with Boeing CFO).

One of my hobbies after joining IBM CSC was enhanced production operating systems for internal datacenters. At one time had rivalry with 5th flr over whether they had more total installations (internal, development, commercial, gov, etc) running Multics or I had more internal installations running my internal CSC/VM.

A decade later, I'm at SJR on the west coast and working with Jim Gray and Vera Watson on the original SQL/relational implementation System/R. I also had numerous internal datacenters running my internal SJR/VM system ... getting .11sec trivial interactive system response. This was at the time of several studies showing .25sec response getting improved productivity.

The 3272/3277 controller/terminal had .089sec hardware response (plus the .11sec system response resulted in .199sec response, meeting the .25sec criteria). The 3277 still had half-duplex problem: attempting to hit a key at same time as screen write, keyboard would lock and would have to stop and reset. YKT was making a FIFO box available, unplug the 3277 keyboard from the head, plug-in the FIFO box and plug keyboard into FIFO ... which avoided the half-duplex keyboard lock.

Then IBM produced 3274/3278 controller/terminal where lots of electronics were moved back into the controller, reducing cost to make the 3278, but significantly increasing coax protocol chatter ... significantly increasing hardware response to .3-.5secs depending on how much data was written to screen. Letters to the 3278 product administrator complaining, got back response that 3278 wasn't designed for interactive computing ... but data entry.
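The response-time argument above is a sum compared against a threshold. A minimal sketch using only the numbers quoted in the text (function names are illustrative); it shows that the 3278 hardware response alone already exceeds the .25sec target, leaving no system response that could meet it:

```python
TARGET_SECS = 0.25   # response threshold cited by the productivity studies


def total_response(hardware_secs, system_secs):
    """Response seen by the user: terminal hardware plus system response."""
    return round(hardware_secs + system_secs, 3)


def system_budget(hardware_secs, target=TARGET_SECS):
    """System response time left over once hardware response is subtracted."""
    return round(target - hardware_secs, 3)


if __name__ == "__main__":
    print("3277 total   :", total_response(0.089, 0.11))
    print("3277 budget  :", system_budget(0.089))
    for hw in (0.3, 0.5):
        # a negative budget means the target cannot be met at any system speed
        print(f"3278 budget at {hw}sec hardware:", system_budget(hw))
```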

clone (pc ... "plug compatible") controller built w/interdata posts

https://www.garlic.com/~lynn/submain.html#360pcm

csc posts

https://www.garlic.com/~lynn/subtopic.html#545tech

original sql/relational, "system/r" posts

https://www.garlic.com/~lynn/submain.html#systemr

some posts mentioning undergraduate work at univ & boeing

https://www.garlic.com/~lynn/2025d.html#112 Mainframe and Cloud

https://www.garlic.com/~lynn/2025d.html#99 IBM Fortran

https://www.garlic.com/~lynn/2025d.html#91 IBM VM370 And Pascal

https://www.garlic.com/~lynn/2025d.html#69 VM/CMS: Concepts and Facilities

https://www.garlic.com/~lynn/2025c.html#115 IBM VNET/RSCS

https://www.garlic.com/~lynn/2025c.html#103 IBM Innovation

https://www.garlic.com/~lynn/2025c.html#64 IBM Vintage Mainframe

https://www.garlic.com/~lynn/2025c.html#55 Univ, 360/67, OS/360, Boeing, Boyd

https://www.garlic.com/~lynn/2025.html#111 Computers, Online, And Internet Long Time Ago

https://www.garlic.com/~lynn/2025.html#91 IBM Computers

https://www.garlic.com/~lynn/2024g.html#17 60s Computers

https://www.garlic.com/~lynn/2024f.html#69 The joy of FORTH (not)

https://www.garlic.com/~lynn/2024d.html#103 IBM 360/40, 360/50, 360/65, 360/67, 360/75

https://www.garlic.com/~lynn/2024d.html#76 Some work before IBM

https://www.garlic.com/~lynn/2024b.html#97 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024b.html#63 Computers and Boyd

https://www.garlic.com/~lynn/2024b.html#60 Vintage Selectric

https://www.garlic.com/~lynn/2024b.html#44 Mainframe Career

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2024.html#73 UNIX, MULTICS, CTSS, CSC, CP67

https://www.garlic.com/~lynn/2024.html#17 IBM Embraces Virtual Memory -- Finally

https://www.garlic.com/~lynn/2023f.html#83 360 CARD IPL

https://www.garlic.com/~lynn/2023f.html#65 Vintage TSS/360

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2022f.html#44 z/VM 50th

https://www.garlic.com/~lynn/2022e.html#95 Enhanced Production Operating Systems II

https://www.garlic.com/~lynn/2022d.html#57 CMS OS/360 Simulation

https://www.garlic.com/~lynn/2022c.html#0 System Response

posts mentioning response

https://www.garlic.com/~lynn/2025d.html#102 Rapid Response

https://www.garlic.com/~lynn/2025c.html#6 Interactive Response

https://www.garlic.com/~lynn/2025.html#69 old pharts, Multics vs Unix

https://www.garlic.com/~lynn/2024d.html#13 MVS/ISPF Editor

https://www.garlic.com/~lynn/2024b.html#31 HONE, Performance Predictor, and Configurators

https://www.garlic.com/~lynn/2023d.html#27 IBM 3278

https://www.garlic.com/~lynn/2022c.html#68 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2021j.html#74 IBM 3278

https://www.garlic.com/~lynn/2021i.html#69 IBM MYTE

https://www.garlic.com/~lynn/2017d.html#25 ARM Cortex A53 64 bit

https://www.garlic.com/~lynn/2016e.html#51 How the internet was invented

https://www.garlic.com/~lynn/2016d.html#104 Is it a lost cause?

https://www.garlic.com/~lynn/2016c.html#8 You count as an old-timer if (was Re: Origin of the phrase "XYZZY")

https://www.garlic.com/~lynn/2015g.html#58 [Poll] Computing favorities

https://www.garlic.com/~lynn/2014g.html#26 Fifty Years of BASIC, the Programming Language That Made Computers Personal

https://www.garlic.com/~lynn/2013g.html#21 The cloud is killing traditional hardware and software

https://www.garlic.com/~lynn/2013g.html#14 Tech Time Warp of the Week: The 50-Pound Portable PC, 1977

https://www.garlic.com/~lynn/2012p.html#1 3270 response & channel throughput

https://www.garlic.com/~lynn/2012d.html#19 Writing article on telework/telecommuting

https://www.garlic.com/~lynn/2012.html#15 Who originated the phrase "user-friendly"?

https://www.garlic.com/~lynn/2012.html#13 From Who originated the phrase "user-friendly"?

https://www.garlic.com/~lynn/2011p.html#61 Migration off mainframe

https://www.garlic.com/~lynn/2011g.html#43 My first mainframe experience

https://www.garlic.com/~lynn/2010b.html#31 Happy DEC-10 Day

https://www.garlic.com/~lynn/2009q.html#72 Now is time for banks to replace core system according to Accenture

https://www.garlic.com/~lynn/2009q.html#53 The 50th Anniversary of the Legendary IBM 1401

https://www.garlic.com/~lynn/2009e.html#19 Architectural Diversity

https://www.garlic.com/~lynn/2006s.html#42 Ranking of non-IBM mainframe builders?

https://www.garlic.com/~lynn/2005r.html#15 Intel strikes back with a parallel x86 design

https://www.garlic.com/~lynn/2005r.html#12 Intel strikes back with a parallel x86 design

https://www.garlic.com/~lynn/2003k.html#22 What is timesharing, anyway?

https://www.garlic.com/~lynn/2001m.html#19 3270 protocol

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: Mainframe skills
Date: 08 Oct, 2025
Blog: Facebook

re: bldg15 3033, during FS (that was going to completely replace 370), internal politics was killing off 370 efforts and the lack of new 370s during the period is credited with giving the 370 clone makers (including Amdahl) their market foothold. When FS implodes there was mad rush to get stuff back into the 370 product pipelines, including kicking off quick&dirty 3033&3081 in parallel. For the 303x channel director, they took 158 engine with just the integrated channel microcode (and no 370 microcode). A 3031 was two 158 engines, one with just the channel microcode and 2nd with just the 370 microcode. A 3032 was 168 redone to use 303x channel director. A 3033 started out 168 logic remapped to 20% faster chips.

One of the bldg15 early engineering 3033 problems was that channel director boxes would hang and somebody would have to manually re-impl the hung channel director box. Discovered that doing a variation on the missing interrupt handler, where CLRCH was done quickly for all six channel addresses of the hung box ... would force the box to re-impl.

posts mentioning getting to play disk engineer in bldgs 14&15:

https://www.garlic.com/~lynn/subtopic.html#disk

posts mentioning 303x CLRCH force re-impl:

https://www.garlic.com/~lynn/2025d.html#58 IBM DASD, CKD, FBA

https://www.garlic.com/~lynn/2024b.html#48 Vintage 3033

https://www.garlic.com/~lynn/2024b.html#11 3033

https://www.garlic.com/~lynn/2023f.html#27 Ferranti Atlas

https://www.garlic.com/~lynn/2023e.html#91 CP/67, VM/370, VM/SP, VM/XA

https://www.garlic.com/~lynn/2023d.html#19 IBM 3880 Disk Controller

https://www.garlic.com/~lynn/2021i.html#85 IBM 3033 channel I/O testing

https://www.garlic.com/~lynn/2021b.html#2 Will The Cloud Take Down The Mainframe?

https://www.garlic.com/~lynn/2014m.html#74 SD?

https://www.garlic.com/~lynn/2011o.html#23 3270 archaeology

https://www.garlic.com/~lynn/2001l.html#32 mainframe question

https://www.garlic.com/~lynn/2000c.html#69 Does the word "mainframe" still have a meaning?

https://www.garlic.com/~lynn/97.html#20 Why Mainframes?

--

virtualization experience starting Jan1968, online at home since Mar1970

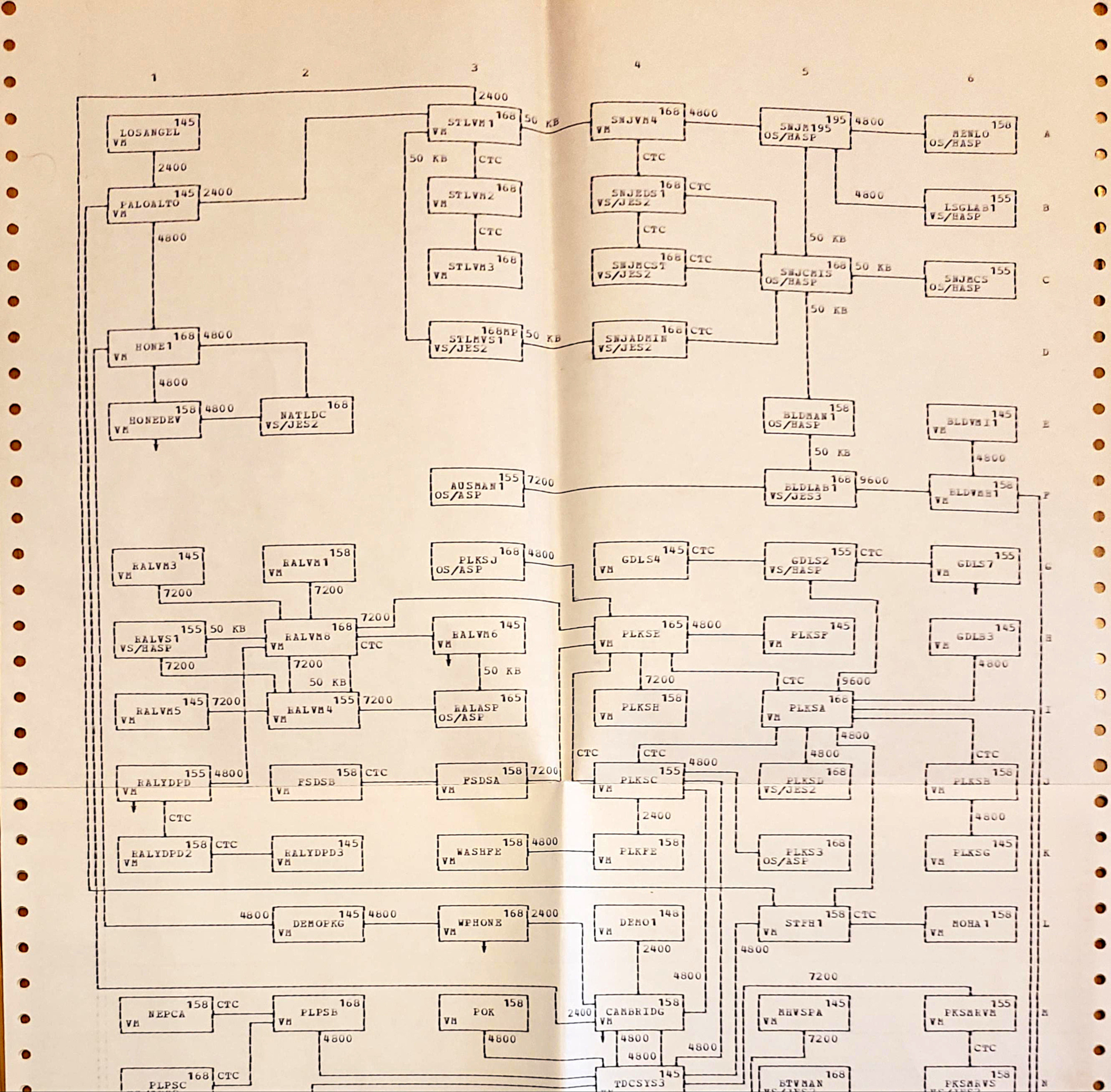

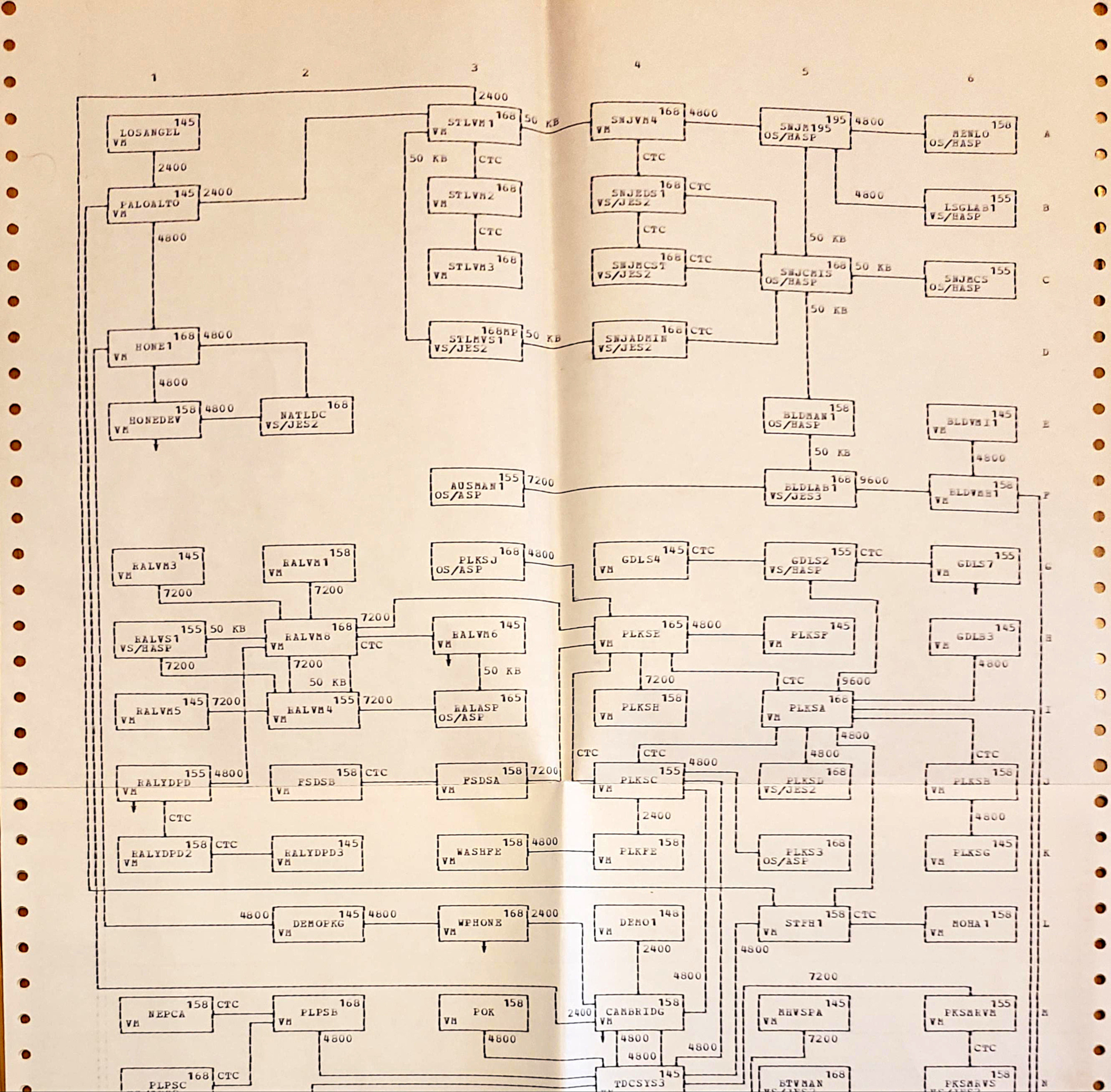

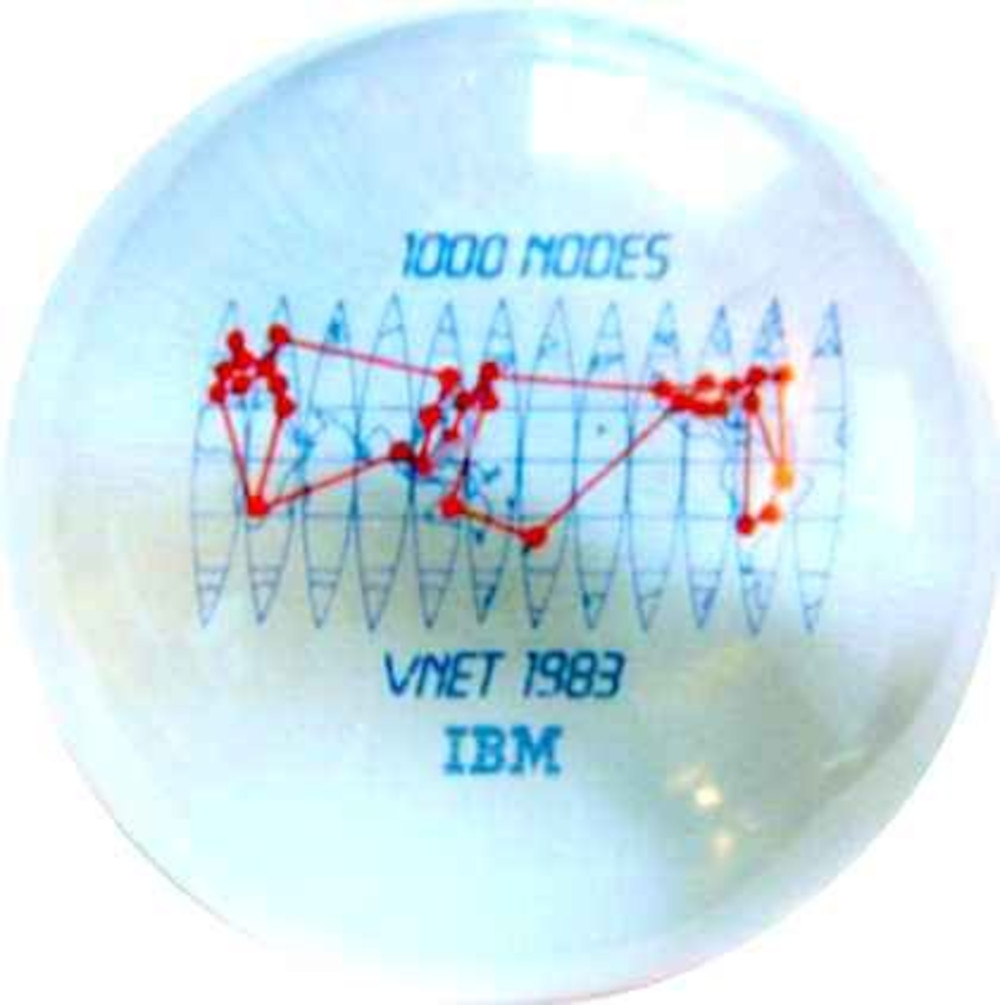

From: Lynn Wheeler <lynn@garlic.com>
Subject: Kuwait Email
Date: 09 Oct, 2025
Blog: Facebook

From long ago and far away; one of my hobbies after joining IBM was enhanced production operating systems for internal datacenters and the online sales&marketing HONE systems were (one of the 1st and) long time customer; ... in the mid-70s, all the US HONE datacenters were consolidated in Palo Alto (trivia: when FACEBOOK 1st moved into silicon valley, it was into a new bldg built next door to the former consolidated US HONE datacenter), then HONE systems started cropping up all over the world.

Co-worker at science center was responsible for the wide-area network

https://en.wikipedia.org/wiki/Edson_Hendricks

reference by one of the CSC 1969 GML inventors

https://web.archive.org/web/20230402212558/http://www.sgmlsource.com/history/jasis.htm

Actually, the law office application was the original motivation for the project, something I was allowed to do part-time because of my knowledge of the user requirements. My real job was to encourage the staffs of the various scientific centers to make use of the CP-67-based Wide Area Network that was centered in Cambridge.

... snip ...

which morphs into the corporate internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s ... about the time it was forced to convert to SNA/VTAM); technology also used for the corporate sponsored univ. BITNET

https://en.wikipedia.org/wiki/BITNET

also EARN in Europe

https://en.wikipedia.org/wiki/European_Academic_Research_Network#EARN

I also would visit various datacenters around silicon valley, including TYMSHARE:

https://en.wikipedia.org/wiki/Tymshare

which started providing their CMS-based online computer conferencing system in Aug1976, "free" to the mainframe user group SHARE as vmshare ... archives here

http://vm.marist.edu/~vmshare

I cut a deal with Tymshare to get monthly tape dump of all VMSHARE files for putting up on internal network and systems (including HONE). The following is email from IBM sales/marketing branch office employee in Kuwait:

Date: 14 February 1983, 09:44:58 CET

From: Fouad xxxxxx

To: Lynn Wheeler

Subject: VMSHARE registration

Hello , I dont know if you are the right contact , but I really dont know whom to ask.

My customer wants to get into TYMNET and VMSHARE.

They have a teletype and are able to have a dial-up line to USA.

How can they get a connection to TYMNET and a registration to VMSHARE.

The country is KUWAIT, which is near to SAUDI ARABIA

Can you help me

thanks

... snip ...

TYMSHARE's TYMNET:

https://en.wikipedia.org/wiki/Tymnet

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

GML, SGML, HTML posts

https://www.garlic.com/~lynn/submain.html#sgml

internal network posts

https://www.garlic.com/~lynn/subnetwork.html#internalnet

BITNET (& EARN) posts

https://www.garlic.com/~lynn/subnetwork.html#bitnet

some recent posts mentioning VMSHARE:

https://www.garlic.com/~lynn/2025b.html#14 IBM Token-Ring

https://www.garlic.com/~lynn/2024d.html#74 Some Email History

https://www.garlic.com/~lynn/2024d.html#47 E-commerce

https://www.garlic.com/~lynn/2024b.html#90 IBM User Group Share

https://www.garlic.com/~lynn/2024b.html#87 Dialed in - a history of BBSing

https://www.garlic.com/~lynn/2024b.html#34 Internet

https://www.garlic.com/~lynn/2024.html#109 IBM User Group SHARE

https://www.garlic.com/~lynn/2022g.html#16 Early Internet

https://www.garlic.com/~lynn/2022f.html#50 z/VM 50th - part 3

https://www.garlic.com/~lynn/2022f.html#37 What's something from the early days of the Internet which younger generations may not know about?

https://www.garlic.com/~lynn/2022c.html#28 IBM Cambridge Science Center

https://www.garlic.com/~lynn/2022c.html#8 Cloud Timesharing

https://www.garlic.com/~lynn/2022b.html#126 Google Cloud

https://www.garlic.com/~lynn/2022b.html#107 15 Examples of How Different Life Was Before The Internet

https://www.garlic.com/~lynn/2022b.html#28 Early Online

https://www.garlic.com/~lynn/2021j.html#71 book review: Broad Band: The Untold Story of the Women Who Made the Internet

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com>
Subject: VM370 Teddy Bear
Date: 09 Oct, 2025
Blog: Facebook

the SHARE MVS mascot was the "turkey" (because MVS/TSO was so bad) as opposed to the VM370 mascot, the teddy bear.

trivia2: customers not migrating to MVS as planned (I was at the initial SHARE when it was played).

http://www.mxg.com/thebuttonman/boney.asp

trivia3: after FS imploded:

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

https://people.computing.clemson.edu/~mark/fs.html

http://www.jfsowa.com/computer/memo125.htm

the head of POK managed to convince corporate to kill the VM370 product, shutdown the development group and transfer all the people to POK for MVS/XA (Endicott eventually managed to save the VM370 product mission for the mid-range, but had to recreate a development group from scratch). They weren't planning on telling the people until the very last minute to minimize the number that might escape. It managed to leak early and several managed to escape (it was during infancy of DEC VAX/VMS ... before it even first shipped and joke was that head of POK was a major contributor to VMS). There was then a hunt for the leak, fortunately for me, nobody gave up the leaker.

Melinda's history

https://www.leeandmelindavarian.com/Melinda#VMHist

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Mainframe skills Date: 10 Oct, 2025 Blog: Facebook

re:

... I had coined disaster survivability and "geographic survivability" (as counter to disaster/recovery) when out marketing HA/CMP.

trivia: as undergraduate, when 360/67 arrived (replacing 709/1401) within a year of taking 2 credit hr intro to fortran/computers, I was hired fulltime responsible for os/360 (360/67 as 360/65, tss/360 never came to production). then before I graduate, I was hired fulltime into small group in the Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into an independent business unit). I think Renton is the largest datacenter (in the world?) ... 360/65s arriving faster than they could be installed, boxes constantly staged in the hallways around the machine room (joke that Boeing was getting 360/65s like other companies got keypunches).

Disaster plan was to replicate Renton up at the new 747 plant at Paine Field (in Everett) as countermeasure to Mt. Rainier heating up and the resulting mud slide taking out Renton.

HA/CMP posts

https://www.garlic.com/~lynn/subtopic.html#hacmp

continuous availability, disaster survivability, geographic

survivability posts

https://www.garlic.com/~lynn/submain.html#available

posts mentioning assurance

https://www.garlic.com/~lynn/subintegrity.html#assurance

recent posts mentioning Boeing disaster plan to replicate Renton up at

Paine Field:

https://www.garlic.com/~lynn/2025.html#91 IBM Computers

https://www.garlic.com/~lynn/2024c.html#15 360&370 Unix (and other history)

https://www.garlic.com/~lynn/2024c.html#9 Boeing and the Dark Age of American Manufacturing

https://www.garlic.com/~lynn/2024b.html#111 IBM 360 Announce 7Apr1964

https://www.garlic.com/~lynn/2024.html#87 IBM 360

https://www.garlic.com/~lynn/2023g.html#39 Vintage Mainframe

https://www.garlic.com/~lynn/2023g.html#31 Mainframe Datacenter

https://www.garlic.com/~lynn/2023f.html#105 360/67 Virtual Memory

https://www.garlic.com/~lynn/2023f.html#35 Vintage IBM Mainframes & Minicomputers

https://www.garlic.com/~lynn/2023f.html#32 IBM Mainframe Lore

https://www.garlic.com/~lynn/2023f.html#19 Typing & Computer Literacy

https://www.garlic.com/~lynn/2023e.html#64 Computing Career

https://www.garlic.com/~lynn/2023e.html#54 VM370/CMS Shared Segments

https://www.garlic.com/~lynn/2023e.html#34 IBM 360/67

https://www.garlic.com/~lynn/2023e.html#11 Tymshare

https://www.garlic.com/~lynn/2023d.html#106 DASD, Channel and I/O long winded trivia

https://www.garlic.com/~lynn/2023d.html#101 Operating System/360

https://www.garlic.com/~lynn/2023d.html#88 545tech sq, 3rd, 4th, & 5th flrs

https://www.garlic.com/~lynn/2023d.html#83 Typing, Keyboards, Computers

https://www.garlic.com/~lynn/2023d.html#66 IBM System/360, 1964

https://www.garlic.com/~lynn/2023d.html#32 IBM 370/195

https://www.garlic.com/~lynn/2023d.html#20 IBM 360/195

https://www.garlic.com/~lynn/2023c.html#73 Dataprocessing 48hr shift

https://www.garlic.com/~lynn/2023.html#66 Boeing to deliver last 747, the plane that democratized flying

https://www.garlic.com/~lynn/2022h.html#4 IBM CAD

https://www.garlic.com/~lynn/2022g.html#63 IBM DPD

https://www.garlic.com/~lynn/2022g.html#26 Why Things Fail

https://www.garlic.com/~lynn/2022.html#30 CP67 and BPS Loader

https://www.garlic.com/~lynn/2022.html#22 IBM IBU (Independent Business Unit)

https://www.garlic.com/~lynn/2021k.html#55 System Availability

https://www.garlic.com/~lynn/2021f.html#16 IBM Zcloud - is it just outsourcing ?

https://www.garlic.com/~lynn/2021e.html#54 Learning PDP-11 in 2021

https://www.garlic.com/~lynn/2021d.html#34 April 7, 1964: IBM Bets Big on System/360

https://www.garlic.com/~lynn/2021.html#78 Interactive Computing

https://www.garlic.com/~lynn/2021.html#48 IBM Quota

https://www.garlic.com/~lynn/2020.html#45 Watch AI-controlled virtual fighters take on an Air Force pilot on August 18th

https://www.garlic.com/~lynn/2020.html#10 "This Plane Was Designed By Clowns, Who Are Supervised By Monkeys"

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Somers Date: 11 Oct, 2025 Blog: Facebook

Late 80s, a senior disk engineer gets talk scheduled at annual, internal, world-wide communication group conference, supposedly on 3174 performance. However, the opening was that the communication group was going to be responsible for the demise of the disk division. The disk division was seeing drop in disk sales with data fleeing mainframe datacenters to more distributed computing friendly platforms. The disk division had come up with a number of solutions, but they were constantly being vetoed by the communication group (with their corporate ownership of everything that crossed the datacenter walls) trying to protect their dumb terminal paradigm. Senior disk software executive partial countermeasure was investing in distributed computing startups that would use IBM disks (he would periodically ask us to drop in on his investments to see if we could offer any assistance).

Early 90s, head of SAA (in late 70s, worked with him on ECPS microcode assist for 138/148) had top flr, corner office in Somers and would periodically drop in to talk about some of his people. We were out doing customer executive presentations on Ethernet, TCP/IP, 3-tier networking, high-speed routers, etc and taking barbs in the back from SNA&SAA members. We would periodically drop in on other Somers' residents asking shouldn't they be doing something about the way the company was heading.

The communication group stranglehold on mainframe datacenters wasn't

just disks and IBM has one of the largest losses in the history of US

corporations and was being reorganized into the 13 "baby blues" in

preparation for breaking up the company ("baby blues" take-off on the

"baby bell" breakup decade earlier).

https://web.archive.org/web/20101120231857/http://www.time.com/time/magazine/article/0,9171,977353,00.html

https://content.time.com/time/subscriber/article/0,33009,977353-1,00.html

We had already left IBM but get a call from the bowels of Armonk

asking if we could help with the breakup. Before we get started, the

board brings in the former AMEX president as CEO to try and save the

company, who (somewhat) reverses the breakup and uses some of the same

techniques used at RJR (gone 404, but lives on at wayback)

https://web.archive.org/web/20181019074906/http://www.ibmemployee.com/RetirementHeist.shtml

20yrs earlier, Learson tried (and failed) to block bureaucrats,

careerists, and MBAs from destroying Watson culture/legacy, pg160-163,

30yrs of management briefings 1958-1988

https://bitsavers.org/pdf/ibm/generalInfo/IBM_Thirty_Years_of_Mangement_Briefings_1958-1988.pdf

90s, we were doing work for large AMEX mainframe datacenters spin-off and former AMEX CEO.

3-tier, ethernet, tcp/ip, etc posts

https://www.garlic.com/~lynn/subnetwork.html#3tier

HSDT posts

https://www.garlic.com/~lynn/subnetwork.html#hsdt

demise of disk division and communication group stranglehold posts

https://www.garlic.com/~lynn/subnetwork.html#emulation

IBM downturn/downfall/breakup posts

https://www.garlic.com/~lynn/submisc.html#ibmdownfall

pension posts

https://www.garlic.com/~lynn/submisc.html#pension

recent posts mentioning IBM Somers:

https://www.garlic.com/~lynn/2025d.html#98 IBM Supercomputer

https://www.garlic.com/~lynn/2025d.html#86 Cray Supercomputer

https://www.garlic.com/~lynn/2025d.html#73 Boeing, IBM, CATIA

https://www.garlic.com/~lynn/2025d.html#68 VM/CMS: Concepts and Facilities

https://www.garlic.com/~lynn/2025d.html#51 Computing Clusters

https://www.garlic.com/~lynn/2025d.html#24 IBM Yorktown Research

https://www.garlic.com/~lynn/2025d.html#7 IBM ES/9000

https://www.garlic.com/~lynn/2025d.html#1 Chip Design (LSM & EVE)

https://www.garlic.com/~lynn/2025c.html#116 Internet

https://www.garlic.com/~lynn/2025c.html#110 IBM OS/360

https://www.garlic.com/~lynn/2025c.html#104 IBM Innovation

https://www.garlic.com/~lynn/2025c.html#98 5-CPU 370/125

https://www.garlic.com/~lynn/2025c.html#93 FCS, ESCON, FICON

https://www.garlic.com/~lynn/2025c.html#69 Tandem Computers

https://www.garlic.com/~lynn/2025c.html#50 IBM RS/6000

https://www.garlic.com/~lynn/2025c.html#40 IBM & DEC DBMS

https://www.garlic.com/~lynn/2025c.html#1 Interactive Response

https://www.garlic.com/~lynn/2025b.html#118 IBM 168 And Other History

https://www.garlic.com/~lynn/2025b.html#108 System Throughput and Availability

https://www.garlic.com/~lynn/2025b.html#100 IBM Future System, 801/RISC, S/38, HA/CMP

https://www.garlic.com/~lynn/2025b.html#92 IBM AdStar

https://www.garlic.com/~lynn/2025b.html#78 IBM Downturn

https://www.garlic.com/~lynn/2025b.html#41 AIM, Apple, IBM, Motorola

https://www.garlic.com/~lynn/2025b.html#22 IBM San Jose and Santa Teresa Lab

https://www.garlic.com/~lynn/2025b.html#8 The joy of FORTRAN

https://www.garlic.com/~lynn/2025b.html#2 Why VAX Was the Ultimate CISC and Not RISC

https://www.garlic.com/~lynn/2025.html#119 Consumer and Commercial Computers

https://www.garlic.com/~lynn/2025.html#96 IBM Token-Ring

https://www.garlic.com/~lynn/2025.html#86 Big Iron Throughput

https://www.garlic.com/~lynn/2025.html#74 old pharts, Multics vs Unix vs mainframes

https://www.garlic.com/~lynn/2025.html#37 IBM Mainframe

https://www.garlic.com/~lynn/2025.html#23 IBM NY Buildings

https://www.garlic.com/~lynn/2025.html#21 Virtual Machine History

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Interactive Response Date: 11 Oct, 2025 Blog: Facebook

I took a 2 credit hr intro to fortran/computers and at end of semester was hired to rewrite 1401 MPIO in assembler for 360/30 ... univ was getting 360/67 for tss/360 to replace 709/1401 and got a 360/30 temporarily pending availability of a 360/67 (part of getting some 360 experience). Univ. shutdown datacenter on weekends and I would have it dedicated (although 48hrs w/o sleep made monday classes hard), I was given a pile of hardware & software manuals and got to design and implement my own monitor, device drivers, interrupt handlers, storage management, error recovery, etc ... and within a few weeks had a 2000 card program.

Then within a yr of taking intro class, 360/67 arrives and i'm hired fulltime responsible for os/360 (tss/360 never came to production so ran as 360/65).

CSC comes out to univ for CP67/CMS (precursor to VM370/CMS) install (3rd after CSC itself and MIT Lincoln Labs) and I mostly get to play with it during my 48hr weekend dedicated time. I initially work on pathlengths for running OS/360 in virtual machine. Test stream ran 322secs on real machine, initially 856secs in virtual machine (CP67 CPU 534secs), after a couple months I have reduced CP67 CPU from 534secs to 113secs. I then start rewriting the dispatcher/scheduler (dynamic adaptive resource manager/default fair share policy), paging, adding ordered seek queuing (from FIFO) and multi-page transfer channel programs (from FIFO and optimized for transfers/revolution, getting 2301 paging drum from 70-80 4k transfers/sec to channel transfer peak of 270). Six months after univ initial install, CSC was giving one week class in LA. I arrive on Sunday afternoon and am asked to teach the class, it turns out that the people that were going to teach it had resigned the Friday before to join one of the 60s CP67 commercial online spin-offs.
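The benefit of ordered seek queuing over FIFO can be illustrated with a small sketch. This is not the CP67 code: the cylinder numbers, the starting arm position, and the function names are made up for illustration, and the ordering shown is a simple elevator-style sweep (up from the current arm position, then back down).

```python
def arm_travel(start, cylinders):
    """Total cylinders the arm crosses when servicing requests in the given order."""
    travel, pos = 0, start
    for cyl in cylinders:
        travel += abs(cyl - pos)
        pos = cyl
    return travel


def ordered_seek(start, cylinders):
    """Elevator-style order: sweep upward from the arm position, then back down."""
    upward = sorted(c for c in cylinders if c >= start)
    downward = sorted((c for c in cylinders if c < start), reverse=True)
    return upward + downward


# Hypothetical queue of pending requests (cylinder numbers) and arm position.
queue = [183, 37, 122, 14, 124, 65, 67]
start = 53

fifo_travel = arm_travel(start, queue)
ordered_travel = arm_travel(start, ordered_seek(start, queue))
print(f"FIFO travel:    {fifo_travel} cylinders")
print(f"ordered travel: {ordered_travel} cylinders")
```

With this made-up queue the ordered sweep covers less than half the arm travel of FIFO; the real gain depends on queue depth and how requests are spread across the pack.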

Before I graduate, I'm hired fulltime into a small group in the Boeing CFO office to help form Boeing Computer Services (consolidate all dataprocessing into an independent business unit ... including offering services to non-Boeing entities). When I graduate, I leave to join CSC (instead of staying with CFO).

One of my hobbies after joining IBM was enhanced production operating systems for internal datacenters ... and in the morph of CP67->VM370 a lot of stuff was dropped and/or simplified and every few years I would be asked to redo stuff that had been dropped and/or rewritten (... in part dynamic adaptive default policy calculated dispatching order based on resource utilization over the last several minutes compared to target resource utilization established by their priority and number of users).
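The default policy described above (recent utilization compared against a target set by priority and number of users) can be sketched roughly as follows. This is only an illustration of the idea, not the actual dispatcher: the `User` fields, the `weight` notion of priority, and the ratio used for ordering are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    weight: float      # hypothetical priority weight; larger means a bigger target share
    recent_cpu: float  # CPU seconds consumed over the recent measurement window


def dispatch_order(users):
    """Order users so those furthest below their target share run first."""
    total_weight = sum(u.weight for u in users)
    total_cpu = sum(u.recent_cpu for u in users) or 1.0  # avoid dividing by zero

    def usage_vs_target(u):
        target = u.weight / total_weight   # target share from priority and user count
        actual = u.recent_cpu / total_cpu  # observed share over the window
        return actual / target             # below 1 is under-served, above 1 over-served

    return sorted(users, key=usage_vs_target)


# Made-up workload: two equal-priority users and one with double weight.
users = [
    User("batch", weight=1.0, recent_cpu=60.0),
    User("edit", weight=1.0, recent_cpu=5.0),
    User("oper", weight=2.0, recent_cpu=35.0),
]
for u in dispatch_order(users):
    print(u.name)
```

With equal weights the target share reduces to 1/N, which is where the number of users enters; a light interactive user who has consumed little recently sorts ahead of a heavy consumer.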

Late 80s, the OS2 team was told to contact VM370 group (because VM370 dispatching was much better than OS2) ... it was passed between the various groups before being forwarded to me.

Example I didn't have much control over was late 70s, IBM San Jose Research got a MVS 168 and a VM370 158 replacing MVT 195. My internal VM370s were getting 90th percentile .11sec interactive system response (with 3272/3277 hardware response of .086sec resulted in .196sec seen by users ... better than the .25sec requirement mentioned in various studies). All the SJR 3830 controllers and 3330 strings were dual-channel connection to both systems but strong rules that no MVS 3330s can be mounted on VM370 strings. One morning operators mounted a MVS 3330 on a VM370 string and within minutes they were getting irate calls from all over the bldg complaining about response. The issue was MVS has a OS/360 heritage of multi-track search for PDS directory searches ... a MVS multi-cylinder PDS directory search can have multiple full multi-track cylinder searches that lockout the (vm370) controller for the duration (60revs/sec, 19tracks/search, .317secs lockout per multi-track search I/O). Demand to move the pack was answered with they would get around to it on 2nd shift.
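The figures quoted above can be checked with a few lines of arithmetic. This is just a worked calculation from the numbers in the text; the variable names are mine.

```python
REVS_PER_SEC = 60     # 3330 rotation rate quoted above
TRACKS_PER_CYL = 19   # tracks covered by one full-cylinder multi-track search

# One revolution per track searched, so a full-cylinder search holds the controller for:
lockout_secs = TRACKS_PER_CYL / REVS_PER_SEC
print(f"controller lockout per search: {lockout_secs:.3f} sec")

system_response = 0.11     # 90th percentile system response
hardware_response = 0.086  # 3272/3277 hardware response
seen_by_user = system_response + hardware_response
print(f"response seen by user: {seen_by_user:.3f} sec")

# A single search holding the controller is already longer than the .25sec requirement.
print(lockout_secs > 0.25)
```

So one multi-track search by itself costs more than the entire response normally seen by a user, before any queueing behind it.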

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

dynamic adaptive resource dispatch/scheduling posts

https://www.garlic.com/~lynn/subtopic.html#fairshare

commercial virtual machine service posts

https://www.garlic.com/~lynn/submain.html#timeshare

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

DASD, CKD, FBA, multi-track search posts

https://www.garlic.com/~lynn/submain.html#dasd

some posts mentioning .11sec system response and .086sec

3272/3277 hardware response for .196sec

https://www.garlic.com/~lynn/2025c.html#6 Interactive Response

https://www.garlic.com/~lynn/2025.html#69 old pharts, Multics vs Unix

https://www.garlic.com/~lynn/2024d.html#13 MVS/ISPF Editor

https://www.garlic.com/~lynn/2022c.html#68 IBM Mainframe market was Re: Approximate reciprocals

https://www.garlic.com/~lynn/2021j.html#74 IBM 3278

https://www.garlic.com/~lynn/2021i.html#69 IBM MYTE

https://www.garlic.com/~lynn/2016e.html#51 How the internet was invented

https://www.garlic.com/~lynn/2016d.html#104 Is it a lost cause?

https://www.garlic.com/~lynn/2016c.html#8 You count as an old-timer if (was Re: Origin of the phrase "XYZZY")

https://www.garlic.com/~lynn/2015g.html#58 [Poll] Computing favorities

https://www.garlic.com/~lynn/2014g.html#26 Fifty Years of BASIC, the Programming Language That Made Computers Personal

https://www.garlic.com/~lynn/2013g.html#14 Tech Time Warp of the Week: The 50-Pound Portable PC, 1977

https://www.garlic.com/~lynn/2012p.html#1 3270 response & channel throughput

https://www.garlic.com/~lynn/2012.html#15 Who originated the phrase "user-friendly"?

https://www.garlic.com/~lynn/2012.html#13 From Who originated the phrase "user-friendly"?

https://www.garlic.com/~lynn/2011p.html#61 Migration off mainframe

https://www.garlic.com/~lynn/2011g.html#43 My first mainframe experience

https://www.garlic.com/~lynn/2010b.html#31 Happy DEC-10 Day

https://www.garlic.com/~lynn/2009q.html#72 Now is time for banks to replace core system according to Accenture

https://www.garlic.com/~lynn/2009q.html#53 The 50th Anniversary of the Legendary IBM 1401

https://www.garlic.com/~lynn/2009e.html#19 Architectural Diversity

https://www.garlic.com/~lynn/2006s.html#42 Ranking of non-IBM mainframe builders?

https://www.garlic.com/~lynn/2005r.html#15 Intel strikes back with a parallel x86 design

https://www.garlic.com/~lynn/2005r.html#12 Intel strikes back with a parallel x86 design

https://www.garlic.com/~lynn/2001m.html#19 3270 protocol

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM Interactive Response Date: 12 Oct, 2025 Blog: Facebook

re: https://www.garlic.com/~lynn/2025e.html#9 IBM Interactive Response

... further detail ... during the .317sec multi-track search, the vm-side could build up several queued I/O requests for other vm 3330s on the busy controller (SM+BUSY) ... when it ends, the vm-side might get in one of the queued requests ... before the MVS hits it with another multi-track search ... and so vm-side might see an increasing accumulation of queued I/O requests waiting for nearly a second (or more).

.. trivia: also after transfer to San Jose, I got to wander around IBM (& non-IBM) datacenters in silicon valley, including disk bldg14 (engineering) and bldg15 (product test) across the street. They were running pre-scheduled, 7x24, stand-alone mainframe testing and mentioned that they had recently tried MVS, but it had 15min MTBF (requiring manual re-ipl) in that environment. I offered to rewrite I/O supervisor to make it bullet proof and never fail allowing any amount of on-demand, concurrent testing ... greatly improving productivity. I write an internal IBM paper on the I/O integrity work and mention the MVS 15min MTBF ... bringing down the wrath of the MVS organization on my head. Later, a few months before 3880/3380 FCS, FE (field engineering) had test of 57 simulated errors that were likely to occur and MVS was failing in all 57 cases (requiring manual re-ipl) and in 2/3rds of the cases no indication of what caused the failure.

posts mentioning getting to play disk engineer in bldgs 14&15

https://www.garlic.com/~lynn/subtopic.html#disk

posts mentioning MVS failing in test of 57 simulated errors

https://www.garlic.com/~lynn/2025d.html#58 IBM DASD, CKD, FBA

https://www.garlic.com/~lynn/2025d.html#45 Some VM370 History

https://www.garlic.com/~lynn/2025d.html#35 IBM Internal Apps, Retain, HONE, CCDN, ITPS, Network

https://www.garlic.com/~lynn/2025d.html#34 IBM Internal Apps, Retain, HONE, CCDN, ITPS, Network

https://www.garlic.com/~lynn/2025d.html#19 370 Virtual Memory

https://www.garlic.com/~lynn/2025c.html#92 FCS, ESCON, FICON

https://www.garlic.com/~lynn/2025c.html#2 Interactive Response

https://www.garlic.com/~lynn/2025b.html#44 IBM 70s & 80s

https://www.garlic.com/~lynn/2025b.html#25 IBM 3880, 3380, Data-streaming

https://www.garlic.com/~lynn/2024e.html#35 Disk Capacity and Channel Performance

https://www.garlic.com/~lynn/2024d.html#9 Benchmarking and Testing

https://www.garlic.com/~lynn/2024c.html#75 Mainframe and Blade Servers

https://www.garlic.com/~lynn/2024.html#114 BAL

https://www.garlic.com/~lynn/2024.html#88 IBM 360

https://www.garlic.com/~lynn/2023g.html#15 Vintage IBM 4300

https://www.garlic.com/~lynn/2023f.html#115 IBM RAS

https://www.garlic.com/~lynn/2023f.html#27 Ferranti Atlas

https://www.garlic.com/~lynn/2023d.html#111 3380 Capacity compared to 1TB micro-SD

https://www.garlic.com/~lynn/2023d.html#97 The IBM mainframe: How it runs and why it survives

https://www.garlic.com/~lynn/2023d.html#72 Some Virtual Machine History

https://www.garlic.com/~lynn/2023c.html#25 IBM Downfall

https://www.garlic.com/~lynn/2023b.html#80 IBM 158-3 (& 4341)

https://www.garlic.com/~lynn/2023.html#74 IBM 4341

https://www.garlic.com/~lynn/2023.html#71 IBM 4341

https://www.garlic.com/~lynn/2022e.html#10 VM/370 Going Away

https://www.garlic.com/~lynn/2022d.html#97 MVS support

https://www.garlic.com/~lynn/2022b.html#77 Channel I/O

https://www.garlic.com/~lynn/2022b.html#70 IBM 3380 disks

https://www.garlic.com/~lynn/2021.html#6 3880 & 3380

https://www.garlic.com/~lynn/2019b.html#53 S/360

https://www.garlic.com/~lynn/2018d.html#86 3380 failures

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Interesting timing issues on 1970s-vintage IBM mainframes Newsgroups: alt.folklore.computers Date: Sun, 12 Oct 2025 08:24:39 -1000

James Dow Allen <user4353@newsgrouper.org.invalid> writes:

modulo MVT (VS2/SVS & VS2/MVS) documentation (heavy-weight multiprocessor overhead) SMP, only had 2-CPU throughput 1.2-1.5 times single processor throughput.

early last decade, I was asked to track down the decision to add

virtual memory to all 370s (pieces, originally posted here and in

ibm-main NGs) ... adding virtual memory to all 370s

https://www.garlic.com/~lynn/2011d.html#73

Basically MVT storage management was so bad that region sizes had to be specified four times larger than used ... as result typical 1mbyte 370/165 only ran four concurrent regions, insufficient to keep system busy and justified. Going to single 16mbyte virtual address space (i.e. VS2/SVS ... sort of like running MVT in a CP67 16mbyte virtual machine) allowed concurrent regions to be increased by factor of four (modulo capped at 15 because 4bit storage protect keys) with little or no paging.
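The region arithmetic above works out as follows. This is a small worked calculation; the only thing added beyond the text is the assumption that one of the sixteen key values (key 0) is kept for the system itself, which is what leaves 15 for regions.

```python
REAL_REGIONS = 4     # concurrent regions on a typical 1mbyte 370/165 under MVT
INCREASE_FACTOR = 4  # increase quoted for the 16mbyte VS2/SVS address space
KEY_BITS = 4         # storage protect key width

key_values = 2 ** KEY_BITS    # 16 distinct key values
usable_keys = key_values - 1  # assumption: key 0 is kept for the system itself

wanted = REAL_REGIONS * INCREASE_FACTOR  # regions a 4x increase would give
regions = min(wanted, usable_keys)       # capped by the keys available
print(wanted, usable_keys, regions)
```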

It was deemed that it wasn't worth the effort to add virtual memory to 370/195 and all new work was killed.

Then there was the FS effort, going to completely replace 370 (internal politics was killing off 370 efforts, claims that lack of new 370s during FS gave the clone 370 makers their market foothold).

http://www.jfsowa.com/computer/memo125.htm

https://en.wikipedia.org/wiki/IBM_Future_Systems_project

https://people.computing.clemson.edu/~mark/fs.html

Note 370/165 avg 2.1 machine cycles per 370 instruction. for 168 they significantly increase main memory size & speed and microcode was optimized resulting in avg of 1.6 machine cycles per instruction. Then for 168-3, they doubled the size of processor cache, increasing rated MIPS from 2.5MIPS to 3.0MIPS.

With the implosion of FS there was mad rush to get stuff back into the 370 product pipelines, kicking off the quick&dirty 3033 and 3081 efforts. The 3033 started off remapping 168 logic to 20% faster chips and then optimized the microcode getting it down to avg of one machine cycle per 370 instruction.
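As a rough back-of-envelope check on the cycles-per-instruction figures above, the relative gains can be estimated as below. This is only a sketch: it assumes "20% faster chips" means a 1.2x clock rate and ignores memory and cache effects, so the result is an estimate and not a rated MIPS figure.

```python
cpi_165, cpi_168, cpi_3033 = 2.1, 1.6, 1.0  # avg machine cycles per 370 instruction

# Microcode gain alone from 165 to 168 (main memory improvements not modeled).
gain_165_to_168 = cpi_165 / cpi_168

# 3033: assumed 1.2x clock rate from the faster chips, plus fewer cycles per instruction.
gain_168_to_3033 = 1.2 * cpi_168 / cpi_3033

mips_168 = 2.5  # rated MIPS quoted above for the 168 before the 168-3 cache doubling
estimate_3033 = mips_168 * gain_168_to_3033

print(round(gain_165_to_168, 2))
print(round(gain_168_to_3033, 2))
print(round(estimate_3033, 1))
```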

I was also talked into helping with a 16-CPU SMP/multiprocessor effort and we con the 3033 processor engineers into helping (a lot more interesting than remapping 168 logic). Everybody thought it was great until somebody reminds the head of POK that POK's favorite son operating system ("VS2/MVS") 2CPU multiprocessor overhead only getting 1.2-1.5 times throughput of non-multiprocessor (and overhead increasing significantly as #CPUs increased ... POK doesn't ship a 16-CPU machine until after the turn of century). Then head of POK invites some of us to never visit POK again and directs the 3033 processor engineers, heads down and no distractions.

trivia: when I graduate and join IBM Cambridge Science Center, one of my hobbies was enhanced production operating systems and one of my first (and long time) customers was the online sales&marketing HONE systems. With the decision to add virtual memory to all 370s, there was also decision to form development group to do VM370. In the morph of CP67->VM370, lots of stuff was simplified and/or dropped (including multiprocessor support). 1974, I start adding stuff back into a VM370R2-base for my internal CSC/VM (including kernel-reorg for SMP, but not the actual SMP support). Then for VM370R3-base CSC/VM, I add multiprocessor support back in, originally for HONE so they could upgrade their 168s to 2-CPU systems (with some sleight-of-hand and cache affinity, was getting twice throughput of single processor).

other trivia: US HONE had consolidated all their datacenters in silicon valley, when FACEBOOK first moved into silicon valley, it was into new bldg built next door to the former consolidated US HONE datacenter.

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

SMP, tightly-coupled, shared-memory multiprocessor

https://www.garlic.com/~lynn/subtopic.html#smp

Future System posts

https://www.garlic.com/~lynn/submain.html#futuresys

HONE posts

https://www.garlic.com/~lynn/subtopic.html#hone

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: Re: Interesting timing issues on 1970s-vintage IBM mainframes Newsgroups: alt.folklore.computers Date: Sun, 12 Oct 2025 11:21:54 -1000

James Dow Allen <user4353@newsgrouper.org.invalid> writes:

when FS imploded, they start on 3033 (remap 168 logic to 20% faster chips). They take a 158 engine with just the integrated channel microcode for the 303x channel director. A 3031 was two 158 engines, one for the channel director (integrated channel microcode) and 2nd with just the 370 microcode. The 3032 was 168-3 reworked to use the 303x channel director for external channels.

I had transferred out to the west coast and got to wander around IBM (and non-IBM) datacenters in silicon valley, including disk bldg14 (engineering) and bldg15 (product test) across the street. They were running pre-scheduled, 7x24, stand-alone mainframe testing and mentioned that they had recently tried MVS, but it had 15min MTBF (requiring manual re-IPL) in that environment. I offer to rewrite I/O supervisor making it bullet-proof and never fail, allowing any amount of on-demand, concurrent testing ... greatly improving productivity. Then bldg15 gets the 1st engineering 3033 outside POK processor engineering. Testing was only taking percent or two of CPU, so we scrounge up a 3830 controller and string of 3330 drives and setup our own private online service.

One of the things found was the engineering channel directors (158 engines) still had habit of periodic hanging, requiring manual re-impl. Then discover that if you hit all six channels of a channel director quickly with CLRCH, it would force automagic re-impl ... so modify missing interrupt handler to deal with hung channel director.

getting to play disk engineer in bldgs14&15 posts

https://www.garlic.com/~lynn/subtopic.html#disk

some recent posts mentioning MVS 15min MTBF

https://www.garlic.com/~lynn/2025e.html#10 IBM Interactive Response

https://www.garlic.com/~lynn/2025e.html#1 Mainframe skills

https://www.garlic.com/~lynn/2025d.html#107 Rapid Response

https://www.garlic.com/~lynn/2025d.html#98 IBM Supercomputer

https://www.garlic.com/~lynn/2025d.html#87 IBM 370/158 (& 4341) Channels

https://www.garlic.com/~lynn/2025d.html#78 Virtual Memory

https://www.garlic.com/~lynn/2025d.html#71 OS/360 Console Output

https://www.garlic.com/~lynn/2025d.html#68 VM/CMS: Concepts and Facilities

https://www.garlic.com/~lynn/2025d.html#58 IBM DASD, CKD, FBA

https://www.garlic.com/~lynn/2025d.html#45 Some VM370 History

https://www.garlic.com/~lynn/2025d.html#35 IBM Internal Apps, Retain, HONE, CCDN, ITPS, Network

https://www.garlic.com/~lynn/2025d.html#34 IBM Internal Apps, Retain, HONE, CCDN, ITPS, Network

https://www.garlic.com/~lynn/2025d.html#19 370 Virtual Memory

https://www.garlic.com/~lynn/2025d.html#11 IBM 4341

https://www.garlic.com/~lynn/2025d.html#1 Chip Design (LSM & EVE)

https://www.garlic.com/~lynn/2025c.html#107 IBM San Jose Disk

https://www.garlic.com/~lynn/2025c.html#101 More 4341

https://www.garlic.com/~lynn/2025c.html#92 FCS, ESCON, FICON

https://www.garlic.com/~lynn/2025c.html#78 IBM 4341

https://www.garlic.com/~lynn/2025c.html#62 IBM Future System And Follow-on Mainframes

https://www.garlic.com/~lynn/2025c.html#53 IBM 3270 Terminals

https://www.garlic.com/~lynn/2025c.html#47 IBM 3270 Terminals

https://www.garlic.com/~lynn/2025c.html#42 SNA & TCP/IP

https://www.garlic.com/~lynn/2025c.html#29 360 Card Boot

https://www.garlic.com/~lynn/2025c.html#12 IBM 4341

https://www.garlic.com/~lynn/2025c.html#2 Interactive Response

https://www.garlic.com/~lynn/2025b.html#112 System Throughput and Availability II

https://www.garlic.com/~lynn/2025b.html#108 System Throughput and Availability

https://www.garlic.com/~lynn/2025b.html#91 IBM AdStar

https://www.garlic.com/~lynn/2025b.html#82 IBM 3081

https://www.garlic.com/~lynn/2025b.html#47 IBM Datacenters

https://www.garlic.com/~lynn/2025b.html#44 IBM 70s & 80s

https://www.garlic.com/~lynn/2025b.html#25 IBM 3880, 3380, Data-streaming

https://www.garlic.com/~lynn/2025b.html#20 IBM San Jose and Santa Teresa Lab

https://www.garlic.com/~lynn/2025b.html#12 IBM 3880, 3380, Data-streaming

https://www.garlic.com/~lynn/2025.html#122 Clone 370 System Makers

https://www.garlic.com/~lynn/2025.html#113 2301 Fixed-Head Drum

https://www.garlic.com/~lynn/2025.html#105 Giant Steps for IBM?

https://www.garlic.com/~lynn/2025.html#86 Big Iron Throughput

https://www.garlic.com/~lynn/2025.html#77 IBM Mainframe Terminals

https://www.garlic.com/~lynn/2025.html#71 VM370/CMS, VMFPLC

https://www.garlic.com/~lynn/2025.html#59 Multics vs Unix

https://www.garlic.com/~lynn/2025.html#29 IBM 3090

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM CP67 Multi-level Update Date: 12 Oct, 2025 Blog: Facebook

Some of the MIT CTSS/7094 people went to the 5th flr to do MULTICS. Others went to the IBM Science Center on the 4th flr and did virtual machines (1st modified 360/40 w/virtual memory and did CP40/CMS, morphs into CP67/CMS when 360/67 standard with virtual memory becomes available), science center wide-area network (that grows into corporate internal network, larger than arpanet/internet from science-center beginning until sometime mid/late 80s; technology also used for the corporate sponsored univ BITNET), invented GML 1969 (precursor to SGML and HTML), lots of performance tools, etc.

I took a 2 credit hr intro to fortran/computers and at end of semester was hired to rewrite 1401 MPIO in assembler for 360/30 ... univ was getting 360/67 for tss/360 to replace 709/1401 and got a 360/30 temporarily pending availability of a 360/67 (part of getting some 360 experience). Univ. shutdown datacenter on weekends and I would have it dedicated (although 48hrs w/o sleep made monday classes hard), I was given a pile of hardware & software manuals and got to design and implement my own monitor, device drivers, interrupt handlers, storage management, error recovery, etc ... and within a few weeks had a 2000 card program. 360/67 arrived within a year of taking intro class and I was hired fulltime responsible for OS/360 (tss/360 never came to production, so ran as 360/65). Student Fortran jobs ran under second on 709. Initially MFTR9.5 ran well over minute. I install HASP cutting time in half. I then start redoing MFTR11 STAGE2 SYSGEN to carefully place datasets and PDS members to optimize arm seek and multi-track search cutting another 2/3rds to 12.9secs. Student Fortran never got better than 709 until I install UofWaterloo WATFOR (on 360/65 ran at 20,000 cards/min or 333 cards/sec, student Fortran jobs typically 30-60 cards).
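Working backward from the 12.9sec figure gives a feel for the student job times at each stage. This is only arithmetic on the numbers quoted above, taking "in half" and "2/3rds" literally; the intermediate times are derived, not measured.

```python
final_secs = 12.9  # after the STAGE2 SYSGEN placement work

after_hasp = final_secs * 3   # undo "cutting another 2/3rds"
initial_secs = after_hasp * 2 # undo HASP "cutting time in half"
print(round(after_hasp, 1), round(initial_secs, 1))

# WATFOR rate on the 360/65
cards_per_sec = 20_000 / 60
print(round(cards_per_sec))

# Card-processing time for typical 30-60 card student jobs at that rate.
for cards in (30, 60):
    print(cards, round(cards / cards_per_sec, 2))
```

The derived starting point of roughly 77secs is consistent with "well over minute", and at the WATFOR rate a typical student job is a fraction of a second of card processing.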

CSC came out to univ for CP67/CMS (precursor to VM370/CMS) install (3rd after CSC itself and MIT Lincoln Labs) and I mostly get to play with it during my 48hr weekend dedicated time. I initially work on pathlengths for running OS/360 in virtual machine. Test stream ran 322secs on real machine, initially 856secs in virtual machine (CP67 CPU 534secs), after a couple months I have reduced that CP67 CPU from 534secs to 113secs. I then start rewriting the dispatcher, (dynamic adaptive resource manager/default fair share policy) scheduler, paging, adding ordered seek queuing (from FIFO) and multi-page transfer channel programs (from FIFO and optimized for transfers/revolution, getting 2301 paging drum from 70-80 4k transfers/sec to channel transfer peak of 270). Six months after univ initial install, CSC was giving one week class in LA. I arrive on Sunday afternoon and am asked to teach the class, it turns out that the people that were going to teach it had resigned the Friday before to join one of the 60s CP67 commercial online spin-offs.
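The pathlength and paging drum numbers above reduce to a few ratios. This is a worked calculation only; the elapsed time after the rewrite assumes the time spent in the OS/360 guest itself stayed at 322secs, which the text does not state.

```python
real_secs = 322     # test stream on the real machine
virtual_secs = 856  # same stream in a virtual machine, before the pathlength work

cp67_before = virtual_secs - real_secs  # CP67 CPU overhead
cp67_after = 113

virtual_after = real_secs + cp67_after  # assumes guest time itself is unchanged
reduction = 1 - cp67_after / cp67_before
print(cp67_before, virtual_after, f"{reduction:.0%}")

# 2301 paging drum: 4k transfers/sec before and after the channel program rewrite
before_low, before_high, after_peak = 70, 80, 270
print(round(after_peak / before_high, 1), round(after_peak / before_low, 1))
```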

Initially CP67 source was delivered on OS/360, source modified, assembled, txt decks collected, marked with stripe & name across top, all fit in card tray, BPS loader placed in front and IPLed ... would write memory image to disk for system IPL. A couple months later, new release now resident on CMS ... modifications in CMS "UPDATE" files, exec that applied update and generated temp file that was assembled. A system generation exec, "punched" txt decks spooled to virtual reader that was then IPLed.

After graduating and joining CSC, one of my hobbies was enhanced production operating systems ("CP67L") for internal datacenters (including online sales&marketing support HONE systems, which was one of the first, and long time, customers). With the decision to add virtual memory to all 370s, there was also decision to do CP67->VM370 and some of the CSC people went to the 3rd flr, taking over the IBM Boston Programming Center for the VM370 group. CSC developed set of CP67 updates that provided (simulated) VM370 virtual machines ("CP67H"). Then there were a set of CP67 updates that ran on 370 virtual memory architecture ("CP67I"). At CSC, because there were profs, staff, and students from Boston area institutions using the CSC systems, CSC would run "CP67H" in a 360/67 virtual machine (to minimize unannounced 370 virtual memory leaking).

CP67L ran on real 360/67

... CP67H ran in a CP67L 360/67 virtual machine

...... CP67I ran in a CP67H 370 virtual machine

CP67I was in general use a year before the 1st engineering 370 (with virtual memory) was operational ... in fact, IPL'ing CP67I on the real machine was a test case.

As part of CP67L, CP67H, CP67I effort, the CMS Update execs were improved to support multi-level update operation (later multi-level update support was added to various editors). Three engineers come out from San Jose and add 2305 & 3330 support to CP67I, creating CP67SJ which was widely used on internal machines, even after VM370 was available.
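The idea of multi-level update can be sketched roughly as follows: the base source is never edited, each update level is a list of insert/delete/replace operations keyed by sequence number, and the levels are applied in order to produce a temporary file for assembly. This is a simplified illustration only. The tuple layout, the function names and the sample lines are hypothetical and are not the original exec implementation or the CMS UPDATE control-statement syntax.

```python
def apply_update(source, ops):
    """Apply one update level to source, a list of (seqno, text) pairs.

    ops is a list of tuples (hypothetical layout):
      ("I", after_seq, new_lines)            insert after line after_seq
      ("D", first_seq, last_seq)             delete an inclusive range
      ("R", first_seq, last_seq, new_lines)  replace an inclusive range
    new_lines are (seqno, text) pairs, so later levels can refer to them.
    """
    result = list(source)  # work on a copy; the base source stays untouched
    for op in ops:
        kind = op[0]
        if kind == "I":
            after, new = op[1], op[2]
            hits = [i for i, (seq, _text) in enumerate(result) if seq == after]
            if not hits:
                raise ValueError(f"sequence number {after} not found")
            result[hits[0] + 1:hits[0] + 1] = new
        elif kind in ("D", "R"):
            first, last = op[1], op[2]
            new = op[3] if kind == "R" else []
            hits = [i for i, (seq, _text) in enumerate(result)
                    if first <= seq <= last]
            if not hits:
                raise ValueError(f"range {first}-{last} not found")
            result[hits[0]:hits[-1] + 1] = new
        else:
            raise ValueError(f"unknown operation {kind!r}")
    return result


def apply_levels(source, levels):
    """Apply update levels in order; each level sees the previous level's output."""
    for ops in levels:
        source = apply_update(source, ops)
    return source


# Hypothetical base file and two update levels.
base = [(100, "line A"), (200, "line B"), (300, "line C"), (400, "line D")]
level1 = [("I", 200, [(250, "added by level 1")])]
level2 = [("R", 250, 250, [(250, "revised by level 2")]), ("D", 300, 300)]

temp_file = apply_levels(base, [level1, level2])
for seq, text in temp_file:
    print(seq, text)
```

Because level 2 is applied to the output of level 1, it can revise a line that only exists because of the earlier level, which is the property that lets separate sets of changes be stacked on one common base.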

Mid-80s, Melinda

https://www.leeandmelindavarian.com/Melinda#VMHist

asked if I could send her the original exec multi-level update implementation. I had a large archive dating back to the 60s on triple redundant tapes in the IBM Almaden Research tape library. It was fortunate since within a few weeks, Almaden had an operational problem mounting random tapes as scratch and I lost nearly a dozen tapes, including the triple redundant tape archive.

In the morph of CP67->VM370, a lot of stuff was simplified and/or dropped (including multiprocessor support). 1974, I start adding a lot of stuff back into VM370R2-base for my internal CSC/VM (including kernel re-org for SMP, but not the actual SMP support). Then with VM370R3-base, I add multiprocessor support into CSC/VM, initially for HONE so they could upgrade all their 168 systems to 2-CPU (getting twice throughput of 1-CPU systems). HONE trivia: All the US HONE datacenters had been consolidated in Palo Alto ... when FACEBOOK 1st moved into silicon valley, it was into a new bldg built next door to the former consolidated US HONE datacenter.

CSC posts

https://www.garlic.com/~lynn/subtopic.html#545tech

SMP, tightly-coupled, shared-memory multiprocessor posts

https://www.garlic.com/~lynn/subtopic.html#smp

CP67L, CSC/VM, SJR/VM posts

https://www.garlic.com/~lynn/submisc.html#cscvm

some CP67L, CP67H, CP67I, CP67SJ, CSC/VM posts

https://www.garlic.com/~lynn/2025d.html#91 IBM VM370 And Pascal

https://www.garlic.com/~lynn/2025.html#122 Clone 370 System Makers

https://www.garlic.com/~lynn/2025.html#121 Clone 370 System Makers

https://www.garlic.com/~lynn/2025.html#120 Microcode and Virtual Machine

https://www.garlic.com/~lynn/2024g.html#108 IBM 370 Virtual Storage

https://www.garlic.com/~lynn/2024g.html#73 Early Email

https://www.garlic.com/~lynn/2024f.html#112 IBM Email and PROFS

https://www.garlic.com/~lynn/2024f.html#80 CP67 And Source Update

https://www.garlic.com/~lynn/2024f.html#29 IBM 370 Virtual memory

https://www.garlic.com/~lynn/2024d.html#68 ARPANET & IBM Internal Network

https://www.garlic.com/~lynn/2023e.html#70 The IBM System/360 Revolution

https://www.garlic.com/~lynn/2023d.html#98 IBM DASD, Virtual Memory

https://www.garlic.com/~lynn/2022h.html#22 370 virtual memory

https://www.garlic.com/~lynn/2022.html#55 Precursor to current virtual machines and containers

https://www.garlic.com/~lynn/2022.html#12 Programming Skills

https://www.garlic.com/~lynn/2021g.html#34 IBM Fan-fold cards

https://www.garlic.com/~lynn/2014d.html#57 Difference between MVS and z / OS systems

https://www.garlic.com/~lynn/2006.html#38 Is VIO mandatory?

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM DASD, CKD and FBA Date: 13 Oct, 2025 Blog: Facebook

When I offered the MVS group fully tested FBA support ... they said I needed $26M incremental new revenue (some $200M in sales) to cover cost of education and documentation. However, since IBM, at the time, was selling every disk it made, FBA support would just move some CKD sales to FBA ... also I wasn't allowed to use life-time savings as part of the business case.

All disk technology was actually moving to FBA ... can be seen in 3380 formulas for records/track calculations, having to round record sizes up to multiple of fixed cell size. No real CKD disks have been made for decades, all being simulated on industry standard fixed-block devices. A big part of FBA was error correcting technology performance ... part of recent FBA technology moving from 512byte blocks to 4k blocks.
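A sketch of the kind of records/track calculation referred to above, for 3380 records without keys. The constants (32-byte cells, 1499 cells per track, 15 cells of per-record overhead, 12 bytes added to the data length before rounding) are recalled from published 3380 reference material and should be treated as assumptions; the function name is hypothetical.

```python
CELL_BYTES = 32          # fixed cell size (assumed)
CELLS_PER_TRACK = 1499   # usable cells per track (assumed)
OVERHEAD_CELLS = 15      # per-record overhead, records without keys (assumed)
DATA_PAD_BYTES = 12      # added to data length before rounding (assumed)


def records_per_track(data_len):
    """Equal-length, keyless records that fit on one track."""
    # Round the padded record size up to a whole number of fixed-size cells.
    data_cells = -(-(data_len + DATA_PAD_BYTES) // CELL_BYTES)
    return CELLS_PER_TRACK // (OVERHEAD_CELLS + data_cells)


for size in (80, 4096, 23476, 47476):
    print(size, records_per_track(size))
```

Under these assumptions a 4096-byte record occupies 129 data cells plus 15 overhead cells, so ten fit on a track, and 47476 bytes is the largest record that still fits once. The rounding up to whole cells is the fixed-block behaviour showing through the CKD interface.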

trivia: 80s, large corporations were ordering hundreds of vm/4341s at a time for deploying out in departmental areas (sort of the leading edge of the coming distributed departmental computing tsunami). Inside IBM, conference rooms were becoming scarce, being converted into departmental vm/4341 rooms. MVS looked at the big explosion in sales and wanted a piece of the market. However the only new non-datacenter disks were FBA/3370 ... eventually get CKD emulation as 3375. However didn't do MVS much good, distributed departmental dataprocessing was looking at scores of systems per support person ... while MVS was scores of support people per system.

Note: ECKD was originally channel commands for Calypso, 3880 speed matching buffer allowing 3mbyte/sec 3380 to be used with existing 1.5mbyte/sec channels ... it went through significant teething problems ... lots and lots of sev1.

Other trivia: I had transferred from CSC out to SJR on west coast and got to wander around IBM (and non-IBM) datacenters in silicon valley, including disk bldg14 (engineering) and bldg15 (product test) across the street. They were running pre-scheduled, 7x24, stand-alone mainframe testing and mentioned that they had recently tried MVS, but it had 15min MTBF (requiring manual re-ipl) in that environment. I offered to rewrite I/O supervisor to make it bullet proof and never fail allowing any amount of on-demand, concurrent testing ... greatly improving productivity. I write an internal IBM paper on the I/O integrity work and mention the MVS 15min MTBF ... bringing down the wrath of the MVS organization on my head. Later, a few months before 3880/3380 FCS, FE (field engineering) had test of 57 simulated errors that were likely to occur and MVS was failing in all 57 cases (requiring manual re-ipl) and in 2/3rds of the cases no indication of what caused the failure.

DASD, CKD, FBA, and multi-track search posts

https://www.garlic.com/~lynn/submain.html#dasd

getting to play disk engineer in bldgs 14&15 posts

https://www.garlic.com/~lynn/subtopic.html#disk

a few posts mentioning calypso, eckd, mtbf

https://www.garlic.com/~lynn/2025b.html#12 IBM 3880, 3380, Data-streaming

https://www.garlic.com/~lynn/2024g.html#3 IBM CKD DASD

https://www.garlic.com/~lynn/2023c.html#103 IBM Term "DASD"

https://www.garlic.com/~lynn/2015f.html#89 Formal definition of Speed Matching Buffer

a few posts mentioning FBA fixed-block 512 4k

https://www.garlic.com/~lynn/2021i.html#29 OoO S/360 descendants

https://www.garlic.com/~lynn/2019c.html#70 2301, 2303, 2305-1, 2305-2, paging, etc

https://www.garlic.com/~lynn/2017f.html#39 MVS vs HASP vs JES (was 2821)

https://www.garlic.com/~lynn/2014g.html#84 real vs. emulated CKD

https://www.garlic.com/~lynn/2012j.html#12 Can anybody give me a clear idea about Cloud Computing in MAINFRAME ?

https://www.garlic.com/~lynn/2010d.html#9 PDS vs. PDSE

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: IBM DASD, CKD and FBA Date: 13 Oct, 2025 Blog: Facebook

re:

semi-related, 1988 IBM branch asks if I could help LLNL (national lab) standardize some serial stuff they were working with ... which quickly becomes fibre-channel standard ("FCS", including some stuff I had done in 1980), initially 1gbit/sec transfer, full-duplex, 200mbyte/sec. Then POK finally gets their serial stuff released (when it is already obsolete), initially 10mbyte/sec, later improved to 17mbyte/sec.

Some POK engineers then become involved with FCS and define a heavy-weight protocol that radically reduces throughput, eventually released as FICON.

Latest public benchmark I've seen is 2010 z196 "Peak I/O", getting 2M IOPS using 104 FICON (20K IOPS/FICON). About the same time an FCS is announced for E5-2600 server blades claiming over a million IOPS (two such FCS having higher throughput than 104 FICON). Also IBM pubs recommend that SAPs (system assist processors that actually do I/O) be kept to 70% CPU (or 1.5M IOPS).
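The arithmetic behind the FICON/FCS comparison, worked through using only the figures quoted above. The FCS number is taken as a lower bound, since the claim was "over" a million; the variable names are just for illustration.

```python
Z196_PEAK_IOPS = 2_000_000   # 2010 z196 "Peak I/O" benchmark
FICON_CHANNELS = 104         # FICON used in that benchmark
FCS_IOPS = 1_000_000         # single FCS claim, treated as a lower bound

# Average throughput per FICON in the benchmark (quoted as roughly 20K).
per_ficon = Z196_PEAK_IOPS / FICON_CHANNELS

# Two FCS versus the whole 104-FICON configuration.
two_fcs = 2 * FCS_IOPS

# How many benchmark FICON it takes to match one FCS.
ficon_per_fcs = FCS_IOPS * FICON_CHANNELS / Z196_PEAK_IOPS

print(f"{per_ficon:.0f} IOPS per FICON")
print(f"two FCS: at least {two_fcs} IOPS vs {Z196_PEAK_IOPS} for {FICON_CHANNELS} FICON")
print(f"one FCS is worth at least {ficon_per_fcs:.0f} FICON")
```

So the per-FICON average is a little over 19K IOPS, and a single FCS at the claimed rate matches at least 52 of the benchmark's FICON.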

FCS &/or FICON posts

https://www.garlic.com/~lynn/submisc.html#ficon

--

virtualization experience starting Jan1968, online at home since Mar1970

From: Lynn Wheeler <lynn@garlic.com> Subject: CTSS, Multics, Unix, CSC Date: 13 Oct, 2025 Blog: Facebook

Some of the MIT CTSS/7094 people went to the 5th flr for Multics.

Note that original UNIX had been done at AT&T ... somewhat after they became disenchanted with MIT Multics ... UNIX is supposedly a take-off on the name MULTICS and is a simplification.

https://en.wikipedia.org/wiki/Multics#Unix

Others from MIT CTSS/7094 went to the IBM Cambridge Scientific Center on the 4th flr and did virtual machines, science center wide-area network that morphs into the internal network (larger than arpanet/internet from just about the beginning until sometime mid/late 80s, about the time it was forced to convert to SNA/VTAM), invented GML in 1969 (after a decade morphs into ISO standard SGML, after another decade morphs into HTML at CERN), bunch of other stuff.

I was at univ that had gotten a 360/67 for tss/360. The 360/67 (replacing 709/1401) arrives within a year of my taking a 2 credit hr intro to fortran/computers and I'm hired fulltime responsible for OS/360 (tss/360 didn't come to production, so ran as 360/65). Later CSC comes out to install CP67 (3rd install after CSC itself and MIT Lincoln Labs). Nearly two decades later I'm dealing with some UNIX source and notice some similarity between UNIX code and that early CP67 (before I started rewriting a lot of the code) ... possibly indicating some common heritage back to CTSS. Before I graduate, I'm hired fulltime into small group in Boeing CFO office to help with the formation of Boeing Computer Services (consolidate all dataprocessing into independent business unit, including offering services to non-Boeing entities). Then when I graduate, I join CSC, instead of staying with the CFO.